Learning Human Body Motions from Skeleton-Based Observations for Robot-Assisted Therapy

Paper and Code

Jul 25, 2022

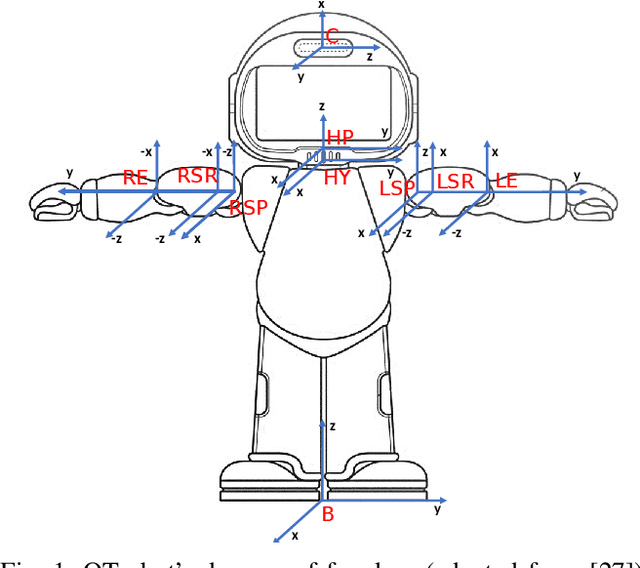

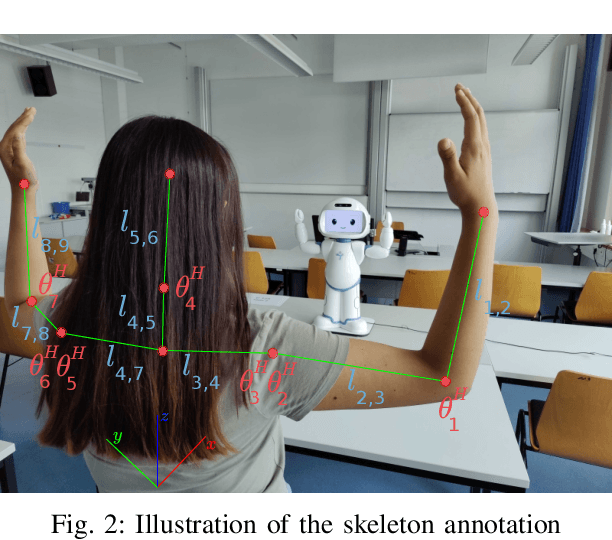

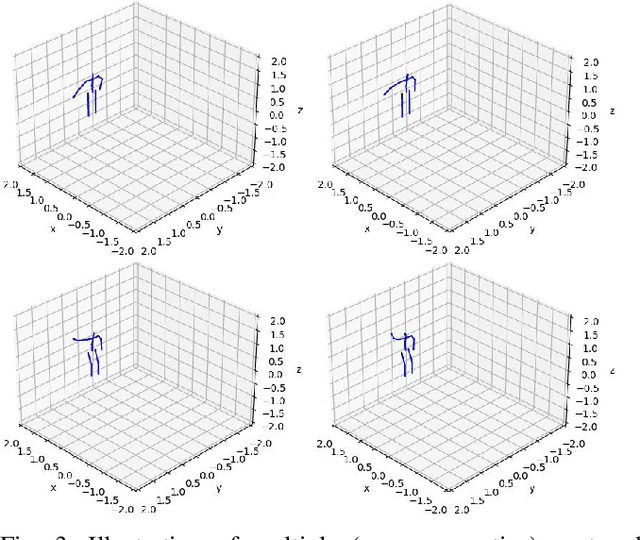

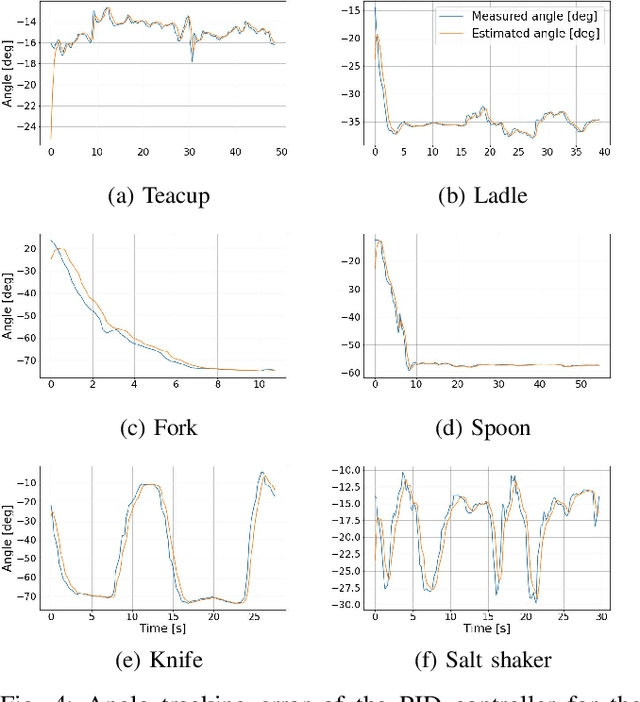

Robots applied in therapeutic scenarios, for instance in the therapy of individuals with Autism Spectrum Disorder, are sometimes used for imitation learning activities in which a person needs to repeat motions performed by the robot. To simplify the task of incorporating new types of motions that a robot can perform, it is desirable that the robot can learn motions by observing demonstrations from a human, such as a therapist. In this paper, we investigate an approach for acquiring motions from skeleton observations of a human, which are collected by a robot-centric RGB-D camera. Given a sequence of observations of various joints, the joint positions are mapped to match the configuration of a robot before being executed by a PID position controller. We evaluate the method, in particular the reproduction error, by performing a study with QTrobot in which the robot acquired different upper-body dance moves from multiple participants. The results indicate the method's overall feasibility, but also show that the reproduction quality is affected by noise in the skeleton observations.
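The pipeline summarized above (skeleton joints, then a mapping to the robot's configuration, then PID position control) can be illustrated with a minimal sketch. This is not the paper's implementation: the abstract does not specify the mapping, so the sketch assumes a simple one in which a joint angle is computed from three 3D skeleton points and clamped to joint limits. The function names, limits, gains, and the integrator joint model are all hypothetical and chosen only for illustration.

```python
import math


def joint_angle(a, b, c):
    """Angle at point b (radians) between the segments b->a and b->c."""
    v1 = [a[i] - b[i] for i in range(3)]
    v2 = [c[i] - b[i] for i in range(3)]
    dot = sum(x * y for x, y in zip(v1, v2))
    n1 = math.sqrt(sum(x * x for x in v1))
    n2 = math.sqrt(sum(x * x for x in v2))
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    return math.acos(max(-1.0, min(1.0, dot / (n1 * n2))))


def clamp(value, lower, upper):
    """Restrict a target angle to the (assumed) robot joint limits."""
    return max(lower, min(upper, value))


class PID:
    """Textbook discrete PID controller; gains are illustrative assumptions."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def step(self, target, measured, dt):
        error = target - measured
        self.integral += error * dt
        # No derivative term on the first call, to avoid an initial spike.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def track(targets, pid, dt=0.02, steps_per_target=50):
    """Follow a sequence of target angles with a simulated joint.

    Assumption: the joint is a pure integrator, i.e. the controller
    output is treated as a velocity command.
    """
    position = 0.0
    reached = []
    for target in targets:
        for _ in range(steps_per_target):
            position += pid.step(target, position, dt) * dt
        reached.append(position)
    return reached


if __name__ == "__main__":
    # Hypothetical shoulder, elbow, and wrist positions in metres (camera frame).
    shoulder, elbow, wrist = (0.0, 0.0, 0.0), (0.3, 0.0, 0.0), (0.3, 0.25, 0.0)
    target = clamp(joint_angle(shoulder, elbow, wrist), 0.0, 2.0)
    print(track([target], PID(kp=5.0, ki=0.0, kd=0.0)))
```

In a sketch like this, noisy skeleton observations pass straight through to the controller targets, which is consistent with the abstract's remark that observation noise affects reproduction quality. Smoothing the observed sequence before mapping would be one possible mitigation, though the abstract does not state whether the authors do so.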