Negar Mehr

TACO: Temporal Consensus Optimization for Continual Neural Mapping

Feb 05, 2026Abstract:Neural implicit mapping has emerged as a powerful paradigm for robotic navigation and scene understanding. However, real-world robotic deployment requires continual adaptation to changing environments under strict memory and computation constraints, which existing mapping systems fail to support. Most prior methods rely on replaying historical observations to preserve consistency and assume static scenes. As a result, they cannot adapt to continual learning in dynamic robotic settings. To address these challenges, we propose TACO (TemporAl Consensus Optimization), a replay-free framework for continual neural mapping. We reformulate mapping as a temporal consensus optimization problem, where we treat past model snapshots as temporal neighbors. Intuitively, our approach resembles a model consulting its own past knowledge. We update the current map by enforcing weighted consensus with historical representations. Our method allows reliable past geometry to constrain optimization while permitting unreliable or outdated regions to be revised in response to new observations. TACO achieves a balance between memory efficiency and adaptability without storing or replaying previous data. Through extensive simulated and real-world experiments, we show that TACO robustly adapts to scene changes, and consistently outperforms other continual learning baselines.

MIMIC-D: Multi-modal Imitation for MultI-agent Coordination with Decentralized Diffusion Policies

Sep 17, 2025Abstract:As robots become more integrated in society, their ability to coordinate with other robots and humans on multi-modal tasks (those with multiple valid solutions) is crucial. We propose to learn such behaviors from expert demonstrations via imitation learning (IL). However, when expert demonstrations are multi-modal, standard IL approaches can struggle to capture the diverse strategies, hindering effective coordination. Diffusion models are known to be effective at handling complex multi-modal trajectory distributions in single-agent systems. Diffusion models have also excelled in multi-agent scenarios where multi-modality is more common and crucial to learning coordinated behaviors. Typically, diffusion-based approaches require a centralized planner or explicit communication among agents, but this assumption can fail in real-world scenarios where robots must operate independently or with agents like humans that they cannot directly communicate with. Therefore, we propose MIMIC-D, a Centralized Training, Decentralized Execution (CTDE) paradigm for multi-modal multi-agent imitation learning using diffusion policies. Agents are trained jointly with full information, but execute policies using only local information to achieve implicit coordination. We demonstrate in both simulation and hardware experiments that our method recovers multi-modal coordination behavior among agents in a variety of tasks and environments, while improving upon state-of-the-art baselines.

CRAFT: Coaching Reinforcement Learning Autonomously using Foundation Models for Multi-Robot Coordination Tasks

Sep 17, 2025

Abstract:Multi-Agent Reinforcement Learning (MARL) provides a powerful framework for learning coordination in multi-agent systems. However, applying MARL to robotics still remains challenging due to high-dimensional continuous joint action spaces, complex reward design, and non-stationary transitions inherent to decentralized settings. On the other hand, humans learn complex coordination through staged curricula, where long-horizon behaviors are progressively built upon simpler skills. Motivated by this, we propose CRAFT: Coaching Reinforcement learning Autonomously using Foundation models for multi-robot coordination Tasks, a framework that leverages the reasoning capabilities of foundation models to act as a "coach" for multi-robot coordination. CRAFT automatically decomposes long-horizon coordination tasks into sequences of subtasks using the planning capability of Large Language Models (LLMs). In what follows, CRAFT trains each subtask using reward functions generated by LLM, and refines them through a Vision Language Model (VLM)-guided reward-refinement loop. We evaluate CRAFT on multi-quadruped navigation and bimanual manipulation tasks, demonstrating its capability to learn complex coordination behaviors. In addition, we validate the multi-quadruped navigation policy in real hardware experiments.

UDON: Uncertainty-weighted Distributed Optimization for Multi-Robot Neural Implicit Mapping under Extreme Communication Constraints

Sep 16, 2025

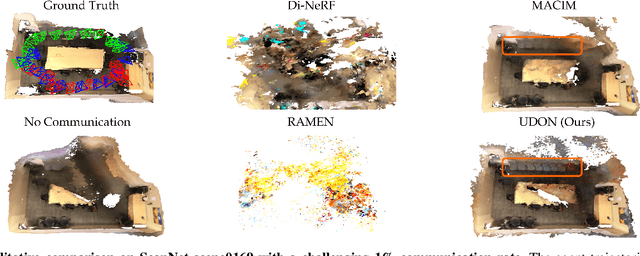

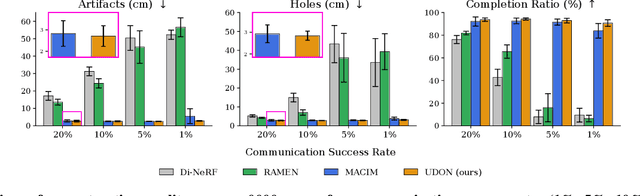

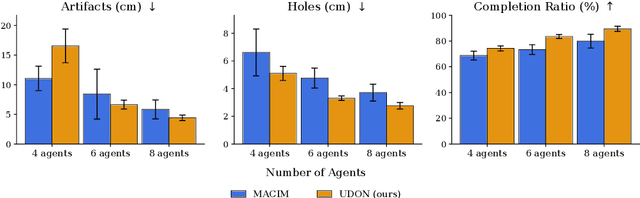

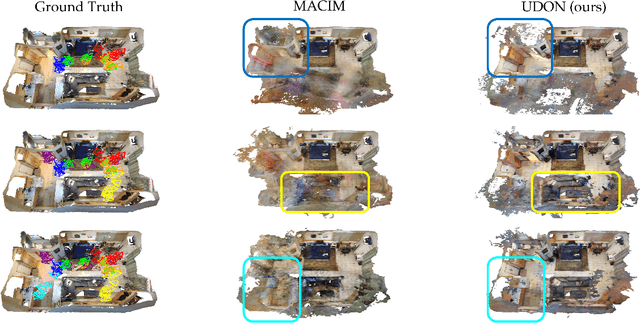

Abstract:Multi-robot mapping with neural implicit representations enables the compact reconstruction of complex environments. However, it demands robustness against communication challenges like packet loss and limited bandwidth. While prior works have introduced various mechanisms to mitigate communication disruptions, performance degradation still occurs under extremely low communication success rates. This paper presents UDON, a real-time multi-agent neural implicit mapping framework that introduces a novel uncertainty-weighted distributed optimization to achieve high-quality mapping under severe communication deterioration. The uncertainty weighting prioritizes more reliable portions of the map, while the distributed optimization isolates and penalizes mapping disagreement between individual pairs of communicating agents. We conduct extensive experiments on standard benchmark datasets and real-world robot hardware. We demonstrate that UDON significantly outperforms existing baselines, maintaining high-fidelity reconstructions and consistent scene representations even under extreme communication degradation (as low as 1% success rate).

RAMEN: Real-time Asynchronous Multi-agent Neural Implicit Mapping

Feb 26, 2025Abstract:Multi-agent neural implicit mapping allows robots to collaboratively capture and reconstruct complex environments with high fidelity. However, existing approaches often rely on synchronous communication, which is impractical in real-world scenarios with limited bandwidth and potential communication interruptions. This paper introduces RAMEN: Real-time Asynchronous Multi-agEnt Neural implicit mapping, a novel approach designed to address this challenge. RAMEN employs an uncertainty-weighted multi-agent consensus optimization algorithm that accounts for communication disruptions. When communication is lost between a pair of agents, each agent retains only an outdated copy of its neighbor's map, with the uncertainty of this copy increasing over time since the last communication. Using gradient update information, we quantify the uncertainty associated with each parameter of the neural network map. Neural network maps from different agents are brought to consensus on the basis of their levels of uncertainty, with consensus biased towards network parameters with lower uncertainty. To achieve this, we derive a weighted variant of the decentralized consensus alternating direction method of multipliers (C-ADMM) algorithm, facilitating robust collaboration among agents with varying communication and update frequencies. Through extensive evaluations on real-world datasets and robot hardware experiments, we demonstrate RAMEN's superior mapping performance under challenging communication conditions.

DDAT: Diffusion Policies Enforcing Dynamically Admissible Robot Trajectories

Feb 20, 2025

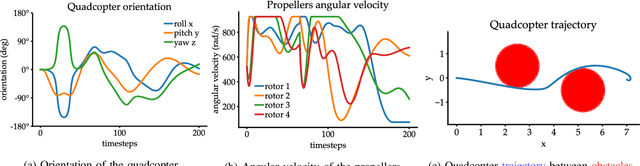

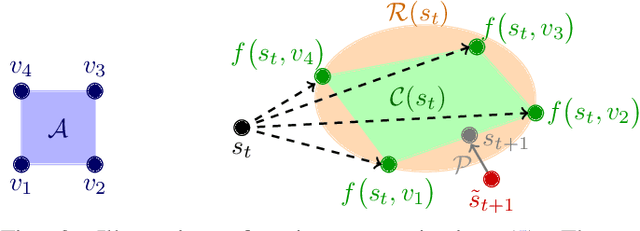

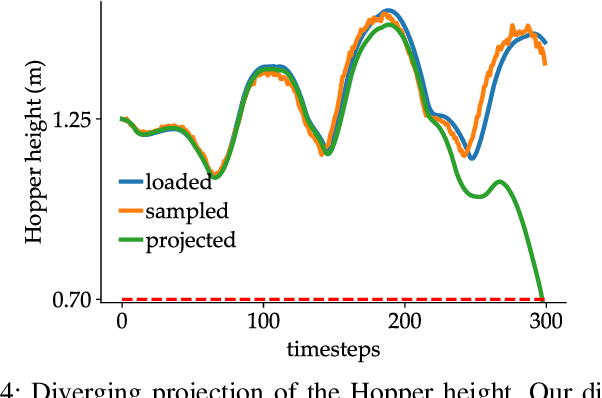

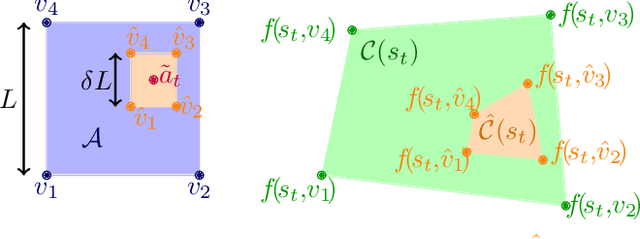

Abstract:Diffusion models excel at creating images and videos thanks to their multimodal generative capabilities. These same capabilities have made diffusion models increasingly popular in robotics research, where they are used for generating robot motion. However, the stochastic nature of diffusion models is fundamentally at odds with the precise dynamical equations describing the feasible motion of robots. Hence, generating dynamically admissible robot trajectories is a challenge for diffusion models. To alleviate this issue, we introduce DDAT: Diffusion policies for Dynamically Admissible Trajectories to generate provably admissible trajectories of black-box robotic systems using diffusion models. A sequence of states is a dynamically admissible trajectory if each state of the sequence belongs to the reachable set of its predecessor by the robot's equations of motion. To generate such trajectories, our diffusion policies project their predictions onto a dynamically admissible manifold during both training and inference to align the objective of the denoiser neural network with the dynamical admissibility constraint. The auto-regressive nature of these projections along with the black-box nature of robot dynamics render these projections immensely challenging. We thus enforce admissibility by iteratively sampling a polytopic under-approximation of the reachable set of a state onto which we project its predicted successor, before iterating this process with the projected successor. By producing accurate trajectories, this projection eliminates the need for diffusion models to continually replan, enabling one-shot long-horizon trajectory planning. We demonstrate that our framework generates higher quality dynamically admissible robot trajectories through extensive simulations on a quadcopter and various MuJoCo environments, along with real-world experiments on a Unitree GO1 and GO2.

Risk-Sensitive Orbital Debris Collision Avoidance using Distributionally Robust Chance Constraints

Dec 23, 2024

Abstract:The exponential increase in orbital debris and active satellites will lead to congested orbits, necessitating more frequent collision avoidance maneuvers by satellites. To minimize fuel consumption while ensuring the safety of satellites, enforcing a chance constraint, which poses an upper bound in collision probability with debris, can serve as an intuitive safety measure. However, accurately evaluating collision probability, which is critical for the effective implementation of chance constraints, remains a non-trivial task. This difficulty arises because uncertainty propagation in nonlinear orbit dynamics typically provides only limited information, such as finite samples or moment estimates about the underlying arbitrary non-Gaussian distributions. Furthermore, even if the full distribution were known, it remains unclear how to effectively compute chance constraints with such non-Gaussian distributions. To address these challenges, we propose a distributionally robust chance-constrained collision avoidance algorithm that provides a sufficient condition for collision probabilities under limited information about the underlying non-Gaussian distribution. Our distributionally robust approach satisfies the chance constraint for all debris position distributions sharing a given mean and covariance, thereby enabling the enforcement of chance constraints with limited distributional information. To achieve computational tractability, the chance constraint is approximated using a Conditional Value-at-Risk (CVaR) constraint, which gives a conservative and tractable approximation of the distributionally robust chance constraint. We validate our algorithm on a real-world inspired satellite-debris conjunction scenario with different uncertainty propagation methods and show that our controller can effectively avoid collisions.

Understanding and Imitating Human-Robot Motion with Restricted Visual Fields

Oct 07, 2024

Abstract:When working around humans, it is important to model their perception limitations in order to predict their behavior more accurately. In this work, we consider agents with a limited field of view, viewing range, and ability to miss objects within viewing range (e.g., transparency). By considering the observation model independently from the motion policy, we can better predict the agent's behavior by considering these limitations and approximating them. We perform a user study where human operators navigate a cluttered scene while scanning the region for obstacles with a limited field of view and range. Using imitation learning, we show that a robot can adopt a human's strategy for observing an environment with limitations on observation and navigate with minimal collision with dynamic and static obstacles. We also show that this learned model helps it successfully navigate a physical hardware vehicle in real time.

MultiNash-PF: A Particle Filtering Approach for Computing Multiple Local Generalized Nash Equilibria in Trajectory Games

Oct 07, 2024

Abstract:Modern-world robotics involves complex environments where multiple autonomous agents must interact with each other and other humans. This necessitates advanced interactive multi-agent motion planning techniques. Generalized Nash equilibrium(GNE), a solution concept in constrained game theory, provides a mathematical model to predict the outcome of interactive motion planning, where each agent needs to account for other agents in the environment. However, in practice, multiple local GNEs may exist. Finding a single GNE itself is complex as it requires solving coupled constrained optimal control problems. Furthermore, finding all such local GNEs requires exploring the solution space of GNEs, which is a challenging task. This work proposes the MultiNash-PF framework to efficiently compute multiple local GNEs in constrained trajectory games. Potential games are a class of games for which a local GNE of a trajectory game can be found by solving a single constrained optimal control problem. We propose MultiNash-PF that integrates the potential game approach with implicit particle filtering, a sample-efficient method for non-convex trajectory optimization. We first formulate the underlying game as a constrained potential game and then utilize the implicit particle filtering to identify the coarse estimates of multiple local minimizers of the game's potential function. MultiNash-PF then refines these estimates with optimization solvers, obtaining different local GNEs. We show through numerical simulations that MultiNash-PF reduces computation time by up to 50\% compared to a baseline approach.

Distributed NeRF Learning for Collaborative Multi-Robot Perception

Sep 30, 2024

Abstract:Effective environment perception is crucial for enabling downstream robotic applications. Individual robotic agents often face occlusion and limited visibility issues, whereas multi-agent systems can offer a more comprehensive mapping of the environment, quicker coverage, and increased fault tolerance. In this paper, we propose a collaborative multi-agent perception system where agents collectively learn a neural radiance field (NeRF) from posed RGB images to represent a scene. Each agent processes its local sensory data and shares only its learned NeRF model with other agents, reducing communication overhead. Given NeRF's low memory footprint, this approach is well-suited for robotic systems with limited bandwidth, where transmitting all raw data is impractical. Our distributed learning framework ensures consistency across agents' local NeRF models, enabling convergence to a unified scene representation. We show the effectiveness of our method through an extensive set of experiments on datasets containing challenging real-world scenes, achieving performance comparable to centralized mapping of the environment where data is sent to a central server for processing. Additionally, we find that multi-agent learning provides regularization benefits, improving geometric consistency in scenarios with sparse input views. We show that in such scenarios, multi-agent mapping can even outperform centralized training.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge