Hongrui Zhao

TACO: Temporal Consensus Optimization for Continual Neural Mapping

Feb 05, 2026

Abstract:Neural implicit mapping has emerged as a powerful paradigm for robotic navigation and scene understanding. However, real-world robotic deployment requires continual adaptation to changing environments under strict memory and computation constraints, which existing mapping systems fail to support. Most prior methods rely on replaying historical observations to preserve consistency and assume static scenes. As a result, they cannot adapt to continual learning in dynamic robotic settings. To address these challenges, we propose TACO (TemporAl Consensus Optimization), a replay-free framework for continual neural mapping. We reformulate mapping as a temporal consensus optimization problem, where we treat past model snapshots as temporal neighbors. Intuitively, our approach resembles a model consulting its own past knowledge. We update the current map by enforcing weighted consensus with historical representations. Our method allows reliable past geometry to constrain optimization while permitting unreliable or outdated regions to be revised in response to new observations. TACO achieves a balance between memory efficiency and adaptability without storing or replaying previous data. Through extensive simulated and real-world experiments, we show that TACO robustly adapts to scene changes, and consistently outperforms other continual learning baselines.
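The idea of "enforcing weighted consensus with historical representations" can be illustrated with a small sketch. This is not the TACO algorithm itself, whose details the abstract does not give. It is one gradient step on a data loss plus a weighted quadratic penalty that pulls each parameter toward past snapshots. The function name `consensus_step`, the per-parameter `weights`, and the `lr` and `rho` values are all assumptions made for illustration.

```python
def consensus_step(theta, data_grad, snapshots, weights, lr=0.1, rho=1.0):
    """One gradient step on a hypothetical objective:

        data_loss(theta) + (rho / 2) * sum_k sum_i w[k][i] * (theta[i] - snap[k][i])**2

    theta      : current map parameters (list of floats)
    data_grad  : gradient of the data loss at theta (assumed given)
    snapshots  : past parameter snapshots, treated as temporal neighbors
    weights    : per-snapshot, per-parameter reliability weights (assumed given)
    """
    updated = []
    for i, (t, g) in enumerate(zip(theta, data_grad)):
        # Pull toward each snapshot, scaled by how reliable that snapshot
        # is judged to be for this particular parameter.
        pull = sum(w[i] * (t - s[i]) for s, w in zip(snapshots, weights))
        updated.append(t - lr * (g + rho * pull))
    return updated


# A parameter with weight 1.0 is drawn toward the snapshot; a parameter
# with weight 0.0 is free to change with new observations.
theta = consensus_step(
    theta=[1.0, 1.0],
    data_grad=[0.0, 0.0],
    snapshots=[[0.0, 0.0]],
    weights=[[1.0, 0.0]],
    lr=0.5,
)
```

In this toy setup a zero weight corresponds to a region considered unreliable or outdated, so only the data term would move that parameter.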

UDON: Uncertainty-weighted Distributed Optimization for Multi-Robot Neural Implicit Mapping under Extreme Communication Constraints

Sep 16, 2025

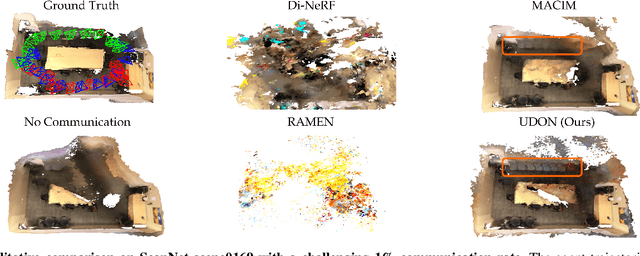

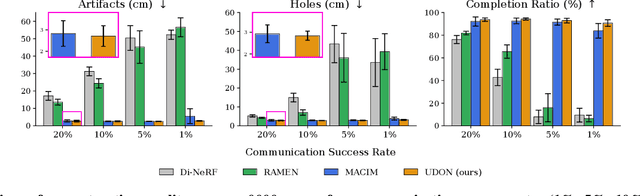

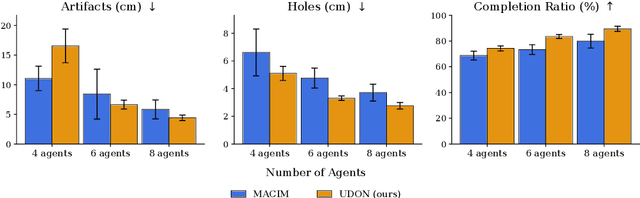

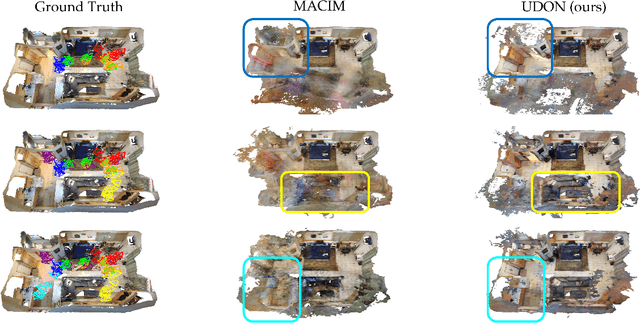

Abstract:Multi-robot mapping with neural implicit representations enables the compact reconstruction of complex environments. However, it demands robustness against communication challenges like packet loss and limited bandwidth. While prior works have introduced various mechanisms to mitigate communication disruptions, performance degradation still occurs under extremely low communication success rates. This paper presents UDON, a real-time multi-agent neural implicit mapping framework that introduces a novel uncertainty-weighted distributed optimization to achieve high-quality mapping under severe communication deterioration. The uncertainty weighting prioritizes more reliable portions of the map, while the distributed optimization isolates and penalizes mapping disagreement between individual pairs of communicating agents. We conduct extensive experiments on standard benchmark datasets and real-world robot hardware. We demonstrate that UDON significantly outperforms existing baselines, maintaining high-fidelity reconstructions and consistent scene representations even under extreme communication degradation (as low as 1% success rate).

RAMEN: Real-time Asynchronous Multi-agent Neural Implicit Mapping

Feb 26, 2025

Abstract:Multi-agent neural implicit mapping allows robots to collaboratively capture and reconstruct complex environments with high fidelity. However, existing approaches often rely on synchronous communication, which is impractical in real-world scenarios with limited bandwidth and potential communication interruptions. This paper introduces RAMEN: Real-time Asynchronous Multi-agEnt Neural implicit mapping, a novel approach designed to address this challenge. RAMEN employs an uncertainty-weighted multi-agent consensus optimization algorithm that accounts for communication disruptions. When communication is lost between a pair of agents, each agent retains only an outdated copy of its neighbor's map, with the uncertainty of this copy increasing over time since the last communication. Using gradient update information, we quantify the uncertainty associated with each parameter of the neural network map. Neural network maps from different agents are brought to consensus on the basis of their levels of uncertainty, with consensus biased towards network parameters with lower uncertainty. To achieve this, we derive a weighted variant of the decentralized consensus alternating direction method of multipliers (C-ADMM) algorithm, facilitating robust collaboration among agents with varying communication and update frequencies. Through extensive evaluations on real-world datasets and robot hardware experiments, we demonstrate RAMEN's superior mapping performance under challenging communication conditions.
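The bias toward lower-uncertainty parameters can be illustrated with a much simpler stand-in than the weighted C-ADMM derived in the paper: a per-parameter precision-weighted average, where a stale neighbor copy has its uncertainty inflated before fusion. The linear growth model, the `growth_rate` value, and both function names are assumptions for illustration only.

```python
def inflate_uncertainty(uncertainty, elapsed, growth_rate=0.1):
    """Grow a stale copy's per-parameter uncertainty with time since last contact.

    The linear growth model is an assumption, not the paper's rule.
    """
    return [u * (1.0 + growth_rate * elapsed) for u in uncertainty]


def weighted_consensus(own_params, own_unc, nbr_params, nbr_unc):
    """Fuse two parameter vectors, weighting each entry by inverse uncertainty."""
    fused = []
    for p, u, q, v in zip(own_params, own_unc, nbr_params, nbr_unc):
        w_own, w_nbr = 1.0 / u, 1.0 / v
        fused.append((w_own * p + w_nbr * q) / (w_own + w_nbr))
    return fused


# With equal uncertainty the result is the midpoint of the two values.
fresh = weighted_consensus([1.0], [1.0], [3.0], [1.0])

# After 10 time units without communication, the neighbor's copy counts for less,
# so the fused value moves toward the agent's own parameter.
stale_unc = inflate_uncertainty([1.0], elapsed=10.0)
stale = weighted_consensus([1.0], [1.0], [3.0], stale_unc)
```

A full consensus ADMM scheme would also maintain dual variables and iterate alongside local training. This sketch only shows how the weighting shifts the agreement point.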

Distributed NeRF Learning for Collaborative Multi-Robot Perception

Sep 30, 2024

Abstract:Effective environment perception is crucial for enabling downstream robotic applications. Individual robotic agents often face occlusion and limited visibility issues, whereas multi-agent systems can offer a more comprehensive mapping of the environment, quicker coverage, and increased fault tolerance. In this paper, we propose a collaborative multi-agent perception system where agents collectively learn a neural radiance field (NeRF) from posed RGB images to represent a scene. Each agent processes its local sensory data and shares only its learned NeRF model with other agents, reducing communication overhead. Given NeRF's low memory footprint, this approach is well-suited for robotic systems with limited bandwidth, where transmitting all raw data is impractical. Our distributed learning framework ensures consistency across agents' local NeRF models, enabling convergence to a unified scene representation. We show the effectiveness of our method through an extensive set of experiments on datasets containing challenging real-world scenes, achieving performance comparable to centralized mapping of the environment where data is sent to a central server for processing. Additionally, we find that multi-agent learning provides regularization benefits, improving geometric consistency in scenarios with sparse input views. We show that in such scenarios, multi-agent mapping can even outperform centralized training.

Real-Time Convolutional Neural Network-Based Star Detection and Centroiding Method for CubeSat Star Tracker

Apr 29, 2024

Abstract:Star trackers are one of the most accurate celestial sensors used for absolute attitude determination. The devices detect stars in captured images and accurately compute their projected centroids on an imaging focal plane with subpixel precision. Traditional algorithms for star detection and centroiding often rely on threshold adjustments for star pixel detection and pixel brightness weighting for centroid computation. However, challenges like high sensor noise and stray light can compromise algorithm performance. This article introduces a Convolutional Neural Network (CNN)-based approach for star detection and centroiding, tailored to address the issues posed by noisy star tracker images in the presence of stray light and other artifacts. Trained using simulated star images overlaid with real sensor noise and stray light, the CNN produces both a binary segmentation map distinguishing star pixels from the background and a distance map indicating each pixel's proximity to the nearest star centroid. Leveraging this distance information alongside pixel coordinates transforms centroid calculations into a set of trilateration problems solvable via the least squares method. Our method employs efficient UNet variants for the underlying CNN architectures, and the variants' performances are evaluated. Comprehensive testing has been undertaken with synthetic image evaluations, hardware-in-the-loop assessments, and night sky tests. The tests consistently demonstrated that our method outperforms several existing algorithms in centroiding accuracy and exhibits superior resilience to high sensor noise and stray light interference. An additional benefit of our algorithms is that they can be executed in real-time on low-power edge AI processors.
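The trilateration step can be sketched as follows, assuming each star pixel comes with a predicted Euclidean distance to the centroid. Subtracting the first pixel's circle equation from the others gives a linear system in the centroid coordinates, which is then solved by ordinary least squares. This is the standard linearized formulation and may differ from the paper's exact one. The function name and the sample values are hypothetical.

```python
import math


def trilaterate_centroid(pixels, distances):
    """Estimate a star centroid (cx, cy) by linear least squares.

    pixels    : list of (x, y) pixel coordinates belonging to one star
    distances : predicted distance from each pixel to the centroid
    """
    if len(pixels) < 3 or len(pixels) != len(distances):
        raise ValueError("need at least three pixels with matching distances")

    (x0, y0), d0 = pixels[0], distances[0]
    # Accumulate the 2x2 normal equations (A^T A) c = A^T r, where each row is
    #   2(x - x0) * cx + 2(y - y0) * cy = (x^2 - x0^2) + (y^2 - y0^2) - (d^2 - d0^2)
    s_aa = s_ab = s_bb = s_ar = s_br = 0.0
    for (x, y), d in zip(pixels[1:], distances[1:]):
        a = 2.0 * (x - x0)
        b = 2.0 * (y - y0)
        r = (x * x - x0 * x0) + (y * y - y0 * y0) - (d * d - d0 * d0)
        s_aa += a * a
        s_ab += a * b
        s_bb += b * b
        s_ar += a * r
        s_br += b * r

    det = s_aa * s_bb - s_ab * s_ab
    if abs(det) < 1e-12:
        raise ValueError("pixels are collinear; centroid is not uniquely determined")
    cx = (s_ar * s_bb - s_ab * s_br) / det
    cy = (s_aa * s_br - s_ab * s_ar) / det
    return cx, cy


# Synthetic check: noise-free distances from four pixels to a known centroid.
true_cx, true_cy = 10.3, 7.6
pixels = [(10, 7), (11, 7), (10, 8), (11, 8)]
distances = [math.hypot(x - true_cx, y - true_cy) for x, y in pixels]
cx, cy = trilaterate_centroid(pixels, distances)
```

With noisy distance predictions the same normal equations give the least-squares estimate. Using more pixels per star averages out per-pixel errors.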
