Nathanael Perraudin

Evaluating GANs via Duality

Nov 13, 2018

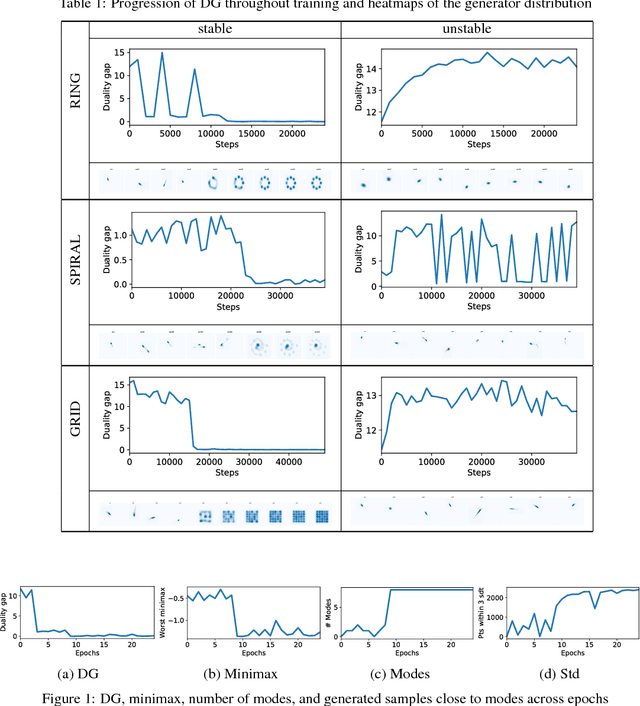

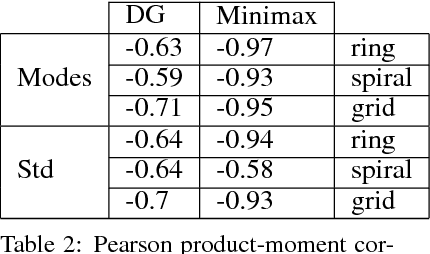

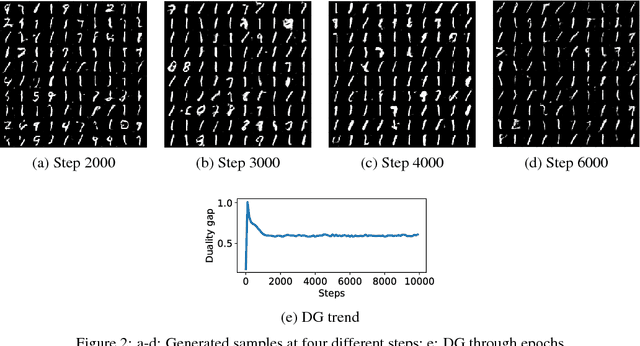

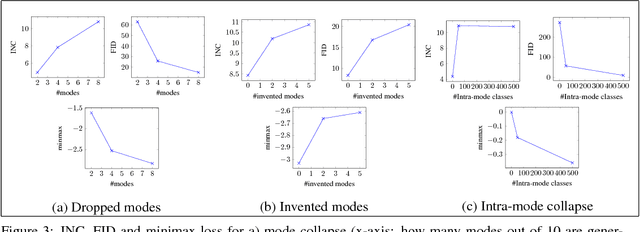

Abstract: Generative Adversarial Networks (GANs) have shown great results in accurately modeling complex distributions, but their training is known to be difficult due to instabilities caused by a challenging minimax optimization problem. This is especially troublesome given the lack of an evaluation metric that can reliably detect non-convergent behaviors. We leverage the notion of duality gap from game theory in order to propose a novel convergence metric for GANs that has low computational cost. We verify the validity of the proposed metric for various test scenarios commonly used in the literature.
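
To make the notion of a duality gap concrete, here is a minimal sketch on a toy two-player zero-sum matrix game rather than on a GAN. The game (matching pennies), the function name `duality_gap`, and the mixed-strategy setup are illustrative assumptions and are not taken from the paper. For a payoff $p^\top A q$ that the row player minimizes and the column player maximizes, the gap is the worst-case payoff against $p$ minus the worst-case payoff against $q$; it is non-negative and equals zero at an equilibrium.

```python
import numpy as np


def duality_gap(A, p, q):
    """Duality gap of mixed strategies (p, q) in the game min_p max_q p^T A q.

    Hypothetical helper for illustration only; not the paper's GAN estimator.
    """
    A = np.asarray(A, dtype=float)
    p = np.asarray(p, dtype=float)
    q = np.asarray(q, dtype=float)
    # Best response of the maximizing player against p: a linear function on
    # the simplex attains its maximum at a pure strategy.
    worst_case_for_p = np.max(A.T @ p)
    # Best response of the minimizing player against q.
    worst_case_for_q = np.min(A @ q)
    return float(worst_case_for_p - worst_case_for_q)


# Matching pennies: the unique equilibrium is uniform play by both players.
MATCHING_PENNIES = np.array([[1.0, -1.0], [-1.0, 1.0]])

gap_at_equilibrium = duality_gap(MATCHING_PENNIES, [0.5, 0.5], [0.5, 0.5])
gap_at_pure = duality_gap(MATCHING_PENNIES, [1.0, 0.0], [1.0, 0.0])
```

In this toy game the best responses are available in closed form. In a GAN they are not, so any practical estimate of the gap has to approximate the two worst-case players in some way.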

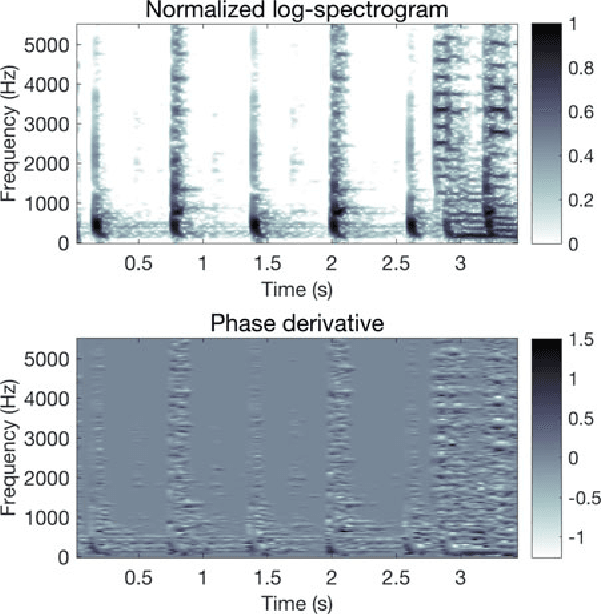

Improving DNN-based Music Source Separation using Phase Features

Jul 16, 2018

Abstract: Music source separation with deep neural networks typically relies only on amplitude features. In this paper we show that additional phase features can improve the separation performance. Using the theoretical relationship between STFT phase and amplitude, we conjecture that derivatives of the phase are a better feature representation than the raw phase. We verify this conjecture experimentally and propose a new DNN architecture which combines amplitude and phase. This joint approach achieves a better signal-to-distortion ratio on the DSD100 dataset for all instruments compared to a network that uses only amplitude features. The bass instrument in particular benefits from the phase information.

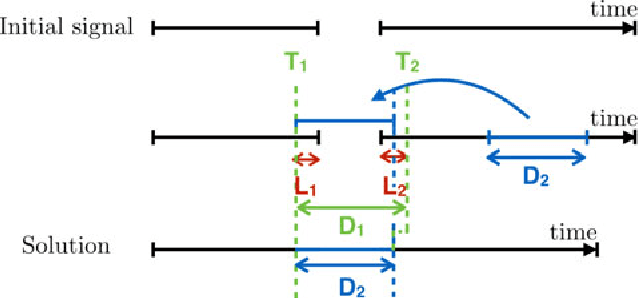

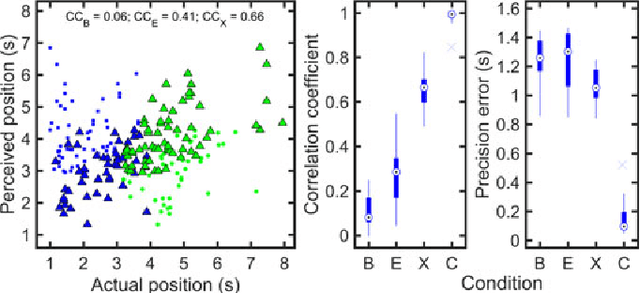

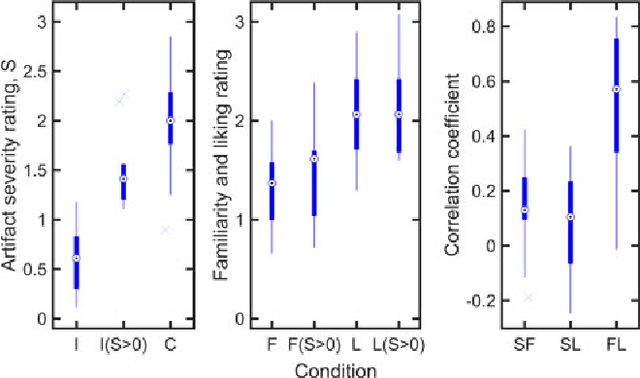

Inpainting of long audio segments with similarity graphs

Feb 23, 2018

Abstract: We present a novel method for the compensation of long-duration data loss in audio signals, in particular music. The concealment of such signal defects is based on a graph that encodes signal structure in terms of time-persistent spectral similarity. A suitable candidate segment for the substitution of the lost content is proposed by an intuitive optimization scheme and smoothly inserted into the gap, i.e., the lost or distorted signal region. Extensive listening tests show that the proposed algorithm provides highly promising results when applied to a variety of real-world music signals.

UNLocBoX: A MATLAB convex optimization toolbox for proximal-splitting methods

Dec 27, 2016

Abstract: Convex optimization is an essential tool for machine learning, as many of its problems can be formulated as minimization problems of specific objective functions. While there is a large variety of algorithms available to solve convex problems, we can argue that it becomes more and more important to focus on efficient, scalable methods that can deal with big data. When the objective function can be written as a sum of "simple" terms, proximal splitting methods are a good choice. UNLocBoX is a MATLAB library that implements many of these methods, designed to solve convex optimization problems of the form $\min_{x \in \mathbb{R}^N} \sum_{n=1}^K f_n(x)$. It contains the most recent solvers such as FISTA, Douglas-Rachford, and SDMM, as well as primal-dual techniques such as Chambolle-Pock and forward-backward-forward. It also includes an extensive list of common proximal operators that can be combined, allowing for a quick implementation of a large variety of convex problems.
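
As an illustration of what a proximal splitting method does, the sketch below runs forward-backward iterations on the two-term objective $\tfrac{1}{2}\|Ax - b\|_2^2 + \lambda \|x\|_1$. It is written in Python with NumPy and does not use or reproduce the UNLocBoX API; the function names `soft_threshold` and `forward_backward`, the choice of objective, and the fixed iteration count are assumptions made for this example. The smooth term is handled by a gradient step and the non-smooth term by its proximal operator, which for the $\ell_1$ norm is soft thresholding.

```python
import numpy as np


def soft_threshold(v, t):
    """Proximal operator of t * ||.||_1, applied elementwise."""
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)


def forward_backward(A, b, lam, n_iter=500):
    """Minimize 0.5 * ||A x - b||^2 + lam * ||x||_1 by forward-backward steps.

    Hypothetical helper for illustration; not a UNLocBoX function.
    """
    A = np.asarray(A, dtype=float)
    b = np.asarray(b, dtype=float)
    # Step size 1/L, where L = ||A||_2^2 is the Lipschitz constant of the
    # gradient of the smooth term.
    step = 1.0 / np.linalg.norm(A, 2) ** 2
    x = np.zeros(A.shape[1])
    for _ in range(n_iter):
        grad = A.T @ (A @ x - b)  # forward (gradient) step on the smooth term
        x = soft_threshold(x - step * grad, step * lam)  # backward (prox) step
    return x


# With A equal to the identity, the minimizer is soft_threshold(b, lam).
x_hat = forward_backward(np.eye(3), np.array([3.0, -0.5, 1.0]), lam=1.0)
```

Splitting the objective this way means each term only needs either a gradient or a proximal operator, which is why a library of reusable proximal operators covers many different problems.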

Compressive PCA for Low-Rank Matrices on Graphs

Oct 04, 2016

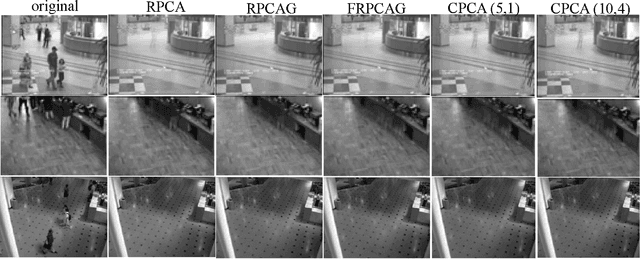

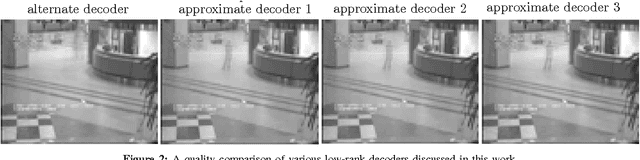

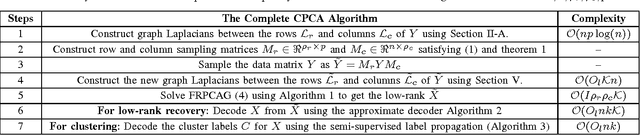

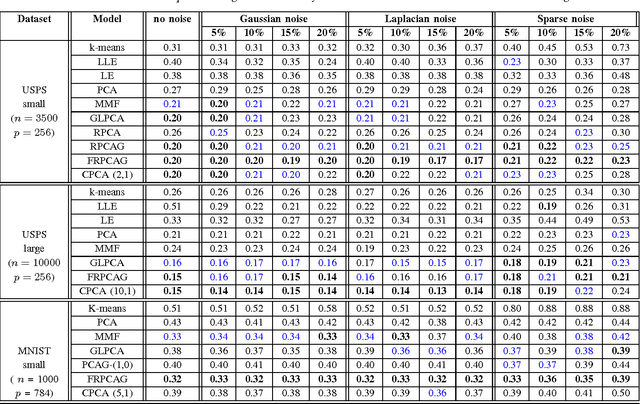

Abstract: We introduce a novel framework for the approximate recovery of data matrices which are low-rank on graphs, from sampled measurements. The rows and columns of such matrices belong to the span of the first few eigenvectors of the graphs constructed between their rows and columns. We leverage this property to recover the non-linear low-rank structures efficiently from sampled data measurements, at a low cost (linear in $n$). First, a Restricted Isometry Property (RIP) condition is introduced for efficient uniform sampling of the rows and columns of such matrices based on the cumulative coherence of graph eigenvectors. Secondly, a state-of-the-art fast low-rank recovery method is suggested for the sampled data. Finally, several efficient, parallel and parameter-free decoders are presented along with their theoretical analysis for decoding the low-rank and cluster indicators for the full data matrix. Thus, we overcome the computational limitations of the standard linear low-rank recovery methods for big datasets. Our method can also be seen as a major step towards efficient recovery of non-linear low-rank structures. For a matrix of size $n \times p$, on a single-core machine, our method gains a speed-up of $p^2/k$ over Robust Principal Component Analysis (RPCA), where $k \ll p$ is the subspace dimension. Numerically, we can recover a low-rank matrix of size $10304 \times 1000$ 100 times faster than Robust PCA.

Predicting the evolution of stationary graph signals

Jul 12, 2016

Abstract: An emerging way of tackling the dimensionality issues arising in the modeling of a multivariate process is to assume that the inherent data structure can be captured by a graph. Nevertheless, though state-of-the-art graph-based methods have been successful for many learning tasks, they do not consider time-evolving signals and thus are not suitable for prediction. Based on the recently introduced joint stationarity framework for time-vertex processes, this letter considers multivariate models that exploit the graph topology to facilitate prediction. The resulting method yields similar accuracy to the joint (time-graph) mean-squared error estimator but at lower complexity, and outperforms purely time-based methods.

Towards stationary time-vertex signal processing

Jun 22, 2016

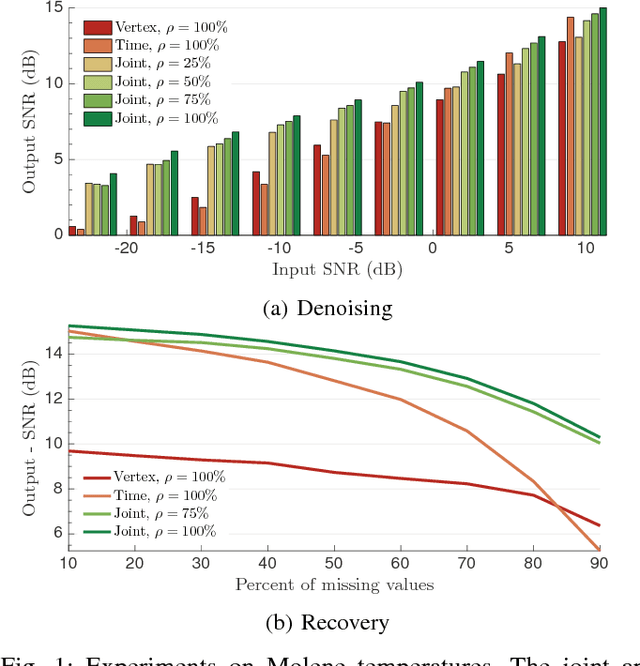

Abstract: Graph-based methods for signal processing have shown promise for the analysis of data exhibiting irregular structure, such as those found in social, transportation, and sensor networks. Yet, though these systems are often dynamic, state-of-the-art methods for signal processing on graphs ignore the dimension of time, treating successive graph signals independently or taking a global average. To address this shortcoming, this paper considers the statistical analysis of time-varying graph signals. We introduce a novel definition of joint (time-vertex) stationarity, which generalizes the classical definition of time stationarity and the more recent definition appropriate for graphs. Joint stationarity gives rise to a scalable and provably optimal Wiener optimization framework for joint denoising, semi-supervised learning, or more generally inverting a linear operator. Experimental results on real weather data demonstrate that taking into account graph and time dimensions jointly can yield significant accuracy improvements in the reconstruction effort.

Tracking Time-Vertex Propagation using Dynamic Graph Wavelets

Jun 21, 2016

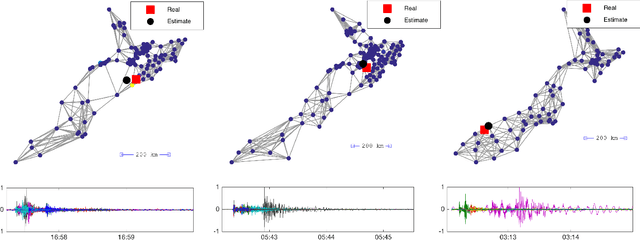

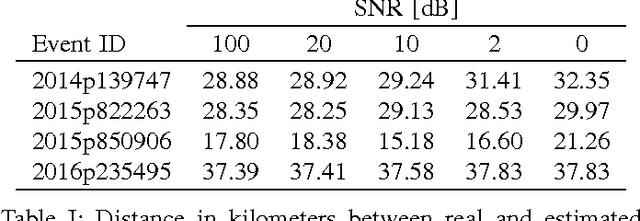

Abstract: Graph Signal Processing generalizes classical signal processing to signals or data indexed by the vertices of a weighted graph. So far, research efforts have focused on static graph signals. However, numerous applications involve graph signals evolving in time, such as the spreading or propagation of waves on a network. The analysis of this type of data requires a new set of methods that fully takes into account the time and graph dimensions. We propose a novel class of wavelet frames named Dynamic Graph Wavelets, whose time-vertex evolution follows a dynamic process. We demonstrate that this set of functions can be combined with sparsity-based approaches such as compressive sensing to reveal information on the dynamic processes occurring on a graph. Experiments on real seismological data show the efficiency of the technique, which makes it possible to estimate the epicenter of earthquake events recorded by a seismic network.

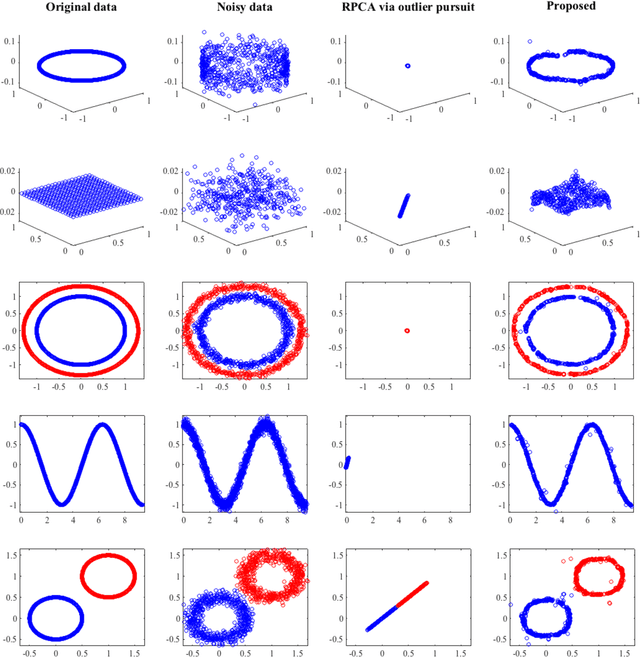

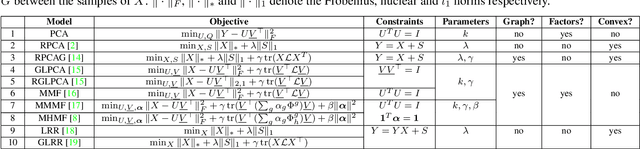

Low-Rank Matrices on Graphs: Generalized Recovery & Applications

May 25, 2016

Abstract: Many real-world datasets subsume a linear or non-linear low-rank structure in a very low-dimensional space. Unfortunately, one often has very little or no information about the geometry of the space, resulting in a highly under-determined recovery problem. Under certain circumstances, state-of-the-art algorithms provide an exact recovery for linear low-rank structures, but at the expense of algorithms that scale poorly because they use the nuclear norm. However, the case of non-linear structures remains unresolved. We revisit the problem of low-rank recovery from a totally different perspective, involving graphs which encode pairwise similarity between the data samples and features. Surprisingly, our analysis confirms that it is possible to recover many approximate linear and non-linear low-rank structures with recovery guarantees with a set of highly scalable and efficient algorithms. We call such data matrices *low-rank matrices on graphs* and show that many real-world datasets satisfy this assumption approximately due to underlying stationarity. Our detailed theoretical and experimental analysis unveils the power of the simple, yet very novel recovery framework *Fast Robust PCA on Graphs*.

Global and Local Uncertainty Principles for Signals on Graphs

Mar 10, 2016

Abstract: Uncertainty principles such as Heisenberg's provide limits on the time-frequency concentration of a signal, and constitute an important theoretical tool for designing and evaluating linear signal transforms. Generalizations of such principles to the graph setting can inform dictionary design for graph signals, lead to algorithms for reconstructing missing information from graph signals via sparse representations, and yield new graph analysis tools. While previous work has focused on generalizing notions of spreads of a graph signal in the vertex and graph spectral domains, our approach is to generalize the methods of Lieb in order to develop uncertainty principles that provide limits on the concentration of the analysis coefficients of any graph signal under a dictionary transform whose atoms are jointly localized in the vertex and graph spectral domains. One challenge we highlight is that due to the inhomogeneity of the underlying graph data domain, the local structure in a single small region of the graph can drastically affect the uncertainty bounds for signals concentrated in different regions of the graph, limiting the information provided by global uncertainty principles. Accordingly, we suggest a new way to incorporate a notion of locality, and develop local uncertainty principles that bound the concentration of the analysis coefficients of each atom of a localized graph spectral filter frame in terms of quantities that depend on the local structure of the graph around the center vertex of the given atom. Finally, we demonstrate how our proposed local uncertainty measures can improve the random sampling of graph signals.
