Michael Manhart

Gradient-Based Geometry Learning for Fan-Beam CT Reconstruction

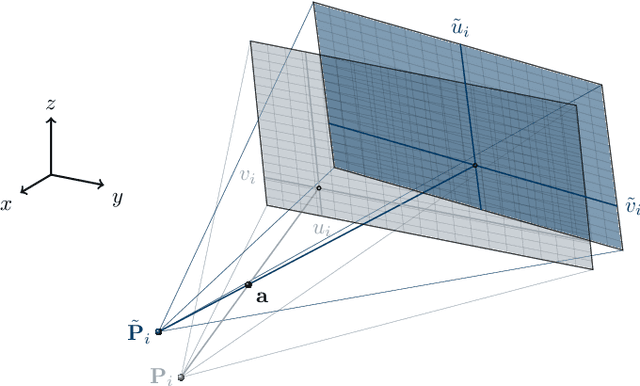

Dec 05, 2022

Abstract: Incorporating computed tomography (CT) reconstruction operators into differentiable pipelines has proven beneficial in many applications. Such approaches usually focus on the projection data and keep the acquisition geometry fixed. However, precise knowledge of the acquisition geometry is essential for high quality reconstruction results. In this paper, the differentiable formulation of fan-beam CT reconstruction is extended to the acquisition geometry. This allows gradient information to be propagated from a loss function on the reconstructed image into the geometry parameters. As a proof-of-concept experiment, this idea is applied to rigid motion compensation. The cost function is parameterized by a trained neural network which regresses an image quality metric from the motion-affected reconstruction alone. Using the proposed method, we are the first to optimize such an autofocus-inspired algorithm based on analytical gradients. The algorithm achieves a reduction in MSE by 35.5 % and an improvement in SSIM by 12.6 % over the motion-affected reconstruction. Besides motion compensation, we see further use cases of our differentiable method for scanner calibration or hybrid techniques employing deep models.

Appearance Learning for Image-based Motion Estimation in Tomography

Jun 18, 2020

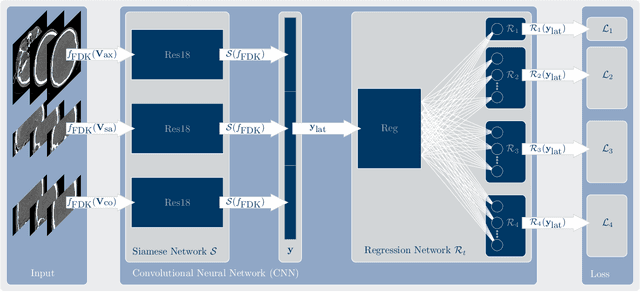

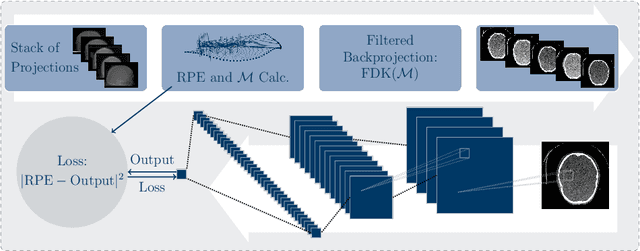

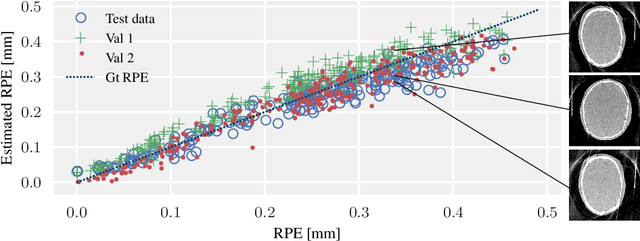

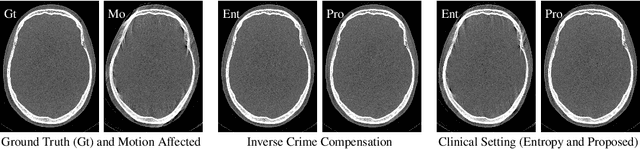

Abstract: In tomographic imaging, anatomical structures are reconstructed by applying a pseudo-inverse forward model to acquired signals. Geometric information within this process usually depends on the system setting only, i.e., the scanner position or readout direction. Patient motion therefore corrupts the geometry alignment in the reconstruction process, resulting in motion artifacts. We propose an appearance learning approach that recognizes the structures of rigid motion independently of the scanned object. To this end, we train a siamese triplet network to predict the reprojection error (RPE) for the complete acquisition as well as an approximate distribution of the RPE along the single views from the reconstructed volume in a multi-task learning approach. The RPE measures the motion-induced geometric deviations independent of the object based on virtual marker positions, which are available during training. We train our network using 27 patients and deploy a 21-4-2 split for training, validation and testing. On average, we achieve a residual mean RPE of 0.013 mm with an inter-patient standard deviation of 0.022 mm. This is twice the accuracy of previously published results. In a motion estimation benchmark, the proposed approach achieves superior results in comparison with two state-of-the-art measures in nine out of twelve experiments. The clinical applicability of the proposed method is demonstrated on a motion-affected clinical dataset.
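
The abstract does not spell out how the RPE is computed, so the following is only a minimal sketch of one plausible reading: virtual 3D markers are projected once with the reference geometry and once with the motion-affected geometry, and the mean distance on the detector is reported. The names `project` and `mean_rpe`, the 3x4 projection matrices, and the toy values are illustrative assumptions, not the authors' implementation.

```python
import math
from typing import List, Sequence, Tuple

Matrix = Sequence[Sequence[float]]  # 3x4 projection matrix (assumed geometry model)
Point3 = Tuple[float, float, float]


def project(P: Matrix, point: Point3) -> Tuple[float, float]:
    """Project a 3D point to 2D detector coordinates with a 3x4 matrix."""
    x, y, z = point
    u, v, w = (row[0] * x + row[1] * y + row[2] * z + row[3] for row in P)
    return u / w, v / w


def mean_rpe(reference: List[Matrix], estimated: List[Matrix],
             markers: List[Point3]) -> float:
    """Mean detector-plane distance between marker projections under two geometries."""
    distances = []
    for P_ref, P_est in zip(reference, estimated):  # one matrix pair per view
        for marker in markers:
            u0, v0 = project(P_ref, marker)
            u1, v1 = project(P_est, marker)
            distances.append(math.hypot(u1 - u0, v1 - v0))
    return sum(distances) / len(distances)


# Toy example: a single view whose geometry is shifted by (3, 4) detector units.
P_ref = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 0, 1]]
P_shifted = [[1, 0, 0, 3], [0, 1, 0, 4], [0, 0, 0, 1]]
markers = [(0.0, 0.0, 0.0), (1.0, 2.0, 3.0)]

rpe_aligned = mean_rpe([P_ref], [P_ref], markers)
rpe_shifted = mean_rpe([P_ref], [P_shifted], markers)
print(rpe_aligned, rpe_shifted)
```

Because the measure depends only on the two geometries and the marker positions, it is independent of the scanned object, which matches the property the abstract emphasizes.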

Data Consistent CT Reconstruction from Insufficient Data with Learned Prior Images

May 20, 2020

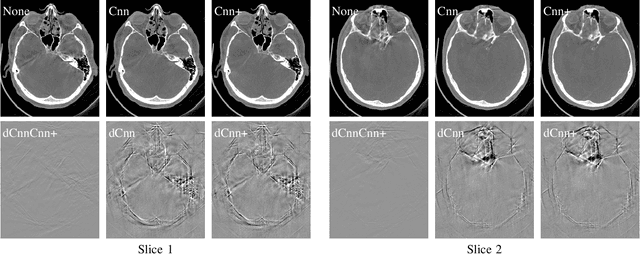

Abstract: Image reconstruction from insufficient data is common in computed tomography (CT), e.g., image reconstruction from truncated data, limited-angle data and sparse-view data. Deep learning has achieved impressive results in this field. However, the robustness of deep learning methods is still a concern for clinical applications due to the following two challenges: a) With limited access to sufficient training data, a learned deep learning model may not generalize well to unseen data; b) Deep learning models are sensitive to noise. Therefore, the quality of images processed by neural networks alone may be inadequate. In this work, we investigate the robustness of deep learning in CT image reconstruction by showing false negative and false positive lesion cases. Since learning-based images with incorrect structures are likely not consistent with measured projection data, we propose a data consistent reconstruction (DCR) method to improve their image quality, which combines the advantages of compressed sensing and deep learning: First, a prior image is generated by deep learning. Afterwards, unmeasured projection data are inpainted by forward projection of the prior image. Finally, iterative reconstruction with reweighted total variation regularization is applied, integrating data consistency for measured data and learned prior information for missing data. The efficacy of the proposed method is demonstrated in cone-beam CT with truncated data, limited-angle data and sparse-view data, respectively. For example, for truncated data, DCR achieves a mean root-mean-square error of 24 HU and a mean structural similarity index of 0.999 inside the field-of-view for different patients in the noisy case, while the state-of-the-art U-Net method achieves 55 HU and 0.995 respectively for these two metrics.
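
The second DCR step, inpainting unmeasured projection data from the forward projection of the prior image, can be illustrated with a small sketch. It assumes the forward projection of the prior has already been computed and that a boolean mask marks which detector samples were measured; the function name `inpaint_sinogram` and the toy numbers are hypothetical, and the deep learning prior and the iterative reconstruction are not shown.

```python
from typing import List

Sinogram = List[List[float]]  # rows are views, columns are detector pixels


def inpaint_sinogram(measured: Sinogram, prior_projection: Sinogram,
                     measured_mask: List[List[bool]]) -> Sinogram:
    """Keep measured samples; fill unmeasured ones from the prior's forward projection."""
    completed = []
    for meas_row, prior_row, mask_row in zip(measured, prior_projection, measured_mask):
        completed.append([m if is_measured else p
                          for m, p, is_measured in zip(meas_row, prior_row, mask_row)])
    return completed


# Toy truncated acquisition: the two outer detector pixels were not measured.
measured = [[0.0, 2.0, 3.0, 0.0],
            [0.0, 2.5, 3.5, 0.0]]
prior_projection = [[1.0, 2.1, 2.9, 1.2],
                    [1.1, 2.4, 3.6, 1.3]]
measured_mask = [[False, True, True, False],
                 [False, True, True, False]]

completed = inpaint_sinogram(measured, prior_projection, measured_mask)
print(completed)
```

Measured samples pass through unchanged, so any inaccuracy of the learned prior is confined to the samples that were never acquired.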

Deep autofocus with cone-beam CT consistency constraint

Dec 04, 2019

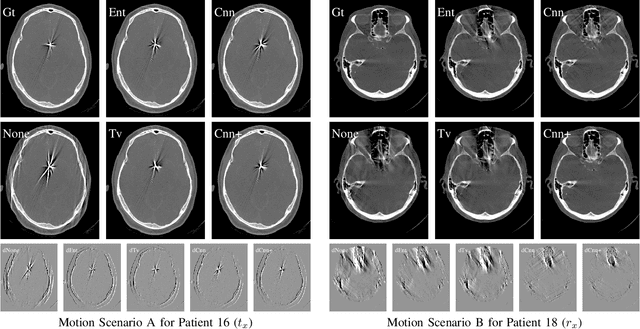

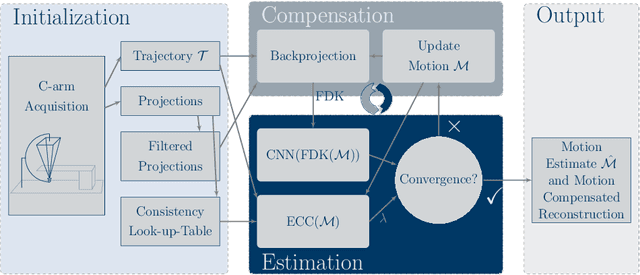

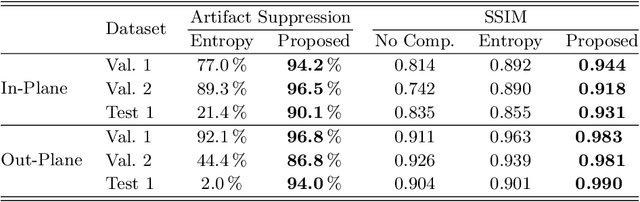

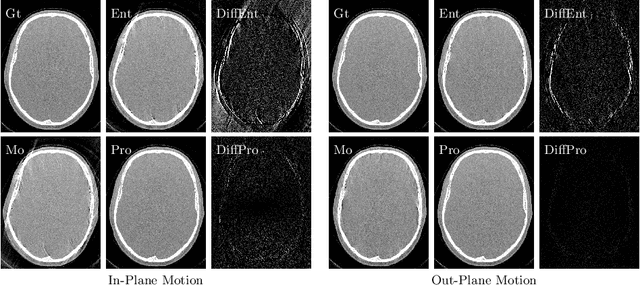

Abstract: High quality reconstruction with interventional C-arm cone-beam computed tomography (CBCT) requires exact geometry information. If the geometry information is corrupted, e.g., by unexpected patient or system movement, the measured signal is misplaced in the backprojection operation. With prolonged acquisition times of interventional C-arm CBCT, the likelihood of rigid patient motion increases. To adapt the backprojection operation accordingly, a motion estimation strategy is necessary. Recently, a novel learning-based approach was proposed, capable of compensating motions within the acquisition plane. We extend this method by a CBCT consistency constraint, which was proven to be efficient for motions perpendicular to the acquisition plane. By the synergistic combination of these two measures, both in-plane and out-of-plane motion are well detectable, achieving an average artifact suppression of 93%. This outperforms the entropy-based state-of-the-art autofocus measure, which achieves an average artifact suppression of 54%.

Image Quality Assessment for Rigid Motion Compensation

Oct 09, 2019

Abstract: Diagnostic stroke imaging with C-arm cone-beam computed tomography (CBCT) enables reduction of time-to-therapy for endovascular procedures. However, the prolonged acquisition time compared to helical CT increases the likelihood of rigid patient motion. Rigid motion corrupts the geometry alignment assumed during reconstruction, resulting in image blurring or streaking artifacts. To reestablish the geometry, we estimate the motion trajectory by an autofocus method guided by a neural network, which was trained to regress the reprojection error, based on the image information of a reconstructed slice. The network was trained with CBCT scans from 19 patients and evaluated using an additional test patient. It adapts well to unseen motion amplitudes and achieves superior results in a motion estimation benchmark compared to the commonly used entropy-based method.
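
For context on the entropy-based baseline, the sketch below computes the Shannon entropy of an image's gray-value histogram, a common form of autofocus measure in which lower entropy is taken to indicate a sharper image. The abstract does not specify the exact formulation used in the benchmark, so the function name `histogram_entropy`, the bin count, and the toy images are assumptions.

```python
import math
from collections import Counter
from typing import List


def histogram_entropy(image: List[List[float]], bins: int = 16) -> float:
    """Shannon entropy (in bits) of the gray-value histogram of an image."""
    values = [v for row in image for v in row]
    lo, hi = min(values), max(values)
    if hi == lo:
        return 0.0  # a constant image occupies a single bin
    width = (hi - lo) / bins
    # Clamp the index so the maximum value falls into the last bin.
    counts = Counter(min(int((v - lo) / width), bins - 1) for v in values)
    n = len(values)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


# A sharp edge uses two gray values; blurring it spreads values over more bins.
sharp = [[0.0, 0.0, 1.0, 1.0]] * 4
blurred = [[0.0, 0.25, 0.75, 1.0]] * 4

entropy_sharp = histogram_entropy(sharp)
entropy_blurred = histogram_entropy(blurred)
print(entropy_sharp, entropy_blurred)
```

An autofocus method of this kind would search for the motion trajectory whose reconstruction minimizes the measure; the learned approach in the abstract replaces the hand-crafted entropy with a network that regresses the reprojection error.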

Data Consistent Artifact Reduction for Limited Angle Tomography with Deep Learning Prior

Aug 28, 2019

Abstract: Robustness of deep learning methods for limited angle tomography is challenged by two major factors: a) due to insufficient training data the network may not generalize well to unseen data; b) deep learning methods are sensitive to noise. Thus, generating reconstructed images directly from a neural network appears inadequate. We propose to constrain the reconstructed images to be consistent with the measured projection data, while the unmeasured information is complemented by learning-based methods. For this purpose, a data consistent artifact reduction (DCAR) method is introduced: First, a prior image is generated from an initial limited angle reconstruction via deep learning as a substitute for missing information. Afterwards, a conventional iterative reconstruction algorithm is applied, integrating the data consistency in the measured angular range and the prior information in the missing angular range. This ensures data integrity in the measured area, while inaccuracies incorporated by the deep learning prior lie only in areas where no information is acquired. The proposed DCAR method achieves significant image quality improvement: for 120-degree cone-beam limited angle tomography, more than 10% RMSE reduction in the noise-free case and more than 24% RMSE reduction in the noisy case compared with a state-of-the-art U-Net-based method.
