Mehdi Bennis

LiQSS: Post-Transformer Linear Quantum-Inspired State-Space Tensor Networks for Real-Time 6G

Jan 18, 2026

Abstract:Proactive and agentic control in Sixth-Generation (6G) Open Radio Access Networks (O-RAN) requires control-grade prediction under stringent Near-Real-Time (Near-RT) latency and computational constraints. While Transformer-based models are effective for sequence modeling, their quadratic complexity limits scalability in Near-RT RAN Intelligent Controller (RIC) analytics. This paper investigates a post-Transformer design paradigm for efficient radio telemetry forecasting. We propose a quantum-inspired many-body state-space tensor network that replaces self-attention with stable structured state-space dynamics kernels, enabling linear-time sequence modeling. Tensor-network factorizations in the form of Tensor Train (TT) / Matrix Product State (MPS) representations are employed to reduce parameterization and data movement in both input projections and prediction heads, while lightweight channel gating and mixing layers capture non-stationary cross-Key Performance Indicator (KPI) dependencies. The proposed model is instantiated as an agentic perceive-predict xApp and evaluated on a bespoke O-RAN KPI time-series dataset comprising 59,441 sliding windows across 13 KPIs, using Reference Signal Received Power (RSRP) forecasting as a representative use case. Our proposed Linear Quantum-Inspired State-Space (LiQSS) model is 10.8x-15.8x smaller and approximately 1.4x faster than prior structured state-space baselines. Relative to Transformer-based models, LiQSS achieves up to a 155x reduction in parameter count and up to 2.74x faster inference, without sacrificing forecasting accuracy.
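The two efficiency levers named in this abstract can be illustrated with a small sketch. It assumes a generic diagonal linear state-space recurrence (not the exact LiQSS kernel, whose parameterization is not given here) to show why a scan is linear in sequence length. It also counts the parameters of a TT/MPS-factorized weight matrix against a dense one. The function names and the mode/rank choices are hypothetical.

```python
import math
from typing import List, Sequence


def diagonal_ssm_scan(u: Sequence[float], a: Sequence[float],
                      b: Sequence[float], c: Sequence[float]) -> List[float]:
    """Scan x_k = a * x_{k-1} + b * u_k (elementwise), y_k = <c, x_k>.

    Each state dimension evolves independently (diagonal transition), so the
    cost is O(L * N) for sequence length L and state size N: linear in L.
    """
    if not len(a) == len(b) == len(c):
        raise ValueError("a, b and c must share the state dimension N")
    if any(abs(ai) >= 1.0 for ai in a):
        raise ValueError("|a_i| < 1 keeps the recurrence stable (decaying)")
    state = [0.0] * len(a)
    outputs: List[float] = []
    for u_k in u:
        state = [ai * xi + bi * u_k for ai, xi, bi in zip(a, state, b)]
        outputs.append(sum(ci * xi for ci, xi in zip(c, state)))
    return outputs


def tt_matrix_params(in_modes: Sequence[int], out_modes: Sequence[int],
                     ranks: Sequence[int]) -> int:
    """Parameters of a TT/MPS-factorized matrix with cores of shape
    (r_{k-1}, m_k, n_k, r_k), where the boundary ranks r_0 = r_d = 1."""
    if len(in_modes) != len(out_modes) or len(ranks) != len(in_modes) - 1:
        raise ValueError("need d input modes, d output modes and d - 1 inner ranks")
    r = [1, *ranks, 1]
    return sum(r[k] * m * n * r[k + 1]
               for k, (m, n) in enumerate(zip(in_modes, out_modes)))


def dense_matrix_params(in_modes: Sequence[int], out_modes: Sequence[int]) -> int:
    """Parameters of the equivalent unfactorized (prod m_k) x (prod n_k) matrix."""
    return math.prod(in_modes) * math.prod(out_modes)
```

For example, a 64 x 64 projection split into modes (4, 4, 4) with inner ranks (2, 2) needs 128 TT parameters instead of 4,096 dense ones. The achievable rank in practice depends on the data, which this sketch does not address.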

Logic-Driven Semantic Communication for Resilient Multi-Agent Systems

Jan 11, 2026

Abstract:The advent of 6G networks is accelerating autonomy and intelligence in large-scale, decentralized multi-agent systems (MAS). While this evolution enables adaptive behavior, it also heightens vulnerability to stressors such as environmental changes and adversarial behavior. Existing literature on resilience in decentralized MAS largely focuses on isolated aspects, such as fault tolerance, without offering a principled unified definition of multi-agent resilience. This gap limits the ability to design systems that can continuously sense, adapt, and recover under dynamic conditions. This article proposes a formal definition of MAS resilience grounded in two complementary dimensions: epistemic resilience, wherein agents recover and sustain accurate knowledge of the environment, and action resilience, wherein agents leverage that knowledge to coordinate and sustain goals under disruptions. We formalize resilience via temporal epistemic logic and quantify it using recoverability time (how quickly desired properties are re-established after a disturbance) and durability time (how long accurate beliefs and goal-directed behavior are sustained after recovery). We design an agent architecture and develop decentralized algorithms to achieve both epistemic and action resilience. We provide formal verification guarantees, showing that our specifications are sound with respect to the metric bounds and admit finite-horizon verification, enabling design-time certification and lightweight runtime monitoring. Through a case study on distributed multi-agent decision-making under stressors, we show that our approach outperforms baseline methods. Our formal verification analysis and simulation results highlight that the proposed framework enables resilient, knowledge-driven decision-making and sustained operation, laying the groundwork for resilient decentralized MAS in next-generation communication systems.
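The two metrics in this abstract can be made concrete on a discrete-time trace. The sketch below assumes a simplified reading:

- A boolean trace records whether the desired property (for example, an accurate belief) holds at each step.
- Recoverability time counts the steps from the disturbance until the property holds again.
- Durability time counts how long the property keeps holding after that recovery.

This is not the paper's temporal epistemic logic formalization, and `recovery_and_durability` is a hypothetical helper.

```python
from typing import Optional, Sequence, Tuple


def recovery_and_durability(holds: Sequence[bool],
                            t_disturb: int) -> Tuple[Optional[int], int]:
    """Return (recoverability_time, durability_time) for a discrete trace.

    recoverability_time: steps from the disturbance until the property first
    holds again (None if it never does within the trace).
    durability_time: consecutive steps the property keeps holding from that
    recovery point, truncated at the end of the observed trace.
    """
    if not 0 <= t_disturb < len(holds):
        raise ValueError("t_disturb must index into the trace")
    t_rec = next((t for t in range(t_disturb, len(holds)) if holds[t]), None)
    if t_rec is None:
        return None, 0
    durability = 0
    for t in range(t_rec, len(holds)):
        if not holds[t]:
            break
        durability += 1
    return t_rec - t_disturb, durability
```

A finite trace can only give a lower bound on durability. This is one reason the abstract's emphasis on finite-horizon verification matters.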

Compositional Distributed Learning for Multi-View Perception: A Maximal Coding Rate Reduction Perspective

Nov 11, 2025

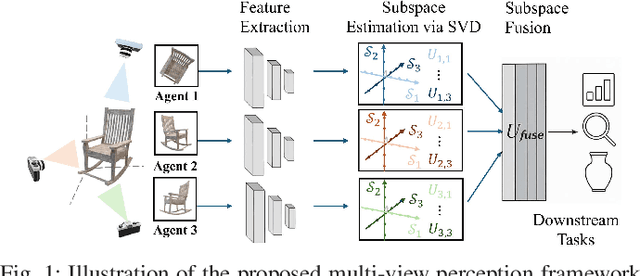

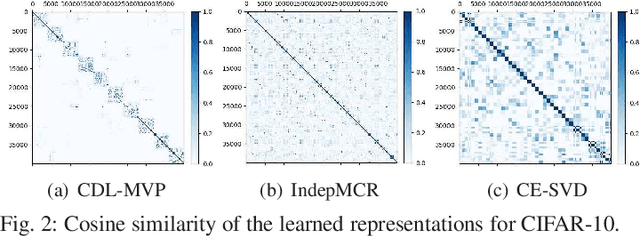

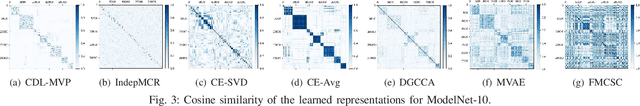

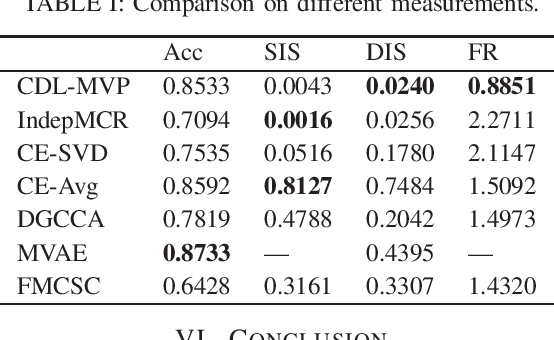

Abstract:In this letter, we formulate a compositional distributed learning framework for multi-view perception by leveraging the maximal coding rate reduction principle combined with subspace basis fusion. In the proposed algorithm, each agent periodically performs a singular value decomposition on its learned subspaces and exchanges truncated basis matrices, from which the fused subspaces are obtained. By introducing a projection matrix and minimizing the distance between the outputs and their projections, the learned representations are driven toward the fused subspaces. It is proved that the trace of the coding-rate change is bounded and that the consistency of basis fusion is guaranteed. Numerical simulations validate that the proposed algorithm achieves high classification accuracy while maintaining representation diversity, whereas baselines exhibit correlated subspaces and coupled representations.
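For reference, the maximal coding rate reduction principle, in its standard form, measures the coding rate R(Z, eps) = 1/2 * logdet(I + d / (m * eps^2) * Z Z^T) of m feature vectors in R^d. It rewards a large rate for the whole set and a small rate within each group, and the rate reduction is the difference. The pure-Python sketch below computes both quantities. It does not implement the letter's subspace basis fusion or projection step, and the helper names are hypothetical.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def _logdet_spd(mat: List[List[float]]) -> float:
    """Log-determinant of a symmetric positive-definite matrix via Cholesky."""
    n = len(mat)
    chol = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = mat[i][j] - sum(chol[i][k] * chol[j][k] for k in range(j))
            if i == j:
                if s <= 0.0:
                    raise ValueError("matrix is not positive definite")
                chol[i][i] = math.sqrt(s)
            else:
                chol[i][j] = s / chol[j][j]
    return 2.0 * sum(math.log(chol[i][i]) for i in range(n))


def coding_rate(samples: Sequence[Vector], eps: float) -> float:
    """R(Z, eps) = 1/2 * logdet(I + d / (m * eps^2) * Z Z^T) for m samples in R^d."""
    m, d = len(samples), len(samples[0])
    alpha = d / (m * eps ** 2)
    gram = [[(1.0 if i == j else 0.0) + alpha * sum(z[i] * z[j] for z in samples)
             for j in range(d)] for i in range(d)]
    return 0.5 * _logdet_spd(gram)


def rate_reduction(groups: Sequence[Sequence[Vector]], eps: float) -> float:
    """Delta R = R(all samples) - sum_j (m_j / m) * R(samples in group j)."""
    all_samples = [z for group in groups for z in group]
    m = len(all_samples)
    compressed = sum(len(group) / m * coding_rate(group, eps) for group in groups)
    return coding_rate(all_samples, eps) - compressed
```

Consider two orthogonal groups, [(1, 0), (1, 0)] and [(0, 1), (0, 1)], with eps = 1. They give a positive rate reduction of ln 2 - 1/2 ln 3. Two groups lying along the same direction give zero, which reflects the "correlated subspaces" failure mode mentioned in this abstract.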

SheafAlign: A Sheaf-theoretic Framework for Decentralized Multimodal Alignment

Oct 23, 2025

Abstract:Conventional multimodal alignment methods assume mutual redundancy across all modalities, an assumption that fails in real-world distributed scenarios. We propose SheafAlign, a sheaf-theoretic framework for decentralized multimodal alignment that replaces single-space alignment with multiple comparison spaces. This approach models pairwise modality relations through sheaf structures and leverages decentralized contrastive learning-based objectives for training. SheafAlign overcomes the limitations of prior methods by not requiring mutual redundancy among all modalities, preserving both shared and unique information. Experiments on multimodal sensing datasets show superior zero-shot generalization, cross-modal alignment, and robustness to missing modalities, with 50% lower communication cost than state-of-the-art baselines.
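The idea of replacing one shared space with per-pair comparison spaces can be illustrated with the standard cellular-sheaf disagreement energy. This energy is the sum over edges (i, j) of ||F_i x_i - F_j x_j||^2, where each restriction map F projects a modality's embedding into that edge's own comparison space. This is a generic sketch, not SheafAlign's contrastive training objective. The modality names and restriction maps used with it are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

Matrix = Sequence[Sequence[float]]


def matvec(m: Matrix, x: Sequence[float]) -> List[float]:
    """Dense matrix-vector product."""
    return [sum(a * b for a, b in zip(row, x)) for row in m]


def sheaf_disagreement(stalks: Dict[str, Sequence[float]],
                       restrictions: Dict[Tuple[str, str], Tuple[Matrix, Matrix]]) -> float:
    """Sum over edges (i, j) of ||F_i x_i - F_j x_j||^2.

    stalks maps each modality to its embedding x_i; restrictions maps each
    edge (i, j) to the pair (F_i, F_j) projecting into that edge's space.
    """
    total = 0.0
    for (i, j), (f_i, f_j) in restrictions.items():
        diff = [p - q for p, q in zip(matvec(f_i, stalks[i]), matvec(f_j, stalks[j]))]
        total += sum(d * d for d in diff)
    return total
```

As an example, take camera embedding [1, 2], lidar [1, 5] and radar [2]. Add one edge that compares only the first coordinate of camera and lidar, and another that compares camera's second coordinate with radar. The energy is zero even though camera and lidar disagree on their second coordinate, because information that an edge does not compare is left unconstrained.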

Active Inference Framework for Closed-Loop Sensing, Communication, and Control in UAV Systems

Sep 17, 2025

Abstract:Integrated sensing and communication (ISAC) is a core technology for 6G, and its application to closed-loop sensing, communication, and control (SCC) enables various services. Existing SCC solutions often treat sensing and control separately, leading to suboptimal performance and resource usage. In this work, we introduce the active inference framework (AIF) into SCC-enabled unmanned aerial vehicle (UAV) systems for joint state estimation, control, and sensing resource allocation. By formulating a unified generative model, the problem reduces to minimizing variational free energy for inference and expected free energy for action planning. Simulation results show that both control cost and sensing cost are reduced relative to baselines.
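For a discrete hidden state, the variational free energy minimized during inference is F[q] = sum_s q(s) (ln q(s) - ln p(o, s)). This equals KL(q || p(s | o)) - ln p(o), so it is minimized by the exact posterior, where it equals -ln p(o). The toy sketch below checks this numerically. The two-state prior and likelihood in the check are made-up values, not the paper's UAV generative model. The sketch does not cover the expected free energy used for action planning.

```python
import math
from typing import List, Sequence


def variational_free_energy(q: Sequence[float], prior: Sequence[float],
                            likelihood: Sequence[float]) -> float:
    """F[q] = sum_s q(s) * (ln q(s) - ln p(o, s)) with p(o, s) = p(o | s) p(s).

    likelihood[s] is p(o | s) for the observation o that was actually received.
    """
    total = 0.0
    for q_s, p_s, l_s in zip(q, prior, likelihood):
        if q_s == 0.0:
            continue  # the term 0 * ln 0 is taken as 0
        joint = l_s * p_s
        if joint == 0.0:
            return math.inf  # q puts mass on a state the model deems impossible
        total += q_s * (math.log(q_s) - math.log(joint))
    return total


def exact_posterior(prior: Sequence[float], likelihood: Sequence[float]) -> List[float]:
    """Bayes' rule: p(s | o) = p(o | s) p(s) / p(o)."""
    joint = [l_s * p_s for p_s, l_s in zip(prior, likelihood)]
    evidence = sum(joint)
    return [j / evidence for j in joint]
```

Using the prior itself as q leaves the free energy higher than using the exact posterior. The gap is the KL divergence that inference removes.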

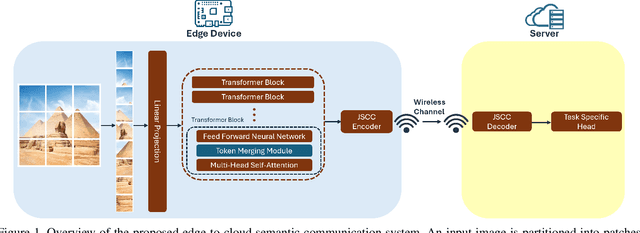

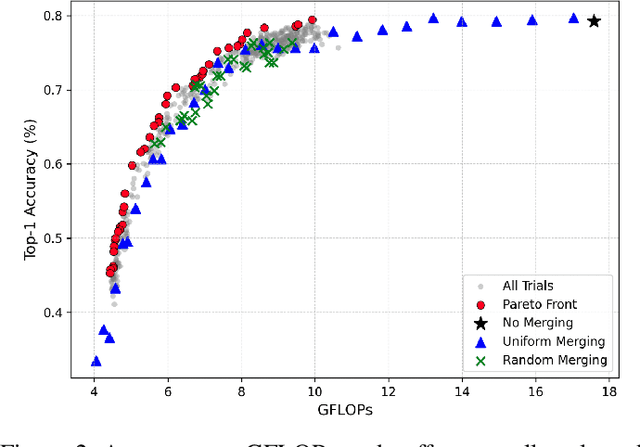

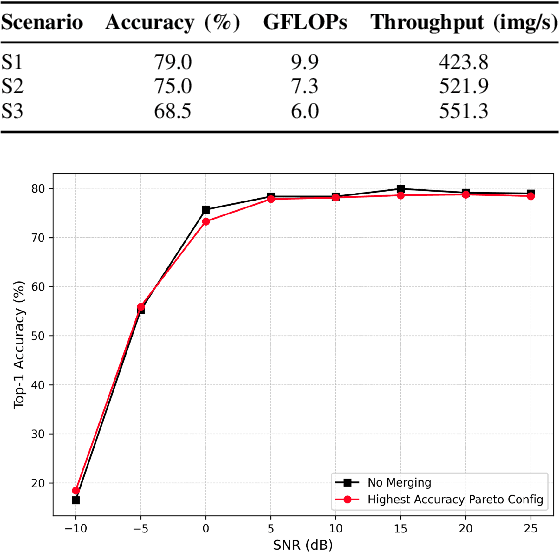

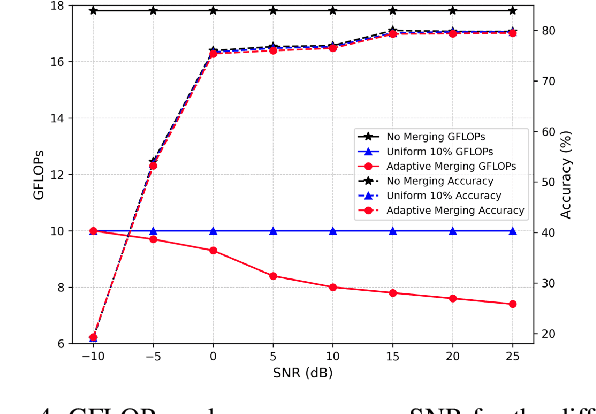

Adaptive Pareto-Optimal Token Merging for Edge Transformer Models in Semantic Communication

Sep 11, 2025

Abstract:Large-scale transformer models have emerged as a powerful tool for semantic communication systems, enabling edge devices to extract rich representations for robust inference across noisy wireless channels. However, their substantial computational demands remain a major barrier to practical deployment in resource-constrained 6G networks. In this paper, we present a training-free framework for adaptive token merging in pretrained vision transformers to jointly reduce inference time and transmission resource usage. We formulate the selection of per-layer merging proportions as a multi-objective optimization problem to balance accuracy and computational cost. We employ Gaussian process-based Bayesian optimization to construct a Pareto frontier of optimal configurations, enabling flexible runtime adaptation to dynamic application requirements and channel conditions. Extensive experiments demonstrate that our method consistently outperforms other baselines and achieves significant reductions in floating-point operations while maintaining competitive accuracy across a wide range of signal-to-noise ratio (SNR) conditions. Additional results highlight the effectiveness of adaptive policies that adjust merging aggressiveness in response to channel quality, providing a practical mechanism to trade off latency and semantic fidelity on demand. These findings establish a scalable and efficient approach for deploying transformer-based semantic communication in future edge intelligence systems.
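Suppose candidate per-layer merging configurations have already been evaluated. The Pareto frontier is then the set of configurations that no other candidate beats on both accuracy and cost. Runtime adaptation can pick the cheapest frontier point that meets the current accuracy requirement. The sketch below shows only that filtering and selection step under those assumptions. It does not implement the Gaussian process-based Bayesian optimization that proposes candidates, and the `Config` type and any numbers used with it are hypothetical.

```python
from typing import List, NamedTuple, Optional, Sequence, Tuple


class Config(NamedTuple):
    merge_ratios: Tuple[float, ...]  # hypothetical per-layer merging proportions
    accuracy: float                  # higher is better
    gflops: float                    # lower is better


def dominates(a: Config, b: Config) -> bool:
    """a is no worse than b on both objectives and strictly better on one."""
    return (a.accuracy >= b.accuracy and a.gflops <= b.gflops
            and (a.accuracy > b.accuracy or a.gflops < b.gflops))


def pareto_front(candidates: Sequence[Config]) -> List[Config]:
    """Non-dominated candidates, sorted from cheapest to most expensive."""
    front = [c for c in candidates if not any(dominates(o, c) for o in candidates)]
    return sorted(front, key=lambda c: c.gflops)


def pick_for_target(front: Sequence[Config], min_accuracy: float) -> Optional[Config]:
    """Cheapest frontier configuration meeting an accuracy target, if any."""
    feasible = [c for c in front if c.accuracy >= min_accuracy]
    return min(feasible, key=lambda c: c.gflops) if feasible else None
```

A channel-aware policy could raise `min_accuracy` when the SNR drops. This would move to a less aggressive merging configuration on the same precomputed frontier without re-running the optimization.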

Resilient-native and Intelligent NextG Systems

Jun 15, 2025

Abstract:Just like power, water and transportation systems, wireless networks are a crucial societal infrastructure. As natural and human-induced disruptions continue to grow, wireless networks must be resilient to unforeseen events, able to withstand and recover from unexpected adverse conditions, shocks, unmodeled disturbances and cascading failures. Despite its critical importance, resilience remains an elusive concept, with its mathematical foundations still underdeveloped. Unlike robustness and reliability, resilience is premised on the fact that disruptions will inevitably happen. Resilience as elasticity focuses on the ability to bounce back to favorable states, while resilience as plasticity involves agents (or networks) that can flexibly expand their states, hypotheses and courses of action by transforming through real-time adaptation and reconfiguration. Constant situational awareness and vigilance, that is, continually adapting world models and counterfactually reasoning about potential system failures and the corresponding best responses, is a core aspect of resilience. This article first defines resilience and disambiguates it from reliability and robustness, before delving into the mathematics of resilience. Finally, the article concludes by presenting nuanced metrics and discussing trade-offs tailored to the unique characteristics of network resilience.

Learning to Collaborate Over Graphs: A Selective Federated Multi-Task Learning Approach

Jun 11, 2025

Abstract:We present a novel federated multi-task learning method that leverages cross-client similarity to enable personalized learning for each client. To avoid transmitting the entire model to the parameter server, we propose a communication-efficient scheme that introduces a feature anchor, a compact vector representation that summarizes the features learned from the client's local classes. This feature anchor is shared with the server to account for local clients' distribution. In addition, the clients share the classification heads, a lightweight linear layer, and perform a graph-based regularization to enable collaboration among clients. By modeling collaboration between clients as a dynamic graph and continuously updating and refining this graph, we can account for any drift from the clients. To ensure beneficial knowledge transfer and prevent negative collaboration, we leverage a community detection-based approach that partitions this dynamic graph into homogeneous communities, maximizing the sum of task similarities, represented as the graph edges' weights, within each community. This mechanism restricts collaboration to highly similar clients within their formed communities, ensuring positive interaction and preserving personalization. Extensive experiments on two heterogeneous datasets demonstrate that our method significantly outperforms state-of-the-art baselines. Furthermore, we show that our method exhibits superior computation and communication efficiency and promotes fairness across clients.
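As a rough illustration of similarity-driven collaboration, the sketch below works in three steps:

1. Each client is summarized by a feature anchor, here assumed to be the mean of its local feature vectors.
2. Pairwise cosine similarity is computed between anchors.
3. Clients whose similarity exceeds a threshold are grouped together.

Thresholding followed by connected components is a deliberately simpler stand-in for the community detection step described in the abstract, which maximizes within-community task similarity. The helper names, client identifiers and threshold are hypothetical.

```python
import math
from typing import Dict, List, Sequence


def feature_anchor(features: Sequence[Sequence[float]]) -> List[float]:
    """Mean of a client's local feature vectors (assumed anchor definition)."""
    n = len(features)
    return [sum(column) / n for column in zip(*features)]


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity, defined as 0 when either vector is zero."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm > 0.0 else 0.0


def similarity_groups(anchors: Dict[str, Sequence[float]],
                      threshold: float) -> List[List[str]]:
    """Link clients whose anchor similarity >= threshold; return connected components."""
    names = sorted(anchors)
    parent = {name: name for name in names}

    def find(x: str) -> str:
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving keeps the trees shallow
            x = parent[x]
        return x

    for i, a in enumerate(names):
        for b in names[i + 1:]:
            if cosine(anchors[a], anchors[b]) >= threshold:
                parent[find(a)] = find(b)

    groups: Dict[str, List[str]] = {}
    for name in names:
        groups.setdefault(find(name), []).append(name)
    return sorted(groups.values())
```

Only the anchors, not full models, are needed to build this graph. This is the communication saving the abstract attributes to the feature anchor. Re-running the grouping as anchors change is one simple way to track client drift.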

Semantic Communication meets System 2 ML: How Abstraction, Compositionality and Emergent Languages Shape Intelligence

May 27, 2025

Abstract:The trajectories of 6G and AI are set for a creative collision. However, current visions for 6G remain largely incremental evolutions of 5G, while progress in AI is hampered by brittle, data-hungry models that lack robust reasoning capabilities. This paper argues for a foundational paradigm shift, moving beyond the purely technical level of communication toward systems capable of semantic understanding and effective, goal-oriented interaction. We propose a unified research vision rooted in the principles of System-2 cognition, built upon three pillars: Abstraction, enabling agents to learn meaningful world models from raw sensorimotor data; Compositionality, providing the algebraic tools to combine learned concepts and subsystems; and Emergent Communication, allowing intelligent agents to create their own adaptive and grounded languages. By integrating these principles, we lay the groundwork for truly intelligent systems that can reason, adapt, and collaborate, unifying advances in wireless communications, machine learning, and robotics under a single coherent framework.

Distributionally Robust Federated Learning with Client Drift Minimization

May 21, 2025

Abstract:Federated learning (FL) faces critical challenges, particularly in heterogeneous environments where non-independent and identically distributed data across clients can lead to unfair and inefficient model performance. In this work, we introduce DRDM, a novel algorithm that addresses these issues by combining a distributionally robust optimization (DRO) framework with dynamic regularization to mitigate client drift. DRDM frames the training as a min-max optimization problem aimed at maximizing performance for the worst-case client, thereby promoting robustness and fairness. This robust objective is optimized through an algorithm leveraging dynamic regularization and efficient local updates, which significantly reduces the required number of communication rounds. Moreover, we provide a theoretical convergence analysis for convex smooth objectives under partial participation. Extensive experiments on three benchmark datasets, covering various model architectures and data heterogeneity levels, demonstrate that DRDM significantly improves worst-case test accuracy while requiring fewer communication rounds than existing state-of-the-art baselines. Furthermore, we analyze the impact of signal-to-noise ratio (SNR) and bandwidth on the energy consumption of participating clients, demonstrating that the number of local update steps can be adaptively selected to achieve a target worst-case test accuracy with minimal total energy cost across diverse communication environments.
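A min-max objective of this kind can be written as min_w max_{lambda in simplex} sum_i lambda_i f_i(w), where lambda weights the clients and f_i is client i's loss. A common way to update lambda is an exponentiated-gradient (mirror ascent) step that shifts weight toward clients with higher loss. The sketch below assumes that choice purely for illustration, since the abstract does not specify DRDM's exact update or its dynamic regularization. The function names are hypothetical.

```python
import math
from typing import List, Sequence


def robust_objective(weights: Sequence[float], losses: Sequence[float]) -> float:
    """Weighted loss sum_i lambda_i * f_i(w) that the model parameters minimize."""
    return sum(w * l for w, l in zip(weights, losses))


def update_client_weights(weights: Sequence[float], losses: Sequence[float],
                          step: float) -> List[float]:
    """One exponentiated-gradient ascent step on the probability simplex.

    lambda_i <- lambda_i * exp(step * f_i), then renormalize to sum to 1.
    Subtracting the max loss first avoids overflow and cancels out in the
    normalization, so the result is unchanged.
    """
    max_loss = max(losses)
    scaled = [w * math.exp(step * (l - max_loss)) for w, l in zip(weights, losses)]
    total = sum(scaled)
    return [s / total for s in scaled]
```

Larger steps concentrate the weight on the worst-case client more quickly. In the limit, the objective tracks only the maximum client loss, which is the fairness-oriented behavior the min-max formulation targets.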
