Robert-Jeron Reifert

6G Resilience -- White Paper

Sep 10, 2025

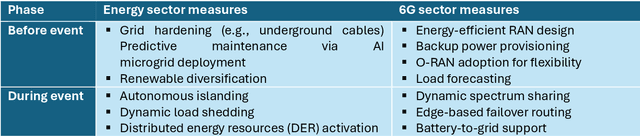

Abstract: 6G must be designed to withstand, adapt to, and evolve amid prolonged, complex disruptions. Mobile networks' shift from efficiency-first to sustainability-aware has motivated this white paper to assert that resilience is a primary design goal, alongside sustainability and efficiency, encompassing technology, architecture, and economics. We promote resilience by analysing dependencies between mobile networks and other critical systems, such as energy, transport, and emergency services, and illustrate how cascading failures spread through infrastructures. We formalise resilience using the 3R framework: reliability, robustness, resilience. Subsequently, we translate this into measurable capabilities: graceful degradation, situational awareness, rapid reconfiguration, and learning-driven improvement and recovery. Architecturally, we promote edge-native and locality-aware designs, open interfaces, and programmability to enable islanded operations, fallback modes, and multi-layer diversity (radio, compute, energy, timing). Key enablers include AI-native control loops with verifiable behaviour, zero-trust security rooted in hardware and supply-chain integrity, and networking techniques that prioritise critical traffic, time-sensitive flows, and inter-domain coordination. Resilience also has a techno-economic aspect: open platforms and high-quality complementors generate ecosystem externalities that enhance resilience while opening new markets. We identify nine business-model groups and several patterns aligned with the 3R objectives, and we outline governance and standardisation. This white paper serves as an initial step and catalyst for 6G resilience. It aims to inspire researchers, professionals, government officials, and the public, providing them with the essential components to understand and shape the development of 6G resilience.

Accelerated Recovery with RIS: Designing Wireless Resilience in Mission-Critical Environments

Apr 15, 2025

Abstract: As 6G and beyond redefine connectivity, wireless networks become the foundation of critical operations, making resilience more essential than ever. With this shift, wireless systems can not only take on vital services previously handled by wired infrastructures but also enable novel applications that would not be possible with wired systems. As a result, there is a pressing demand for strategies that can adapt to dynamic channel conditions, interference, and unforeseen disruptions, ensuring seamless and reliable performance in an increasingly complex environment. Despite considerable research, existing resilience assessments lack comprehensive key performance indicators (KPIs), especially those quantifying adaptability, which are vital for identifying a system's capacity to rapidly adapt and reallocate resources. In this work, we bridge this gap by proposing a novel framework that explicitly quantifies the adaptation performance by augmenting the gradient of the system's rate function. To further enhance network resilience, we integrate Reconfigurable Intelligent Surfaces (RISs) into our framework due to their capability to dynamically reshape the propagation environment while providing alternative channel paths. Numerical results show that gradient augmentation enhances resilience by improving adaptability under adverse conditions while proactively preparing for future disruptions.

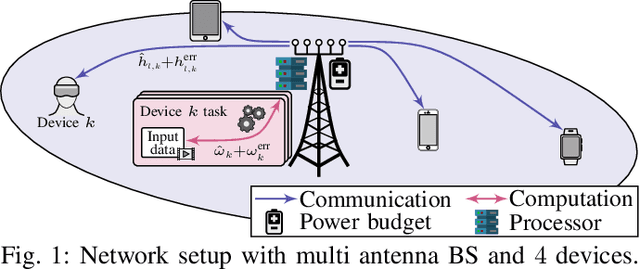

Robust Communication and Computation using Deep Learning via Joint Uncertainty Injection

Jun 05, 2024

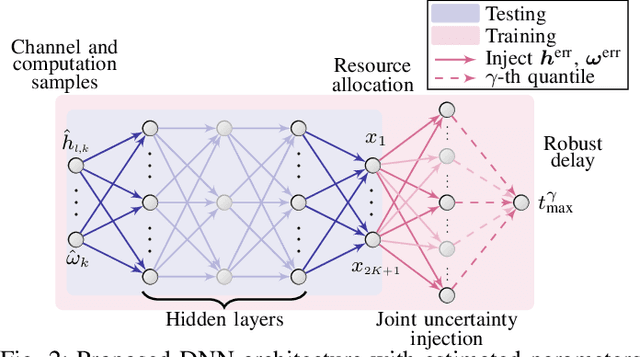

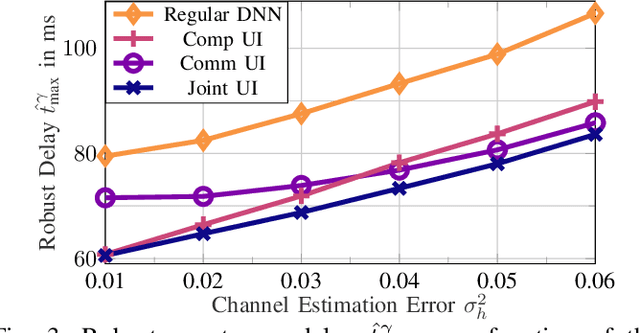

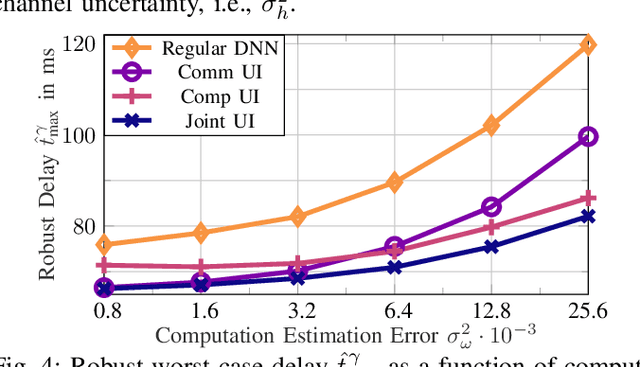

Abstract: The convergence of communication and computation and the integration of machine learning and artificial intelligence stand as key empowering pillars for the sixth generation of communication systems (6G). This paper considers a network of one base station serving a number of devices simultaneously using spatial multiplexing. The paper then presents a deep learning-based approach to simultaneously manage the transmit and computing powers, alongside computation allocation, amidst uncertainties in both channel and computing state information. More specifically, the paper proposes a robust solution that minimizes the worst-case delay across the served devices subject to computation and power constraints. The paper uses a deep neural network (DNN)-based solution that maps estimated channels and computation requirements to optimized resource allocations. During training, uncertainty samples are injected after the DNN output to jointly account for both communication and computation estimation errors. The DNN is then trained via backpropagation using the robust utility, thus implicitly learning the uncertainty distributions. Our results validate the enhanced robust delay performance of the joint uncertainty injection versus the classical DNN approach, especially in high channel and computational uncertainty regimes.

Extended Reality via Cooperative NOMA in Hybrid Cloud/Mobile-Edge Computing Networks

Oct 09, 2023

Abstract: Extended reality (XR) applications often perform resource-intensive tasks that are computed remotely, a process in which latency is critical. To this end, this paper shows that by leveraging the power of the central cloud (CC), the close proximity of edge computers (ECs), and the flexibility of uncrewed aerial vehicles (UAVs), a UAV-aided hybrid cloud/mobile-edge computing architecture promises to handle the intricate requirements of future XR applications. In this context, this paper distinguishes between two types of XR devices, namely, strong and weak devices. The paper then introduces a cooperative non-orthogonal multiple access (Co-NOMA) scheme that pairs strong and weak devices so as to improve the XR devices' quality of user experience by intelligently selecting either the direct or the relay links toward the weak XR devices. A sum logarithmic-rate maximization problem is thus formulated to jointly determine the computation and communication resources and the link-selection strategy, as a means to strike a trade-off between system throughput and fairness. Subject to realistic network constraints, e.g., power consumption and delay, the optimization problem is then solved iteratively via discrete relaxations, successive convex approximation, and fractional programming, an approach which can be implemented in a distributed fashion across the network. Simulation results validate the proposed algorithm's performance in terms of log-rate maximization, delay sensitivity, scalability, and runtime. The practical distributed Co-NOMA implementation in particular is shown to offer appreciable benefits over traditional multiple access and NOMA methods, highlighting its applicability in decentralized XR systems.
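
The throughput/fairness trade-off behind a sum logarithmic-rate objective can be illustrated with a minimal sketch. The two rate allocations below are hypothetical numbers in arbitrary units, not results from the paper, and the helper names `sum_rate` and `sum_log_rate` are introduced here for illustration only.

```python
import math

def sum_rate(rates):
    """Plain throughput objective: total rate over all devices."""
    return sum(rates)

def sum_log_rate(rates):
    """Sum of log-rates: penalizes allocations that starve any one device."""
    return sum(math.log(r) for r in rates)

# Hypothetical allocations for a strong/weak device pair (arbitrary units).
unbalanced = [9.0, 1.0]   # strong device gets almost everything
balanced = [5.0, 5.0]     # same total, shared evenly

# Both allocations have the same total throughput ...
print(sum_rate(unbalanced), sum_rate(balanced))
# ... but the log objective prefers the balanced one (about 2.20 vs. 3.22).
print(sum_log_rate(unbalanced), sum_log_rate(balanced))
```

Because the logarithm is concave, shifting rate from a well-served device to a poorly served one raises the objective even when the total stays fixed, which is why this kind of objective is commonly used to balance throughput against fairness.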

Optimizing the Age of Information in Mixed-Critical Wireless Communication Networks

Nov 10, 2022

Abstract: Beyond-fifth-generation (B5G) wireless communication networks are applied in many use cases, such as industrial control systems, smart public transport, and power grids. These applications require innovative techniques for timely transmission and increased wireless network capacity. Hence, this paper proposes optimizing the data freshness, measured by the age of information (AoI), in dense internet of things (IoT) sensor-actuator networks. Given different priorities of data streams, i.e., different sensitivities to outdated information, mixed criticality is introduced by analyzing different functions of the age; specifically, we consider linear and exponential aging functions. An intricate non-convex optimization problem managing the physical transmission time and packet outage probability is derived. This problem is tackled using stochastic reformulations, successive convex approximations, and fractional programming, resulting in an efficient iterative algorithm for AoI optimization. Simulation results validate the proposed scheme's performance in terms of AoI, mixed criticality, and scalability. The proposed non-orthogonal transmission is shown to outperform an orthogonal access scheme in various deployment cases. Results emphasize the potential gains for dense B5G-empowered IoT networks in minimizing the AoI.
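
As a rough illustration of how linear and exponential aging functions treat stale data differently, consider the sketch below. The abstract does not give the exact function definitions, so the forms `weight * age` and `exp(rate * age) - 1`, the parameter values, and the slotted delivery pattern are all assumptions made for this example.

```python
import math

def linear_age(age, weight=1.0):
    """Assumed linear aging: penalty grows proportionally with age."""
    return weight * age

def exponential_age(age, rate=0.5):
    """Assumed exponential aging: penalty grows sharply for stale data."""
    return math.exp(rate * age) - 1.0

def age_trace(delivery_slots, horizon):
    """Age per slot: resets to 0 on delivery, otherwise grows by one."""
    deliveries = set(delivery_slots)
    age, trace = 0, []
    for t in range(horizon):
        age = 0 if t in deliveries else age + 1
        trace.append(age)
    return trace

# Hypothetical stream with an update delivered every fourth slot.
trace = age_trace(delivery_slots=[0, 4, 8], horizon=12)
mean_linear = sum(linear_age(a) for a in trace) / len(trace)
mean_exponential = sum(exponential_age(a) for a in trace) / len(trace)
print(trace)
print(mean_linear, mean_exponential)
```

Under these assumed forms, a high-criticality stream modeled with the exponential function is penalized far more heavily for long gaps between updates than a low-criticality stream modeled with the linear function, which is one way to express different sensitivities to outdated information.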
