Hayssam Dahrouj

Sum Rate and Worst Case SINR Optimization in Multi HAPS Ground Integrated Networks

Nov 09, 2025

Abstract: Balancing throughput and fairness promises to be a key enabler for achieving large-scale digital inclusion in future vertical heterogeneous networks (VHetNets). In an attempt to address the global digital divide problem, this paper explores a multi-high-altitude platform system (HAPS)-ground integrated network, in which multiple HAPSs collaborate with ground base stations (BSs) to enhance the quality of service of ground users and thereby advance digital equity. To this end, this paper considers maximizing both the network-wide weighted sum rate function and the worst-case signal-to-interference-plus-noise ratio (SINR) function subject to the same system-level constraints. More specifically, the paper tackles the two different optimization problems so as to balance throughput and fairness, by accounting for the individual HAPS payload connectivity constraints, the distinct HAPS and BS power limitations, and per-user rate requirements. The paper solves the considered problems by adopting a generalized assignment problem (GAP)-based methodology to determine the user association variables, jointly with successive convex approximation (SCA)-based iterative algorithms for optimizing the corresponding beamforming vectors. One of the main advantages of the proposed algorithms is their amenability to distributed implementation across the multiple HAPSs and BSs. The simulation results validate the performance of the presented algorithms, demonstrating the capability of multi-HAPS networks to boost overall network digital inclusion toward democratizing future digital services.
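To make the two objectives named in this abstract concrete, the following is a minimal sketch of how the weighted sum rate and the worst-case SINR could be evaluated for a fixed user association and fixed beamforming vectors. It is not the paper's algorithm (no GAP or SCA step is shown). The function names, the data layout (`channels[k][t]`, `beams[k]`, `assoc[k]`), and the assumption that each user is served by exactly one transmitter are illustrative assumptions.

```python
import math

def inner(h, w):
    """Hermitian inner product h^H w for complex vectors stored as lists."""
    return sum(hc.conjugate() * wc for hc, wc in zip(h, w))

def sinr_per_user(channels, beams, assoc, noise_power):
    """Return the SINR of every user.

    channels[k][t]: channel vector from transmitter t (HAPS or BS) to user k.
    beams[k]: beamforming vector that transmitter assoc[k] uses for user k.
    assoc[k]: index of the single transmitter serving user k (assumption).
    """
    num_users = len(beams)
    sinrs = []
    for k in range(num_users):
        signal = abs(inner(channels[k][assoc[k]], beams[k])) ** 2
        # Every other user's beam reaches user k through the channel
        # from that other user's serving transmitter.
        interference = sum(
            abs(inner(channels[k][assoc[j]], beams[j])) ** 2
            for j in range(num_users) if j != k
        )
        sinrs.append(signal / (interference + noise_power))
    return sinrs

def weighted_sum_rate(sinrs, weights):
    """Throughput-oriented objective: sum of weighted rates in bit/s/Hz."""
    return sum(a * math.log2(1.0 + s) for a, s in zip(weights, sinrs))

def worst_case_sinr(sinrs):
    """Fairness-oriented objective: the smallest SINR among all users."""
    return min(sinrs)
```

The first objective rewards total throughput, while the second is driven entirely by the worst-served user, which is why optimizing each one leads to a different operating point.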

An Overview of the Prospects and Challenges of Using Artificial Intelligence for Energy Management Systems in Microgrids

May 06, 2025

Abstract: Microgrids have emerged as a pivotal solution in the quest for a sustainable and energy-efficient future. While microgrids offer numerous advantages, they are also prone to issues related to reliably forecasting renewable energy demand and production, protecting against cyberattacks, controlling operational costs, optimizing power flow, and regulating the performance of energy management systems (EMS). Tackling these energy management challenges is essential to facilitate microgrid applications and seamlessly incorporate renewable energy resources. Artificial intelligence (AI) has recently demonstrated immense potential for optimizing energy management in microgrids, providing efficient and reliable solutions. This paper highlights the combined benefits of enabling AI-based methodologies in the energy management systems of microgrids by examining the applicability and efficiency of AI-based EMS in achieving specific technical and economic objectives. The paper also points out several future research directions that promise to spearhead AI-driven EMS, namely the development of self-healing microgrids, integration with blockchain technology, use of the Internet of Things (IoT), and addressing interpretability, data privacy, scalability, and the prospects of generative AI in the context of future AI-based EMS.

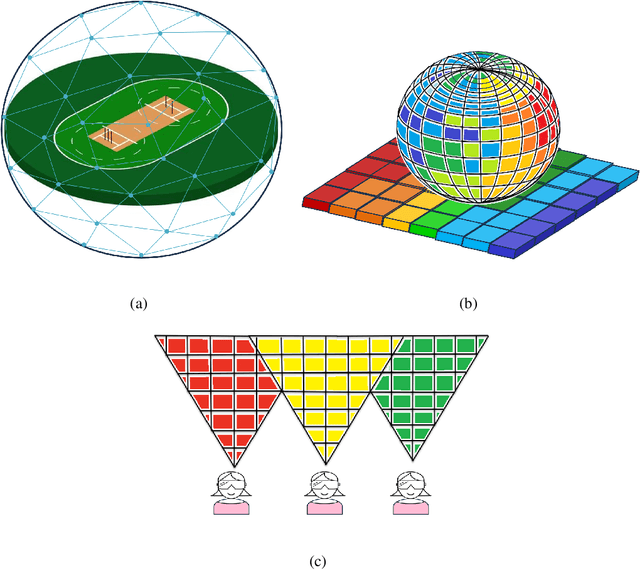

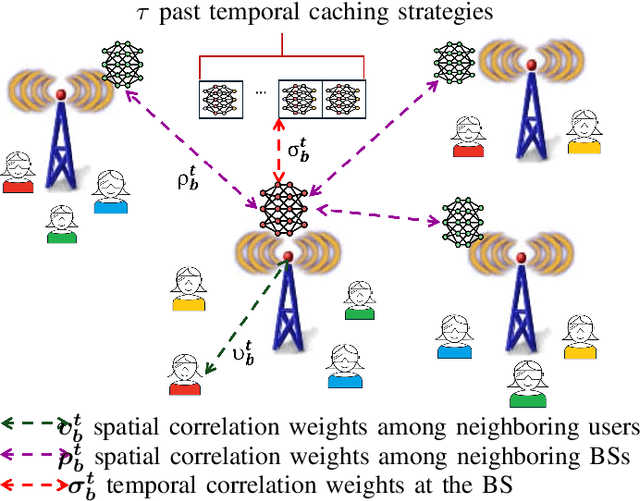

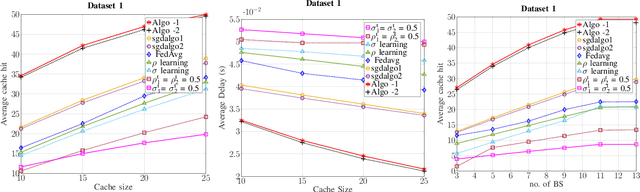

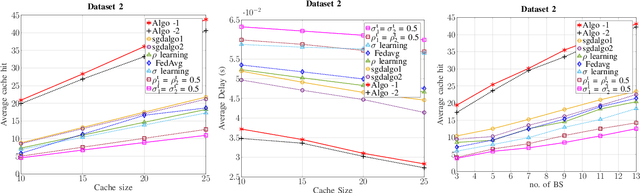

Personalized Federated Learning for Cellular VR: Online Learning and Dynamic Caching

Jan 20, 2025

Abstract: Delivering an immersive experience to virtual reality (VR) users through wireless connectivity offers the freedom to engage from anywhere at any time. Nevertheless, it is challenging to ensure seamless wireless connectivity that delivers real-time and high-quality videos to the VR users. This paper proposes a field-of-view (FoV)-aware caching scheme for mobile edge computing (MEC)-enabled wireless VR networks. In particular, the FoV of each VR user is cached/prefetched at the base stations (BSs) based on caching strategies tailored to each BS. Specifically, decentralized and personalized federated learning (DP-FL)-based caching strategies with guarantees are presented. Considering VR systems composed of multiple VR devices and BSs, a DP-FL caching algorithm is implemented at each BS to personalize content delivery for VR users. The utilized DP-FL algorithm guarantees a probably approximately correct (PAC) bound on the conditional average cache hit. Further, to reduce the cost of communicating gradients, one-bit quantization of the stochastic gradient descent (OBSGD) is proposed, and a convergence guarantee of $\mathcal{O}(1/\sqrt{T})$ is obtained for the proposed algorithm, where $T$ is the number of iterations. Additionally, to better account for the wireless channel dynamics, the FoVs are grouped into multicast or unicast groups based on the number of requesting VR users. The performance of the proposed DP-FL algorithm is validated on a realistic VR head-tracking dataset, and the proposed algorithm is shown to outperform baseline algorithms in terms of average delay and cache hit.
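The idea of one-bit gradient quantization mentioned in this abstract can be illustrated with a generic sign-based scheme: each participant sends only the sign of every gradient component, and the receiver averages the signs before taking a descent step. This is a minimal sketch under that assumption, not the paper's OBSGD algorithm, whose exact quantizer and aggregation rule are not given here. All function names are hypothetical.

```python
def one_bit_quantize(grad):
    """Keep only the sign of each gradient component (one bit per entry).
    Zero is mapped to +1 so that every entry fits in a single bit."""
    return [1 if g >= 0 else -1 for g in grad]

def aggregate_signs(sign_vectors):
    """Average the one-bit vectors received from all participants."""
    n = len(sign_vectors)
    return [sum(column) / n for column in zip(*sign_vectors)]

def sgd_step(params, direction, learning_rate):
    """Plain descent step along the aggregated direction."""
    return [p - learning_rate * d for p, d in zip(params, direction)]

# Usage: two participants, two model parameters.
local_grads = [[0.3, -2.0], [0.1, 4.0]]
signs = [one_bit_quantize(g) for g in local_grads]
direction = aggregate_signs(signs)
new_params = sgd_step([1.0, 1.0], direction, learning_rate=0.5)
```

Each participant here transmits one bit per parameter instead of a full floating-point value, which is the communication saving the abstract refers to.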

UAV-assisted Unbiased Hierarchical Federated Learning: Performance and Convergence Analysis

Jul 05, 2024

Abstract: The development of the sixth generation (6G) of wireless networks is bound to streamline the transition of computation and learning towards the edge of the network. Hierarchical federated learning (HFL) becomes, therefore, a key paradigm to distribute learning across edge devices to reach global intelligence. In HFL, each edge device trains a local model using its respective data and transmits the updated model parameters to an edge server for local aggregation. The edge server then transmits the locally aggregated parameters to a central server for global model aggregation. The unreliability of communication channels at the edge and backhaul links, however, remains a bottleneck in assessing the true benefit of HFL-empowered systems. To this end, this paper proposes an unbiased HFL algorithm for unmanned aerial vehicle (UAV)-assisted wireless networks that counteracts the impact of unreliable channels by adjusting the update weights during local and global aggregations at UAVs and terrestrial base stations (BSs), respectively. To best characterize the unreliability of the channels involved in HFL, we adopt tools from stochastic geometry to determine the success probabilities of the local and global model parameter transmissions. Accounting for such metrics in the proposed HFL algorithm aims at removing the bias towards devices with better channel conditions in the context of the considered UAV-assisted network. The paper further examines the theoretical convergence guarantee of the proposed unbiased UAV-assisted HFL algorithm under adverse channel conditions. An additional benefit of the developed approach is that it allows for optimizing and designing the system parameters, e.g., the number of UAVs and their corresponding heights. The paper's results highlight the effectiveness of the proposed unbiased HFL scheme as compared to conventional FL and HFL algorithms.
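One common way to remove the bias toward devices with better channels is inverse-probability weighting: each received update is scaled by the reciprocal of its transmission success probability, so the aggregate equals the plain average in expectation. The sketch below illustrates that general principle for a single aggregation level. It assumes the success probabilities are known and positive, and it is not claimed to be the paper's exact weighting rule. The function name and argument layout are hypothetical.

```python
def unbiased_aggregate(updates, received, success_prob):
    """Aggregate model updates over unreliable links.

    updates[i]: update vector of device i.
    received[i]: True if device i's transmission succeeded this round.
    success_prob[i]: probability that device i's transmission succeeds
        (assumed known and strictly positive).
    Scaling each received update by 1 / success_prob[i] makes the expected
    aggregate equal to the average over all devices.
    """
    n = len(updates)
    dim = len(updates[0])
    aggregate = [0.0] * dim
    for update, ok, p in zip(updates, received, success_prob):
        if not ok:
            continue  # a lost update contributes nothing this round
        for d in range(dim):
            aggregate[d] += update[d] / (p * n)
    return aggregate

# Usage: device 0 has a weak link (success probability 0.5).
updates = [[1.0], [3.0]]
probs = [0.5, 1.0]
both_received = unbiased_aggregate(updates, [True, True], probs)
weak_lost = unbiased_aggregate(updates, [False, True], probs)
```

Averaging the two possible outcomes with their probabilities (0.5 each in this usage example) recovers the true mean of the two updates, which is the sense in which the weighting is unbiased.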

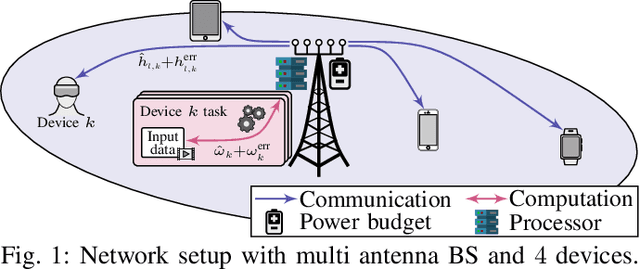

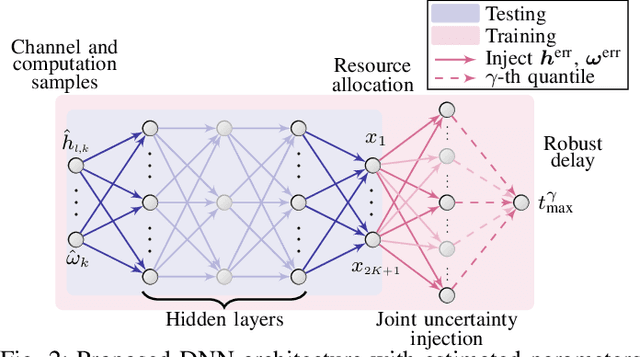

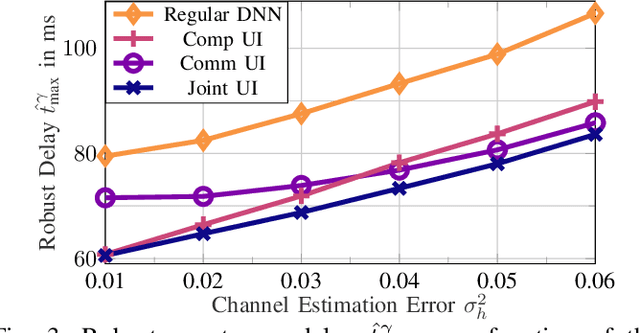

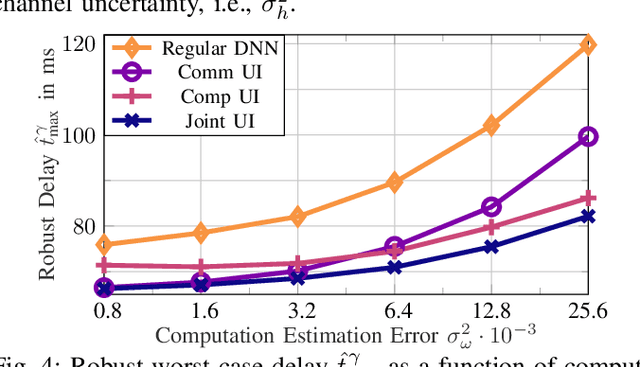

Robust Communication and Computation using Deep Learning via Joint Uncertainty Injection

Jun 05, 2024

Abstract: The convergence of communication and computation, along with the integration of machine learning and artificial intelligence, stands as a key empowering pillar for the sixth generation of communication systems (6G). This paper considers a network of one base station serving a number of devices simultaneously using spatial multiplexing. The paper then presents a deep learning-based approach to simultaneously manage the transmit and computing powers, alongside computation allocation, amidst uncertainties in both channel and computing state information. More specifically, the paper proposes a robust solution that minimizes the worst-case delay across the served devices subject to computation and power constraints. The paper uses a deep neural network (DNN)-based solution that maps estimated channels and computation requirements to optimized resource allocations. During training, uncertainty samples are injected after the DNN output to jointly account for both communication and computation estimation errors. The DNN is then trained via backpropagation using the robust utility, thus implicitly learning the uncertainty distributions. Our results validate the enhanced robust delay performance of the joint uncertainty injection versus the classical DNN approach, especially in high channel and computational uncertainty regimes.

Manipulating Predictions over Discrete Inputs in Machine Teaching

Jan 31, 2024

Abstract: Machine teaching often involves the creation of an optimal (typically minimal) dataset to help a model (referred to as the `student') achieve specific goals given by a teacher. While abundant in the continuous domain, studies on the effectiveness of machine teaching in the discrete domain are relatively limited. This paper focuses on machine teaching in the discrete domain, specifically on manipulating student models' predictions based on the goals of teachers by efficiently changing the training data. We formulate this task as a combinatorial optimization problem and solve it by proposing an iterative searching algorithm. Our algorithm demonstrates significant numerical merit in scenarios where a teacher attempts to correct erroneous predictions to improve the student's model, or to maliciously manipulate the model into misclassifying specific samples as a target class aligned with the teacher's personal gain. Experimental results show that our proposed algorithm achieves superior performance in effectively and efficiently manipulating the predictions of the model, surpassing conventional baselines.

Extended Reality via Cooperative NOMA in Hybrid Cloud/Mobile-Edge Computing Networks

Oct 09, 2023

Abstract: Extended reality (XR) applications often perform resource-intensive tasks, which are computed remotely, a process that makes latency a critical concern. To this end, this paper shows that through leveraging the power of the central cloud (CC), the close proximity of edge computers (ECs), and the flexibility of uncrewed aerial vehicles (UAVs), a UAV-aided hybrid cloud/mobile-edge computing architecture promises to handle the intricate requirements of future XR applications. In this context, this paper distinguishes between two types of XR devices, namely, strong and weak devices. The paper then introduces a cooperative non-orthogonal multiple access (Co-NOMA) scheme, pairing strong and weak devices, so as to aid the XR devices' quality of user experience by intelligently selecting either the direct or the relay links toward the weak XR devices. A sum logarithmic-rate maximization problem is, thus, formulated so as to jointly determine the computation and communication resources and the link-selection strategy, as a means to strike a trade-off between system throughput and fairness. Subject to realistic network constraints, e.g., power consumption and delay, the optimization problem is then solved iteratively via discrete relaxations, successive convex approximation, and fractional programming, an approach which can be implemented in a distributed fashion across the network. Simulation results validate the proposed algorithm's performance in terms of log-rate maximization, delay sensitivity, scalability, and runtime. The practical distributed Co-NOMA implementation is shown to offer appreciable benefits over traditional multiple access and NOMA methods, highlighting its applicability in decentralized XR systems.

Characterization of the Global Bias Problem in Aerial Federated Learning

Dec 29, 2022

Abstract: The mobility of unmanned aerial vehicles (UAVs) enables flexible and customized federated learning (FL) at the network edge. However, the underlying uncertainties in the aerial-terrestrial wireless channel may lead to a biased FL model. In particular, the distribution of the global model and the aggregation of the local updates within the FL learning rounds at the UAVs are governed by the reliability of the wireless channel. This creates an undesirable bias towards the training data of ground devices with better channel conditions, and away from the data of devices with worse channel conditions. This paper characterizes the global bias problem of aerial FL in large-scale UAV networks. To this end, the paper proposes a channel-aware distribution and aggregation scheme to enforce equal contribution from all devices in the FL training as a means to resolve the global bias problem. We demonstrate the convergence of the proposed method by experimenting with the MNIST dataset and show its superiority compared to existing methods. The obtained results enable system parameter tuning to relieve the impact of the aerial channel deficiency on the FL convergence rate.

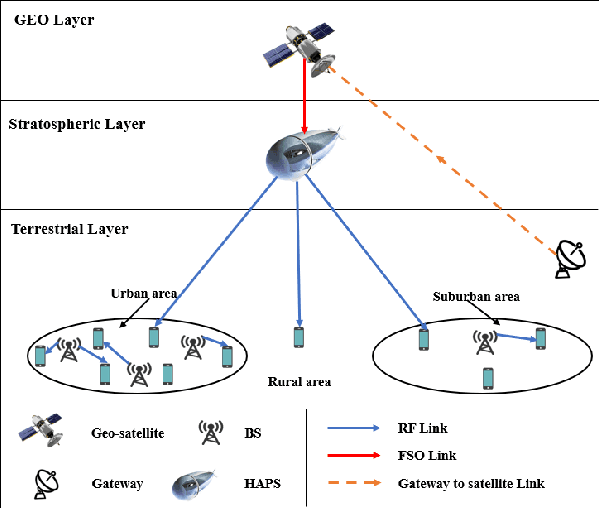

Equitable 6G Access Service via Cloud-Enabled HAPS for Optimizing Hybrid Air-Ground Networks

Dec 05, 2022

Abstract: The evolution of wireless communication services coincides with significant growth in data traffic, thereby imposing stringent requirements on terrestrial networks. This work proposes a novel connectivity solution that integrates aerial and terrestrial communications with a cloud-enabled high-altitude platform station (HAPS) to promote an equitable connectivity landscape. The paper considers a cloud-enabled HAPS connected to both terrestrial base stations and hot-air balloons via a data-sharing fronthauling strategy. The paper then assumes that both the terrestrial base stations and the hot-air balloons are grouped into disjoint clusters to serve the aerial and terrestrial users in a coordinated fashion. The work then focuses on finding the user-to-transmitter scheduling and the associated beamforming policies in the downlink direction of cloud-enabled HAPS systems by maximizing two different objectives, namely, the sum-rate and the sum-of-log of the long-term average rate, both subject to limited transmit power and finite fronthaul capacity. The paper proposes solving the two non-convex discrete and continuous optimization problems using numerical iterative optimization algorithms. The proposed algorithms rely on well-chosen convexification and approximation steps, namely, fractional programming and sparse beamforming via re-weighted $\ell_0$-norm approximation. The numerical results outline the gain achieved through equitable access service in crowded and unserved areas, and showcase the numerical benefits stemming from the proposed cloud-enabled HAPS coordination of hot-air balloons and terrestrial base stations for democratizing connectivity and empowering the digital inclusion framework.

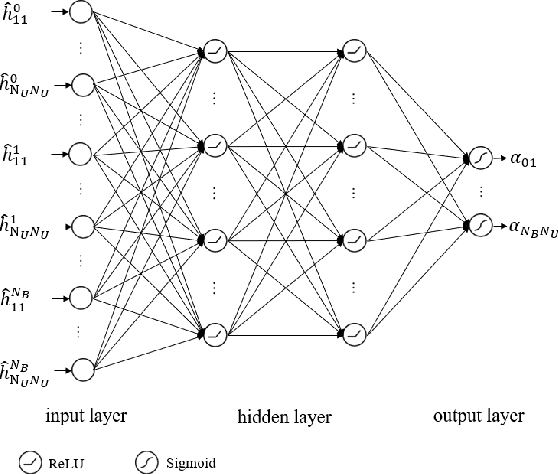

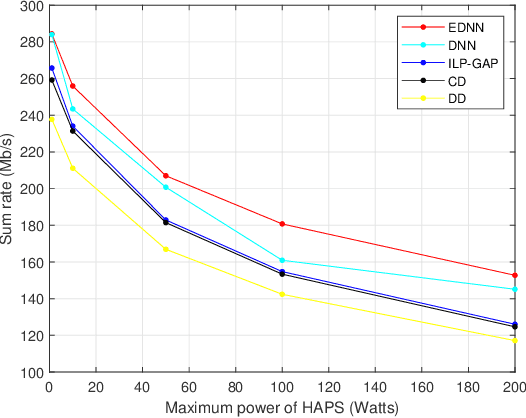

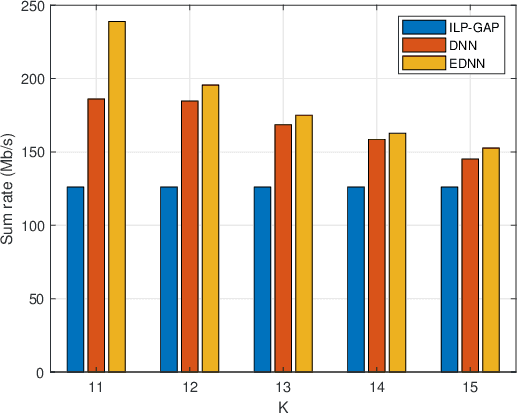

Machine Learning-Based User Scheduling in Integrated Satellite-HAPS-Ground Networks

Jun 04, 2022

Abstract: Integrated space-air-ground networks promise to offer a valuable solution space for empowering the sixth generation of communication networks (6G), particularly in the context of connecting the unconnected and ultraconnecting the connected. Such a drive toward digital inclusion makes resource management problems, especially those accounting for load-balancing considerations, of particular interest. The conventional model-based optimization methods, however, often fail to meet the real-time processing and quality-of-service needs, due to the high heterogeneity of the space-air-ground networks and the typical complexity of the classical algorithms. Given the promise of artificial intelligence in automating wireless network design, this paper focuses on showcasing the prospects of machine learning in the context of user scheduling in integrated space-air-ground communications. The paper first overviews the most relevant state of the art in the context of machine learning applications to resource allocation problems, with dedicated attention to space-air-ground networks. The paper then proposes, and shows the benefit of, one specific application that uses an ensemble of deep neural networks for optimizing the user scheduling policies in integrated space-high altitude platform station (HAPS)-ground networks. Finally, the paper sheds light on the challenges and open issues that promise to spur the integration of machine learning in space-air-ground networks, namely, online HAPS power adaptation, learning-based channel sensing, data-driven multi-HAPS resource management, and intelligent flying taxi-empowered systems.
