Mahdi Boloursaz Mashhadi

Wireless TokenCom: RL-Based Tokenizer Agreement for Multi-User Wireless Token Communications

Feb 12, 2026

Abstract:Token Communications (TokenCom) has recently emerged as an effective new paradigm, where tokens are the unified units of multimodal communications and computations, enabling efficient digital semantic- and goal-oriented communications in future wireless networks. To establish a shared semantic latent space, the transmitters/receivers in TokenCom need to agree on an identical tokenizer model and codebook. To this end, an initial Tokenizer Agreement (TA) process is carried out in each communication episode, where the transmitter/receiver cooperate to choose from a set of pre-trained tokenizer models/codebooks available to them both for efficient TokenCom. In this correspondence, we investigate TA in a multi-user downlink wireless TokenCom scenario, where the base station equipped with multiple antennas transmits video token streams to multiple users. We formulate the corresponding mixed-integer non-convex problem, and propose a hybrid reinforcement learning (RL) framework that integrates a deep Q-network (DQN) for joint tokenizer agreement and sub-channel assignment, with a deep deterministic policy gradient (DDPG) for beamforming. Simulation results show that the proposed framework outperforms baseline methods in terms of semantic quality and resource efficiency, while reducing the freezing events in video transmission by 68% compared to the conventional H.265-based scheme.

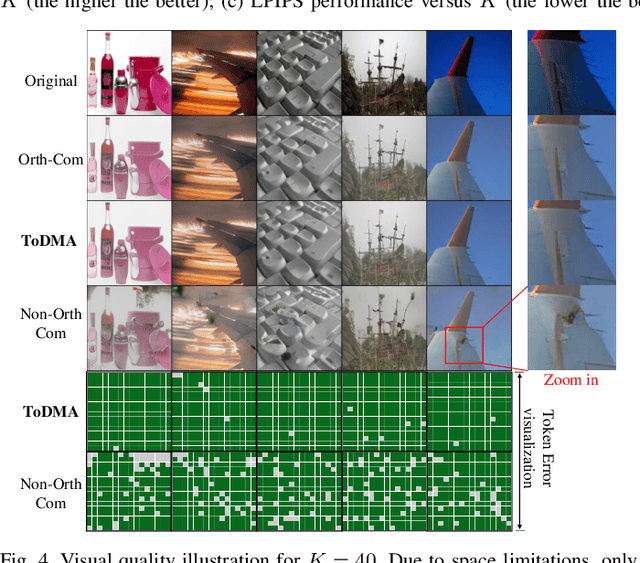

ToDMA: Large Model-Driven Token-Domain Multiple Access for Semantic Communications

May 16, 2025

Abstract:Token communications (TokCom) is an emerging generative semantic communication concept that reduces transmission rates by using context and multimodal large language model (MLLM)-based token processing, with tokens serving as universal semantic units across modalities. In this paper, we propose a semantic multiple access scheme in the token domain, referred to as token domain multiple access (ToDMA), where a large number of devices share a token codebook and a modulation codebook for source and channel coding, respectively. Specifically, each transmitter first tokenizes its source signal and modulates each token to a codeword. At the receiver, compressed sensing is employed first to detect active tokens and the corresponding channel state information (CSI) from the superposed signals. Then, the source token sequences are reconstructed by clustering the token-associated CSI across multiple time slots. In case of token collisions, some active tokens cannot be assigned and some positions in the reconstructed token sequences are empty. We propose to use pre-trained MLLMs to leverage the context, predict masked tokens, and thus mitigate token collisions. Simulation results demonstrate the effectiveness of the proposed ToDMA framework for both text and image transmission tasks, achieving significantly lower latency compared to context-unaware orthogonal communication schemes, while also delivering superior distortion and perceptual quality compared to state-of-the-art context-unaware non-orthogonal communication methods.

Token Communications: A Unified Framework for Cross-modal Context-aware Semantic Communications

Feb 17, 2025

Abstract:In this paper, we introduce token communications (TokCom), a unified framework to leverage cross-modal context information in generative semantic communications (GenSC). TokCom is a new paradigm, motivated by the recent success of generative foundation models and multimodal large language models (GFM/MLLMs), where the communication units are tokens, enabling efficient transformer-based token processing at the transmitter and receiver. In this paper, we introduce the potential opportunities and challenges of leveraging context in GenSC, explore how to integrate GFM/MLLMs-based token processing into semantic communication systems to leverage cross-modal context effectively, and present the key principles for efficient TokCom at various layers in future wireless networks. We demonstrate the corresponding TokCom benefits in a GenSC setup for images, leveraging cross-modal context information, which increases the bandwidth efficiency by 70.8% with negligible loss of semantic/perceptual quality. Finally, the potential research directions are identified to facilitate adoption of TokCom in future wireless networks.

Token-Domain Multiple Access: Exploiting Semantic Orthogonality for Collision Mitigation

Feb 10, 2025

Abstract:Token communications is an emerging generative semantic communication concept that reduces transmission rates by using context and transformer-based token processing, with tokens serving as universal semantic units. In this paper, we propose a semantic multiple access scheme in the token domain, referred to as ToDMA, where a large number of devices share a tokenizer and a modulation codebook for source and channel coding, respectively. Specifically, the source signal is tokenized into sequences, with each token modulated into a codeword. Codewords from multiple devices are transmitted simultaneously, resulting in overlap at the receiver. The receiver detects the transmitted tokens, assigns them to their respective sources, and mitigates token collisions by leveraging context and semantic orthogonality across the devices' messages. Simulations demonstrate that the proposed ToDMA framework outperforms context-unaware orthogonal and non-orthogonal communication methods in image transmission tasks, achieving lower latency and better image quality.

Single Antenna Tracking and Localization of RIS-enabled Vehicular Users

Nov 23, 2024

Abstract:Reconfigurable Intelligent Surfaces (RISs) are envisioned to be employed in next generation wireless networks to enhance the communication and radio localization services. In this paper, we propose novel localization and tracking algorithms exploiting reflections through RISs at multiple receivers. We utilize a single antenna transmitter (Tx) and multiple single antenna receivers (Rxs) to estimate the position and the velocity of users (e.g. vehicles) equipped with RISs. Then, we design the RIS phase shifts to separate the signals from different users. The proposed algorithms exploit the geometry information of the signal at the RISs to localize and track the users. We also conduct a comprehensive analysis of the Cramér-Rao lower bound (CRLB) of the localization system. Compared to the time of arrival (ToA)-based localization approach, the proposed method reduces the localization error by a factor of up to three. Also, the simulation results show the accuracy of the proposed tracking approach.

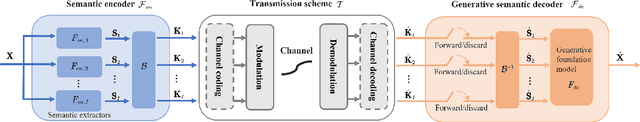

Generative Semantic Communications with Foundation Models: Perception-Error Analysis and Semantic-Aware Power Allocation

Nov 07, 2024

Abstract:Generative foundation models can revolutionize the design of semantic communication (SemCom) systems, allowing high fidelity exchange of semantic information at ultra low rates. In this work, a generative SemCom framework with pretrained foundation models is proposed, where both uncoded forward-with-error and coded discard-with-error schemes are developed for the semantic decoder. To characterize the impact of transmission reliability on the perceptual quality of the regenerated signal, their mathematical relationship is analyzed from a rate-distortion-perception perspective, which is proved to be non-decreasing. The semantic values are defined to measure the semantic information of multimodal semantic features accordingly. We also investigate semantic-aware power allocation problems aiming at power consumption minimization for ultra low rate and high fidelity SemComs. To solve these problems, two semantic-aware power allocation methods are proposed by leveraging the non-decreasing property of the perception-error relationship. Numerically, perception-error functions and semantic values of semantic data streams under both schemes for image tasks are obtained based on the Kodak dataset. Simulation results show that our proposed semantic-aware method significantly outperforms conventional approaches, particularly in the channel-coded case (up to 90% power saving).

Diffusion-based Generative Multicasting with Intent-aware Semantic Decomposition

Nov 04, 2024

Abstract:Generative diffusion models (GDMs) have recently shown great success in synthesizing multimedia signals with high perceptual quality, enabling highly efficient semantic communications in future wireless networks. In this paper, we develop an intent-aware generative semantic multicasting framework utilizing pre-trained diffusion models. In the proposed framework, the transmitter decomposes the source signal into multiple semantic classes based on the multi-user intent, i.e. each user is assumed to be interested in details of only a subset of the semantic classes. The transmitter then sends to each user only its intended classes, and multicasts a highly compressed semantic map to all users over shared wireless resources that allows them to locally synthesize the other classes, i.e. non-intended classes, utilizing pre-trained diffusion models. The signal retrieved at each user is thereby partially reconstructed and partially synthesized utilizing the received semantic map. This improves utilization of the wireless resources, while better preserving the privacy of the non-intended classes. We design a communication/computation-aware scheme for per-class adaptation of the communication parameters, such as the transmission power and compression rate, to minimize the total latency of retrieving signals at multiple receivers, tailored to the prevailing channel conditions as well as the users' reconstruction/synthesis distortion/perception requirements. The simulation results demonstrate significantly reduced per-user latency compared with non-generative and intent-unaware multicasting benchmarks while maintaining high perceptual quality of the signals retrieved at the users.

Semantic-Aware Power Allocation for Generative Semantic Communications with Foundation Models

Jul 03, 2024

Abstract:Recent advancements in diffusion models have made a significant breakthrough in generative modeling. The combination of the generative model and semantic communication (SemCom) enables high-fidelity semantic information exchange at ultra-low rates. A novel generative SemCom framework for image tasks is proposed, wherein pre-trained foundation models serve as semantic encoders and decoders for semantic feature extractions and image regenerations, respectively. The mathematical relationship between the transmission reliability and the perceptual quality of the regenerated image and the semantic values of semantic features are modeled, which are obtained by conducting numerical simulations on the Kodak dataset. We also investigate the semantic-aware power allocation problem, with the objective of minimizing the total power consumption while guaranteeing semantic performance. To solve this problem, two semantic-aware power allocation methods are proposed by constraint decoupling and bisection search, respectively. Numerical results show that the proposed semantic-aware methods demonstrate superior performance compared to the conventional one in terms of total power consumption.

Massive Digital Over-the-Air Computation for Communication-Efficient Federated Edge Learning

May 24, 2024

Abstract:Over-the-air computation (AirComp) is a promising technology converging communication and computation over wireless networks, which can be particularly effective in model training, inference, and more emerging edge intelligence applications. AirComp relies on uncoded transmission of individual signals, which are added naturally over the multiple access channel thanks to the superposition property of the wireless medium. Despite significantly improved communication efficiency, how to accommodate AirComp in existing and future digital communication networks, which are based on discrete modulation schemes, remains a challenge. This paper proposes a massive digital AirComp (MD-AirComp) scheme that leverages an unsourced massive access protocol to enhance compatibility with both current and next-generation wireless networks. MD-AirComp utilizes vector quantization to reduce the uplink communication overhead, and employs shared quantization and modulation codebooks. At the receiver, we propose a near-optimal approximate message passing-based algorithm to compute the model aggregation results from the superposed sequences, which relies on estimating the number of devices transmitting each code sequence, rather than trying to decode the messages of individual transmitters. We apply MD-AirComp to federated edge learning (FEEL), and show that it significantly accelerates FEEL convergence compared to the state of the art while using the same amount of communication resources. To support further research and ensure reproducibility, we have made our code available at https://github.com/liqiao19/MD-AirComp.

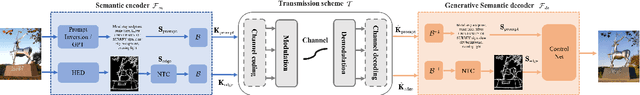

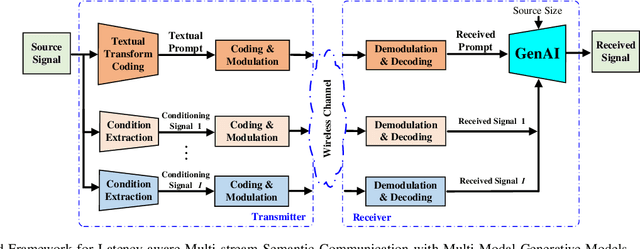

Latency-Aware Generative Semantic Communications with Pre-Trained Diffusion Models

Mar 25, 2024

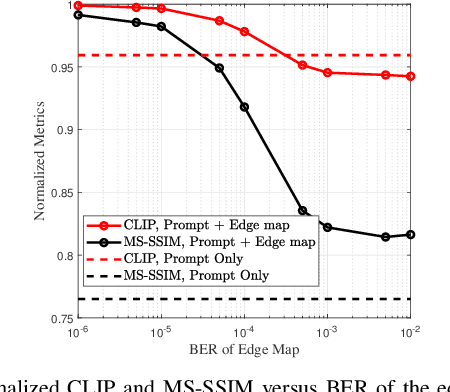

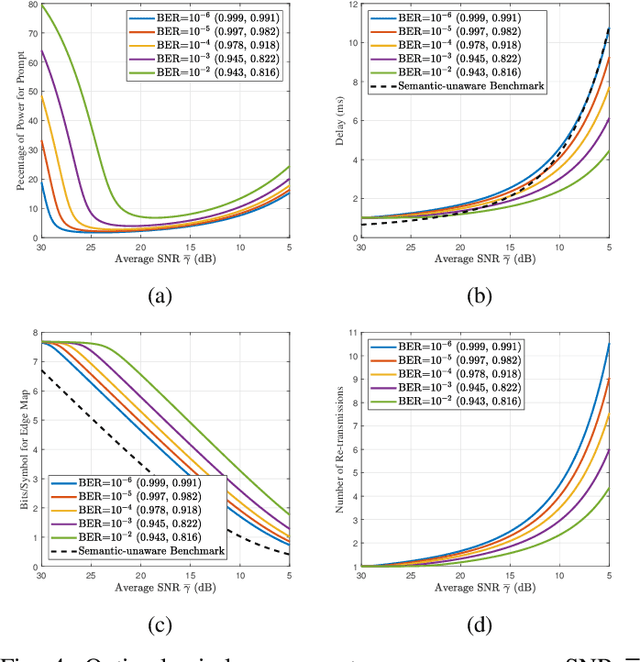

Abstract:Generative foundation AI models have recently shown great success in synthesizing natural signals with high perceptual quality using only textual prompts and conditioning signals to guide the generation process. This enables semantic communications at extremely low data rates in future wireless networks. In this paper, we develop a latency-aware semantic communications framework with pre-trained generative models. The transmitter performs multi-modal semantic decomposition on the input signal and transmits each semantic stream with the appropriate coding and communication schemes based on the intent. For the prompt, we adopt a re-transmission-based scheme to ensure reliable transmission, and for the other semantic modalities we use an adaptive modulation/coding scheme to achieve robustness to the changing wireless channel. Furthermore, we design a semantic and latency-aware scheme to allocate transmission power to different semantic modalities based on their importance, subject to semantic quality constraints. At the receiver, a pre-trained generative model synthesizes a high fidelity signal using the received multi-stream semantics. Simulation results demonstrate ultra-low-rate, low-latency, and channel-adaptive semantic communications.
