Chuan Heng Foh

Dynamic Resource Allocation in Distributed MIMO-LEO Satellite Networks

May 27, 2025

Abstract: This paper characterizes the impacts of channel estimation errors and Rician factors on the achievable data rate and investigates the user scheduling strategy, combining scheme, power control, and dynamic bandwidth allocation to maximize the sum data rate in distributed multiple-input-multiple-output (MIMO)-enabled low earth orbit (LEO) satellite networks. However, due to the resource-assignment problem, it is challenging to find the optimal solution for maximizing the sum data rate. To transform this problem into a more tractable form, we first quantify the channel estimation errors based on the minimum mean square error (MMSE) estimator and rigorously derive a closed-form lower bound of the achievable data rate, offering an explicit formulation for resource allocation. Then, to solve the NP-hard problem, we decompose it into three sub-problems, namely, user scheduling strategy, joint combining and power control, and dynamic bandwidth allocation, by using alternating optimization (AO). Specifically, the user scheduling is formulated as a graph coloring problem by iteratively updating an undirected graph based on user requirements, which is then solved using the DSatur algorithm. For the combining weights and power control, successive convex approximation (SCA) and geometric programming (GP) are adopted to obtain a sub-optimal solution with lower complexity. Finally, the optimal bandwidth allocation is obtained by solving a concave problem. Numerical results validate the analytical tightness of the derived bound, especially for large Rician factors, and demonstrate significant performance gains over other benchmarks.
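To illustrate the graph-coloring step mentioned in the abstract, here is a minimal sketch of the generic DSatur heuristic. It is not the paper's scheduler: the conflict graph, the user labels (`u1` to `u4`), and the reading of colors as scheduling slots are assumptions made for the example, and the iterative graph update based on user requirements is not modeled.

```python
def dsatur_coloring(adjacency):
    """Greedy DSatur coloring.

    adjacency: dict mapping each node to the set of its neighbours
    (undirected graph, so every edge appears in both directions).
    Returns a dict mapping each node to a non-negative integer color.
    """
    colors = {}
    uncolored = set(adjacency)

    def saturation(node):
        # Number of distinct colors already used by the node's neighbours.
        return len({colors[n] for n in adjacency[node] if n in colors})

    while uncolored:
        # Pick the node with the highest saturation; break ties by degree,
        # then by label order (sorted) so the result is deterministic.
        node = max(sorted(uncolored),
                   key=lambda n: (saturation(n), len(adjacency[n])))
        used = {colors[n] for n in adjacency[node] if n in colors}
        color = 0
        while color in used:
            color += 1
        colors[node] = color
        uncolored.remove(node)
    return colors


# Hypothetical conflict graph: an edge means two users cannot share a slot.
conflicts = {
    "u1": {"u2", "u3"},
    "u2": {"u1", "u3"},
    "u3": {"u1", "u2", "u4"},
    "u4": {"u3"},
}
slots = dsatur_coloring(conflicts)
```

In this toy graph the triangle `u1`, `u2`, `u3` forces three colors, and `u4` can reuse a color not held by `u3`.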

Use of Parallel Explanatory Models to Enhance Transparency of Neural Network Configurations for Cell Degradation Detection

Apr 17, 2024

Abstract: In a previous paper, we showed that a recurrent neural network (RNN) can be used to detect cellular network radio signal degradations accurately. We unexpectedly found, though, that accuracy gains diminished as we added layers to the RNN. To investigate this, in this paper we build a parallel model to illuminate and understand the internal operation of neural networks, such as the RNN, which store their internal state in order to process sequential inputs. This model is widely applicable in that it can be used with any input domain where the inputs can be represented by a Gaussian mixture. By looking at the RNN processing from a probability density function perspective, we are able to show how each layer of the RNN transforms the input distributions to increase detection accuracy. At the same time, we also discover a side effect that limits the improvement in accuracy. To demonstrate the fidelity of the model, we validate it against each stage of RNN processing as well as the output predictions. As a result, we have been able to explain the reasons for the RNN performance limits, with useful insights for future designs of RNNs and similar types of neural network.

Latency-Aware Generative Semantic Communications with Pre-Trained Diffusion Models

Mar 25, 2024

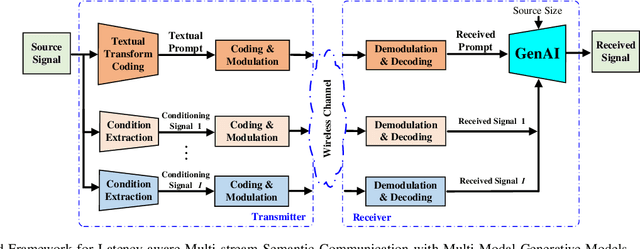

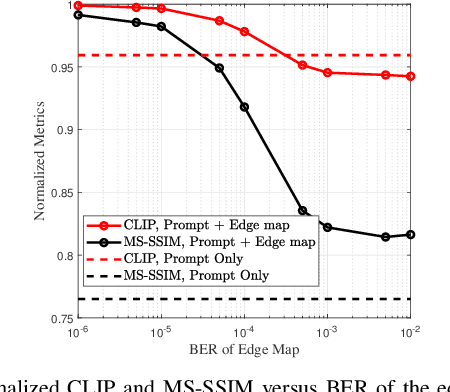

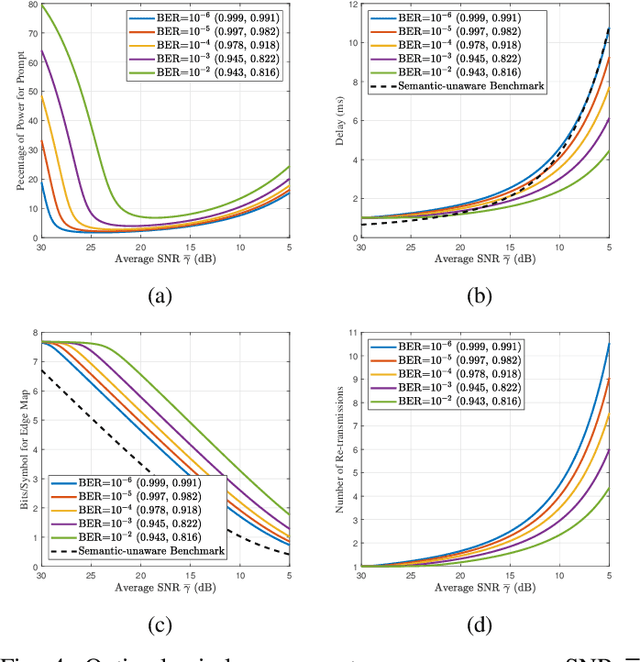

Abstract: Generative foundation AI models have recently shown great success in synthesizing natural signals with high perceptual quality using only textual prompts and conditioning signals to guide the generation process. This enables semantic communications at extremely low data rates in future wireless networks. In this paper, we develop a latency-aware semantic communications framework with pre-trained generative models. The transmitter performs multi-modal semantic decomposition on the input signal and transmits each semantic stream with the appropriate coding and communication schemes based on the intent. For the prompt, we adopt a re-transmission-based scheme to ensure reliable transmission, and for the other semantic modalities we use an adaptive modulation/coding scheme to achieve robustness to the changing wireless channel. Furthermore, we design a semantic and latency-aware scheme to allocate transmission power to different semantic modalities based on their importance, subject to semantic quality constraints. At the receiver, a pre-trained generative model synthesizes a high-fidelity signal using the received multi-stream semantics. Simulation results demonstrate ultra-low-rate, low-latency, and channel-adaptive semantic communications.

Multi-Agent Context Learning Strategy for Interference-Aware Beam Allocation in mmWave Vehicular Communications

Jan 04, 2024

Abstract: Millimeter wave (mmWave) has been recognized as one of the key technologies for 5G and beyond networks due to its potential to enhance channel bandwidth and network capacity. The use of mmWave for various applications including vehicular communications has been extensively discussed. However, applying mmWave to vehicular communications faces the challenges of high-mobility nodes and narrow coverage along the mmWave beams. Due to high mobility in dense networks, overlapping beams can cause strong interference, which leads to performance degradation. As a remedy, the beam switching capability in mmWave can be utilized. Frequent beam switching and cell changes then become inevitable to manage interference, which increases computational and signalling complexity. In order to deal with the complexity in interference control, we develop a new strategy called Multi-Agent Context Learning (MACOL), which utilizes Contextual Bandit to manage interference while allocating mmWave beams to serve vehicles in the network. Our approach demonstrates that by leveraging knowledge of neighbouring beam status, the machine learning agent can identify and avoid potential interfering transmissions to other ongoing transmissions. Furthermore, we show that even under heavy traffic loads, our proposed MACOL strategy is able to maintain low interference levels at around 10%.

Age of Information in Federated Learning over Wireless Networks

Sep 14, 2022

Abstract: In this paper, federated learning (FL) over wireless networks is investigated. In each communication round, a subset of devices is selected to participate in the aggregation with limited time and energy. In order to minimize the convergence time, global loss and latency are jointly considered in a Stackelberg game based framework. Specifically, age of information (AoI) based device selection is considered at the leader level as a global loss minimization problem, while sub-channel assignment, computational resource allocation, and power allocation are considered at the follower level as a latency minimization problem. By dividing the follower-level problem into two sub-problems, the best response of the follower is obtained by a monotonic optimization based resource allocation algorithm and a matching based sub-channel assignment algorithm. By deriving the upper bound of the convergence rate, the leader-level problem is reformulated, and then a list based device selection algorithm is proposed to achieve Stackelberg equilibrium. Simulation results indicate that the proposed device selection scheme outperforms other schemes in terms of the global loss, and the developed algorithms can significantly decrease the time consumption of computation and communication.
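As a rough illustration of age-of-information bookkeeping in device selection, the sketch below picks the `k` stalest devices each round and resets their age. This is not the paper's list based algorithm or its Stackelberg formulation: the function names, the device labels, the oldest-first rule, and the convention that a selected device's age resets to 0 are all assumptions made for the example.

```python
def select_by_age(ages, k):
    """Return the k devices with the largest age (ties broken by name)."""
    ranked = sorted(ages, key=lambda d: (-ages[d], d))
    return ranked[:k]


def advance_round(ages, selected):
    """Reset the age of selected devices to 0; every other device ages by 1."""
    return {d: 0 if d in selected else a + 1 for d, a in ages.items()}


# Hypothetical pool of four devices, two selected per communication round.
ages = {"dev0": 0, "dev1": 0, "dev2": 0, "dev3": 0}
history = []
for _ in range(4):
    chosen = select_by_age(ages, k=2)
    history.append(chosen)
    ages = advance_round(ages, set(chosen))
```

With equal starting ages the selection alternates between the two pairs of devices, so no device's age grows beyond one round in this toy setting.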

Low-Complexity Block Coordinate Descend Based Multiuser Detection for Uplink Grant-Free NOMA

May 23, 2022

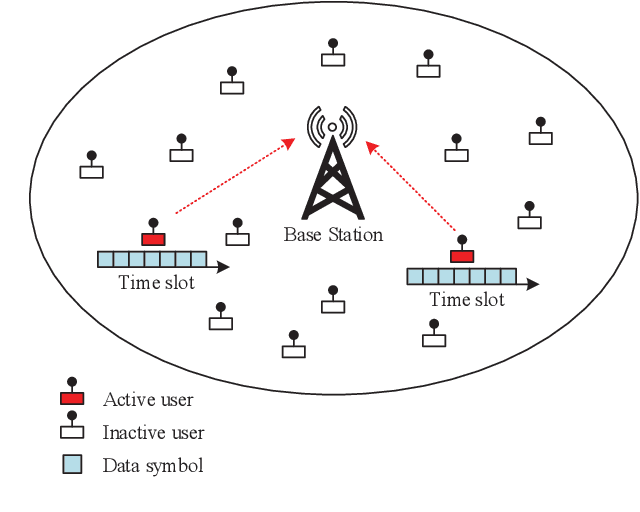

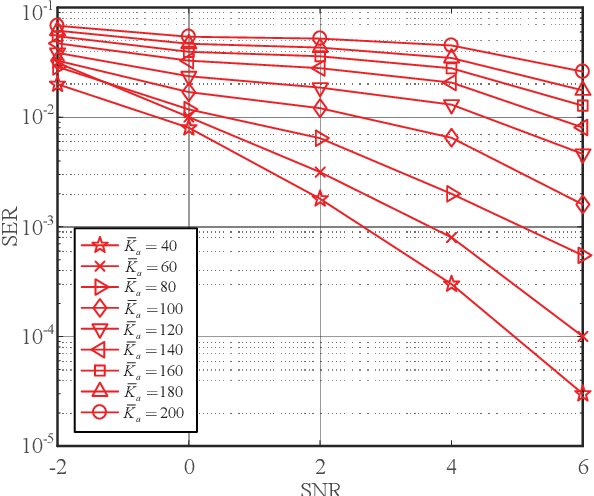

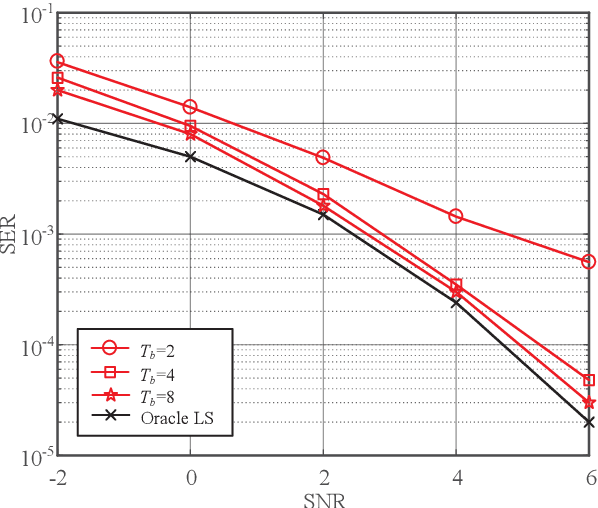

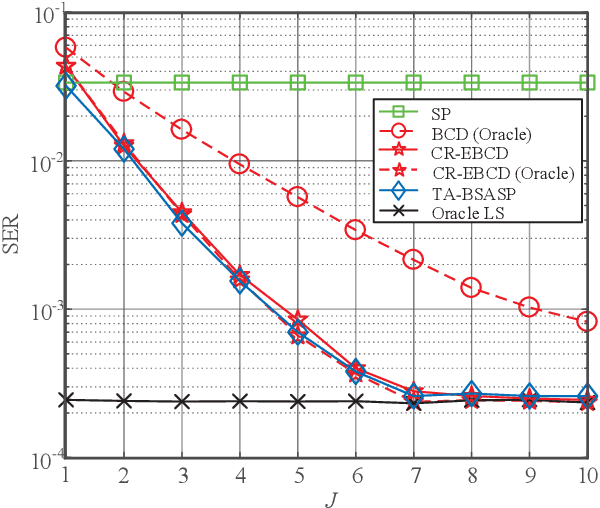

Abstract: The grant-free non-orthogonal multiple access (NOMA) scheme is considered a promising candidate for enabling massive connectivity and reduced signalling overhead for Internet of Things (IoT) applications in massive machine-type communication (mMTC) networks. Exploiting the inherently sporadic transmissions in grant-free NOMA systems, compressed sensing based multiuser detection (CS-MUD) has been deemed a powerful solution to user activity detection (UAD) and data detection (DD). In this paper, the block coordinate descent (BCD) method is employed in CS-MUD to reduce the computational complexity. We propose two modified BCD based algorithms, called enhanced BCD (EBCD) and complexity reduction enhanced BCD (CR-EBCD), respectively. Specifically, by incorporating a novel candidate set pruning mechanism into the original BCD framework, our proposed EBCD algorithm achieves a remarkable CS-MUD performance improvement. In addition, the proposed CR-EBCD algorithm further improves on EBCD by eliminating the redundant matrix multiplications during the iteration process. As a consequence, compared with the proposed EBCD algorithm, our proposed CR-EBCD algorithm achieves a complexity saving of two orders of magnitude without any CS-MUD performance degradation, rendering it a viable solution for future mMTC scenarios. Extensive simulation results demonstrate the bound-approaching performance as well as the ultra-low computational complexity.
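For readers unfamiliar with the coordinate descent idea that BCD builds on, the following sketch applies cyclic coordinate descent (blocks of size one) to a plain least-squares problem. It is only a generic illustration: it includes no sparsity model, no candidate set pruning, and none of the EBCD or CR-EBCD refinements, and the matrix `A`, the vector `y`, and the function name are made up for the example.

```python
def coordinate_descent_ls(A, y, sweeps=50):
    """Minimize ||y - A x||^2 by cyclic single-coordinate updates.

    A: list of rows (m x n), y: list of length m.
    The residual y - A x is maintained incrementally, so each coordinate
    update costs O(m) instead of recomputing the full product A x.
    """
    m, n = len(A), len(A[0])
    x = [0.0] * n
    residual = list(y)  # equals y - A x for the initial x = 0
    for _ in range(sweeps):
        for j in range(n):
            col = [A[i][j] for i in range(m)]
            norm_sq = sum(c * c for c in col)
            if norm_sq == 0.0:
                continue  # an all-zero column cannot change the residual
            # Exact minimizer along coordinate j with the others held fixed.
            step = sum(c * r for c, r in zip(col, residual)) / norm_sq
            x[j] += step
            residual = [r - c * step for r, c in zip(residual, col)]
    return x


# Toy system constructed so that y = A @ [1, 2] exactly.
A = [[1.0, 0.0], [1.0, 1.0], [0.0, 1.0]]
y = [1.0, 3.0, 2.0]
x_hat = coordinate_descent_ls(A, y)
```

Keeping the residual up to date rather than recomputing it on every update is the kind of bookkeeping that makes coordinate-wise methods cheap per iteration.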
