Martin Vossiek

UAV-Borne Digital Radar System for Coherent Multistatic SAR Imaging

Jul 28, 2025

Abstract:Advancements in analog-to-digital converter (ADC) technology have enabled higher sampling rates, making it feasible to adopt digital radar architectures that directly sample the radio-frequency (RF) signal, eliminating the need for analog downconversion. This digital approach supports greater flexibility in waveform design and signal processing, particularly through digital modulation schemes like orthogonal frequency division multiplexing (OFDM). This paper presents a digital radar system mounted on an uncrewed aerial vehicle (UAV), which employs OFDM waveforms for coherent multistatic synthetic aperture radar (SAR) imaging in the L-band. The radar setup features a primary UAV node responsible for signal transmission and monostatic data acquisition, alongside secondary nodes that operate in a receive-only mode. These secondary nodes capture the radar signal reflected from the scene as well as a direct sidelink signal. RF signals from both the radar and sidelink paths are sampled and processed offline. To manage data storage efficiently, a trigger mechanism is employed to record only the relevant portions of the radar signal. The system maintains coherency in both fast-time and slow-time domains, which is essential for multistatic SAR imaging. Because the secondary nodes are passive, the system can be easily scaled to accommodate a larger swarm of UAVs. The paper details the full signal processing workflow for both monostatic and multistatic SAR image formation, including an analysis and correction of synchronization errors that arise from the uncoupled operation of the nodes. The proposed coherent processing method is validated through static radar measurements, demonstrating the coherency achieved by the concept. Additionally, a UAV-based bistatic SAR experiment demonstrates the system's performance by producing high-resolution monostatic, bistatic, and combined multistatic SAR images.

3D Face Reconstruction From Radar Images

Dec 03, 2024

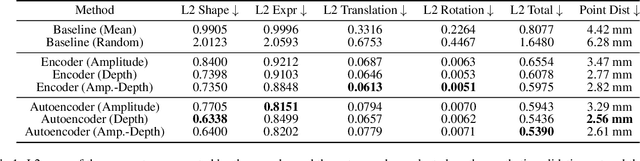

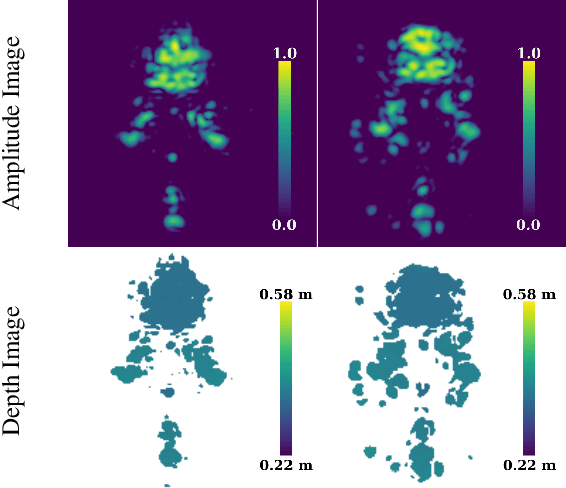

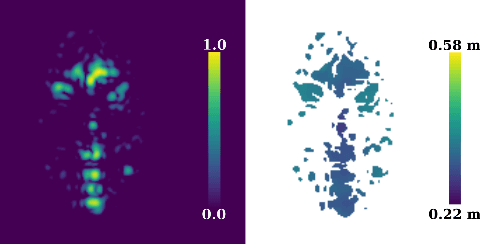

Abstract:The 3D reconstruction of faces has gained wide attention in computer vision and is used in many fields of application, for example, animation, virtual reality, and even forensics. This work is motivated by monitoring patients in sleep laboratories. Due to their unique characteristics, sensors from the radar domain have advantages compared to optical sensors, namely penetration of electrically non-conductive materials and independence of light. These advantages of radar signals unlock new applications and require adaptation of 3D reconstruction frameworks. We propose a novel model-based method for 3D reconstruction from radar images. We generate a dataset of synthetic radar images with a physics-based but non-differentiable radar renderer. This dataset is used to train a CNN-based encoder to estimate the parameters of a 3D morphable face model. Whilst the encoder alone already leads to strong reconstructions of synthetic data, we extend our reconstruction in an Analysis-by-Synthesis fashion to a model-based autoencoder. This is enabled by learning the rendering process in the decoder, which acts as an object-specific differentiable radar renderer. Subsequently, the combination of both network parts is trained to minimize both the loss of the parameters and the loss of the resulting reconstructed radar image. This leads to the additional benefit that at test time the parameters can be further optimized by fine-tuning the autoencoder in an unsupervised manner on the image loss. We evaluated our framework on generated synthetic face images as well as on real radar images with 3D ground truth of four individuals.

MAROON: A Framework for the Joint Characterization of Near-Field High-Resolution Radar and Optical Depth Imaging Techniques

Nov 01, 2024

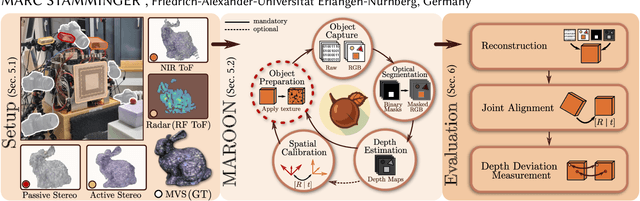

Abstract:Utilizing the complementary strengths of wavelength-specific range or depth sensors is crucial for robust computer-assisted tasks such as autonomous driving. Despite this, there is still little research at the intersection of optical depth sensors and radars operating at close range, where the target is decimeters away from the sensors. Together with a growing interest in high-resolution imaging radars operating in the near field, the question arises how these sensors behave in comparison to their traditional optical counterparts. In this work, we take on the unique challenge of jointly characterizing depth imagers from both the optical and radio-frequency domains using a multimodal spatial calibration. We collect data from four depth imagers, with three optical sensors of varying operation principle and an imaging radar. We provide a comprehensive evaluation of their depth measurements with respect to distinct object materials, geometries, and object-to-sensor distances. Specifically, we reveal scattering effects of partially transmissive materials and investigate the response of radio-frequency signals. All object measurements will be made public in the form of a multimodal dataset, called MAROON.

Sensing Accuracy Optimization for Communication-assisted Dual-baseline UAV-InSAR

Oct 24, 2024

Abstract:In this paper, we study the optimization of the sensing accuracy of unmanned aerial vehicle (UAV)-based dual-baseline interferometric synthetic aperture radar (InSAR) systems. A swarm of three UAV-synthetic aperture radar (SAR) systems is deployed to image an area of interest from different angles, enabling the creation of two independent digital elevation models (DEMs). To reduce the InSAR sensing error, i.e., the height estimation error, the two DEMs are fused based on weighted average techniques into one final DEM. The heavy computations required for this process are performed on the ground. To this end, the radar data is offloaded in real time via a frequency division multiple access (FDMA) air-to-ground backhaul link. In this work, we focus on improving the sensing accuracy by minimizing the worst-case height estimation error of the final DEM. To this end, the UAV formation and the power allocated for offloading are jointly optimized based on alternating optimization (AO), while meeting practical InSAR sensing and communication constraints. Our simulation results demonstrate that the proposed solution can improve the sensing accuracy by over 39% compared to a classical single-baseline UAV-InSAR system and by more than 12% compared to other benchmark schemes.
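
The weighted-average fusion of two DEMs mentioned in this abstract can be illustrated with a minimal sketch. It assumes inverse-variance weights, a common choice for combining two independent estimates; the abstract does not state which weights the paper actually uses. The function name `fuse_dems` and the per-pixel variance maps are hypothetical and only serve the illustration.

```python
def fuse_dems(dem_a, dem_b, var_a, var_b):
    """Fuse two height maps pixel by pixel with inverse-variance weights.

    dem_a, dem_b: nested lists of heights (same shape), e.g. in meters.
    var_a, var_b: nested lists of positive height-error variances.
    Assumption: inverse-variance weighting; the paper may weight differently.
    """
    fused = []
    for row_a, row_b, row_va, row_vb in zip(dem_a, dem_b, var_a, var_b):
        fused_row = []
        for h_a, h_b, v_a, v_b in zip(row_a, row_b, row_va, row_vb):
            w_a = 1.0 / v_a  # a more certain estimate gets a larger weight
            w_b = 1.0 / v_b
            fused_row.append((w_a * h_a + w_b * h_b) / (w_a + w_b))
        fused.append(fused_row)
    return fused


if __name__ == "__main__":
    dem_1 = [[10.0, 20.0]]
    dem_2 = [[12.0, 20.0]]
    var_1 = [[1.0, 1.0]]
    var_2 = [[1.0, 4.0]]
    print(fuse_dems(dem_1, dem_2, var_1, var_2))
```

With equal variances the fused height is the plain mean of the two inputs; as one variance grows, the fused height moves toward the other DEM.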

UAV Formation and Resource Allocation Optimization for Communication-Assisted 3D InSAR Sensing

Jul 09, 2024

Abstract:In this paper, we investigate joint unmanned aerial vehicle (UAV) formation and resource allocation optimization for communication-assisted three-dimensional (3D) synthetic aperture radar (SAR) sensing. We consider a system consisting of two UAVs that perform bistatic interferometric SAR (InSAR) sensing for generation of a digital elevation model (DEM) and transmit the radar raw data to a ground station (GS) in real time. To account for practical 3D sensing requirements, we use non-conventional sensing performance metrics, such as the SAR interferometric coherence, i.e., the local cross-correlation between the two co-registered UAV SAR images, the point-to-point InSAR relative height error, and the height of ambiguity, which together characterize the accuracy with which the InSAR system can determine the height of ground targets. Our objective is to jointly optimize the UAV formation, speed, and communication power allocation for maximization of the InSAR coverage while satisfying energy, communication, and InSAR-specific sensing constraints. To solve the formulated non-smooth and non-convex optimization problem, we divide it into three sub-problems and propose a novel alternating optimization (AO) framework that is based on classical, monotonic, and stochastic optimization techniques. The effectiveness of the proposed algorithm is validated through extensive simulations and compared to several benchmark schemes. Furthermore, our simulation results highlight the impact of the UAV-GS communication link on the flying formation and sensing performance and show that the DEM of a large area of interest can be mapped and offloaded to ground successfully, while the ground topography can be estimated with centimeter-scale precision. Lastly, we demonstrate that a low UAV velocity is preferable for InSAR applications as it leads to better sensing accuracy.

An Efficient yet High-Performance Method for Precise Radar-Based Imaging of Human Hand Poses

Jun 19, 2024

Abstract:Contactless hand pose estimation requires sensors that provide precise spatial information and low computational complexity for real-time processing. Unlike vision-based systems, radar offers lighting independence and direct motion assessments. Yet, there is limited research balancing real-time constraints, suitable frame rates for motion evaluations, and the need for precise 3D data. To address this, we extend the ultra-efficient two-tone hand imaging method from our prior work to a three-tone approach. Maintaining high frame rates and real-time constraints, this approach significantly enhances reconstruction accuracy and precision. We assess these measures by evaluating reconstruction results for different hand poses obtained by an imaging radar. Accuracy is assessed against ground truth from a spatially calibrated photogrammetry setup, while precision is measured using 3D-printed hand poses. The results emphasize the method's great potential for future radar-based hand sensing.

Automatic Spatial Calibration of Near-Field MIMO Radar With Respect to Optical Sensors

Mar 16, 2024

Abstract:Despite an emerging interest in MIMO radar, the utilization of its complementary strengths in combination with optical sensors has so far been limited to far-field applications, due to the challenges that arise from mutual sensor calibration in the near field. In fact, most related approaches in the autonomous industry propose target-based calibration methods using corner reflectors that have proven to be unsuitable for the near field. In contrast, we propose a novel, joint calibration approach for optical RGB-D sensors and MIMO radars that is designed to operate in the radar's near-field range, within decimeters from the sensors. Our pipeline consists of a bespoke calibration target, allowing for automatic target detection and localization, followed by the spatial calibration of the two sensor coordinate systems through target registration. We validate our approach using two different depth sensing technologies from the optical domain. The experiments show the efficiency and accuracy of our calibration for various target displacements, as well as the robustness of our localization with respect to signal ambiguities.

Radar-Based Recognition of Static Hand Gestures in American Sign Language

Feb 20, 2024

Abstract:In the fast-paced field of human-computer interaction (HCI) and virtual reality (VR), automatic gesture recognition has become increasingly essential. This is particularly true for the recognition of hand signs, providing an intuitive way to effortlessly navigate and control VR and HCI applications. Considering increased privacy requirements, radar sensors emerge as a compelling alternative to cameras. They operate effectively in low-light conditions without capturing identifiable human details, thanks to their lower resolution and distinct wavelength compared to visible light. While previous works predominantly deploy radar sensors for dynamic hand gesture recognition based on Doppler information, our approach prioritizes classification using an imaging radar that operates on spatial information, i.e., image-like data. However, generating large training datasets required for neural networks (NN) is a time-consuming and challenging process, often falling short of covering all potential scenarios. Acknowledging these challenges, this study explores the efficacy of synthetic data generated by an advanced radar ray-tracing simulator. This simulator employs an intuitive material model that can be adjusted to introduce data diversity. Despite exclusively training the NN on synthetic data, it demonstrates promising performance when put to the test with real measurement data. This emphasizes the practicality of our methodology in overcoming data scarcity challenges and advancing the field of automatic gesture recognition in VR and HCI applications.

UAV Formation Optimization for Communication-assisted InSAR Sensing

Nov 12, 2023

Abstract:Interferometric synthetic aperture radar (InSAR) is an increasingly important remote sensing technique that enables three-dimensional (3D) sensing applications such as the generation of accurate digital elevation models (DEMs). In this paper, we investigate the joint formation and communication resource allocation optimization for a system comprising two unmanned aerial vehicles (UAVs) to perform InSAR sensing and to transfer the acquired data to the ground. To this end, we adopt as sensing performance metrics the interferometric coherence, i.e., the local correlation between the two co-registered UAV radar images, and the height of ambiguity (HoA), which together are a measure for the accuracy with which the InSAR system can estimate the height of ground objects. In addition, an analytical expression for the coverage of the considered InSAR sensing system is derived. Our objective is to maximize the InSAR coverage while satisfying all relevant InSAR-specific sensing and communication performance metrics. To tackle the non-convexity of the formulated optimization problem, we employ alternating optimization (AO) techniques combined with successive convex approximation (SCA). Our simulation results reveal that the resulting resource allocation algorithm outperforms two benchmark schemes in terms of InSAR coverage while satisfying all sensing and real-time communication requirements. Furthermore, we highlight the importance of efficient communication resource allocation in facilitating real-time sensing and unveil the trade-off between InSAR height estimation accuracy and coverage.
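
The interferometric coherence described in this abstract, the local correlation between two co-registered complex radar images, can be sketched as follows. This is the standard sample coherence estimator over a small window of pixels, shown only as an illustration; the paper works with analytical coherence models that may differ. The function name `sample_coherence` and the flat-list window representation are hypothetical.

```python
import math


def sample_coherence(window_1, window_2):
    """Magnitude of the sample coherence between two complex pixel windows.

    window_1, window_2: equal-length lists of complex pixel values taken from
    the same local neighborhood of two co-registered images.
    Returns a value in [0, 1]; 1 means fully correlated, 0 uncorrelated.
    """
    cross = sum(a * b.conjugate() for a, b in zip(window_1, window_2))
    power_1 = sum(abs(a) ** 2 for a in window_1)
    power_2 = sum(abs(b) ** 2 for b in window_2)
    denom = math.sqrt(power_1 * power_2)
    return abs(cross) / denom if denom > 0 else 0.0


if __name__ == "__main__":
    img_a = [1 + 1j, 2 - 1j, -0.5 + 3j]
    # A constant phase offset (e.g. from terrain height) leaves the magnitude at 1.
    img_b = [p * complex(math.cos(0.7), math.sin(0.7)) for p in img_a]
    print(sample_coherence(img_a, img_b))
```

A constant interferometric phase between the two windows does not reduce the coherence magnitude, whereas noise or decorrelation between the images pushes it toward zero.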

Joint Transmit Signal and Beamforming Design for Integrated Sensing and Power Transfer Systems

Nov 12, 2023

Abstract:Integrating different functionalities, conventionally implemented as dedicated systems, into a single platform allows utilising the available resources more efficiently. We consider an integrated sensing and power transfer (ISAPT) system and propose the joint optimisation of the rectangular pulse-shaped transmit signal and the beamforming vector to combine sensing and wireless power transfer (WPT) functionalities efficiently. In contrast to prior works, we adopt an accurate non-linear circuit-based energy harvesting (EH) model. We formulate and solve a non-convex optimisation problem for a general number of EH receivers to maximise a weighted sum of the average harvested powers at the EH receivers while ensuring the received echo signal reflected by a sensing target (ST) has sufficient power for estimating the range to the ST with a prescribed accuracy within the considered coverage region. The average harvested power is shown to monotonically increase with the pulse duration when the average transmit power budget is sufficiently large. We discuss the trade-off between sensing performance and power transfer for the considered ISAPT system. The proposed approach significantly outperforms a heuristic baseline scheme based on a linear EH model, which linearly combines energy beamforming with the beamsteering vector in the direction to the ST as its transmit strategy.
