Christian Schüßler

3D Face Reconstruction From Radar Images

Dec 03, 2024

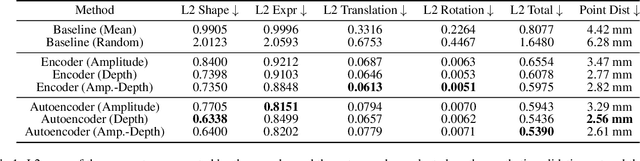

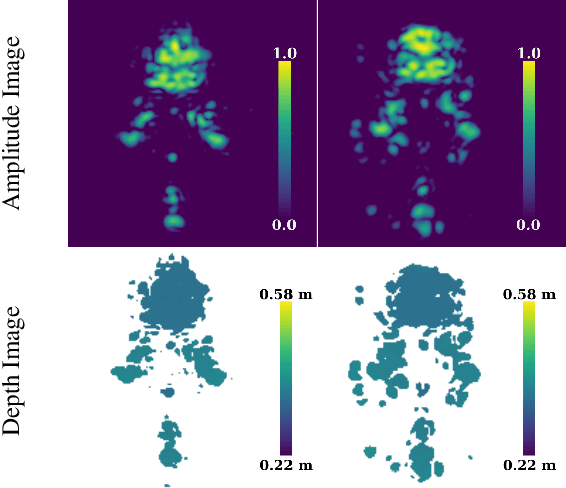

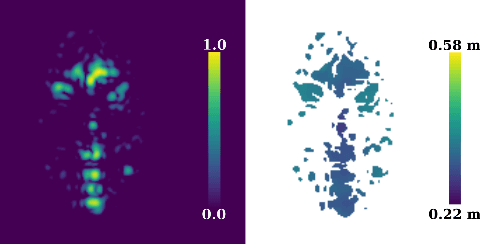

Abstract: The 3D reconstruction of faces has gained wide attention in computer vision and is used in many fields of application, for example, animation, virtual reality, and even forensics. This work is motivated by monitoring patients in sleep laboratories. Due to their unique characteristics, sensors from the radar domain have advantages compared to optical sensors, namely penetration of electrically non-conductive materials and independence of light. These advantages of radar signals unlock new applications and require adaptation of 3D reconstruction frameworks. We propose a novel model-based method for 3D reconstruction from radar images. We generate a dataset of synthetic radar images with a physics-based but non-differentiable radar renderer. This dataset is used to train a CNN-based encoder to estimate the parameters of a 3D morphable face model. Whilst the encoder alone already leads to strong reconstructions of synthetic data, we extend our reconstruction in an Analysis-by-Synthesis fashion to a model-based autoencoder. This is enabled by learning the rendering process in the decoder, which acts as an object-specific differentiable radar renderer. Subsequently, the combination of both network parts is trained to minimize both the loss of the parameters and the loss of the resulting reconstructed radar image. This has the additional benefit that, at test time, the parameters can be further optimized by fine-tuning the autoencoder on the image loss without supervision. We evaluated our framework on generated synthetic face images as well as on real radar images with 3D ground truth of four individuals.

Concept for an Automatic Annotation of Automotive Radar Data Using AI-segmented Aerial Camera Images

Sep 01, 2023

Abstract: This paper presents an approach to automatically annotate automotive radar data with AI-segmented aerial camera images. For this, the images of an unmanned aerial vehicle (UAV) above a radar vehicle are panoptically segmented and mapped in the ground plane onto the radar images. The detected instances and segments in the camera image can then be applied directly as labels for the radar data. Owing to the advantageous bird's eye position, the UAV camera does not suffer from optical occlusion and is capable of creating annotations within the complete field of view of the radar. The effectiveness and scalability are demonstrated in measurements, where 589 pedestrians in the radar data were automatically labeled within 2 minutes.
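The ground-plane mapping described in this abstract can be illustrated with a minimal sketch. It is not the paper's pipeline: it assumes a camera looking straight down, flat ground, a known ground sampling distance, and a known UAV position and yaw in the radar's ground coordinates. The names `ground_to_pixel`, `label_detection`, `uav_xy`, `yaw_rad`, and `gsd_m` are hypothetical and chosen only for illustration.

```python
import math

def ground_to_pixel(x_m, y_m, uav_xy, yaw_rad, gsd_m, width, height):
    """Map a ground point (radar coordinates, metres) to an image pixel (u, v).

    Assumes a nadir-looking camera whose image centre lies above uav_xy,
    flat ground, and gsd_m metres of ground per pixel (all assumptions).
    """
    dx, dy = x_m - uav_xy[0], y_m - uav_xy[1]
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    # Rotate the offset from the radar frame into the camera frame.
    xc = c * dx + s * dy
    yc = -s * dx + c * dy
    # Image rows grow downwards, so the camera y axis is flipped.
    u = math.floor(width / 2 + xc / gsd_m)
    v = math.floor(height / 2 - yc / gsd_m)
    return u, v

def label_detection(x_m, y_m, segmentation, uav_xy, yaw_rad, gsd_m):
    """Return the segmentation label under a radar detection, or None if outside the image."""
    height, width = len(segmentation), len(segmentation[0])
    u, v = ground_to_pixel(x_m, y_m, uav_xy, yaw_rad, gsd_m, width, height)
    if 0 <= u < width and 0 <= v < height:
        return segmentation[v][u]
    return None

# Toy 4x4 segmentation map with one labelled instance (label 7).
segmentation = [
    [0, 0, 0, 0],
    [0, 0, 7, 0],
    [0, 0, 0, 0],
    [0, 0, 0, 0],
]
inside = label_detection(0.5, 10.5, segmentation, uav_xy=(0.0, 10.0), yaw_rad=0.0, gsd_m=1.0)
outside = label_detection(5.0, 10.0, segmentation, uav_xy=(0.0, 10.0), yaw_rad=0.0, gsd_m=1.0)
```

A real setup would replace the similarity transform with a calibrated camera model; the lookup step, reading the label under each radar detection, would stay the same.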

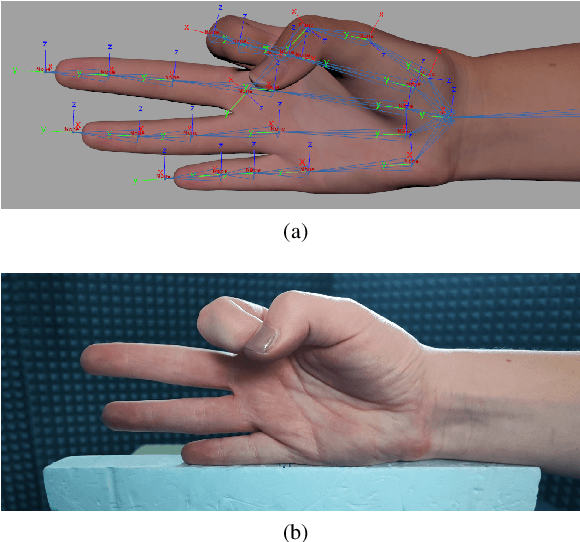

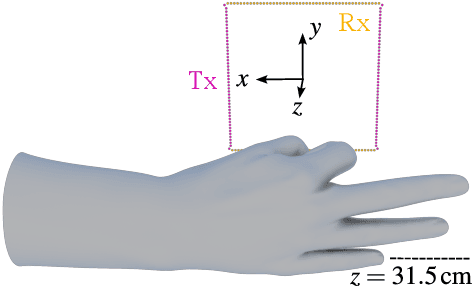

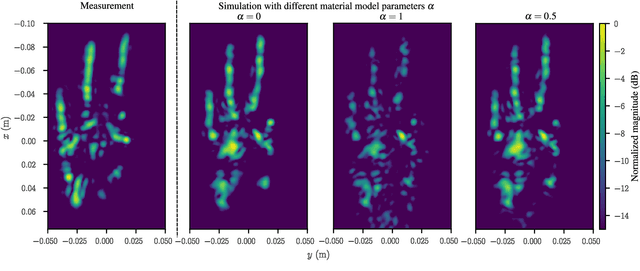

A Realistic Radar Ray Tracing Simulator for Hand Pose Imaging

Jul 28, 2023

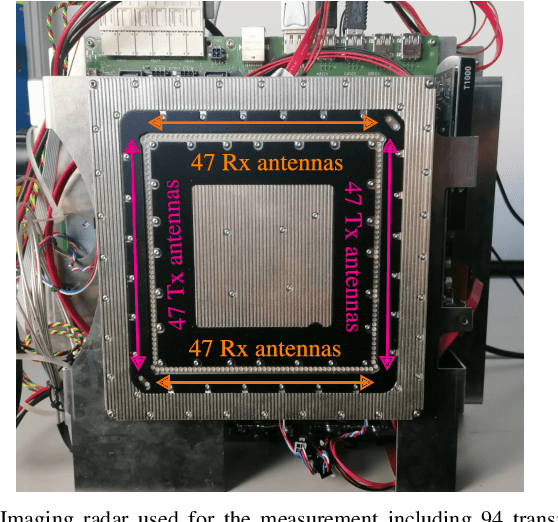

Abstract: With the increasing popularity of human-computer interaction applications, there is also growing interest in generating sufficiently large and diverse data sets for automatic radar-based recognition of hand poses and gestures. Radar simulations are a vital approach to generating training data (e.g., for machine learning). Therefore, this work applies a ray tracing method to radar imaging of the hand. The performance of the proposed simulation approach is verified by a comparison of simulation and measurement data based on an imaging radar with a high lateral resolution. In addition, the surface material model incorporated into the ray tracer is highlighted in more detail and parameterized for radar hand imaging. Measurements and simulations show a very high similarity between synthetic and real radar image captures. The presented results demonstrate that it is possible to generate very realistic simulations of radar measurement data even for complex radar hand pose imaging systems.

Implementation of Real-Time Automotive SAR Imaging

Jun 16, 2023

Abstract: This paper presents measures to reduce the computation time of automotive synthetic aperture radar (SAR) imaging to achieve real-time capability. For this, the image formation, which is based on the Back-Projection algorithm, was thoroughly analyzed. Various optimizations were individually tested and analyzed on graphics processing units (GPU). Apart from the time reduction gained from these measures, the data size needed for processing was also drastically decreased. With a combination of all measures, a high-resolution SAR image of 30 m by 30 m that combines 8192 chirps can be reconstructed in less than 30 ms using a standard GPU. It is thus demonstrated that a real-time implementation of automotive SAR is possible.
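To make the back-projection idea concrete, here is a minimal, unoptimized sketch in plain Python. It is not the paper's GPU implementation: it uses the frequency-domain (matched-filter) form of back-projection on idealized stepped-frequency data from a single point target, and every number in it (carrier band, 32 antenna positions, 16 frequency steps, the pixel grid) is an assumption chosen only to keep the example small.

```python
import cmath
import math

C = 299_792_458.0  # speed of light in m/s

def round_trip_phase(freq_hz, antenna, point):
    """Two-way propagation phase between an antenna position and a ground point."""
    r = math.hypot(point[0] - antenna[0], point[1] - antenna[1])
    return 4.0 * math.pi * freq_hz * r / C

def simulate(antennas, freqs, target):
    """Idealized echo of one unit-amplitude point target (no noise, no antenna pattern)."""
    return [[cmath.rect(1.0, -round_trip_phase(f, a, target)) for f in freqs]
            for a in antennas]

def back_project(data, antennas, freqs, pixels):
    """For each pixel, undo the expected phase of every sample and sum coherently."""
    image = {}
    for p in pixels:
        acc = 0j
        for a, row in zip(antennas, data):
            for f, sample in zip(freqs, row):
                acc += sample * cmath.rect(1.0, round_trip_phase(f, a, p))
        image[p] = abs(acc)
    return image

# Assumed toy geometry: 32 positions spaced 1 mm along x, 16 steps across 76-77 GHz.
antennas = [(0.001 * n, 0.0) for n in range(32)]
freqs = [76.0e9 + k * 1.0e9 / 15 for k in range(16)]
pixels = [(x, y) for x in (-0.5, -0.25, 0.0, 0.25, 0.5)
          for y in (1.5, 1.75, 2.0, 2.25, 2.5)]
target = (0.25, 2.0)

image = back_project(simulate(antennas, freqs, target), antennas, freqs, pixels)
peak_pixel = max(image, key=image.get)
```

Every pixel is computed independently of the others, which is why this kind of image formation maps naturally onto a GPU. The triple loop here is written for readability rather than speed.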

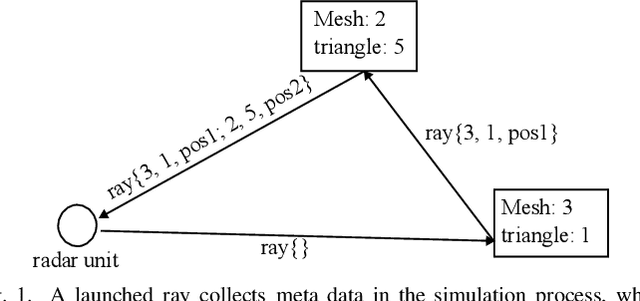

Achieving Efficient and Realistic Full-Radar Simulations and Automatic Data Annotation by exploiting Ray Meta Data of a Radar Ray Tracing Simulator

May 23, 2023

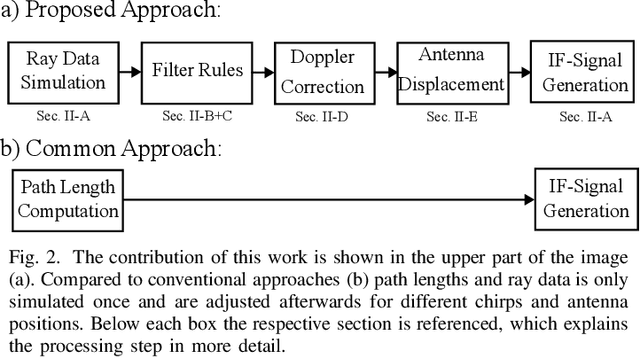

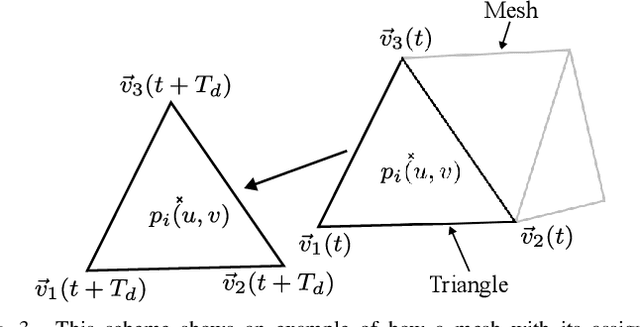

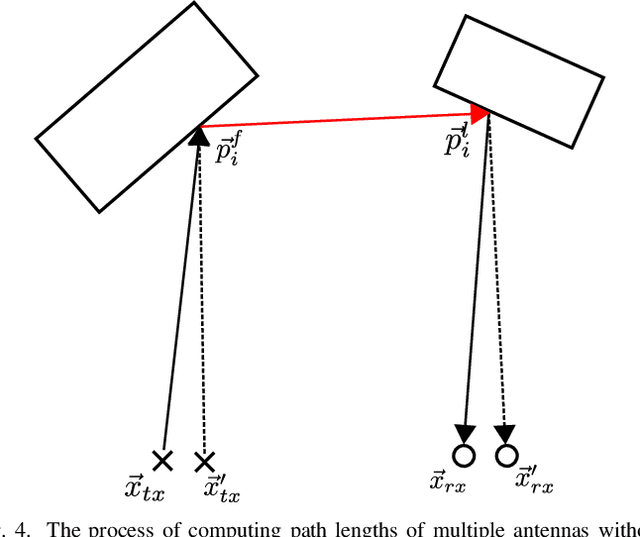

Abstract: In this work, a novel radar simulation concept is introduced that makes it possible to simulate realistic radar data for range, Doppler, and arbitrary antenna positions in an efficient way. Further, it makes it possible to automatically annotate the simulated radar signal by decomposing it into different parts. This approach not only makes almost perfect annotations possible, but also allows the annotation of exotic effects, such as multi-path effects, or the labeling of signal parts originating from different parts of an object. This is possible by adapting the computation process of a Monte Carlo shooting and bouncing rays (SBR) simulator. By considering the hits of each simulated ray, various metadata can be stored, such as hit position, mesh pointer, object IDs, and many more. This collected metadata can then be utilized to predict the change of path lengths introduced by object motion to obtain Doppler information, or to apply specific ray filter rules in order to obtain radar signals that only fulfil specific conditions, such as multiple bounces or containing specific object IDs. Using this approach, perfect and otherwise almost impossible annotation schemes can be realized.
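The ray-metadata idea can be sketched in a few lines, under loudly stated assumptions. The `Ray` record below is hypothetical and not the simulator's data structure. Each ray is reduced to a total path length, an amplitude, the IDs of the objects it hit, and the rate of change of its path length. Filtering rays by metadata before summing yields an annotated part of the signal, and the path-length rate gives a Doppler shift.

```python
import cmath
import math
from dataclasses import dataclass

C = 299_792_458.0  # speed of light in m/s

@dataclass(frozen=True)
class Ray:
    """Hypothetical per-ray record; field names are illustrative assumptions."""
    path_length_m: float   # total transmitter -> hits -> receiver path length
    amplitude: float
    object_ids: tuple      # ID of the object hit at each bounce
    path_rate_mps: float   # d(path length)/dt caused by object motion

    @property
    def bounces(self):
        return len(self.object_ids)

def signal(rays, freq_hz):
    """Coherent sum of all ray contributions at a single frequency."""
    k = 2.0 * math.pi * freq_hz / C
    return sum((r.amplitude * cmath.rect(1.0, -k * r.path_length_m) for r in rays), 0j)

def doppler_hz(ray, freq_hz):
    """Doppler shift from the rate of change of the ray's path length."""
    return -ray.path_rate_mps * freq_hz / C

freq = 77.0e9  # assumed carrier frequency
rays = [
    Ray(20.0, 1.0, ("car",), -20.0),          # direct echo, car approaching at 10 m/s
    Ray(24.0, 0.3, ("car", "wall"), -20.0),   # multi-path echo via a wall
    Ray(12.0, 0.5, ("pedestrian",), 0.0),     # static pedestrian
]

# Ray filter rules: select rays by bounce count or by the objects they touched.
direct = [r for r in rays if r.bounces == 1]
multipath = [r for r in rays if r.bounces > 1]
car_only = [r for r in rays if "car" in r.object_ids]

full = signal(rays, freq)
parts = signal(direct, freq) + signal(multipath, freq)
```

Because the full signal is a linear sum over rays, any partition of the rays by metadata partitions the signal exactly. That linearity is what makes the per-part annotations consistent with the unfiltered simulation.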
