Marin Kobilarov

Multi-Agent Feedback Motion Planning using Probably Approximately Correct Nonlinear Model Predictive Control

Jan 21, 2025

Abstract: For many tasks, multi-robot teams often provide greater efficiency, robustness, and resiliency. However, multi-robot collaboration in real-world scenarios poses a number of major challenges, especially when dynamic robots must balance competing objectives like formation control and obstacle avoidance in the presence of stochastic dynamics and sensor uncertainty. In this paper, we propose a distributed, multi-agent receding-horizon feedback motion planning approach using Probably Approximately Correct Nonlinear Model Predictive Control (PAC-NMPC) that is able to reason about both model and measurement uncertainty to achieve robust multi-agent formation control while navigating cluttered obstacle fields and avoiding inter-robot collisions. Our approach relies not only on the underlying PAC-NMPC algorithm but also on a terminal cost function derived from gyroscopic obstacle avoidance. Through numerical simulation, we show that our distributed approach performs on par with a centralized formulation, that it offers improved performance in the case of significant measurement noise, and that it can scale to more complex dynamical systems.

A Feasible Workflow for Retinal Vein Cannulation in Ex Vivo Porcine Eyes with Robotic Assistance

Dec 16, 2024

Abstract: A potential Retinal Vein Occlusion (RVO) treatment involves Retinal Vein Cannulation (RVC), which requires the surgeon to insert a microneedle into the affected retinal vein and administer a clot-dissolving drug. This procedure presents significant challenges due to human physiological limitations, such as hand tremors, prolonged tool-holding periods, and constraints in depth perception using a microscope. This study proposes a robot-assisted workflow for RVC to overcome these limitations. The test robot is operated through a keyboard. An intraoperative Optical Coherence Tomography (iOCT) system is used to verify successful venous puncture before infusion. The workflow is validated using 12 ex vivo porcine eyes. These early results demonstrate a success rate of 10 out of 12 cannulations (83.33%), affirming the feasibility of the proposed workflow.

Surgical Robot Transformer (SRT): Imitation Learning for Surgical Tasks

Jul 17, 2024

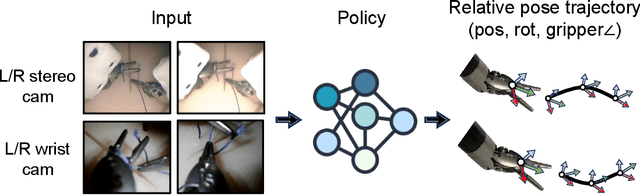

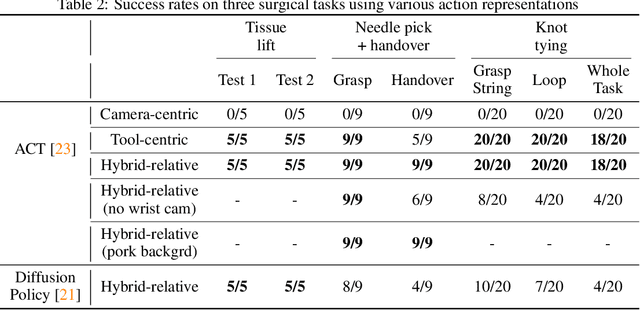

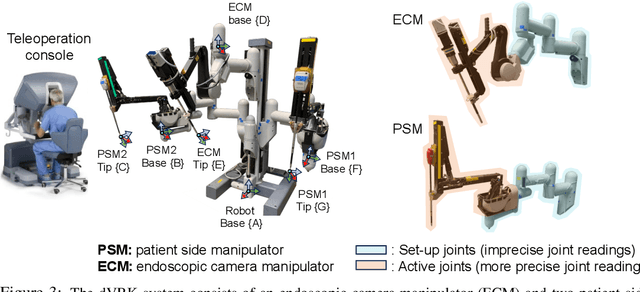

Abstract: We explore whether surgical manipulation tasks can be learned on the da Vinci robot via imitation learning. However, the da Vinci system presents unique challenges which hinder straightforward implementation of imitation learning. Notably, its forward kinematics is inconsistent due to imprecise joint measurements, and naively training a policy using such approximate kinematics data often leads to task failure. To overcome this limitation, we introduce a relative action formulation which enables successful policy training and deployment using its approximate kinematics data. A promising outcome of this approach is that the large repository of clinical data, which contains approximate kinematics, may be directly utilized for robot learning without further corrections. We demonstrate our findings through successful execution of three fundamental surgical tasks, including tissue manipulation, needle handling, and knot-tying.

Non-Prehensile Aerial Manipulation using Model-Based Deep Reinforcement Learning

Jul 01, 2024

Abstract: With the continual adoption of Uncrewed Aerial Vehicles (UAVs) across a wide variety of application spaces, robust aerial manipulation remains a key research challenge. Aerial manipulation tasks require interacting with objects in the environment, often without knowing their dynamical properties like mass and friction a priori. Additionally, interacting with these objects can have a significant impact on the control and stability of the vehicle. We investigated an approach for robust control and non-prehensile aerial manipulation in unknown environments. In particular, we use model-based Deep Reinforcement Learning (DRL) to learn a world model of the environment while simultaneously learning a policy for interaction with the environment. We evaluated our approach on a series of push tasks by moving an object between goal locations and demonstrated repeatable behaviors across a range of friction values.

Survey of Simulators for Aerial Robots

Nov 04, 2023

Abstract: Uncrewed Aerial Vehicle (UAV) research faces challenges with safety, scalability, costs, and ecological impact when conducting hardware testing. High-fidelity simulators offer a vital solution by replicating real-world conditions to enable the development and evaluation of novel perception and control algorithms. However, the large number of available simulators poses a significant challenge for researchers to determine which simulator best suits their specific use-case, based on each simulator's limitations and customization readiness. This paper analyzes existing UAV simulators and decision factors for their selection, aiming to enhance the efficiency and safety of research endeavors.

Uncertainty-Aware Planning for Heterogeneous Robot Teams using Dynamic Topological Graphs and Mixed-Integer Programming

Oct 12, 2023

Abstract: Planning under uncertainty is a fundamental challenge in robotics. For multi-robot teams, the challenge is further exacerbated, since the planning problem can quickly become computationally intractable as the number of robots increases. In this paper, we propose a novel approach for planning under uncertainty using heterogeneous multi-robot teams. In particular, we leverage the notion of a dynamic topological graph and mixed-integer programming to generate multi-robot plans that deploy fast scout team members to reduce uncertainty about the environment. We test our approach in a number of representative scenarios where the robot team must move through an environment while minimizing detection in the presence of uncertain observer positions. We demonstrate that our approach is sufficiently computationally tractable for real-time re-planning in changing environments, can improve performance in the presence of imperfect information, and can be adjusted to accommodate different risk profiles.

PAC-NMPC with Learned Perception-Informed Value Function

Sep 22, 2023

Abstract: Nonlinear model predictive control (NMPC) is typically restricted to short, finite horizons to limit the computational burden of online optimization. This makes a global planner necessary to avoid local minima when using NMPC for navigation in complex environments. For this reason, the performance of NMPC approaches is often limited by that of the global planner. While control policies trained with reinforcement learning (RL) can theoretically learn to avoid such local minima, they are usually unable to guarantee enforcement of general state constraints. In this paper, we augment a sampling-based stochastic NMPC (SNMPC) approach with an RL-trained perception-informed value function. This allows the system to avoid observable local minima in the environment by reasoning about perception information beyond the finite planning horizon. By using Probably Approximately Correct NMPC (PAC-NMPC) as our base controller, we are also able to generate statistical guarantees of performance and safety. We demonstrate our approach in simulation and on hardware using a 1/10th scale rally car with lidar.

A Small Form Factor Aerial Research Vehicle for Pick-and-Place Tasks with Onboard Real-Time Object Detection and Visual Odometry

Aug 02, 2023

Abstract: This paper introduces a novel, small form-factor, aerial vehicle research platform for agile object detection, classification, tracking, and interaction tasks. General-purpose hardware components were designed to augment a given aerial vehicle and enable it to perform safe and reliable grasping. These components include a custom collision-tolerant cage and low-cost Gripper Extension Package, which we call GREP, for object grasping. Small vehicles enable applications in highly constrained environments, but are often limited by computational resources. This work evaluates the challenges of pick-and-place tasks, with entirely onboard computation of object pose and visual-odometry-based state estimation on a small platform, and demonstrates experiments with enough accuracy to reliably grasp objects. In a total of 70 trials across challenging cases such as cluttered environments, obstructed targets, and multiple instances of the same target, we demonstrated successfully grasping the target in 93% of trials. Both the hardware component designs and software framework are released as open-source, since our intention is to enable easy reproduction and application on a wide range of small vehicles.

Micromanipulation in Surgery: Autonomous Needle Insertion Inside the Eye for Targeted Drug Delivery

Jun 30, 2023

Abstract: We consider a micromanipulation problem in eye surgery, specifically retinal vein cannulation (RVC). RVC involves inserting a microneedle into a retinal vein for the purpose of targeted drug delivery. The procedure requires accurately guiding a needle to a target vein and inserting it while avoiding damage to the surrounding tissues. RVC can be considered similar to the reach or push task studied in robotics manipulation, but with additional constraints related to precision and safety while interacting with soft tissues. Prior works have mainly focused on developing robotic hardware and sensors to enhance the surgeons' accuracy, leaving the automation of RVC largely unexplored. In this paper, we present the first autonomous strategy for RVC while relying on a minimal setup: a robotic arm, a needle, and monocular images. Our system exclusively relies on monocular vision to achieve precise navigation, gentle placement on the target vein, and safe insertion without causing tissue damage. Throughout the procedure, we employ machine learning for perception and to identify key surgical events such as needle-vein contact and vein punctures. Detecting these events guides our task and motion planning framework, which generates safe trajectories using model predictive control to complete the procedure. We validate our system through 24 successful autonomous trials on 4 cadaveric pig eyes. We show that our system can navigate to target veins within 22 micrometers of XY accuracy and under 35 seconds, and consistently puncture the target vein without causing tissue damage. Preliminary comparison to a human demonstrates the superior accuracy and reliability of our system.

Deep Learning Guided Autonomous Surgery: Guiding Small Needles into Sub-Millimeter Scale Blood Vessels

Jun 16, 2023

Abstract: We propose a general strategy for autonomous guidance and insertion of a needle into a retinal blood vessel. The main challenges underpinning this task are the accurate placement of the needle-tip on the target vein and a careful needle insertion maneuver to avoid double-puncturing the vein, while dealing with challenging kinematic constraints and depth-estimation uncertainty. Following how surgeons perform this task purely based on visual feedback, we develop a system which relies solely on *monocular* visual cues by combining data-driven kinematic and contact estimation, visual-servoing, and model-based optimal control. By relying on both known kinematic models and deep-learning-based perception modules, the system can localize the surgical needle tip and detect needle-tissue interactions and venipuncture events. The outputs from these perception modules are then combined with a motion planning framework that uses visual-servoing and optimal control to cannulate the target vein, while respecting kinematic constraints that consider the safety of the procedure. We demonstrate that we can reliably and consistently perform needle insertion in the domain of retinal surgery, specifically in performing retinal vein cannulation. Using cadaveric pig eyes, we demonstrate that our system can navigate to target veins within 22 µm XY accuracy and perform the entire procedure in less than 35 seconds on average, and all 24 trials performed on 4 pig eyes were successful. A preliminary comparison study against a human operator shows that our system is consistently more accurate and safer, especially during safety-critical needle-tissue interactions. To the best of the authors' knowledge, this work accomplishes a first demonstration of autonomous retinal vein cannulation in a clinically relevant setting using animal tissues.
