Adam Polevoy

Johns Hopkins University Applied Physics Lab

Stratified Topological Autonomy for Long-Range Coordination (STALC)

Mar 13, 2025

Abstract: Achieving unified multi-robot coordination and motion planning in complex environments is a challenging problem. In this paper, we present a hierarchical approach to long-range coordination, which we call Stratified Topological Autonomy for Long-Range Coordination (STALC). In particular, we look at the problem of minimizing visibility to observers and maximizing safety with a multi-robot team navigating through a hazardous environment. At its core, our approach relies on the notion of a dynamic topological graph, where the edge weights vary dynamically based on the locations of the robots in the graph. To create this dynamic topological graph, we evaluate the visibility of the robot team from a discrete set of observer locations (both adversarial and friendly), and construct a topological graph whose edge weights depend on both adversary position and robot team configuration. We then impose temporal constraints on the evolution of those edge weights based on robot team state and use Mixed-Integer Programming (MIP) to generate optimal multi-robot plans through the graph. The visibility information also informs the lower layers of the autonomy stack to plan minimal visibility paths through the environment for the team of robots. Our approach presents methods to reduce the computational complexity for a team of robots that interact and coordinate across the team to accomplish a common goal. We demonstrate our approach in simulated and hardware experiments in forested and urban environments.
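The idea of edge weights that change with the team's configuration can be illustrated with a toy sketch. Everything below is an assumption made for illustration: the node names, the base costs, the notion that a teammate at a covering node halves an edge's cost, and the `DISCOUNT` value are all hypothetical, and the sketch only evaluates a fixed path rather than solving the MIP described in the abstract.

```python
# Toy dynamic topological graph: the cost of an edge depends on where
# teammates currently are. All data here is hypothetical.
BASE_COST = {("A", "B"): 5.0, ("B", "C"): 8.0}

# Assumed rule: a teammate located at one of these nodes "covers" the edge.
OVERWATCH = {("B", "C"): {"A"}}
DISCOUNT = 0.5  # assumed cost multiplier when an edge is covered


def edge_cost(edge, teammate_nodes):
    """Return the edge cost given the nodes currently occupied by teammates."""
    cost = BASE_COST[edge]
    if OVERWATCH.get(edge, set()) & set(teammate_nodes):
        cost *= DISCOUNT
    return cost


def path_cost(path, teammate_nodes):
    """Sum the configuration-dependent edge costs along a node path."""
    return sum(edge_cost((u, v), teammate_nodes) for u, v in zip(path, path[1:]))


print(path_cost(["A", "B", "C"], teammate_nodes=[]))     # 13.0
print(path_cost(["A", "B", "C"], teammate_nodes=["A"]))  # 9.0
```

The same path is cheaper when a teammate holds node `A`, which is the kind of coupling between robots that makes the planning problem joint rather than per-robot.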

Dense Fixed-Wing Swarming using Receding-Horizon NMPC

Feb 06, 2025

Abstract: In this paper, we present an approach for controlling a team of agile fixed-wing aerial vehicles in close proximity to one another. Our approach relies on receding-horizon nonlinear model predictive control (NMPC) to plan maneuvers across an expanded flight envelope to enable inter-agent collision avoidance. To facilitate robust collision avoidance and characterize the likelihood of inter-agent collisions, we compute a statistical bound on the probability of the system leaving a tube around the planned nominal trajectory. Finally, we propose a metric for evaluating highly dynamic swarms and use this metric to evaluate our approach. We successfully demonstrated our approach through both simulation and hardware experiments, and to our knowledge, this is the first time close-quarters swarming has been achieved with physical aerobatic fixed-wing vehicles.

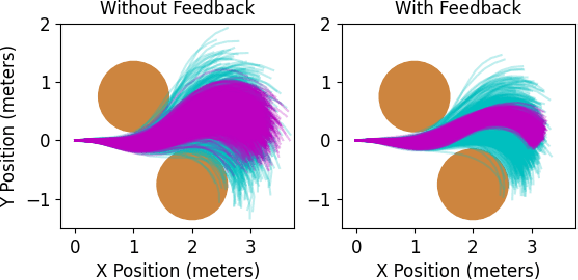

Multi-Agent Feedback Motion Planning using Probably Approximately Correct Nonlinear Model Predictive Control

Jan 21, 2025

Abstract: For many tasks, multi-robot teams often provide greater efficiency, robustness, and resiliency. However, multi-robot collaboration in real-world scenarios poses a number of major challenges, especially when dynamic robots must balance competing objectives like formation control and obstacle avoidance in the presence of stochastic dynamics and sensor uncertainty. In this paper, we propose a distributed, multi-agent receding-horizon feedback motion planning approach using Probably Approximately Correct Nonlinear Model Predictive Control (PAC-NMPC) that is able to reason about both model and measurement uncertainty to achieve robust multi-agent formation control while navigating cluttered obstacle fields and avoiding inter-robot collisions. Our approach relies not only on the underlying PAC-NMPC algorithm but also on a terminal cost function derived from gyroscopic obstacle avoidance. Through numerical simulation, we show that our distributed approach performs on par with a centralized formulation, that it offers improved performance in the case of significant measurement noise, and that it can scale to more complex dynamical systems.

PAC-NMPC with Learned Perception-Informed Value Function

Sep 22, 2023

Abstract: Nonlinear model predictive control (NMPC) is typically restricted to short, finite horizons to limit the computational burden of online optimization. This makes a global planner necessary to avoid local minima when using NMPC for navigation in complex environments. For this reason, the performance of NMPC approaches is often limited by that of the global planner. While control policies trained with reinforcement learning (RL) can theoretically learn to avoid such local minima, they are usually unable to guarantee enforcement of general state constraints. In this paper, we augment a sampling-based stochastic NMPC (SNMPC) approach with an RL-trained perception-informed value function. This allows the system to avoid observable local minima in the environment by reasoning about perception information beyond the finite planning horizon. By using Probably Approximately Correct NMPC (PAC-NMPC) as our base controller, we are also able to generate statistical guarantees of performance and safety. We demonstrate our approach in simulation and on hardware using a 1/10th scale rally car with lidar.

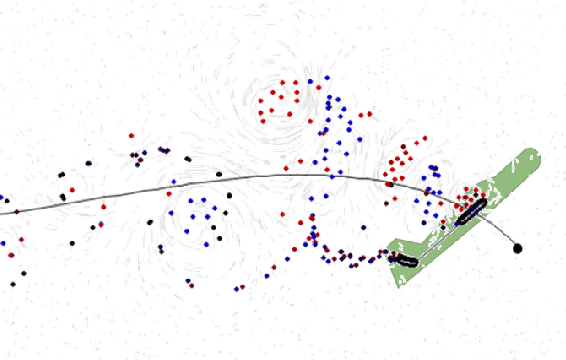

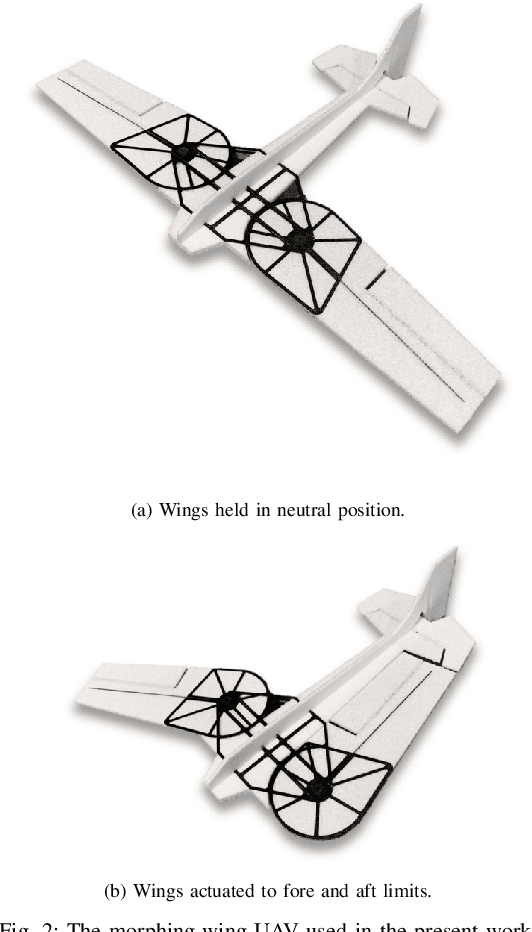

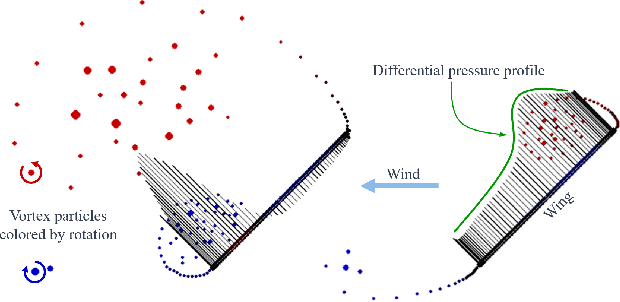

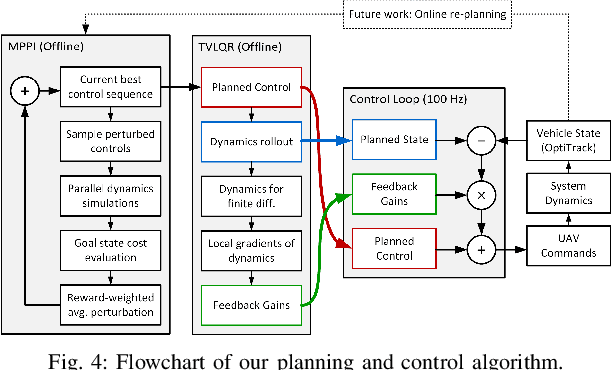

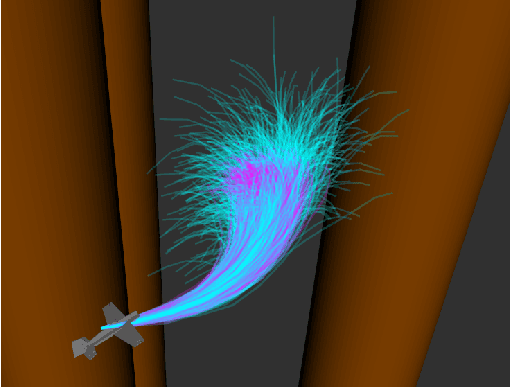

Planning and Control for a Dynamic Morphing-Wing UAV Using a Vortex Particle Model

Jul 05, 2023

Abstract: Achieving precise, highly dynamic maneuvers with Unmanned Aerial Vehicles (UAVs) is a major challenge due to the complexity of the associated aerodynamics. In particular, unsteady effects -- as might be experienced in post-stall regimes or during sudden vehicle morphing -- can have an adverse impact on the performance of modern flight control systems. In this paper, we present a vortex particle model and associated model-based controller capable of reasoning about the unsteady aerodynamics during aggressive maneuvers. We evaluate our approach in hardware on a morphing-wing UAV executing post-stall perching maneuvers. Our results show that the use of the unsteady aerodynamics model improves performance during both fixed-wing and dynamic-wing perching, while the use of wing-morphing planned with quasi-steady aerodynamics results in reduced performance. While the focus of this paper is a pre-computed control policy, we believe that, with sufficient computational resources, our approach could enable online planning in the future.

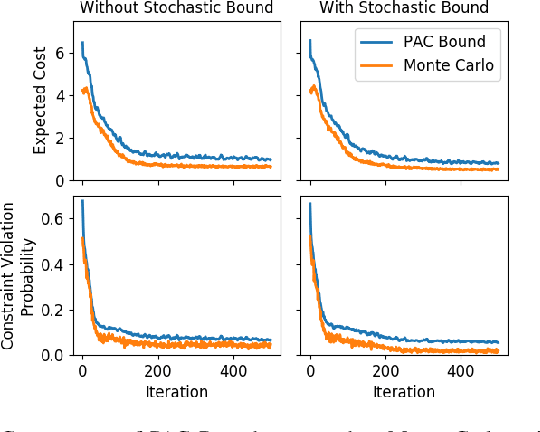

Probably Approximately Correct Nonlinear Model Predictive Control (PAC-NMPC)

Oct 14, 2022

Abstract: Approaches for stochastic nonlinear model predictive control (SNMPC) typically make restrictive assumptions about the system dynamics and rely on approximations to characterize the evolution of the underlying uncertainty distributions. For this reason, they are often unable to capture more complex distributions (e.g., non-Gaussian or multi-modal) and cannot provide accurate guarantees of performance. In this paper, we present a sampling-based SNMPC approach that leverages recently derived sample complexity bounds to certify the performance of a feedback policy without making assumptions about the system dynamics or underlying uncertainty distributions. By parallelizing our approach, we are able to demonstrate real-time receding-horizon SNMPC with statistical safety guarantees in simulation on a 24-inch wingspan fixed-wing UAV and on hardware using a 1/10th scale rally car.
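As a rough illustration of what a sample-based statistical guarantee can look like, the sketch below estimates a constraint-violation probability from Monte Carlo rollouts and adds a one-sided Hoeffding margin. This is a swapped-in, textbook bound and not the one used in the paper, which the abstract does not specify. The toy scalar dynamics, the function names, and all parameter values are assumptions chosen only for the example.

```python
import math
import random


def hoeffding_upper_bound(violations, n_samples, delta):
    """Upper bound on the true violation probability that holds with
    probability at least 1 - delta (one-sided Hoeffding inequality)."""
    empirical = violations / n_samples
    margin = math.sqrt(math.log(1.0 / delta) / (2.0 * n_samples))
    return min(1.0, empirical + margin)


def rollout_violates(rng, horizon=20, limit=1.0, noise_std=0.05):
    """Hypothetical toy system x_{t+1} = 0.9 * x_t + w_t with Gaussian noise.
    Returns True if |x| ever exceeds the assumed state limit."""
    x = 0.0
    for _ in range(horizon):
        x = 0.9 * x + rng.gauss(0.0, noise_std)
        if abs(x) > limit:
            return True
    return False


rng = random.Random(0)
n = 2000
violations = sum(rollout_violates(rng) for _ in range(n))
bound = hoeffding_upper_bound(violations, n, delta=0.01)
print(f"empirical rate = {violations / n:.4f}, upper bound = {bound:.4f}")
```

The bound makes no assumption about the shape of the noise distribution, only that rollouts are independent samples, which mirrors the distribution-free flavor of the guarantees described above. Its margin shrinks as the square root of the sample count, so tighter certificates require many more rollouts.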

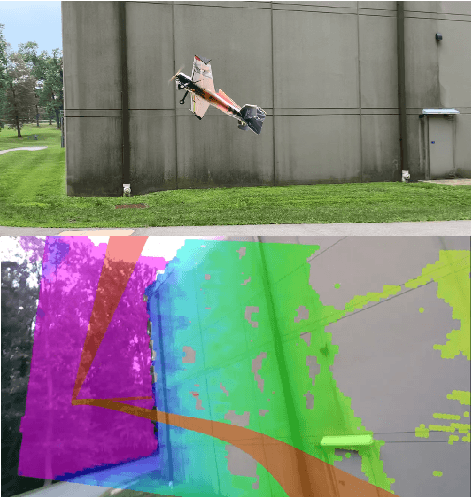

Post-Stall Navigation with Fixed-Wing UAVs using Onboard Vision

Jan 04, 2022

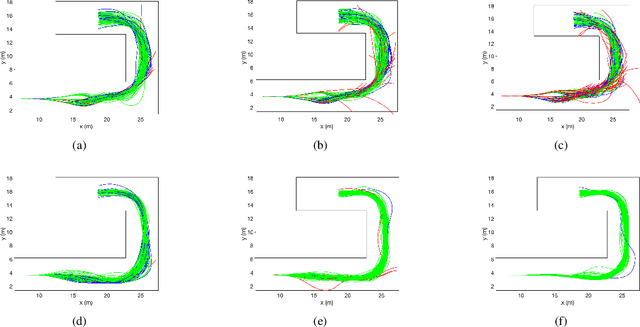

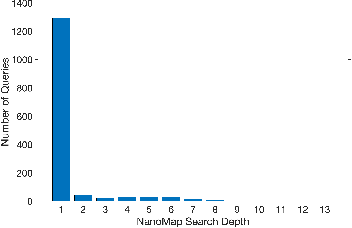

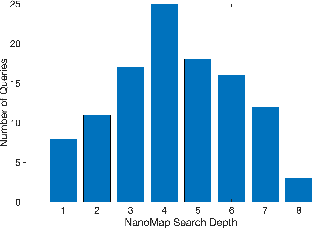

Abstract: Recent research has enabled fixed-wing unmanned aerial vehicles (UAVs) to maneuver in constrained spaces through the use of direct nonlinear model predictive control (NMPC). However, this approach has been limited to a priori known maps and ground truth state measurements. In this paper, we present a direct NMPC approach that leverages NanoMap, a light-weight point-cloud mapping framework, to generate collision-free trajectories using onboard stereo vision. We first explore our approach in simulation and demonstrate that our algorithm is sufficient to enable vision-based navigation in urban environments. We then demonstrate our approach in hardware using a 42-inch fixed-wing UAV and show that our motion planning algorithm is capable of navigating around a building using a minimalistic set of goal-points. We also show that storing a point-cloud history is important for navigating these types of constrained environments.

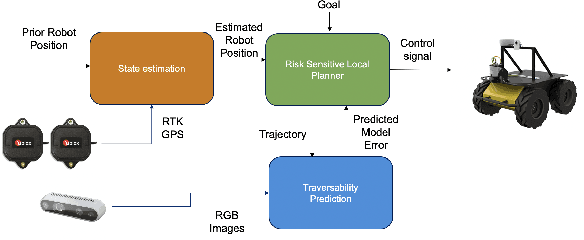

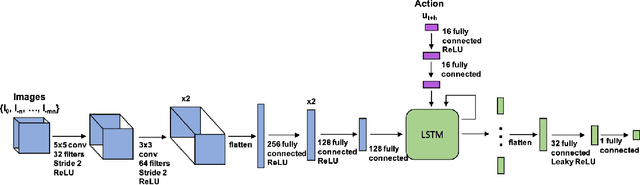

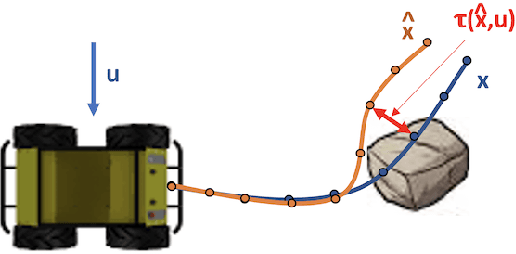

Complex Terrain Navigation via Model Error Prediction

Nov 18, 2021

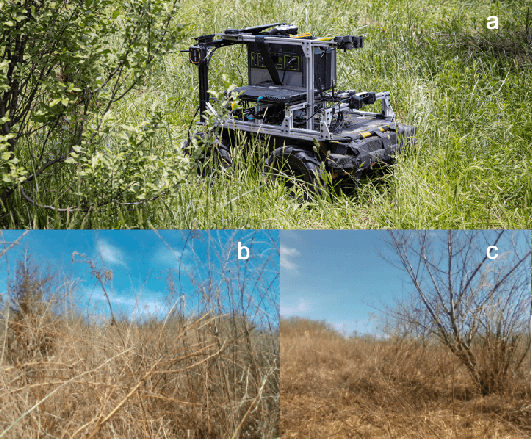

Abstract: Robot navigation traditionally relies on building an explicit map that is used to plan collision-free trajectories to a desired target. In deformable, complex terrain, using geometric-based approaches can fail to find a path due to mischaracterizing deformable objects as rigid and impassable. Instead, we learn to predict an estimate of traversability of terrain regions and to prefer regions that are easier to navigate (e.g., short grass over small shrubs). Rather than predicting collisions, we instead regress on realized error compared to a canonical dynamics model. We train with an on-policy approach, resulting in successful navigation policies using as little as 50 minutes of training data split across simulation and real world. Our learning-based navigation system is a sample-efficient short-term planner that we demonstrate on a Clearpath Husky navigating through a variety of terrain including grassland and forest.

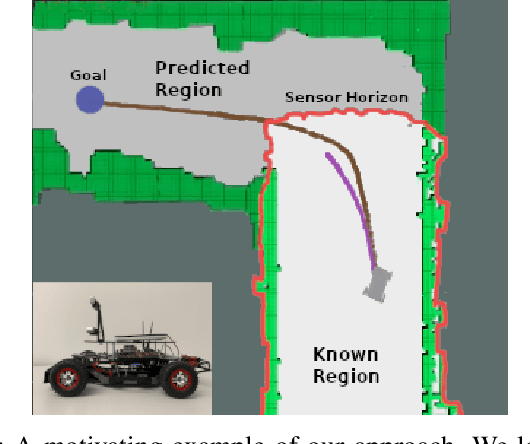

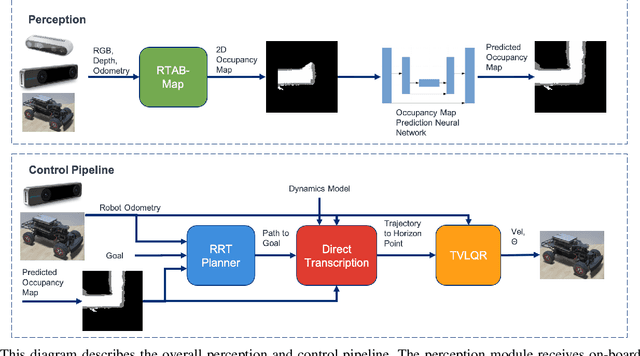

High-Speed Robot Navigation using Predicted Occupancy Maps

Dec 22, 2020

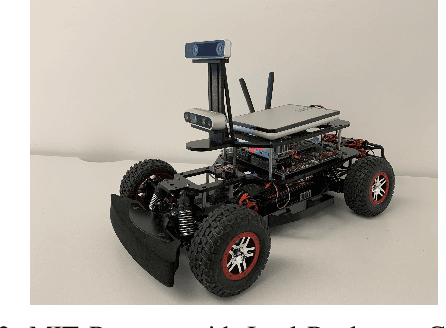

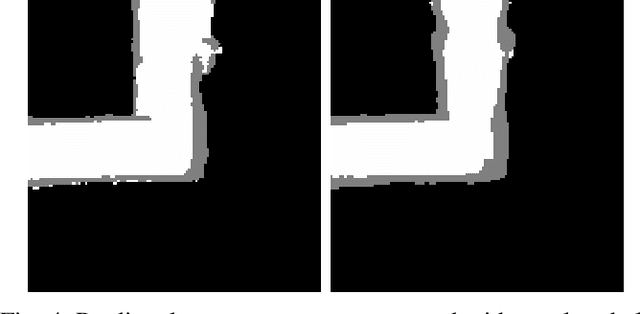

Abstract: Safe and high-speed navigation is a key enabling capability for real-world deployment of robotic systems. A significant limitation of existing approaches is the computational bottleneck associated with explicit mapping and the limited field of view (FOV) of existing sensor technologies. In this paper, we study algorithmic approaches that allow the robot to predict spaces extending beyond the sensor horizon for robust planning at high speeds. We accomplish this using a generative neural network trained from real-world data without requiring human-annotated labels. Further, we extend our existing control algorithms to support leveraging the predicted spaces to improve collision-free planning and navigation at high speeds. Our experiments are conducted on a physical robot based on the MIT race car using an RGBD sensor, where we were able to demonstrate improved performance at 4 m/s compared to a controller not operating on predicted regions of the map.
