Craig Knuth

Johns Hopkins University Applied Physics Lab

Rapid Co-design of Task-Specialized Whegged Robots for Ad-Hoc Needs

May 08, 2024Abstract:In this work, we investigate the use of co-design methods to iterate upon robot designs in the field, performing time sensitive, ad-hoc tasks. Our method optimizes the morphology and wheg trajectory for a MiniRHex robot, producing 3D printable structures and leg trajectory parameters. Tested in four terrains, we show that robots optimized in simulation exhibit strong sim-to-real transfer and are nearly twice as efficient as the nominal platform when tested in hardware.

Generative Planning with Fast Collision Checks for High Speed Navigation

May 07, 2024

Abstract:Reasoning about large numbers of diverse plans to achieve high speed navigation in cluttered environments remains a challenge for robotic systems even in the case of perfect perceptual information. Often, this is tackled by methods that iteratively optimize around a prior seeded trajectory and consequently restrict to local optima. We present a novel planning method using normalizing flows (NFs) to encode expert-styled motion primitives. We also present an accelerated collision checking framework that enables rejecting samples from the prior distribution before running them through the NF model for rapid sampling of collision-free trajectories. The choice of an NF as the generator permits a flexible way to encode diverse multi-modal behavior distributions while maintaining a smooth relation to the input space which allows approximating collision checks on NF inputs rather than outputs. We show comparable performance to model predictive path integral control in random cluttered environments and improved exit rates in a cul-de-sac environment. We conclude by discussing our plans for future work to improve both safety and performance of our controller.

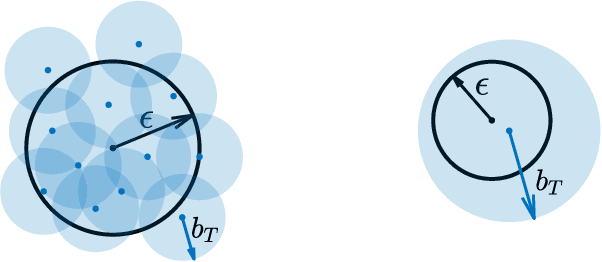

Statistical Safety and Robustness Guarantees for Feedback Motion Planning of Unknown Underactuated Stochastic Systems

Dec 13, 2022Abstract:We present a method for providing statistical guarantees on runtime safety and goal reachability for integrated planning and control of a class of systems with unknown nonlinear stochastic underactuated dynamics. Specifically, given a dynamics dataset, our method jointly learns a mean dynamics model, a spatially-varying disturbance bound that captures the effect of noise and model mismatch, and a feedback controller based on contraction theory that stabilizes the learned dynamics. We propose a sampling-based planner that uses the mean dynamics model and simultaneously bounds the closed-loop tracking error via a learned disturbance bound. We employ techniques from Extreme Value Theory (EVT) to estimate, to a specified level of confidence, several constants which characterize the learned components and govern the size of the tracking error bound. This ensures plans are guaranteed to be safely tracked at runtime. We validate that our guarantees translate to empirical safety in simulation on a 10D quadrotor, and in the real world on a physical CrazyFlie quadrotor and Clearpath Jackal robot, whereas baselines that ignore the model error and stochasticity are unsafe.

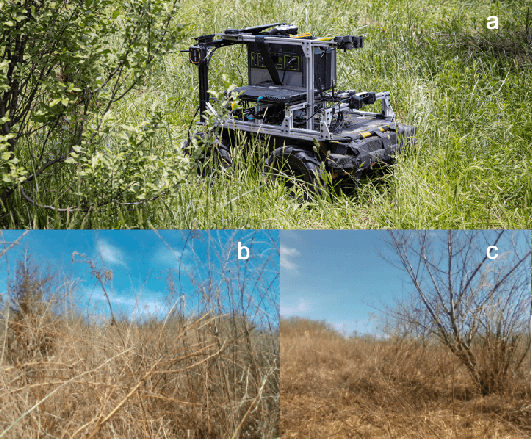

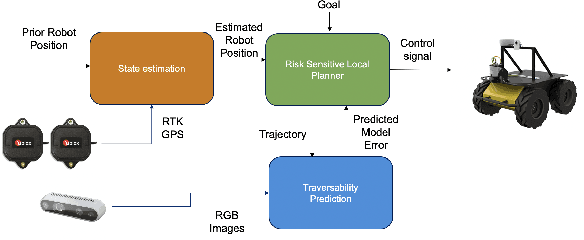

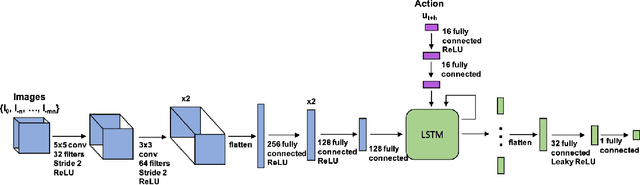

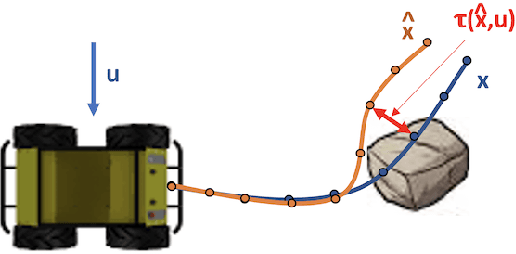

Complex Terrain Navigation via Model Error Prediction

Nov 18, 2021

Abstract:Robot navigation traditionally relies on building an explicit map that is used to plan collision-free trajectories to a desired target. In deformable, complex terrain, using geometric-based approaches can fail to find a path due to mischaracterizing deformable objects as rigid and impassable. Instead, we learn to predict an estimate of traversability of terrain regions and to prefer regions that are easier to navigate (e.g., short grass over small shrubs). Rather than predicting collisions, we instead regress on realized error compared to a canonical dynamics model. We train with an on-policy approach, resulting in successful navigation policies using as little as 50 minutes of training data split across simulation and real world. Our learning-based navigation system is a sample efficient short-term planner that we demonstrate on a Clearpath Husky navigating through a variety of terrain including grassland and forest

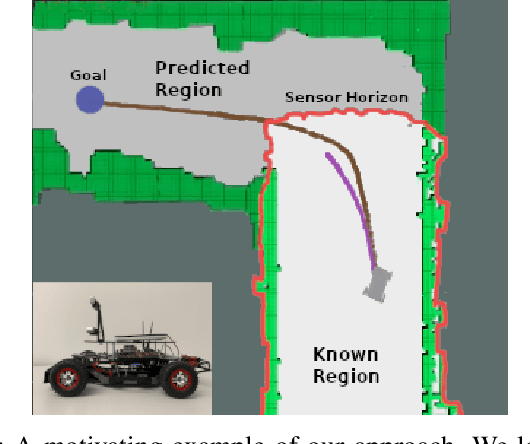

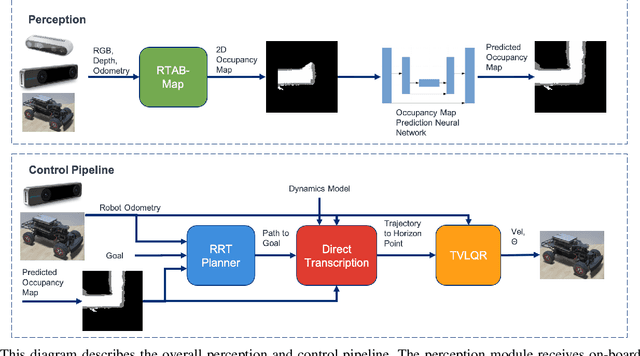

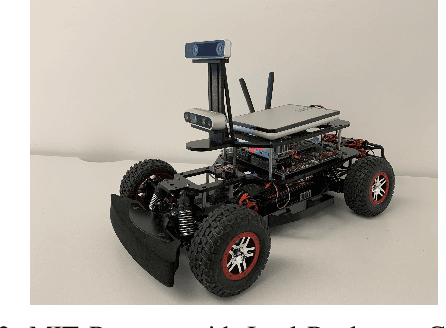

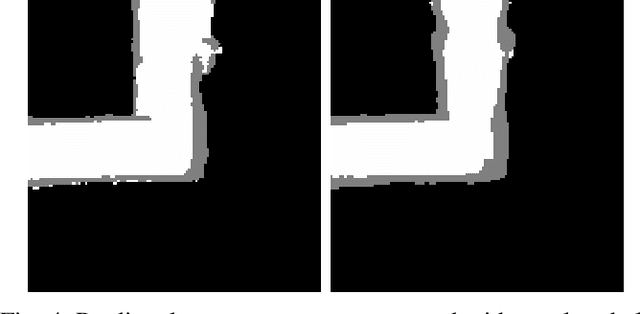

High-Speed Robot Navigation using Predicted Occupancy Maps

Dec 22, 2020

Abstract:Safe and high-speed navigation is a key enabling capability for real world deployment of robotic systems. A significant limitation of existing approaches is the computational bottleneck associated with explicit mapping and the limited field of view (FOV) of existing sensor technologies. In this paper, we study algorithmic approaches that allow the robot to predict spaces extending beyond the sensor horizon for robust planning at high speeds. We accomplish this using a generative neural network trained from real-world data without requiring human annotated labels. Further, we extend our existing control algorithms to support leveraging the predicted spaces to improve collision-free planning and navigation at high speeds. Our experiments are conducted on a physical robot based on the MIT race car using an RGBD sensor where were able to demonstrate improved performance at 4 m/s compared to a controller not operating on predicted regions of the map.

Planning with Learned Dynamics: Guaranteed Safety and Reachability via Lipschitz Constants

Oct 18, 2020

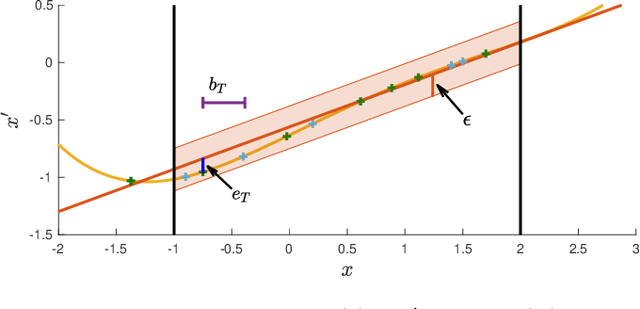

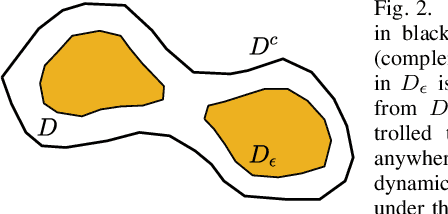

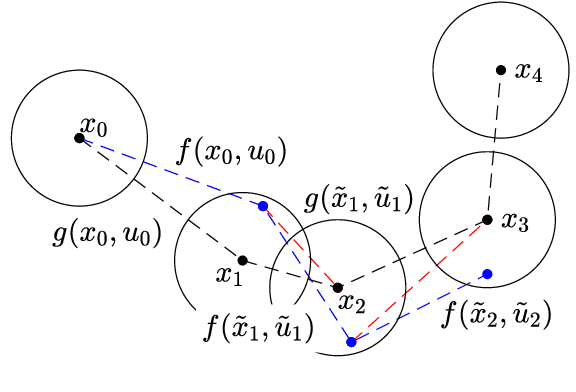

Abstract:We present an approach for feedback motion planning of systems with unknown dynamics which provides guarantees on safety, reachability, and stability about the goal. Given a learned control-affine approximation of the true dynamics, we estimate the Lipschitz constant of the difference between the true and learned dynamics to determine a trusted domain for our learned model. Provided the system has at least as many controls as states, we further derive the conditions under which a one-step feedback law exists. This allows fora small bound on the tracking error when the trajectory is executed on the real system. Our method imposes a check for the existence of the feedback law as constraints in a sampling-based planner, which returns a feedback policy ensuring that under the true dynamics, the goal is reachable, the path is safe in execution, and the closed-loop system is invariant in a small set about the goal. We demonstrate our approach by planning using learned models of a 6D quadrotor and a 7DOF Kuka arm.We show that a baseline which plans using the same learned dynamics without considering the error bound or the existence of the feedback law can fail to stabilize around the plan and become unsafe.

Inferring Obstacles and Path Validity from Visibility-Constrained Demonstrations

May 11, 2020

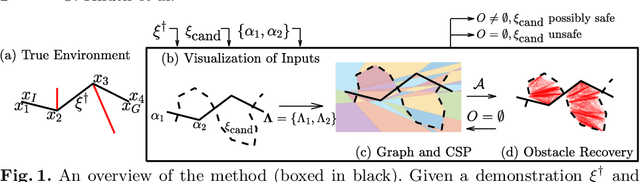

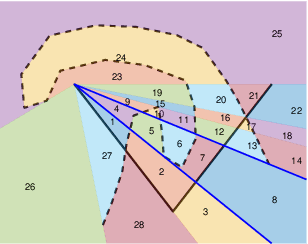

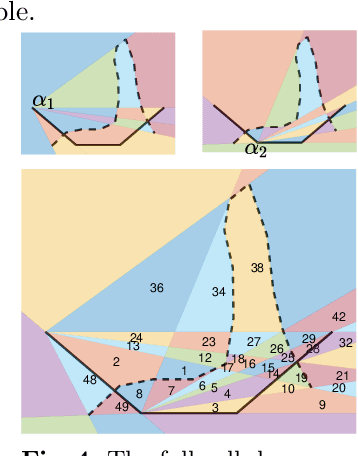

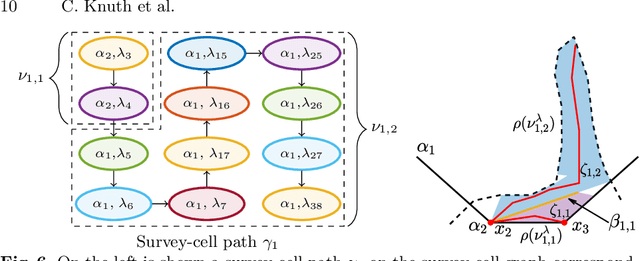

Abstract:Many methods in learning from demonstration assume that the demonstrator has knowledge of the full environment. However, in many scenarios, a demonstrator only sees part of the environment and they continuously replan as they gather information. To plan new paths or to reconstruct the environment, we must consider the visibility constraints and replanning process of the demonstrator, which, to our knowledge, has not been done in previous work. We consider the problem of inferring obstacle configurations in a 2D environment from demonstrated paths for a point robot that is capable of seeing in any direction but not through obstacles. Given a set of \textit{survey points}, which describe where the demonstrator obtains new information, and a candidate path, we construct a Constraint Satisfaction Problem (CSP) on a cell decomposition of the environment. We parameterize a set of obstacles corresponding to an assignment from the CSP and sample from the set to find valid environments. We show that there is a probabilistically-complete, yet not entirely tractable, algorithm that can guarantee novel paths in the space are unsafe or possibly safe. We also present an incomplete, but empirically-successful, heuristic-guided algorithm that we apply in our experiments to 1) planning novel paths and 2) recovering a probabilistic representation of the environment.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge