Necmiye Ozay

Safe and Optimal Learning from Preferences via Weighted Temporal Logic with Applications in Robotics and Formula 1

Nov 11, 2025

Abstract:Autonomous systems increasingly rely on human feedback to align their behavior, expressed as pairwise comparisons, rankings, or demonstrations. While existing methods can adapt behaviors, they often fail to guarantee safety in safety-critical domains. We propose a safety-guaranteed, optimal, and efficient approach to solve the learning problem from preferences, rankings, or demonstrations using Weighted Signal Temporal Logic (WSTL). WSTL learning problems, when implemented naively, lead to multi-linear constraints in the weights to be learned. By introducing structural pruning and log-transform procedures, we reduce the problem size and recast the problem as a Mixed-Integer Linear Program while preserving safety guarantees. Experiments on robotic navigation and real-world Formula 1 data demonstrate that the method effectively captures nuanced preferences and models complex task objectives.
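To illustrate why a log-transform can help with multi-linear constraints, the following minimal sketch compares a constraint written as a product of weights with the same constraint written in log-weights. It assumes strictly positive weights and coefficients; the function names and the specific constraint `w1 * w2 * a > w3 * b` are hypothetical and are not taken from the paper, whose pruning and MILP encoding are not reproduced here.

```python
import math

def holds_multilinear(w1: float, w2: float, w3: float, a: float, b: float) -> bool:
    # Constraint with a product of unknown weights: w1 * w2 * a > w3 * b.
    return w1 * w2 * a > w3 * b

def holds_log_linear(z1: float, z2: float, z3: float, a: float, b: float) -> bool:
    # Same constraint in z_i = log(w_i): it is linear in (z1, z2, z3).
    # Assumes a > 0 and b > 0 so that the logarithms are defined.
    return z1 + z2 + math.log(a) > z3 + math.log(b)

# Hypothetical sample points (w1, w2, w3) with a = b = 1.
samples = [(2.0, 3.0, 4.0), (1.0, 1.0, 4.0), (0.5, 8.0, 3.0)]
agreement = [
    holds_multilinear(w1, w2, w3, 1.0, 1.0)
    == holds_log_linear(math.log(w1), math.log(w2), math.log(w3), 1.0, 1.0)
    for (w1, w2, w3) in samples
]
```

Because the logarithm is strictly increasing, both forms accept and reject the same positive weight vectors, but only the second is linear in the unknowns.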

Efficient Reward Identification In Max Entropy Reinforcement Learning with Sparsity and Rank Priors

Aug 10, 2025

Abstract:In this paper, we consider the problem of recovering time-varying reward functions from either optimal policies or demonstrations coming from a max entropy reinforcement learning problem. This problem is highly ill-posed without additional assumptions on the underlying rewards. However, in many applications, the rewards are indeed parsimonious, and some prior information is available. We consider two such priors on the rewards: 1) rewards are mostly constant and they change infrequently, 2) rewards can be represented by a linear combination of a small number of feature functions. We first show that the reward identification problem with the former prior can be recast as a sparsification problem subject to linear constraints. Moreover, we give a polynomial-time algorithm that solves this sparsification problem exactly. Then, we show that identifying rewards representable with the minimum number of features can be recast as a rank minimization problem subject to linear constraints, for which convex relaxations of rank can be invoked. In both cases, these observations lead to efficient optimization-based reward identification algorithms. Several examples are given to demonstrate the accuracy of the recovered rewards as well as their generalizability.

Learning Reward Machines from Partially Observed Optimal Policies

Feb 06, 2025

Abstract:Inverse reinforcement learning is the problem of inferring a reward function from an optimal policy. In this work, it is assumed that the reward is expressed as a reward machine whose transitions depend on atomic propositions associated with the state of a Markov Decision Process (MDP). Our goal is to identify the true reward machine using finite information. To this end, we first introduce the notion of a prefix tree policy which associates a distribution of actions to each state of the MDP and each attainable finite sequence of atomic propositions. Then, we characterize an equivalence class of reward machines that can be identified given the prefix tree policy. Finally, we propose a SAT-based algorithm that uses information extracted from the prefix tree policy to solve for a reward machine. It is proved that if the prefix tree policy is known up to a sufficient (but finite) depth, our algorithm recovers the exact reward machine up to the equivalence class. This sufficient depth is derived as a function of the number of MDP states and (an upper bound on) the number of states of the reward machine. Several examples are used to demonstrate the effectiveness of the approach.
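As a rough illustration of the object being learned, a reward machine can be sketched as a finite-state machine that reads sets of atomic propositions and emits rewards. The class below is a minimal, hypothetical encoding (its name, the zero-reward self-loop default, and the example task are assumptions, not the paper's construction), and it does not implement the SAT-based identification algorithm.

```python
from typing import Dict, FrozenSet, Iterable, List, Tuple

Label = FrozenSet[str]  # set of atomic propositions true at the current MDP state

class RewardMachine:
    """Finite-state machine whose transitions are triggered by labels."""

    def __init__(self, initial_state: int,
                 transitions: Dict[Tuple[int, Label], Tuple[int, float]]):
        self.initial_state = initial_state
        # Maps (machine state, label) to (next machine state, reward).
        self.transitions = transitions

    def run(self, labels: Iterable[Label]) -> List[float]:
        state = self.initial_state
        rewards = []
        for label in labels:
            # Assumption: unlisted (state, label) pairs are zero-reward self-loops.
            state, reward = self.transitions.get((state, label), (state, 0.0))
            rewards.append(reward)
        return rewards

# Hypothetical task: observe "a" first, then "b", to collect a reward of 1.
A, B, NONE = frozenset({"a"}), frozenset({"b"}), frozenset()
machine = RewardMachine(0, {(0, A): (1, 0.0), (1, B): (2, 1.0)})

in_order = machine.run([NONE, A, NONE, B])
out_of_order = machine.run([B, A])
```

The two runs differ only in the order of the observed propositions, which shows why the reward depends on the history of labels rather than on the current MDP state alone.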

Ankle Exoskeletons May Hinder Standing Balance in Simple Models of Older and Younger Adults

Aug 14, 2024

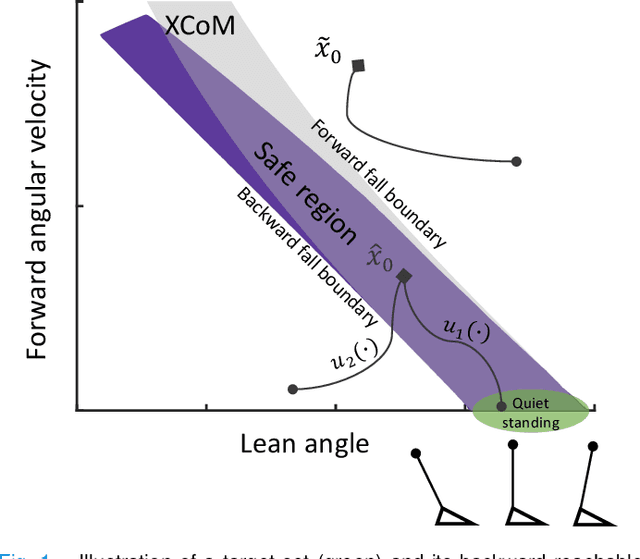

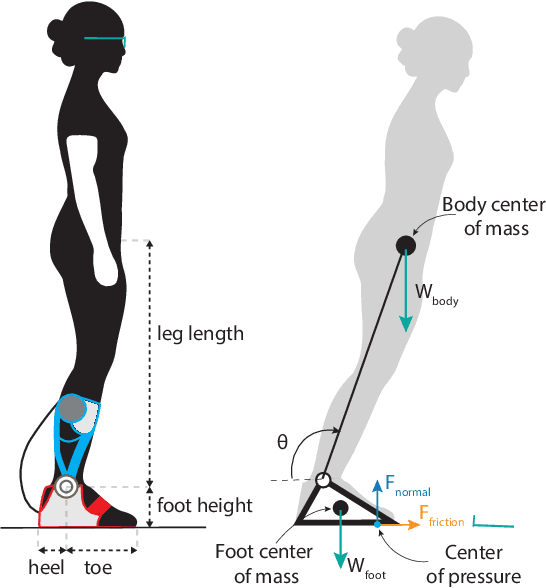

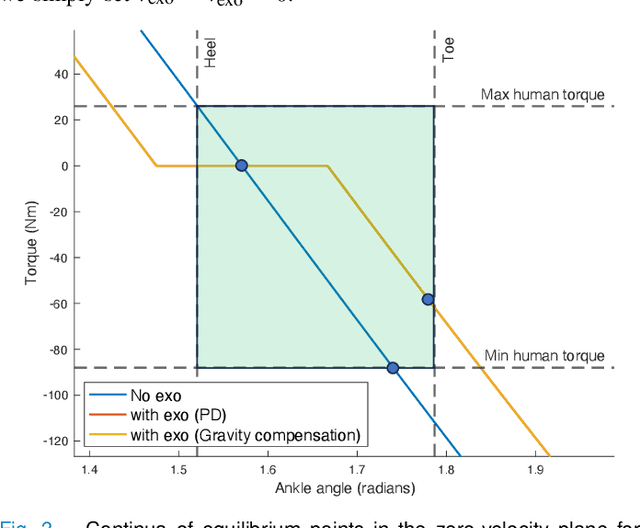

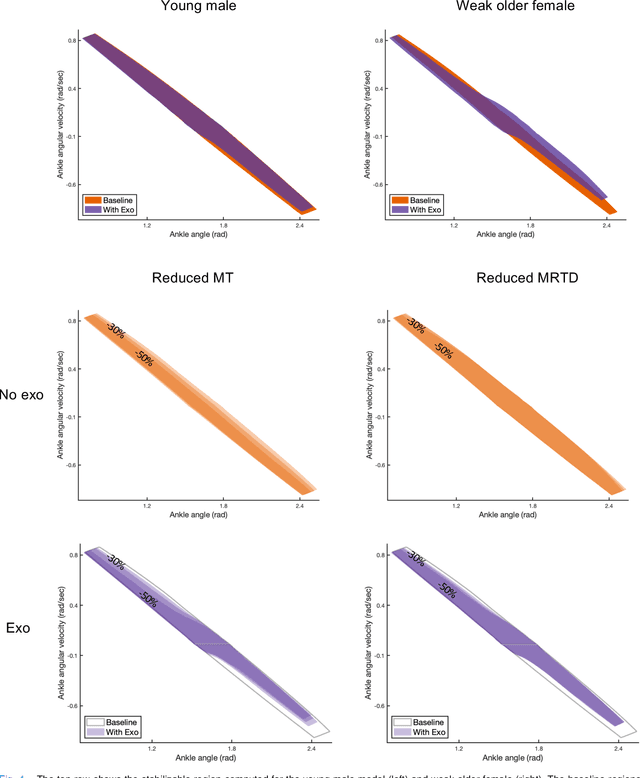

Abstract:Humans rely on ankle torque to maintain standing balance, particularly in the presence of small to moderate perturbations. Reductions in maximum torque (MT) production and maximum rate of torque development (MRTD) occur at the ankle with age, diminishing stability. Ankle exoskeletons are powered orthotic devices that may assist older adults by compensating for reduced muscle force and power production capabilities. They may also be able to assist with ankle strategies used for balance. However, no studies have investigated the effect of such devices on balance in older adults. Here, we model the effect ankle exoskeletons have on stability in physics-based models of healthy young and old adults, focusing on the mitigation of age-related deficits such as reduced MT and MRTD. We show that an ankle exoskeleton moderately reduces feasible stability boundaries in users who have full ankle strength. For individuals with age-related deficits, there is a trade-off. While exoskeletons augment stability in low velocity conditions, they reduce stability in some high velocity conditions. Our results suggest that well-established control strategies must still be experimentally validated in older adults.

On the Hardness of Learning to Stabilize Linear Systems

Nov 18, 2023

Abstract:Inspired by the work of Tsiamis et al. (2022), in this paper we study the statistical hardness of learning to stabilize linear time-invariant systems. Hardness is measured by the number of samples required to achieve a learning task with a given probability. The work of Tsiamis et al. shows that there exist system classes that are hard to learn to stabilize with the core reason being the hardness of identification. Here we present a class of systems that can be easy to identify, thanks to a non-degenerate noise process that excites all modes, but the sample complexity of stabilization still increases exponentially with the system dimension. We tie this result to the hardness of co-stabilizability for this class of systems using ideas from robust control.

A Preference Learning Approach to Develop Safe and Personalizable Autonomous Vehicles

Oct 30, 2023

Abstract:This work introduces a preference learning method that ensures adherence to traffic rules for autonomous vehicles. Our approach incorporates priority ordering of signal temporal logic (STL) formulas, describing traffic rules, into a learning framework. By leveraging the parametric weighted signal temporal logic (PWSTL), we formulate the problem of safety-guaranteed preference learning based on pairwise comparisons, and propose an approach to solve this learning problem. Our approach finds a feasible valuation for the weights of the given PWSTL formula such that, with these weights, preferred signals have weighted quantitative satisfaction measures greater than their non-preferred counterparts. The feasible valuation of weights given by our approach leads to a weighted STL formula which can be used in correct-and-custom-by-construction controller synthesis. We demonstrate the performance of our method with human subject studies in two different simulated driving scenarios involving a stop sign and a pedestrian crossing. Our approach yields competitive results compared to existing preference learning methods in terms of capturing preferences, and notably outperforms them when safety is considered.

Can Transformers Learn Optimal Filtering for Unknown Systems?

Aug 16, 2023

Abstract:Transformers have demonstrated remarkable success in natural language processing; however, their potential remains mostly unexplored for problems arising in dynamical systems. In this work, we investigate the optimal output estimation problem using transformers, which generate output predictions using all the past ones. We train the transformer using various systems drawn from a prior distribution and then evaluate its performance on previously unseen systems from the same distribution. As a result, the obtained transformer acts like a prediction algorithm that learns in-context and quickly adapts to and predicts well for different systems - thus we call it meta-output-predictor (MOP). MOP matches the performance of the optimal output estimator, based on Kalman filter, for most linear dynamical systems even though it does not have access to a model. We observe via extensive numerical experiments that MOP also performs well in challenging scenarios with non-i.i.d. noise, time-varying dynamics, and nonlinear dynamics like a quadrotor system with unknown parameters. To further support this observation, in the second part of the paper, we provide statistical guarantees on the performance of MOP and quantify the required amount of training to achieve a desired excess risk during test-time. Finally, we point out some limitations of MOP by identifying two classes of problems MOP fails to perform well, highlighting the need for caution when using transformers for control and estimation.
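For context on the baseline mentioned above, the following is a minimal sketch of a Kalman-filter-based one-step output predictor for a scalar linear system. It assumes the model x_{t+1} = a x_t + w_t, y_t = c x_t + v_t with known noise variances q and r; the function name and default initial values are hypothetical. Unlike MOP, this predictor needs the model parameters.

```python
from typing import List, Sequence

def kalman_output_predictions(ys: Sequence[float], a: float, c: float,
                              q: float, r: float,
                              x0: float = 0.0, p0: float = 1.0) -> List[float]:
    """Predict each output y_t from the outputs observed before time t."""
    x_pred, p_pred = x0, p0          # prior state mean and variance
    predictions = []
    for y in ys:
        y_hat = c * x_pred           # one-step-ahead output prediction
        predictions.append(y_hat)
        # Measurement update using the newly observed output.
        gain = p_pred * c / (c * c * p_pred + r)
        x_filt = x_pred + gain * (y - y_hat)
        p_filt = (1.0 - gain * c) * p_pred
        # Time update through the known dynamics.
        x_pred = a * x_filt
        p_pred = a * a * p_filt + q
    return predictions

# Constant state (a = 1, q = 0) observed twice with unit measurement noise.
preds = kalman_output_predictions([2.0, 2.0], a=1.0, c=1.0, q=0.0, r=1.0)
```

In this small example the first prediction equals the prior mean, and the second moves halfway toward the first observation because the prior variance and the measurement variance are equal.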

Falsification of a Vision-based Automatic Landing System

Jul 04, 2023

Abstract:At smaller airports without an instrument approach or advanced equipment, automatic landing of aircraft is a safety-critical task that requires the use of sensors present on the aircraft. In this paper, we study falsification of an automatic landing system for fixed-wing aircraft using a camera as its main sensor. We first present an architecture for vision-based automatic landing, including a vision-based runway distance and orientation estimator and an associated PID controller. We then outline landing specifications that we validate with actual flight data. Using these specifications, we propose the use of the falsification tool Breach to find counterexamples to the specifications in the automatic landing system. Our experiments are implemented using a Beechcraft Baron 58 in the X-Plane flight simulator communicating with MATLAB Simulink.

Finite Sample Identification of Bilinear Dynamical Systems

Aug 29, 2022

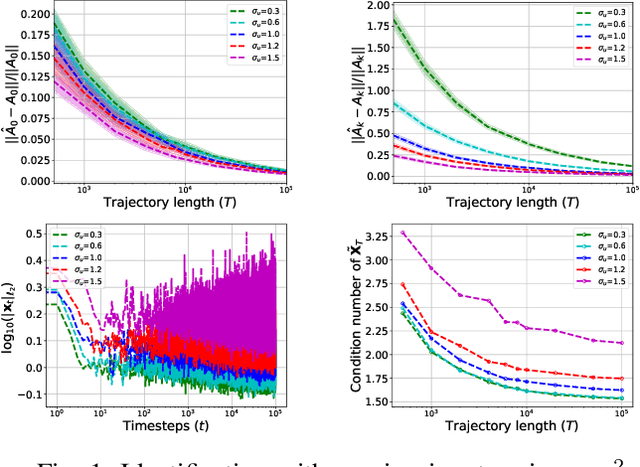

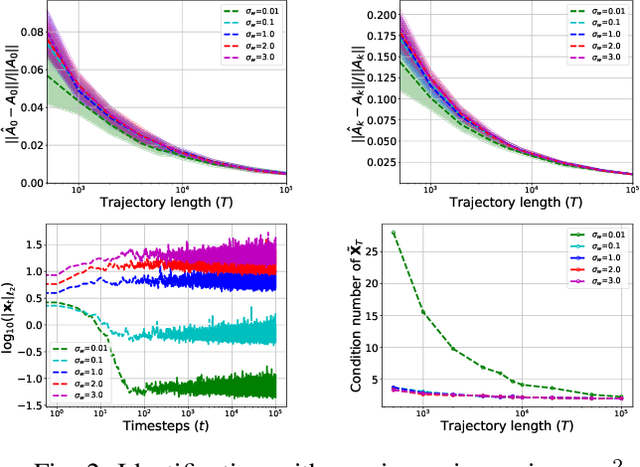

Abstract:Bilinear dynamical systems are ubiquitous in many different domains and they can also be used to approximate more general control-affine systems. This motivates the problem of learning bilinear systems from a single trajectory of the system's states and inputs. Under a mild marginal mean-square stability assumption, we identify how much data is needed to estimate the unknown bilinear system up to a desired accuracy with high probability. Our sample complexity and statistical error rates are optimal in terms of the trajectory length, the dimensionality of the system and the input size. Our proof technique relies on an application of martingale small-ball condition. This enables us to correctly capture the properties of the problem, specifically our error rates do not deteriorate with increasing instability. Finally, we show that numerical experiments are well-aligned with our theoretical results.
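The single-trajectory learning problem can be illustrated with a minimal least-squares sketch for a scalar bilinear system x_{t+1} = a x_t + b u_t + c x_t u_t. Everything here is an assumption made for illustration: the scalar model, the noise-free data, the parameter values, and the use of ordinary least squares, which the abstract does not name as the estimator.

```python
import random
from typing import List, Sequence

def simulate(a: float, b: float, c: float, x0: float,
             us: Sequence[float]) -> List[float]:
    """Roll out x_{t+1} = a*x_t + b*u_t + c*x_t*u_t (noise-free)."""
    xs = [x0]
    for u in us:
        x = xs[-1]
        xs.append(a * x + b * u + c * x * u)
    return xs

def solve_linear(A: List[List[float]], y: List[float]) -> List[float]:
    """Gaussian elimination with partial pivoting for a small square system."""
    n = len(y)
    M = [row[:] + [rhs] for row, rhs in zip(A, y)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda i: abs(M[i][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for i in range(col + 1, n):
            factor = M[i][col] / M[col][col]
            for k in range(col, n + 1):
                M[i][k] -= factor * M[col][k]
    sol = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(M[i][k] * sol[k] for k in range(i + 1, n))
        sol[i] = (M[i][n] - tail) / M[i][i]
    return sol

def fit_bilinear(xs: Sequence[float], us: Sequence[float]) -> List[float]:
    """Least-squares estimate of (a, b, c) via the normal equations."""
    rows = [(x, u, x * u) for x, u in zip(xs[:-1], us)]   # regressors
    targets = xs[1:]                                      # next states
    gram = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    rhs = [sum(r[i] * t for r, t in zip(rows, targets)) for i in range(3)]
    return solve_linear(gram, rhs)

rng = random.Random(0)
us = [rng.uniform(-1.0, 1.0) for _ in range(50)]   # hypothetical input sequence
true_params = (0.5, 1.0, 0.3)                       # hypothetical (a, b, c)
xs = simulate(*true_params, 0.0, us)
estimate = fit_bilinear(xs, us)
```

With noise-free data the estimate matches the true parameters up to floating-point error. The finite-sample question studied in the paper concerns how the estimation error behaves once process noise is present.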

Safe Output Feedback Motion Planning from Images via Learned Perception Modules and Contraction Theory

Jun 14, 2022

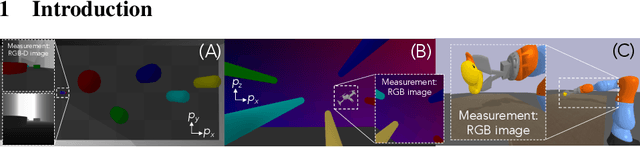

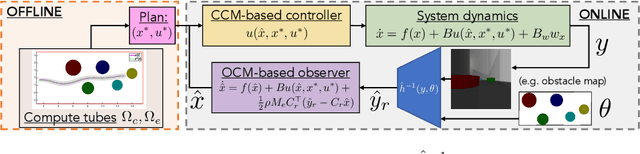

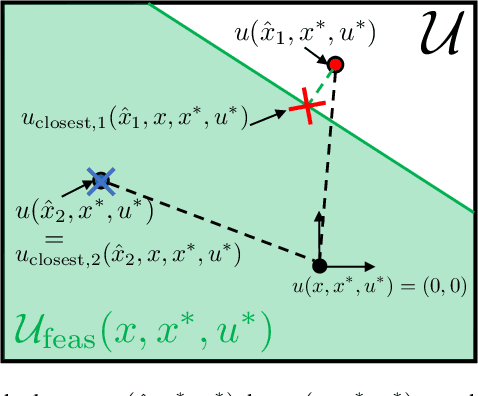

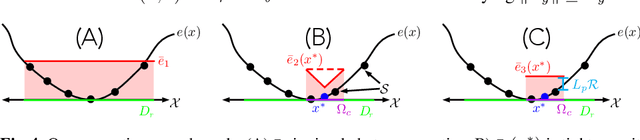

Abstract:We present a motion planning algorithm for a class of uncertain control-affine nonlinear systems which guarantees runtime safety and goal reachability when using high-dimensional sensor measurements (e.g., RGB-D images) and a learned perception module in the feedback control loop. First, given a dataset of states and observations, we train a perception system that seeks to invert a subset of the state from an observation, and estimate an upper bound on the perception error which is valid with high probability in a trusted domain near the data. Next, we use contraction theory to design a stabilizing state feedback controller and a convergent dynamic state observer which uses the learned perception system to update its state estimate. We derive a bound on the trajectory tracking error when this controller is subjected to errors in the dynamics and incorrect state estimates. Finally, we integrate this bound into a sampling-based motion planner, guiding it to return trajectories that can be safely tracked at runtime using sensor data. We demonstrate our approach in simulation on a 4D car, a 6D planar quadrotor, and a 17D manipulation task with RGB(-D) sensor measurements, demonstrating that our method safely and reliably steers the system to the goal, while baselines that fail to consider the trusted domain or state estimation errors can be unsafe.
