Katie M. Popek

Johns Hopkins University Applied Physics Lab

Rapid Co-design of Task-Specialized Whegged Robots for Ad-Hoc Needs

May 08, 2024

Abstract:In this work, we investigate the use of co-design methods to iterate upon robot designs in the field, performing time-sensitive, ad-hoc tasks. Our method optimizes the morphology and wheg trajectory for a MiniRHex robot, producing 3D printable structures and leg trajectory parameters. Tested in four terrains, we show that robots optimized in simulation exhibit strong sim-to-real transfer and are nearly twice as efficient as the nominal platform when tested in hardware.

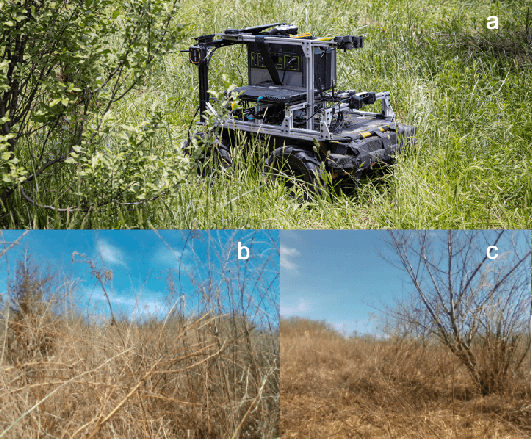

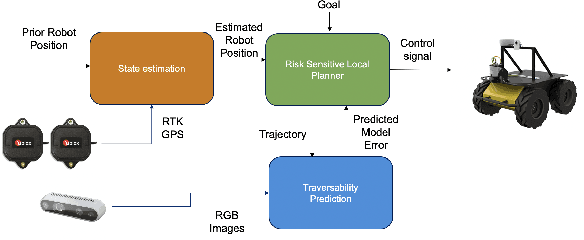

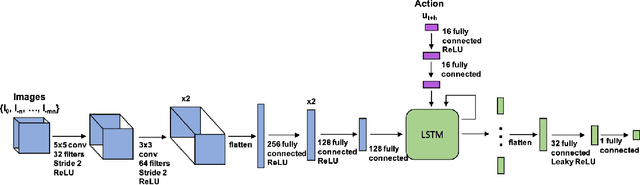

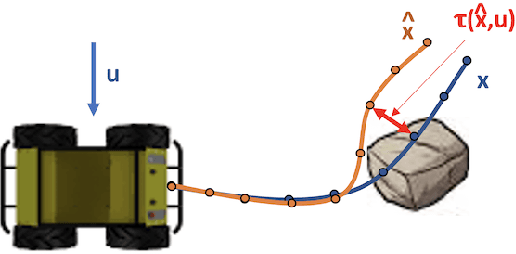

Complex Terrain Navigation via Model Error Prediction

Nov 18, 2021

Abstract:Robot navigation traditionally relies on building an explicit map that is used to plan collision-free trajectories to a desired target. In deformable, complex terrain, geometry-based approaches can fail to find a path because they mischaracterize deformable objects as rigid and impassable. Instead, we learn to predict an estimate of traversability of terrain regions and to prefer regions that are easier to navigate (e.g., short grass over small shrubs). Rather than predicting collisions, we regress on the realized error relative to a canonical dynamics model. We train with an on-policy approach, resulting in successful navigation policies using as little as 50 minutes of training data split across simulation and real world. Our learning-based navigation system is a sample-efficient short-term planner that we demonstrate on a Clearpath Husky navigating through a variety of terrain including grassland and forest.
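As a rough illustration of what a "realized error compared to a canonical dynamics model" regression target could look like, the sketch below computes the position gap between where a simple model predicts the robot will be and where it actually ended up. The abstract does not specify the dynamics model or the error metric, so the unicycle model, the Euclidean position error, and the names `unicycle_step` and `realized_error` are all assumptions made for illustration only.

```python
import math

# Hypothetical canonical model: a planar unicycle (an assumption, not
# necessarily the model used in the paper).
def unicycle_step(state, v, omega, dt):
    """Predict the next (x, y, heading) from linear and angular velocity."""
    x, y, theta = state
    return (
        x + v * math.cos(theta) * dt,
        y + v * math.sin(theta) * dt,
        theta + omega * dt,
    )

def realized_error(state, v, omega, dt, observed_next):
    """Distance between the model's predicted position and the observed one.

    A value like this could serve as a regression label: large errors
    suggest terrain that the nominal model handles poorly.
    """
    pred_x, pred_y, _ = unicycle_step(state, v, omega, dt)
    obs_x, obs_y, _ = observed_next
    return math.hypot(obs_x - pred_x, obs_y - pred_y)

# Example: commanded 1 m/s straight ahead for 1 s, but the robot only
# advanced 0.6 m (for instance, because vegetation slowed it down).
label = realized_error((0.0, 0.0, 0.0), 1.0, 0.0, 1.0, (0.6, 0.0, 0.0))
```

Under these assumptions, terrain where the robot tracks the model closely yields labels near zero, while terrain that resists motion yields larger labels, which a learned predictor could then use to rank candidate regions.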

High-Speed Robot Navigation using Predicted Occupancy Maps

Dec 22, 2020

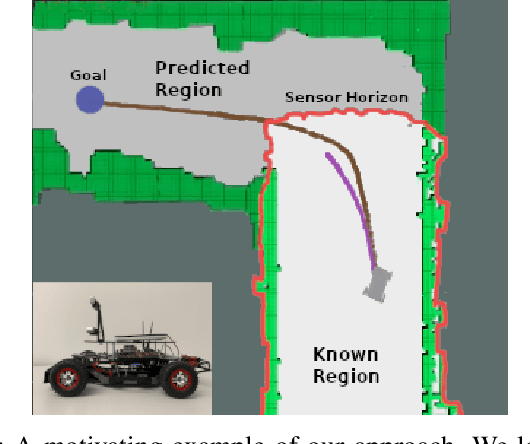

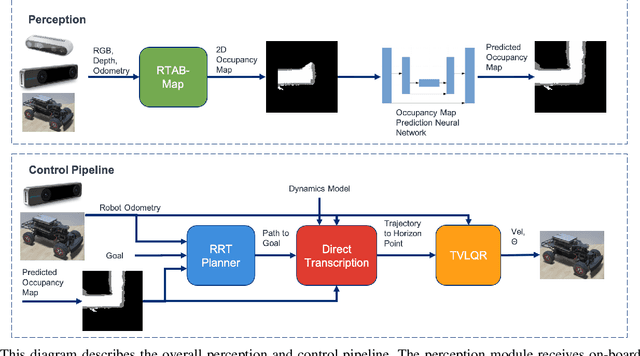

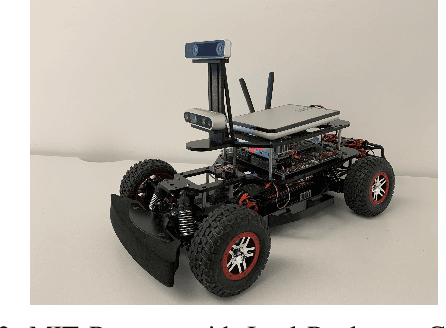

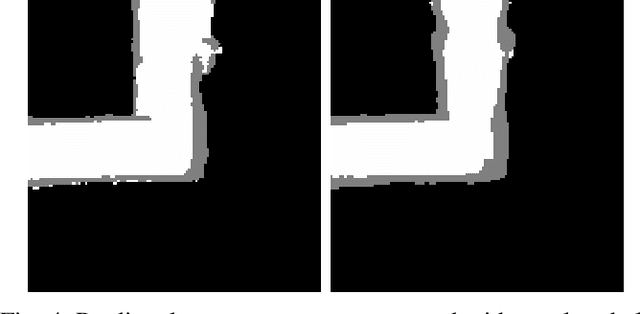

Abstract:Safe and high-speed navigation is a key enabling capability for real-world deployment of robotic systems. A significant limitation of existing approaches is the computational bottleneck associated with explicit mapping and the limited field of view (FOV) of existing sensor technologies. In this paper, we study algorithmic approaches that allow the robot to predict spaces extending beyond the sensor horizon for robust planning at high speeds. We accomplish this using a generative neural network trained from real-world data without requiring human-annotated labels. Further, we extend our existing control algorithms to support leveraging the predicted spaces to improve collision-free planning and navigation at high speeds. Our experiments are conducted on a physical robot based on the MIT race car using an RGBD sensor, where we were able to demonstrate improved performance at 4 m/s compared to a controller not operating on predicted regions of the map.

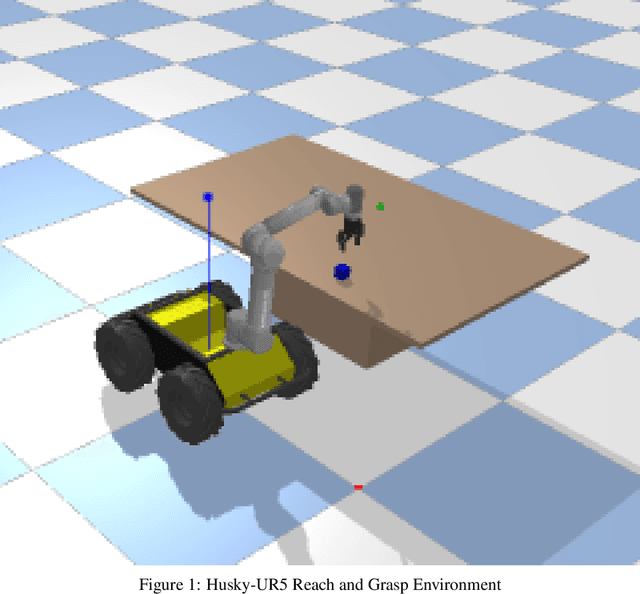

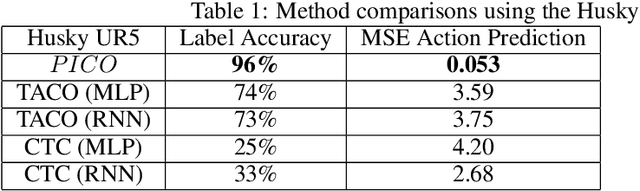

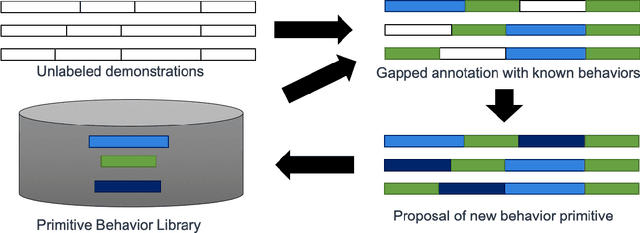

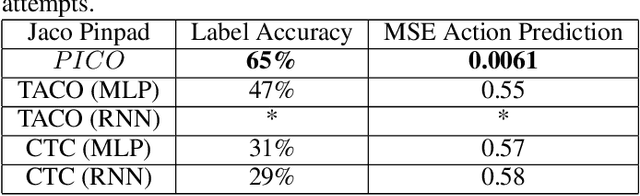

PICO: Primitive Imitation for COntrol

Jun 22, 2020

Abstract:In this work, we explore a novel framework for control of complex systems called Primitive Imitation for Control (PICO). The approach combines ideas from imitation learning, task decomposition, and novel task sequencing to generalize from demonstrations to new behaviors. Demonstrations are automatically decomposed into existing or missing sub-behaviors, which allows the framework to identify novel behaviors while not duplicating existing behaviors. Generalization to new tasks is achieved through dynamic blending of behavior primitives. We evaluated the approach using demonstrations from two different robotic platforms. The experimental results show that PICO is able to detect the presence of a novel behavior primitive and build the missing control policy.
