Post-Stall Navigation with Fixed-Wing UAVs using Onboard Vision


Jan 04, 2022

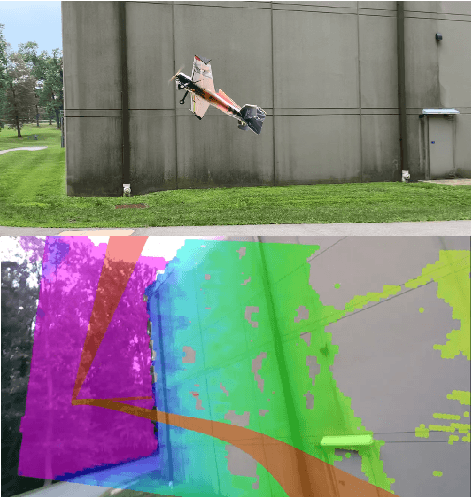

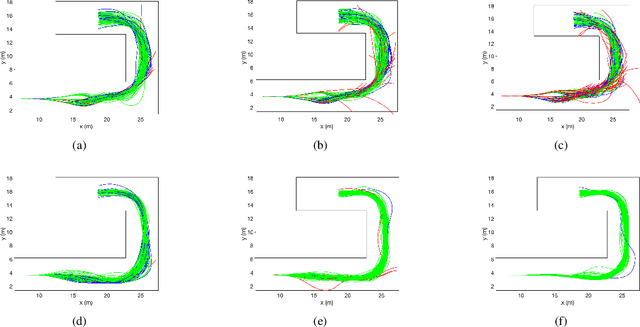

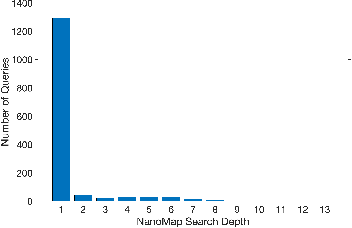

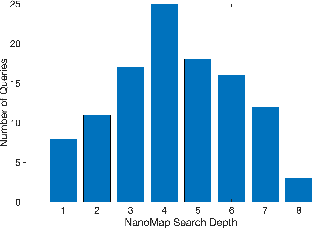

Recent research has enabled fixed-wing unmanned aerial vehicles (UAVs) to maneuver in constrained spaces through the use of direct nonlinear model predictive control (NMPC). However, this approach has been limited to a priori known maps and ground-truth state measurements. In this paper, we present a direct NMPC approach that leverages NanoMap, a lightweight point-cloud mapping framework, to generate collision-free trajectories using onboard stereo vision. We first explore our approach in simulation and demonstrate that our algorithm is sufficient to enable vision-based navigation in urban environments. We then demonstrate our approach in hardware using a 42-inch fixed-wing UAV and show that our motion planning algorithm is capable of navigating around a building using a minimal set of goal points. We also show that storing a point-cloud history is important for navigating these types of constrained environments.
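To illustrate why a point-cloud history can matter for collision checking, the following is a minimal sketch, not the paper's implementation and not NanoMap's actual API. The names `PointCloudHistory`, `add_frame`, `min_distance`, and `is_collision_free` are hypothetical. The sketch assumes every point is already expressed in a common world frame, which sidesteps the pose uncertainty a real system has to handle, and it uses a brute-force distance search for clarity.

```python
from collections import deque
from math import dist, inf


class PointCloudHistory:
    """Rolling buffer of recent point clouds (hypothetical helper, not NanoMap's API)."""

    def __init__(self, max_frames):
        # Oldest frames are discarded automatically once the buffer is full.
        self.frames = deque(maxlen=max_frames)

    def add_frame(self, points_world):
        # Assumption: points are (x, y, z) tuples already in the world frame.
        self.frames.append(list(points_world))

    def min_distance(self, query):
        # Brute-force nearest-obstacle distance over every stored frame.
        best = inf
        for frame in self.frames:
            for point in frame:
                best = min(best, dist(point, query))
        return best


def is_collision_free(waypoints, history, clearance):
    # A trajectory passes if every waypoint keeps at least `clearance` from all stored points.
    return all(history.min_distance(w) >= clearance for w in waypoints)


# An obstacle seen in an earlier frame, then lost from view in the latest frame.
wall_corner = [(5.0, 0.0, 2.0)]
open_sky = [(50.0, 30.0, 2.0)]
path = [(0.0, 0.0, 2.0), (2.5, 0.0, 2.0), (5.0, 0.5, 2.0)]

with_history = PointCloudHistory(max_frames=10)
latest_only = PointCloudHistory(max_frames=1)
for frame in (wall_corner, open_sky):
    with_history.add_frame(frame)
    latest_only.add_frame(frame)

print(is_collision_free(path, with_history, clearance=1.0))  # False
print(is_collision_free(path, latest_only, clearance=1.0))   # True
```

With only the latest frame retained, the checker approves a path that passes within 0.5 m of a previously observed obstacle; with the history retained, the same path is rejected. A narrow camera field of view makes this situation common when turning around building corners.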
