Kimberly McGuire

Survey of Simulators for Aerial Robots

Nov 04, 2023

Abstract: Uncrewed Aerial Vehicle (UAV) research faces challenges with safety, scalability, costs, and ecological impact when conducting hardware testing. High-fidelity simulators offer a vital solution by replicating real-world conditions to enable the development and evaluation of novel perception and control algorithms. However, the large number of available simulators makes it difficult for researchers to determine which simulator best suits their specific use case, given each simulator's limitations and readiness for customization. This paper analyzes existing UAV simulators and the decision factors for their selection, aiming to enhance the efficiency and safety of research endeavors.

Lighthouse Positioning System: Dataset, Accuracy, and Precision for UAV Research

Apr 23, 2021

Abstract: The Lighthouse system was originally developed as a tracking system for virtual reality applications. Due to its affordable price, it has also found attractive use cases in robotics in the past. However, existing works frequently rely on the centralized official tracking software, which makes the solution less attractive for UAV swarms. In this work, we consider open-source tracking software that can run onboard small Unmanned Aerial Vehicles (UAVs) in real time and enable distributed swarming algorithms. We provide a dataset specifically for two use cases: i) flight; and ii) ground truth for other commonly used distributed swarming localization systems such as ultra-wideband. We then use this dataset to analyze both the accuracy and the precision of the Lighthouse system in different use cases. To our knowledge, we are the first to compare two different Lighthouse hardware versions with a motion capture system and the first to analyze the accuracy using tracking software that runs onboard a microcontroller.
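The abstract separates accuracy (agreement with a motion capture reference) from precision (repeatability of the estimate). A minimal sketch of that distinction is shown below, assuming time-aligned position samples in metres; the definitions used here (mean Euclidean error for accuracy, RMS distance to the centroid of static samples for precision) are illustrative assumptions and may differ from the exact metrics in the paper.

```python
import math
from statistics import mean

# Assumption: positions are (x, y, z) tuples in metres, already time-aligned
# with the motion-capture reference and expressed in the same frame.
Point = tuple[float, float, float]


def accuracy(estimates: list[Point], ground_truth: list[Point]) -> float:
    """Mean Euclidean error between estimates and ground-truth samples."""
    if len(estimates) != len(ground_truth):
        raise ValueError("estimates and ground_truth must have equal length")
    return mean(math.dist(e, g) for e, g in zip(estimates, ground_truth))


def precision(static_samples: list[Point]) -> float:
    """Spread of repeated estimates of one static pose: RMS distance to centroid."""
    centroid = tuple(mean(axis) for axis in zip(*static_samples))
    return math.sqrt(mean(math.dist(p, centroid) ** 2 for p in static_samples))
```

A system can be precise but inaccurate (tightly clustered estimates offset from the true position), which is why the two quantities are reported separately.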

A Comparative Study of Bug Algorithms for Robot Navigation

Aug 17, 2018

Abstract: This paper presents a literature survey and a comparative study of Bug Algorithms, with the goal of investigating their potential for robotic navigation. At first sight, these methods seem to provide an efficient navigation paradigm, ideal for implementations on tiny robots with limited resources. Closer inspection, however, shows that many of these Bug Algorithms assume a perfect global position estimate of the robot, which in GPS-denied environments implies considerable computation and memory expense, since it relies on accurate Simultaneous Localization And Mapping (SLAM) or Visual Odometry (VO) methods. We compare a selection of Bug Algorithms in a simulated robot and environment, where they endure different types of noise and failure cases of their on-board sensors. From the simulation results, we conclude that the performance of the implemented Bug Algorithms is sensitive to many types of sensor noise, most noticeably odometry drift. This raises the question of whether Bug Algorithms are suitable for real-world, on-board robotic navigation as is. Variations that use multiple sensors to keep track of their progress towards the goal were more adept at completing their task in the presence of sensor failures. This shows that Bug Algorithms must spread their risk by relying on the readings of multiple sensors to be suitable for real-world deployment.
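To illustrate why a global position estimate matters, the sketch below implements the leave condition of Bug2, a classic member of the Bug Algorithm family; whether Bug2 matches the exact variants implemented in the paper is not stated here. Bug2 leaves an obstacle boundary when the robot is back on the "M-line" (the straight line from start to goal) and closer to the goal than where it first hit the obstacle. The tolerance `tol` and the function names are hypothetical, and the full algorithm also checks that the way towards the goal is free, which is omitted.

```python
import math

Point = tuple[float, float]


def distance_to_m_line(p: Point, start: Point, goal: Point) -> float:
    """Perpendicular distance from p to the line through start and goal."""
    dx, dy = goal[0] - start[0], goal[1] - start[1]
    cross = dy * (p[0] - start[0]) - dx * (p[1] - start[1])
    return abs(cross) / math.hypot(dx, dy)


def bug2_should_leave(position: Point, start: Point, goal: Point,
                      hit_point: Point, tol: float = 0.05) -> bool:
    """Hypothetical Bug2 leave test; relies entirely on the position estimate."""
    on_m_line = distance_to_m_line(position, start, goal) <= tol
    closer = math.dist(position, goal) < math.dist(hit_point, goal) - tol
    return on_m_line and closer


start, goal, hit = (0.0, 0.0), (10.0, 0.0), (3.0, 0.0)
print(bug2_should_leave((6.0, 0.01), start, goal, hit))  # accurate estimate
print(bug2_should_leave((6.0, 0.5), start, goal, hit))   # same spot, drifted estimate
```

The second call describes the same physical position with 0.5 m of accumulated odometry drift: the robot no longer recognizes the M-line and keeps following the wall, which is one way drift can break a Bug Algorithm.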

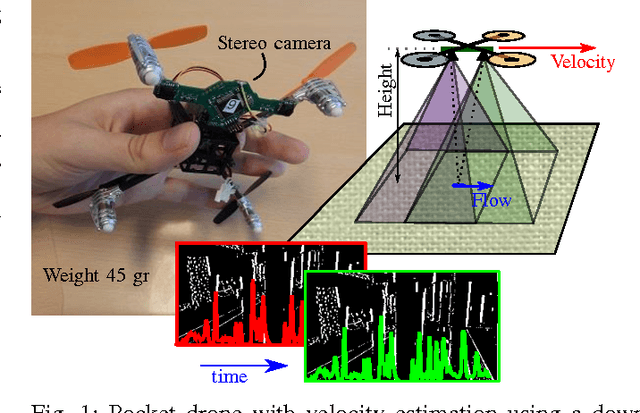

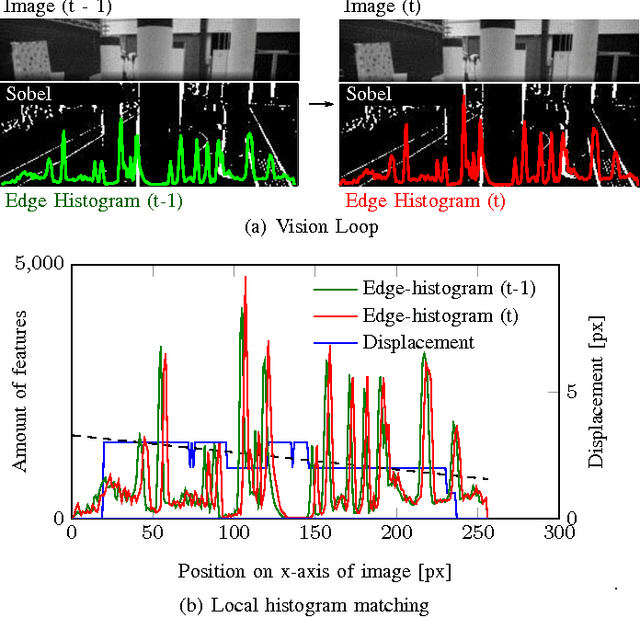

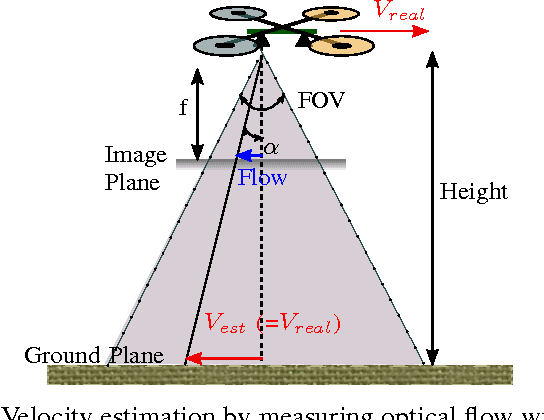

Local Histogram Matching for Efficient Optical Flow Computation Applied to Velocity Estimation on Pocket Drones

Mar 14, 2017

Abstract: Autonomous flight of pocket drones is challenging due to the severe limitations on on-board energy, sensing, and processing power. However, tiny drones have great potential, as their small size allows maneuvering through narrow spaces while their low weight provides significant safety advantages. This paper presents a computationally efficient algorithm for determining optical flow, which can run on the STM32F4 microprocessor (168 MHz) of a 4 gram stereo camera. The optical flow algorithm is based on edge histograms. We propose a matching scheme to determine local optical flow. Moreover, the method allows for sub-pixel flow determination based on time horizon adaptation. We demonstrate velocity measurements in flight and use them within a velocity control loop on a pocket drone.

* 7 pages, 10 figures. Changes: format changed from one column to two columns; used url package for links
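The edge-histogram idea can be sketched as follows: collapse each image into a 1-D histogram of horizontal edge strength per column, then find, for local windows, the shift that best aligns the previous and current histograms. This is a minimal sketch under stated assumptions: the central-difference edge measure, the sum-of-absolute-differences (SAD) cost, and the `window`/`max_disp` values are illustrative choices, not necessarily those of the paper, and the sub-pixel time horizon adaptation is not implemented.

```python
def edge_histogram(image: list[list[int]]) -> list[int]:
    """Column-wise sum of absolute horizontal intensity differences (assumed edge measure)."""
    width = len(image[0])
    hist = [0] * width
    for row in image:
        for x in range(1, width - 1):
            hist[x] += abs(row[x + 1] - row[x - 1])
    return hist


def local_flow(prev_hist: list[int], curr_hist: list[int],
               window: int = 8, max_disp: int = 5) -> list[int]:
    """Per-window integer shift (pixels) minimizing SAD between the two histograms."""
    n = len(prev_hist)
    flows = []
    # Window starts are chosen so that every shifted index stays inside [0, n - 1].
    for start in range(max_disp, n - window - max_disp + 1, window):
        best_d, best_cost = 0, float("inf")
        for d in range(-max_disp, max_disp + 1):
            cost = sum(abs(prev_hist[start + i] - curr_hist[start + i + d])
                       for i in range(window))
            if cost < best_cost:
                best_cost, best_d = cost, d
        flows.append(best_d)
    return flows
```

Reducing each frame to one histogram per image axis turns 2-D block matching into a handful of 1-D comparisons, which is what makes this kind of approach plausible on a 168 MHz microcontroller.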

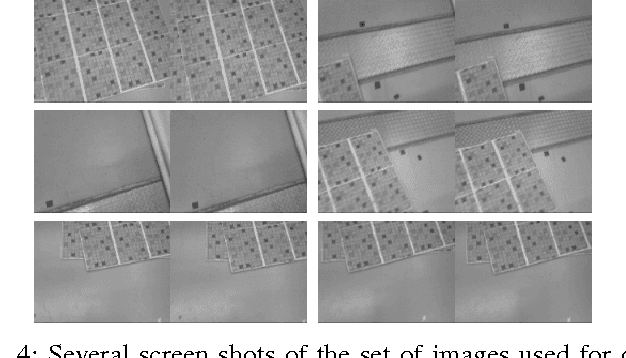

Efficient Optical flow and Stereo Vision for Velocity Estimation and Obstacle Avoidance on an Autonomous Pocket Drone

Mar 14, 2017

Abstract: Miniature Micro Aerial Vehicles (MAVs) are well suited to flying in indoor environments, but autonomous navigation is challenging due to their strict hardware limitations. This paper presents a highly efficient computer vision algorithm called Edge-FS for the determination of velocity and depth. It runs at 20 Hz on a 4 g stereo camera with an embedded STM32F4 microprocessor (168 MHz, 192 kB) and uses feature histograms to calculate optical flow and stereo disparity. The stereo-based distance estimates are used to scale the optical flow in order to retrieve the drone's velocity. The velocity and depth measurements are used for fully autonomous flight of a 40 g pocket drone relying only on on-board sensors. The method allows the MAV to control its velocity and avoid obstacles.

* 7 pages, 10 figures. Published in IEEE Robotics and Automation Letters
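The scaling step described in the abstract can be sketched with the pinhole camera model: stereo disparity gives depth Z = f·B/d, and a lateral flow of u pixels per frame at frame rate F corresponds to a velocity of roughly u·F·Z/f. This sketch assumes pure translation (rotational flow already removed), a fronto-parallel scene, and rectified stereo; the focal length and baseline in the example are hypothetical, only the 20 Hz rate comes from the abstract, and the sign convention is arbitrary.

```python
def depth_from_disparity(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Stereo depth from the pinhole model: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def velocity_from_flow(flow_px_per_frame: float, frame_rate_hz: float,
                       depth_m: float, focal_px: float) -> float:
    """Metric lateral velocity from flow scaled by depth: v = u * F * Z / f."""
    return flow_px_per_frame * frame_rate_hz * depth_m / focal_px


# Hypothetical camera: f = 100 px, B = 0.06 m; measured disparity 3 px, flow 0.5 px/frame.
z = depth_from_disparity(3.0, focal_px=100.0, baseline_m=0.06)
v = velocity_from_flow(0.5, frame_rate_hz=20.0, depth_m=z, focal_px=100.0)
print(z, v)  # roughly 2.0 m and 0.2 m/s
```

Substituting Z into the velocity expression shows that the focal length cancels (v = u·F·B/d), so under these assumptions only the baseline and frame rate need to be known to turn pixel measurements into metric velocity.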

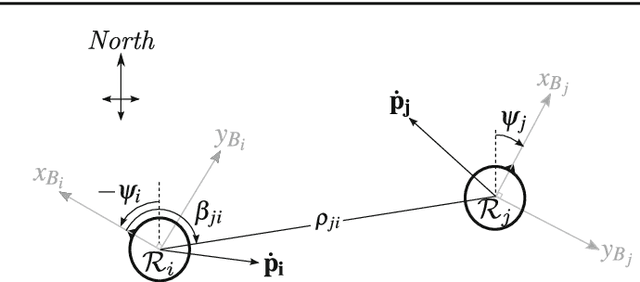

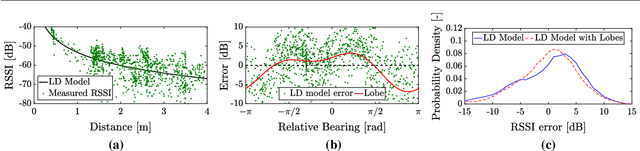

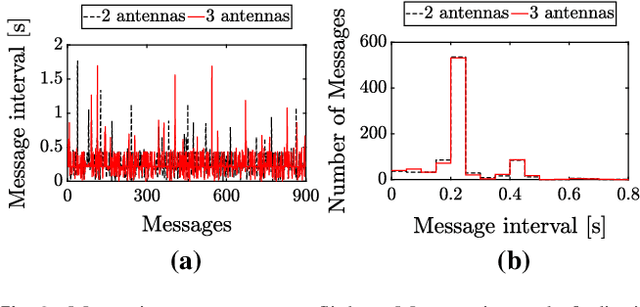

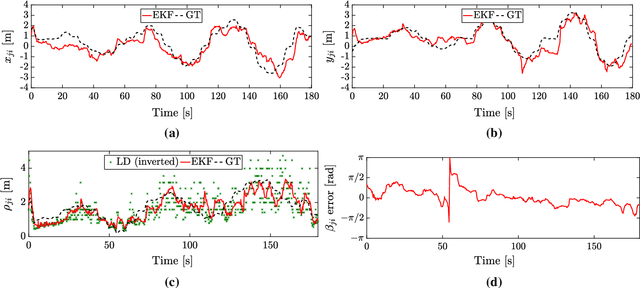

On-board Communication-based Relative Localization for Collision Avoidance in Micro Air Vehicle teams

Mar 08, 2017

Abstract: Micro Air Vehicles (MAVs) will unlock their true potential once they can operate in groups. To this end, it is essential for them to estimate on-board the relative location of their neighbors. The challenge lies in limiting the mass and processing burden needed to enable this. We developed a relative localization method that only requires the MAVs to communicate via their wireless transceiver. Communication allows the exchange of on-board states (velocity, height, and orientation), while the signal strength provides range data. These quantities are fused to provide a full relative location estimate. We used our method to tackle the problem of collision avoidance in tight areas. The system was tested with a team of AR.Drones flying in a 4 m × 4 m area and with miniature drones of ~50 g in a 2 m × 2 m area. The MAVs were able to track their relative positions and fly for several minutes without collisions. Our implementation used Bluetooth to communicate between the drones. This introduced significant noise and disturbances in the signal strength, which worsened as more drones were added. Simulation analysis suggests that results can improve with a more suitable transceiver module.
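One common way to turn signal strength into range is to invert the log-distance path-loss model, RSSI(d) = P0 − 10·n·log10(d / 1 m). The sketch below assumes that model; the reference power P0 = −60 dBm and exponent n = 2 are hypothetical defaults, not values from the paper, and the filter that fuses this range with the exchanged velocity, height, and orientation is not shown.

```python
def rssi_to_range(rssi_dbm: float, rssi_at_1m_dbm: float = -60.0,
                  path_loss_exponent: float = 2.0) -> float:
    """Range in metres from the inverted log-distance path-loss model (assumed model)."""
    return 10 ** ((rssi_at_1m_dbm - rssi_dbm) / (10.0 * path_loss_exponent))


print(rssi_to_range(-60.0))  # 1.0 m at the reference power
print(rssi_to_range(-80.0))  # 10.0 m with n = 2
```

The exponential form also explains the sensitivity to the signal-strength noise reported for Bluetooth: with n = 2, a 6 dB fluctuation multiplies the range estimate by 10^(6/20), roughly a factor of two.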
