Kirk Y. W. Scheper

Taming Contrast Maximization for Learning Sequential, Low-latency, Event-based Optical Flow

Mar 09, 2023

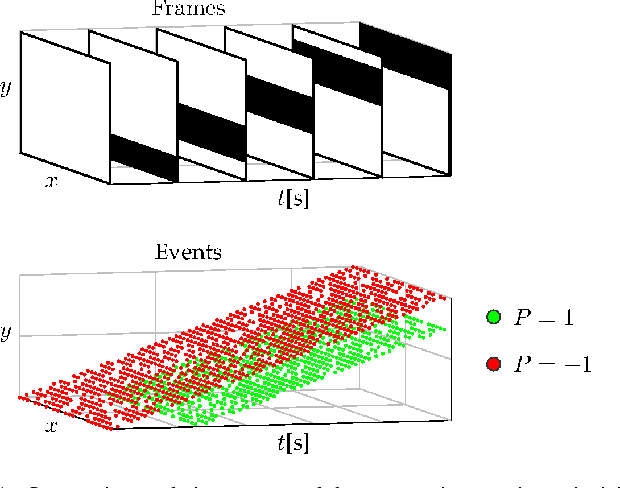

Abstract: Event cameras have recently gained significant traction since they open up new avenues for low-latency and low-power solutions to complex computer vision problems. To unlock these solutions, it is necessary to develop algorithms that can leverage the unique nature of event data. However, the current state of the art is still highly influenced by the frame-based literature, and usually fails to deliver on these promises. In this work, we take this into consideration and propose a novel self-supervised learning pipeline for the sequential estimation of event-based optical flow that allows the models to scale to high inference frequencies. At its core, we have a continuously running stateful neural model that is trained using a novel formulation of contrast maximization that makes it robust to nonlinearities and varying statistics in the input events. Results across multiple datasets confirm the effectiveness of our method, which establishes a new state of the art in terms of accuracy for approaches trained or optimized without ground truth.
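For readers unfamiliar with contrast maximization, the following is a minimal sketch of the classic variance-based objective, not the novel formulation proposed in the paper. It assumes a single global flow vector and a nearest-pixel accumulation of events; the function name `iwe_variance` and the synthetic edge are illustrative choices only.

```python
import numpy as np

def iwe_variance(xs, ys, ts, flow, shape, t_ref=0.0):
    """Contrast (variance) of the image of warped events for one global flow.

    flow is a hypothetical (u, v) pair in pixels per second; shape is (height, width).
    """
    u, v = flow
    # Transport every event along the candidate flow back to the reference time.
    xw = np.rint(xs - (ts - t_ref) * u).astype(int)
    yw = np.rint(ys - (ts - t_ref) * v).astype(int)
    h, w = shape
    inside = (xw >= 0) & (xw < w) & (yw >= 0) & (yw < h)
    # Accumulate event counts per pixel; np.add.at handles repeated indices.
    iwe = np.zeros(shape)
    np.add.at(iwe, (yw[inside], xw[inside]), 1.0)
    return iwe.var()

# Synthetic events: a vertical edge, 8 pixels tall, moving at 20 px/s along x.
ts = np.repeat(np.linspace(0.0, 0.5, 11), 8)
ys = np.tile(np.arange(8), 11).astype(float)
xs = 5.0 + 20.0 * ts

contrast_true = iwe_variance(xs, ys, ts, (20.0, 0.0), (8, 32))
contrast_zero = iwe_variance(xs, ys, ts, (0.0, 0.0), (8, 32))
```

Warping with the correct flow stacks all events onto one sharp edge, so `contrast_true` exceeds `contrast_zero`; a learning or optimization procedure would search for the flow that maximizes this kind of score.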

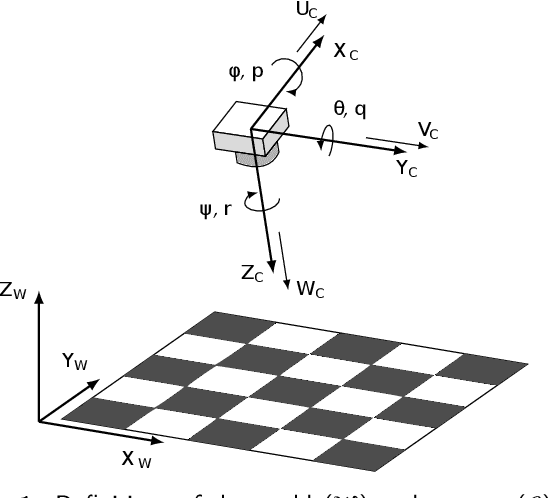

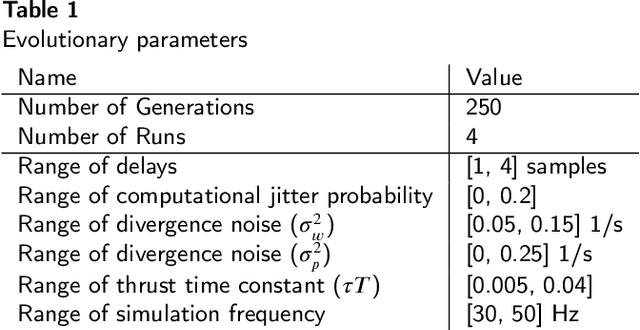

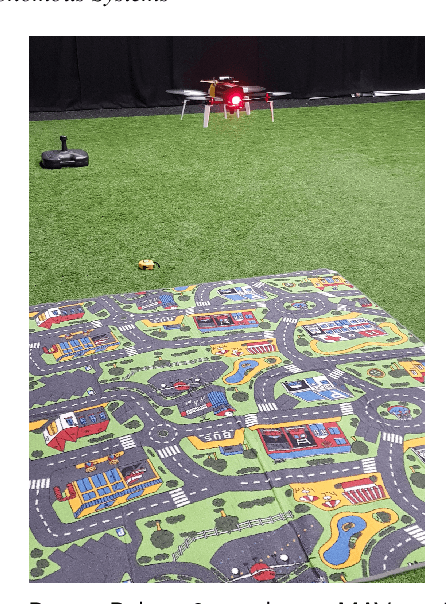

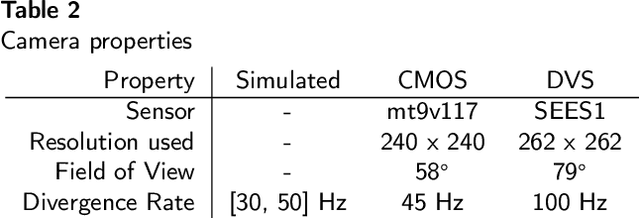

Evolution of Robust High Speed Optical-Flow-Based Landing for Autonomous MAVs

Dec 16, 2019

Abstract: Automatic optimization of robotic behavior has been the long-standing goal of Evolutionary Robotics. Allowing the problem at hand to be solved by automation often leads to novel approaches and new insights. A common problem encountered with this approach is that when this optimization occurs in a simulated environment, the optimized policies are subject to the reality gap when implemented in the real world. This often results in sub-optimal behavior, if it works at all. This paper investigates the automatic optimization of neurocontrollers to perform quick but safe landing maneuvers for a quadrotor micro air vehicle using the divergence of the optical flow field of a downward-looking camera. The optimized policies showed that a piecewise linear control scheme is more effective than the simple linear scheme commonly used, something not yet considered by human designers. Additionally, we show the utility of using abstraction on the input and output of the controller as a tool to improve the robustness of the optimized policies to the reality gap by testing our policies optimized in simulation on real-world vehicles. We tested the neurocontrollers using two different methods to generate and process the visual input, one using a conventional CMOS camera and one a dynamic vision sensor, both of which perform significantly differently from the simulated sensor. The use of the abstracted input resulted in near-seamless transfer to the real world, with the controllers showing high robustness to a clear reality gap.

* This is an accepted manuscript preprint of published work available at https://doi.org/10.1016/j.robot.2019.103380. Please cite as: Kirk Y.W. Scheper and Guido C.H.E. de Croon, Evolution of robust high speed optical-flow-based landing for autonomous MAVs, Robotics and Autonomous Systems 124, 2020.

Unsupervised Learning of a Hierarchical Spiking Neural Network for Optical Flow Estimation: From Events to Global Motion Perception

Jul 28, 2018

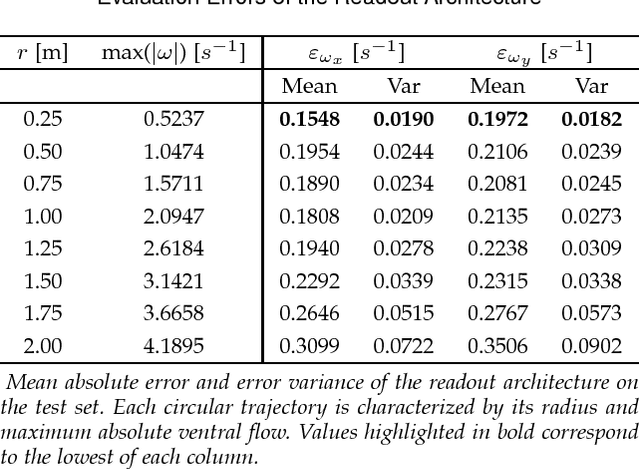

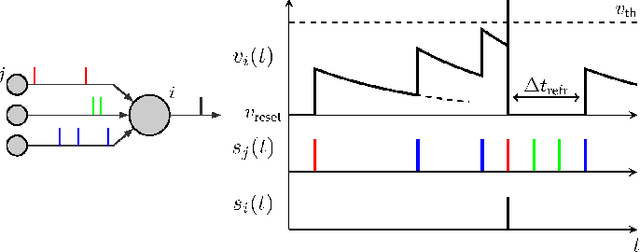

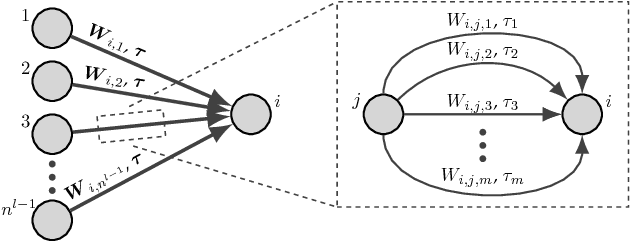

Abstract: The combination of spiking neural networks and event-based vision sensors holds the potential of highly efficient and high-bandwidth optical flow estimation. This paper presents the first hierarchical spiking architecture in which motion (direction and speed) selectivity emerges in an unsupervised fashion from the raw stimuli generated with an event-based camera. A novel adaptive neuron model and spike-timing-dependent plasticity formulation are at the core of this neural network, governing its spike-based processing and learning, respectively. After convergence, the neural architecture exhibits the main properties of biological visual motion systems, namely feature extraction and local and global motion perception. To assess the outcome of the learning, a shallow conventional artificial neural network is trained to map the activation traces of the penultimate layer to the optical flow visual observables of ventral flow. The proposed solution is validated for simulated event sequences with ground-truth measurements. Experimental results show that accurate estimates of these parameters can be obtained over a wide range of speeds.

Vertical Landing for Micro Air Vehicles using Event-Based Optical Flow

Nov 13, 2017

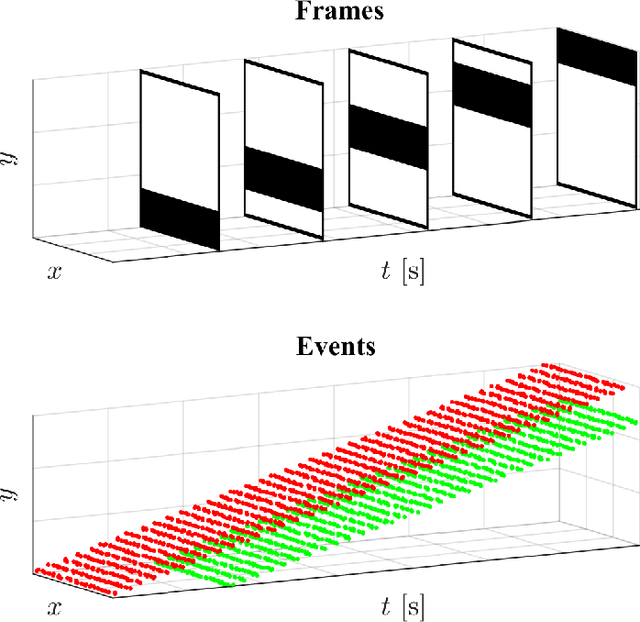

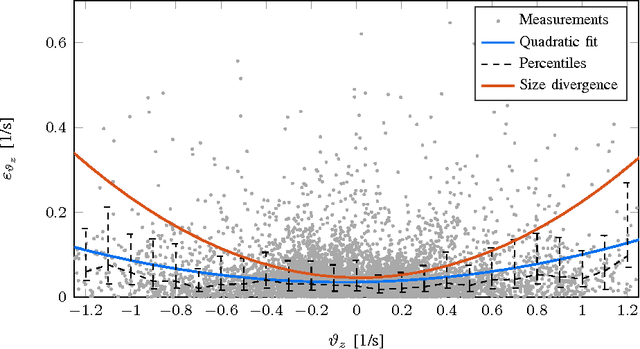

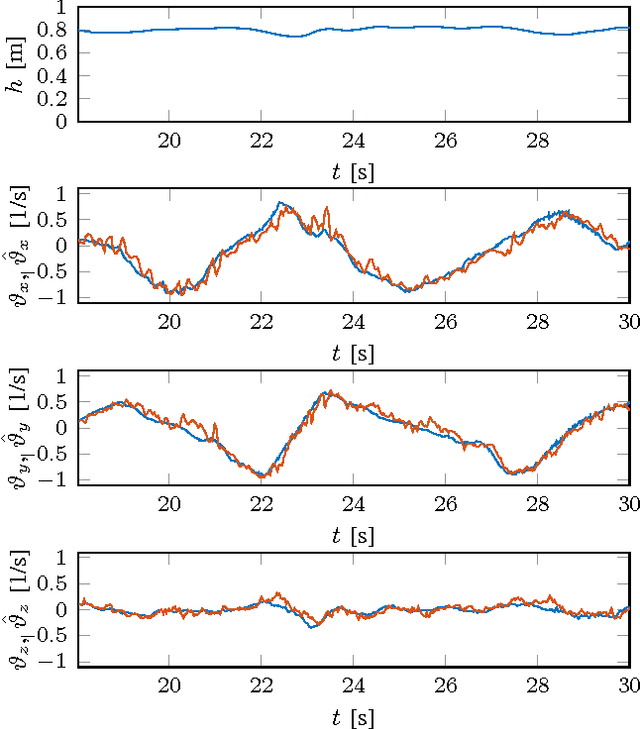

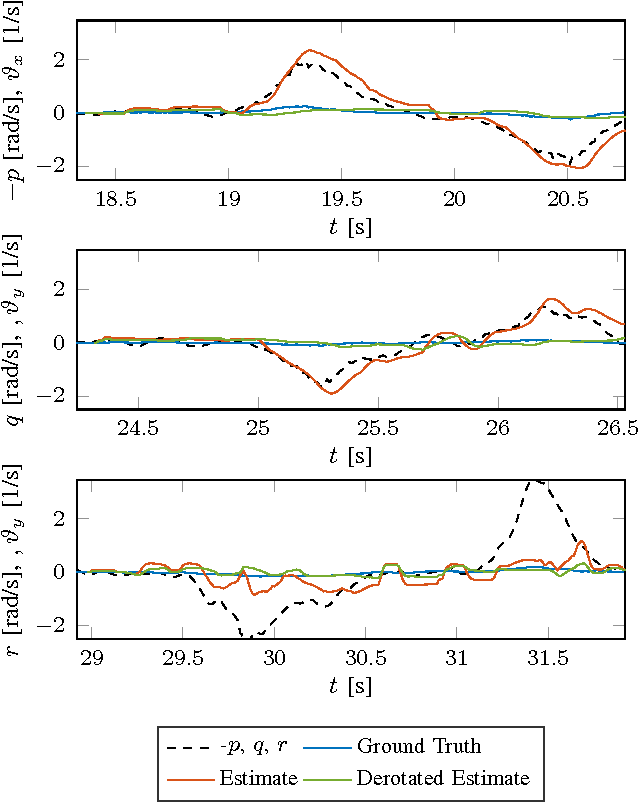

Abstract: Small flying robots can perform landing maneuvers using bio-inspired optical flow by maintaining a constant divergence. However, optical flow is typically estimated from frame sequences recorded by standard miniature cameras. This requires processing full images on board, limiting the update rate of divergence measurements, and thus the speed of the control loop and the robot. Event-based cameras overcome these limitations by only measuring pixel-level brightness changes at microsecond temporal accuracy, hence providing an efficient mechanism for optical flow estimation. This paper presents, to the best of our knowledge, the first work integrating event-based optical flow estimation into the control loop of a flying robot. We extend an existing 'local plane fitting' algorithm to obtain an improved and more computationally efficient optical flow estimation method, valid for a wide range of optical flow velocities. This method is validated for real event sequences. In addition, a method for estimating the divergence from event-based optical flow is introduced, which accounts for the aperture problem. The developed algorithms are implemented in a constant divergence landing controller on board a quadrotor. Experiments show that, using event-based optical flow, accurate divergence estimates can be obtained over a wide range of speeds. This enables the quadrotor to perform very fast landing maneuvers.
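As an illustration of the constant divergence idea, the sketch below simulates an idealized vertical descent. It is not the controller from the paper: it assumes noise-free divergence measurements, defines divergence as D = -v_z / h (positive while descending), and uses a hypothetical proportional gain acting directly on vertical acceleration.

```python
def simulate_landing(h0=2.0, d_set=0.5, gain=10.0, dt=1e-3, h_stop=0.05, t_max=30.0):
    """Euler simulation of a proportional constant-divergence descent.

    All parameter values are illustrative assumptions, not taken from the paper.
    Returns the final height [m], vertical speed [m/s], last divergence [1/s], time [s].
    """
    h, vz, t = h0, 0.0, 0.0
    divergence = 0.0
    while h > h_stop and t < t_max:
        # Divergence as an ideal sensor would report it (assumed sign convention).
        divergence = -vz / h
        # Descending too fast (divergence above set-point) commands upward acceleration.
        accel = gain * (divergence - d_set)
        vz += accel * dt
        h += vz * dt
        t += dt
    return h, vz, divergence, t

h_end, vz_end, d_end, t_end = simulate_landing()
```

When the divergence is held near the set-point, height and descent speed both decay roughly exponentially, which is why the vehicle arrives at the ground with a small velocity.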

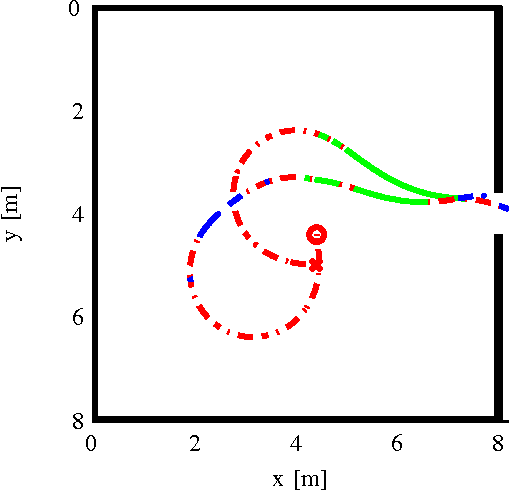

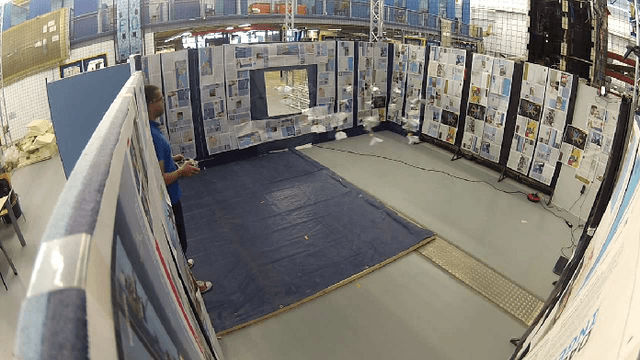

On-board Communication-based Relative Localization for Collision Avoidance in Micro Air Vehicle teams

Mar 08, 2017

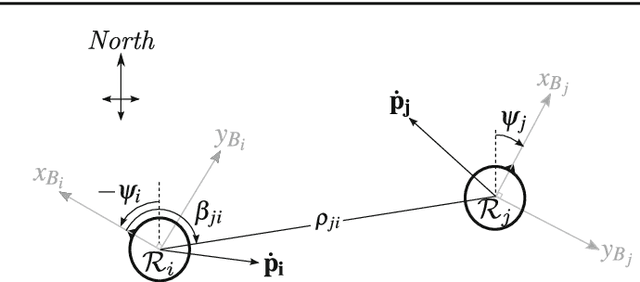

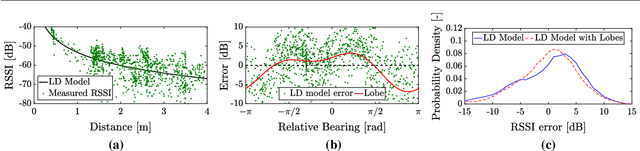

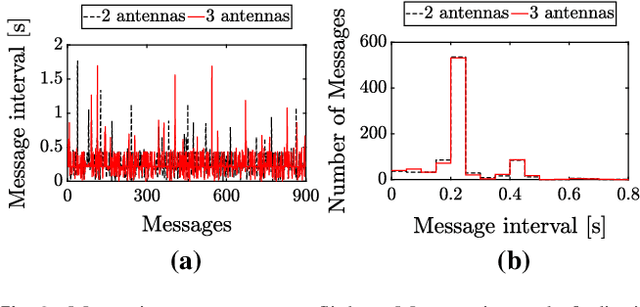

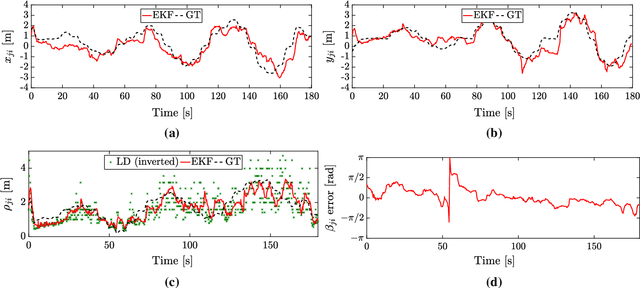

Abstract: Micro Air Vehicles (MAVs) will unlock their true potential once they can operate in groups. To this end, it is essential for them to estimate on board the relative location of their neighbors. The challenge lies in limiting the mass and processing burden needed to enable this. We developed a relative localization method that only requires the MAVs to communicate via their wireless transceiver. Communication allows the exchange of on-board states (velocity, height, and orientation), while the signal strength provides range data. These quantities are fused to provide a full relative location estimate. We used our method to tackle the problem of collision avoidance in tight areas. The system was tested with a team of AR.Drones flying in a 4 m × 4 m area and with miniature drones of ~50 g in a 2 m × 2 m area. The MAVs were able to track their relative positions and fly for several minutes without collisions. Our implementation used Bluetooth to communicate between the drones. This featured significant noise and disturbances in signal strength, which worsened as more drones were added. Simulation analysis suggests that results can improve with a more suitable transceiver module.
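To make the "signal strength provides range data" step concrete, here is a small sketch that inverts the standard log-distance path-loss model. Whether the paper uses exactly this model is an assumption here, and the reference power `p_ref_dbm` and path-loss exponent `n` are hypothetical calibration values.

```python
import math

def rssi_to_range(rssi_dbm, p_ref_dbm=-63.0, n=2.0, d_ref=1.0):
    """Estimate range [m] from received signal strength [dBm].

    Inverts RSSI = p_ref_dbm - 10 * n * log10(d / d_ref), where p_ref_dbm is the
    signal strength at the reference distance d_ref. All defaults are illustrative.
    """
    return d_ref * 10.0 ** ((p_ref_dbm - rssi_dbm) / (10.0 * n))

# A reading 20 dB below the 1 m reference maps to 10 m when n = 2.
range_estimate = rssi_to_range(-83.0)
```

Because the mapping is exponential in the measured power, a few decibels of noise translate into large range errors at long distances, which is consistent with the abstract's remark about noisy Bluetooth signal strength.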

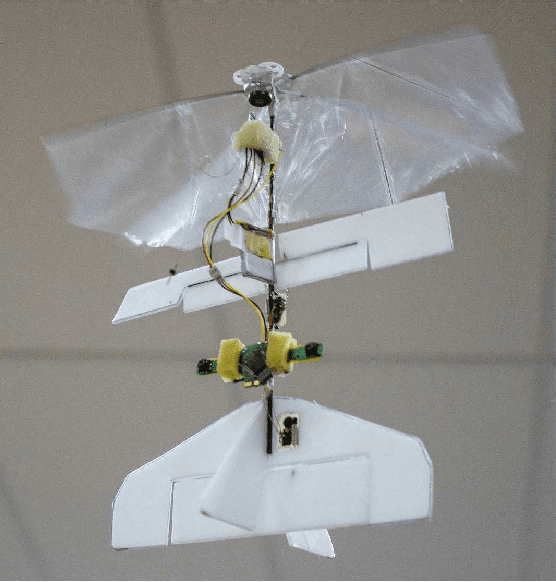

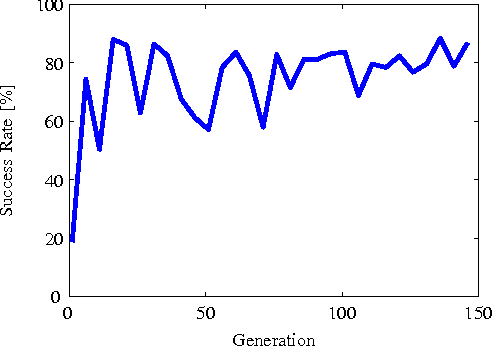

Behaviour Trees for Evolutionary Robotics

Aug 07, 2015

Abstract: Evolutionary Robotics allows robots with limited sensors and processing to tackle complex tasks by means of sensory-motor coordination. In this paper we show the first application of the Behaviour Tree framework to a real robotic platform using the Evolutionary Robotics methodology. This framework is used to improve the intelligibility of the emergent robotic behaviour as compared to the traditional Neural Network formulation. As a result, the behaviour is easier to comprehend and manually adapt when crossing the reality gap from simulation to reality. This functionality is shown by performing real-world flight tests with the 20-gram DelFly Explorer flapping-wing Micro Air Vehicle equipped with a 4-gram onboard stereo vision system. The experiments show that the DelFly can fully autonomously search for and fly through a window with only its onboard sensors and processing. The success rate of the optimised behaviour in simulation is 88% and the corresponding real-world performance is 54% after user adaptation. Although this leaves room for improvement, it is higher than the 46% success rate from a tuned user-defined controller.
