Federico Paredes-Vallés

EV-LayerSegNet: Self-supervised Motion Segmentation using Event Cameras

Jun 07, 2025

Abstract: Event cameras are novel bio-inspired sensors that capture motion dynamics with much higher temporal resolution than traditional cameras, since pixels react asynchronously to brightness changes. They are therefore better suited for tasks involving motion such as motion segmentation. However, training event-based networks still represents a difficult challenge, as obtaining ground truth is very expensive, error-prone and limited in frequency. In this article, we introduce EV-LayerSegNet, a self-supervised CNN for event-based motion segmentation. Inspired by a layered representation of the scene dynamics, we show that it is possible to learn affine optical flow and segmentation masks separately, and use them to deblur the input events. The deblurring quality is then measured and used as self-supervised learning loss. We train and test the network on a simulated dataset with only affine motion, achieving IoU and detection rate up to 71% and 87% respectively.
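For readers unfamiliar with the IoU figure quoted above, the following is a minimal sketch of intersection over union for two binary segmentation masks. The nested-list mask format and the function name `iou` are illustrative assumptions, not the authors' evaluation code; the abstract does not define "detection rate", so it is not sketched here.

```python
def iou(pred, target):
    """Intersection over union of two same-sized binary masks.

    Masks are nested lists of 0/1 values (an assumed, illustrative format).
    """
    inter = 0
    union = 0
    for row_p, row_t in zip(pred, target):
        for p, t in zip(row_p, row_t):
            if p and t:
                inter += 1
            if p or t:
                union += 1
    # Two empty masks agree perfectly; define IoU as 1.0 in that case.
    return inter / union if union else 1.0


# Toy example: 2 pixels overlap, 4 pixels are covered by either mask.
pred = [[0, 1, 1],
        [0, 1, 0]]
target = [[0, 1, 0],
          [0, 1, 1]]
print(iou(pred, target))  # 0.5
```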

Towards Low-Latency Event-based Obstacle Avoidance on a FPGA-Drone

Apr 14, 2025

Abstract: This work quantitatively evaluates the performance of event-based vision systems (EVS) against conventional RGB-based models for action prediction in collision avoidance on an FPGA accelerator. Our experiments demonstrate that the EVS model achieves a significantly higher effective frame rate (1 kHz) and lower temporal (-20 ms) and spatial prediction errors (-20 mm) compared to the RGB-based model, particularly when tested on out-of-distribution data. The EVS model also exhibits superior robustness in selecting optimal evasion maneuvers. In particular, in distinguishing between movement and stationary states, it achieves a 59 percentage point advantage in precision (78% vs. 19%) and a substantially higher F1 score (0.73 vs. 0.06), highlighting the susceptibility of the RGB model to overfitting. Further analysis in different combinations of spatial classes confirms the consistent performance of the EVS model in both test data sets. Finally, we evaluated the system end-to-end and achieved a latency of approximately 2.14 ms, with event aggregation (1 ms) and inference on the processing unit (0.94 ms) accounting for the largest components. These results underscore the advantages of event-based vision for real-time collision avoidance and demonstrate its potential for deployment in resource-constrained environments.
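As a reminder of how the precision and F1 figures above relate, here is a small sketch of the standard definitions. The helper `implied_recall` simply inverts the F1 formula; the recall values it prints are back-of-the-envelope estimates from the rounded numbers in the abstract, not results reported by the authors. The function names and the toy counts are illustrative assumptions.

```python
def precision(tp, fp):
    """Fraction of positive predictions that are correct."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp, fn):
    """Fraction of actual positives that are found."""
    return tp / (tp + fn) if (tp + fn) else 0.0


def f1(p, r):
    """Harmonic mean of precision p and recall r."""
    return 2 * p * r / (p + r) if (p + r) else 0.0


def implied_recall(p, f1_score):
    """Solve F1 = 2PR / (P + R) for R, giving R = F1 * P / (2P - F1)."""
    denom = 2 * p - f1_score
    if denom <= 0:
        raise ValueError("no valid recall for this precision/F1 pair")
    return f1_score * p / denom


# Rounded figures from the abstract; the outputs are indicative only.
evs_recall = implied_recall(0.78, 0.73)
rgb_recall = implied_recall(0.19, 0.06)
print(round(evs_recall, 2), round(rgb_recall, 2))  # 0.69 0.04
```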

On-Device Self-Supervised Learning of Low-Latency Monocular Depth from Only Events

Dec 09, 2024

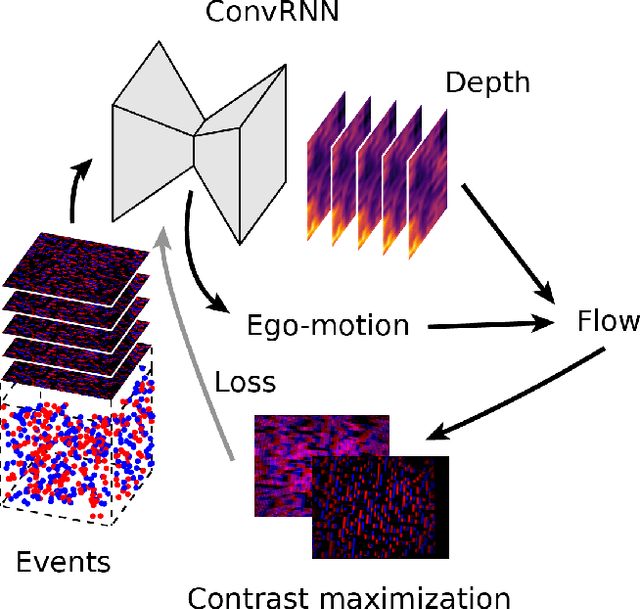

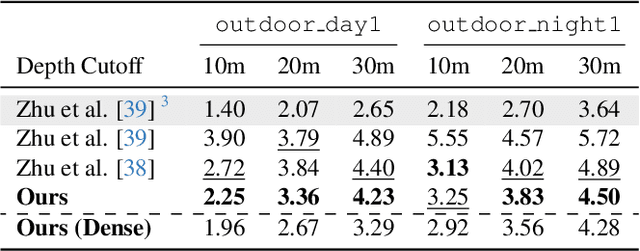

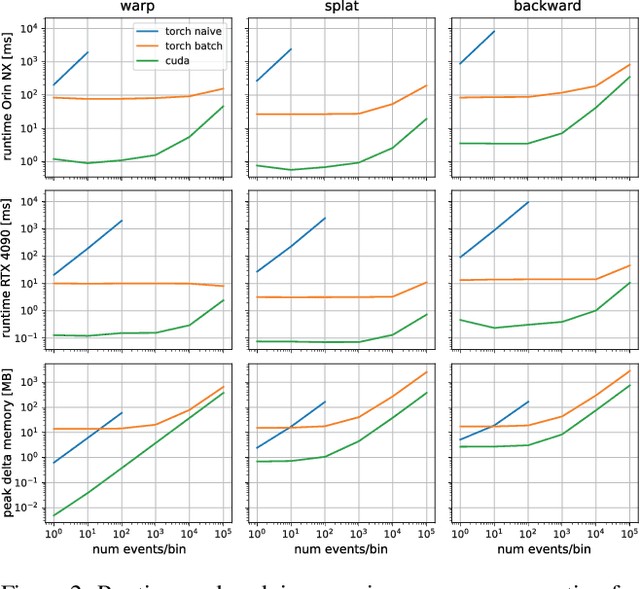

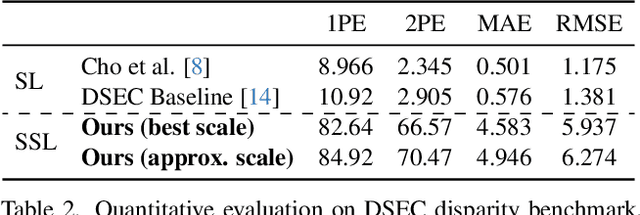

Abstract: Event cameras provide low-latency perception for only milliwatts of power. This makes them highly suitable for resource-restricted, agile robots such as small flying drones. Self-supervised learning based on contrast maximization holds great potential for event-based robot vision, as it forgoes the need for high-frequency ground truth and allows for online learning in the robot's operational environment. However, online, onboard learning raises the major challenge of achieving sufficient computational efficiency for real-time learning, while maintaining competitive visual perception performance. In this work, we improve the time and memory efficiency of the contrast maximization learning pipeline. Benchmarking experiments show that the proposed pipeline achieves competitive results with the state of the art on the task of depth estimation from events. Furthermore, we demonstrate the usability of the learned depth for obstacle avoidance through real-world flight experiments. Finally, we compare the performance of different combinations of pre-training and fine-tuning of the depth estimation networks, showing that on-board domain adaptation is feasible given a few minutes of flight.

Low-power event-based face detection with asynchronous neuromorphic hardware

Dec 21, 2023

Abstract: The rise of mobility, IoT and wearables has shifted processing to the edge of the sensors, driven by the need to reduce latency, communication costs and overall energy consumption. While deep learning models have achieved remarkable results in various domains, their deployment at the edge for real-time applications remains computationally expensive. Neuromorphic computing emerges as a promising paradigm shift, characterized by co-localized memory and computing as well as event-driven asynchronous sensing and processing. In this work, we demonstrate the possibility of solving the ubiquitous computer vision task of object detection at the edge with low-power requirements, using the event-based N-Caltech101 dataset. We present the first instance of an on-chip spiking neural network for event-based face detection deployed on the SynSense Speck neuromorphic chip, which comprises both an event-based sensor and a spike-based asynchronous processor implementing Integrate-and-Fire neurons. We show how to reduce precision discrepancies between off-chip clock-driven simulation used for training and on-chip event-driven inference. This involves using a multi-spike version of the Integrate-and-Fire neuron in simulation, where spikes carry values that are proportional to the extent to which the membrane potential exceeds the firing threshold. We propose a robust strategy to train spiking neural networks with back-propagation through time using multi-spike activation and firing rate regularization, and demonstrate how to decode output spikes into bounding boxes. We show that the power consumption of the chip is directly proportional to the number of synaptic operations in the spiking neural network, and we explore the trade-off between power consumption and detection precision with different firing rate regularization, achieving an on-chip face detection mAP[0.5] of ~0.6 while consuming only ~20 mW.
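The multi-spike Integrate-and-Fire idea described above can be illustrated with a short sketch. This is not the authors' implementation: the reset-by-subtraction rule, the integer spike count, the absence of leak, and the function names are all assumptions chosen for illustration. The point is that, within one clock-driven time step, a multi-spike neuron emits as many threshold crossings as its membrane potential allows, whereas a single-spike neuron emits at most one and carries the excess forward.

```python
import math


def multi_spike_if(inputs, threshold=1.0):
    """Clock-driven IF neuron that may emit several spikes per time step.

    Assumed rule: emit floor(v / threshold) spikes, then subtract the
    corresponding charge from the membrane potential.
    """
    v = 0.0
    out = []
    for x in inputs:
        v += x
        n = max(0, math.floor(v / threshold))
        v -= n * threshold
        out.append(n)
    return out


def single_spike_if(inputs, threshold=1.0):
    """Conventional IF neuron: at most one spike per time step."""
    v = 0.0
    out = []
    for x in inputs:
        v += x
        s = 1 if v >= threshold else 0
        v -= s * threshold
        out.append(s)
    return out


currents = [0.5, 2.75, 0.25, 0.5]
print(multi_spike_if(currents))   # [0, 3, 0, 1]
print(single_spike_if(currents))  # [0, 1, 1, 1]
```

Under these assumptions, the large input at the second step is expressed immediately as three spikes in the multi-spike model, while the single-spike model spreads it over the following steps.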

Fully neuromorphic vision and control for autonomous drone flight

Mar 15, 2023

Abstract: Biological sensing and processing is asynchronous and sparse, leading to low-latency and energy-efficient perception and action. In robotics, neuromorphic hardware for event-based vision and spiking neural networks promises to exhibit similar characteristics. However, robotic implementations have been limited to basic tasks with low-dimensional sensory inputs and motor actions due to the restricted network size in current embedded neuromorphic processors and the difficulties of training spiking neural networks. Here, we present the first fully neuromorphic vision-to-control pipeline for controlling a freely flying drone. Specifically, we train a spiking neural network that accepts high-dimensional raw event-based camera data and outputs low-level control actions for performing autonomous vision-based flight. The vision part of the network, consisting of five layers and 28.8k neurons, maps incoming raw events to ego-motion estimates and is trained with self-supervised learning on real event data. The control part consists of a single decoding layer and is learned with an evolutionary algorithm in a drone simulator. Robotic experiments show a successful sim-to-real transfer of the fully learned neuromorphic pipeline. The drone can accurately follow different ego-motion setpoints, allowing for hovering, landing, and maneuvering sideways, even while yawing at the same time. The neuromorphic pipeline runs on board on Intel's Loihi neuromorphic processor with an execution frequency of 200 Hz, spending only 27 µJ per inference. These results illustrate the potential of neuromorphic sensing and processing for enabling smaller, more intelligent robots.

Taming Contrast Maximization for Learning Sequential, Low-latency, Event-based Optical Flow

Mar 09, 2023

Abstract: Event cameras have recently gained significant traction since they open up new avenues for low-latency and low-power solutions to complex computer vision problems. To unlock these solutions, it is necessary to develop algorithms that can leverage the unique nature of event data. However, the current state of the art is still highly influenced by the frame-based literature, and usually fails to deliver on these promises. In this work, we take this into consideration and propose a novel self-supervised learning pipeline for the sequential estimation of event-based optical flow that allows for the scaling of the models to high inference frequencies. At its core, we have a continuously-running stateful neural model that is trained using a novel formulation of contrast maximization that makes it robust to nonlinearities and varying statistics in the input events. Results across multiple datasets confirm the effectiveness of our method, which establishes a new state of the art in terms of accuracy for approaches trained or optimized without ground truth.
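To make the term concrete, the following is a minimal sketch of the basic contrast maximization idea: events are warped along a candidate motion to a reference time, accumulated into an image, and the image variance is used as a sharpness score. This is the textbook variance objective with a single global flow vector, not the novel formulation proposed in the paper; the event tuple layout `(x, y, t)`, the nearest-pixel accumulation, and all function names are illustrative assumptions.

```python
def warp_events(events, flow, t_ref=0.0):
    """Transport each event (x, y, t) back to t_ref along a constant flow."""
    vx, vy = flow
    return [(x - vx * (t - t_ref), y - vy * (t - t_ref)) for x, y, t in events]


def image_of_warped_events(points, width, height):
    """Accumulate warped events into a per-pixel count image."""
    img = [[0] * width for _ in range(height)]
    for x, y in points:
        xi, yi = round(x), round(y)
        if 0 <= xi < width and 0 <= yi < height:
            img[yi][xi] += 1
    return img


def contrast(img):
    """Variance of the count image; higher means sharper (less motion blur)."""
    vals = [v for row in img for v in row]
    mean = sum(vals) / len(vals)
    return sum((v - mean) ** 2 for v in vals) / len(vals)


# Synthetic events from a point moving at 2 px per unit time along x.
events = [(2 + 2 * t, 3, t) for t in range(5)]

sharp = image_of_warped_events(warp_events(events, (2.0, 0.0)), 16, 8)
blurred = image_of_warped_events(warp_events(events, (0.0, 0.0)), 16, 8)
print(contrast(sharp) > contrast(blurred))  # True
```

With the correct flow, all five events collapse onto one pixel, so the variance is higher than for the unwarped (blurred) image. A learning pipeline would use a differentiable version of such a score as its loss.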

Lightweight Event-based Optical Flow Estimation via Iterative Deblurring

Nov 24, 2022

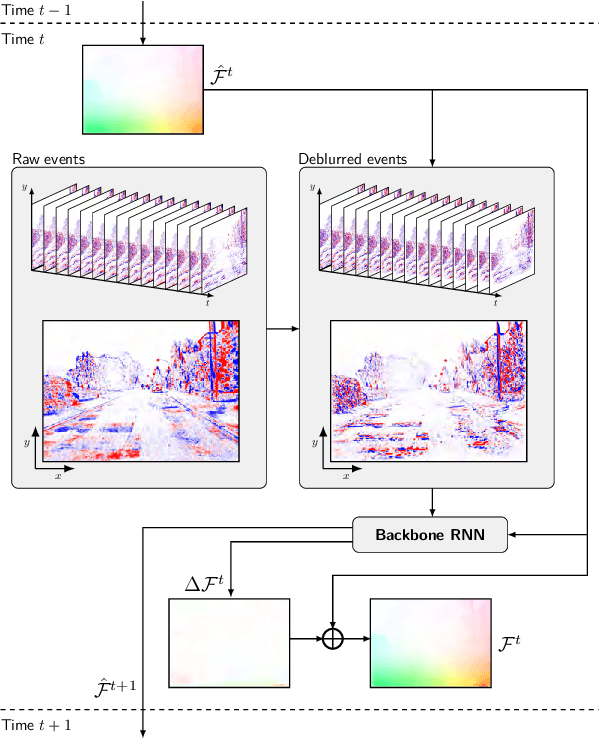

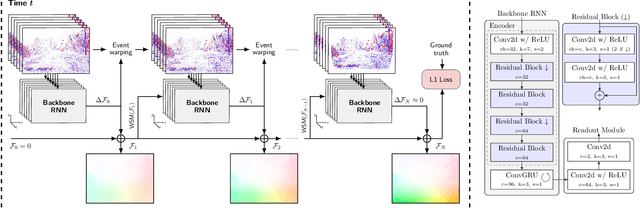

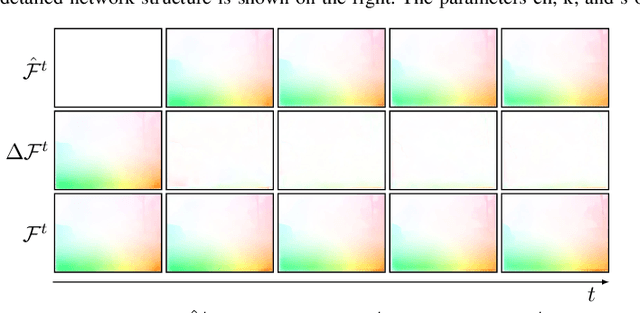

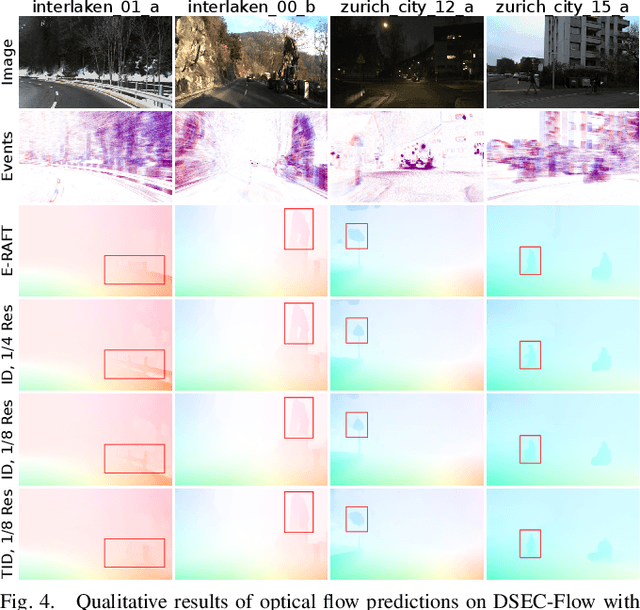

Abstract: Inspired by frame-based methods, state-of-the-art event-based optical flow networks rely on the explicit computation of correlation volumes, which are expensive to compute and store on systems with limited processing budget and memory. To this end, we introduce IDNet (Iterative Deblurring Network), a lightweight yet well-performing event-based optical flow network without using correlation volumes. IDNet leverages the unique spatiotemporally continuous nature of event streams to propose an alternative way of implicitly capturing correlation through iterative refinement and motion deblurring. Our network does not compute correlation volumes but rather utilizes a recurrent network to maximize the spatiotemporal correlation of events iteratively. We further propose two iterative update schemes: "ID", which iterates over the same batch of events, and "TID", which iterates over time with streaming events in an online fashion. Benchmark results show that the former "ID" scheme can reach close to state-of-the-art performance with 33% savings in compute and 90% in memory footprint, while the latter "TID" scheme is even more efficient, promising 83% compute savings and 15 times lower latency at the cost of an 18% performance drop.

NanoFlowNet: Real-time Dense Optical Flow on a Nano Quadcopter

Sep 14, 2022

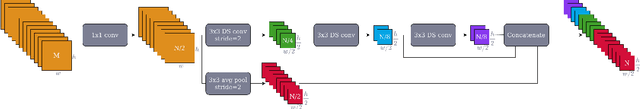

Abstract: Nano quadcopters are small, agile, and cheap platforms that are well suited for deployment in narrow, cluttered environments. Due to their limited payload, these vehicles are highly constrained in processing power, rendering conventional vision-based methods for safe and autonomous navigation incompatible. Recent machine learning developments promise high-performance perception at low latency, while dedicated edge computing hardware has the potential to augment the processing capabilities of these limited devices. In this work, we present NanoFlowNet, a lightweight convolutional neural network for real-time dense optical flow estimation on edge computing hardware. We draw inspiration from recent advances in semantic segmentation for the design of this network. Additionally, we guide the learning of optical flow using motion boundary ground truth data, which improves performance with no impact on latency. Validation results on the MPI-Sintel dataset show the high performance of the proposed network given its constrained architecture. Additionally, we successfully demonstrate the capabilities of NanoFlowNet by deploying it on the ultra-low power GAP8 microprocessor and by applying it to vision-based obstacle avoidance on board a Bitcraze Crazyflie, a 34 g nano quadcopter.

The Artificial Intelligence behind the winning entry to the 2019 AI Robotic Racing Competition

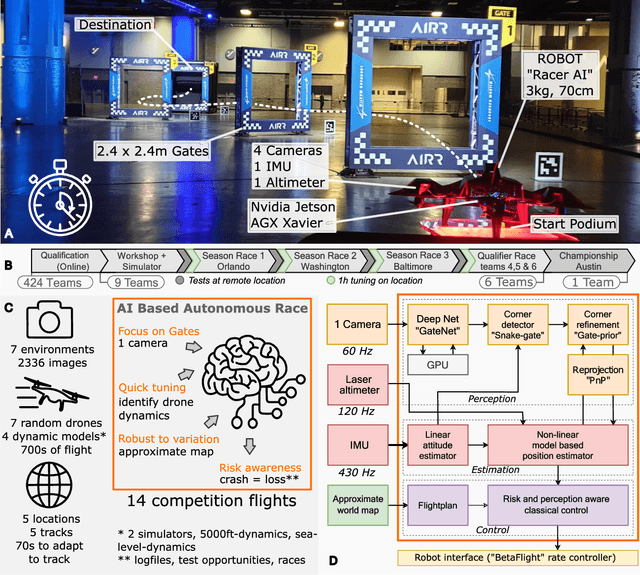

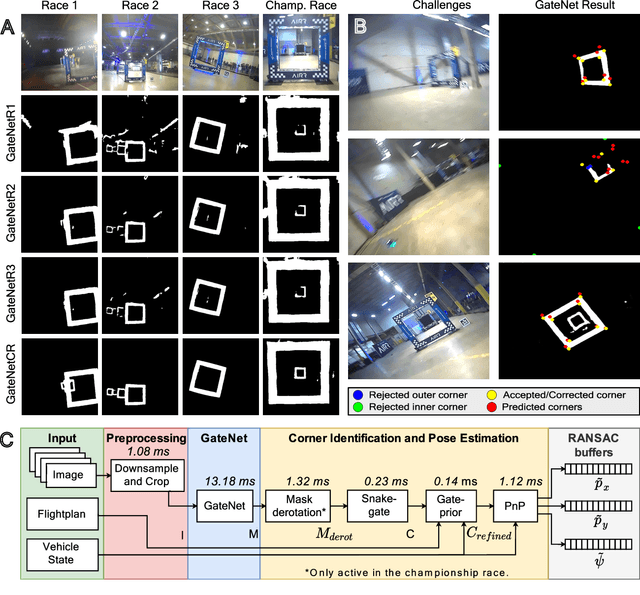

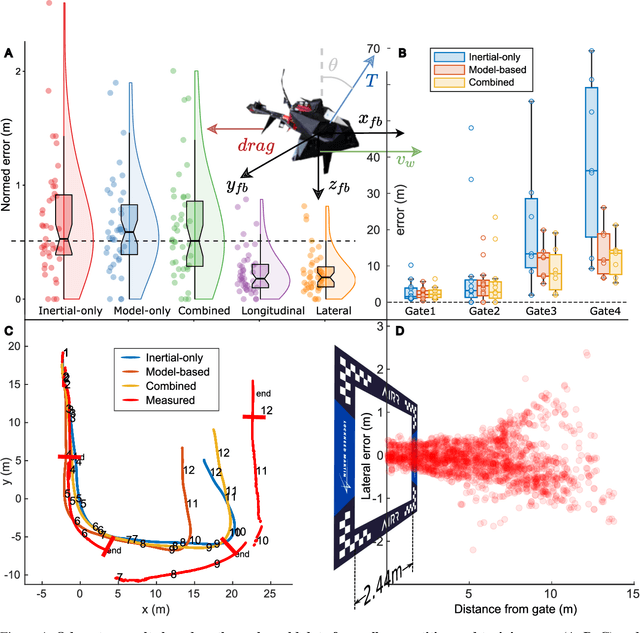

Sep 30, 2021

Abstract: Robotics is the next frontier in the progress of Artificial Intelligence (AI), as the real world in which robots operate represents an enormous, complex, continuous state space with inherent real-time requirements. One extreme challenge in robotics is currently formed by autonomous drone racing. Human drone racers can fly through complex tracks at speeds of up to 190 km/h. Achieving similar speeds with autonomous drones signifies tackling fundamental problems in AI under extreme restrictions in terms of resources. In this article, we present the winning solution of the first AI Robotic Racing (AIRR) Circuit, a competition consisting of four races in which all participating teams used the same drone, to which they had limited access. The core of our approach is inspired by how human pilots combine noisy observations of the race gates with their mental model of the drone's dynamics to achieve fast control. Our approach has a large focus on gate detection with an efficient deep neural segmentation network and active vision. Further, we make contributions to robust state estimation and risk-based control. This allowed us to reach speeds of ~9.2 m/s in the last race, unrivaled by previous autonomous drone race competitions. Although our solution was the fastest and most robust, it still lost against one of the best human pilots, Gab707. The presented approach indicates a promising direction to close the gap with human drone pilots, forming an important step in bringing AI to the real world.

Self-Supervised Learning of Event-Based Optical Flow with Spiking Neural Networks

Jun 03, 2021

Abstract: Neuromorphic sensing and computing hold a promise for highly energy-efficient and high-bandwidth-sensor processing. A major challenge for neuromorphic computing is that learning algorithms for traditional artificial neural networks (ANNs) do not transfer directly to spiking neural networks (SNNs) due to the discrete spikes and more complex neuronal dynamics. As a consequence, SNNs have not yet been successfully applied to complex, large-scale tasks. In this article, we focus on the self-supervised learning problem of optical flow estimation from event-based camera inputs, and investigate the changes that are necessary to the state-of-the-art ANN training pipeline in order to successfully tackle it with SNNs. More specifically, we first modify the input event representation to encode a much smaller time slice with minimal explicit temporal information. Consequently, we make the network's neuronal dynamics and recurrent connections responsible for integrating information over time. Moreover, we reformulate the self-supervised loss function for event-based optical flow to improve its convexity. We perform experiments with various types of recurrent ANNs and SNNs using the proposed pipeline. Concerning SNNs, we investigate the effects of elements such as parameter initialization and optimization, surrogate gradient shape, and adaptive neuronal mechanisms. We find that initialization and surrogate gradient width play a crucial part in enabling learning with sparse inputs, while the inclusion of adaptivity and learnable neuronal parameters can improve performance. We show that the performance of the proposed ANNs and SNNs is on par with that of the current state-of-the-art ANNs trained in a self-supervised manner.
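To illustrate what "surrogate gradient width" refers to, here is a minimal sketch. A spiking neuron's forward pass uses a step function whose true derivative is zero almost everywhere, so training substitutes a smooth stand-in during backpropagation. The fast-sigmoid-style shape and the `width` parameter below are one common choice picked for illustration; the abstract does not specify which surrogate shapes the authors used, and the function names are assumptions.

```python
def spike(v, threshold=1.0):
    """Forward pass: emit a spike (1.0) once the potential reaches threshold."""
    return 1.0 if v >= threshold else 0.0


def surrogate_grad(v, threshold=1.0, width=1.0):
    """Backward pass stand-in for d(spike)/dv.

    Assumed fast-sigmoid-style shape: 1 / (1 + |v - threshold| / width)^2.
    A larger width lets gradient reach neurons far from threshold.
    """
    return 1.0 / (1.0 + abs(v - threshold) / width) ** 2


# A neuron sitting well below threshold, as is common with sparse inputs.
v = 0.0
print(surrogate_grad(v, width=1.0))  # 0.25
print(surrogate_grad(v, width=4.0))  # 0.64
```

With a narrow surrogate, neurons that rarely approach threshold receive little gradient, which is one plausible reading of why width matters when inputs are sparse.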
