Julien Dupeyroux

Neuromorphic computing for attitude estimation onboard quadrotors

Apr 18, 2023

Abstract:Compelling evidence has been given for the high energy efficiency and update rates of neuromorphic processors, with performance beyond what standard von Neumann architectures can achieve. Such promising features could be advantageous in critical embedded systems, especially in robotics. To date, the constraints inherent in robots (e.g., size and weight, battery autonomy, available sensors, computing resources, and processing time), and particularly in aerial vehicles, severely hamper the performance of fully autonomous onboard control, including sensor processing and state estimation. In this work, we propose a spiking neural network (SNN) capable of estimating the pitch and roll angles of a quadrotor during highly dynamic movements from 6-degree-of-freedom inertial measurement unit (IMU) data. With only 150 neurons and a limited training dataset obtained with a quadrotor in a real-world setup, the network shows competitive results compared with state-of-the-art, non-neuromorphic attitude estimators. The proposed architecture was successfully tested on the Loihi neuromorphic processor onboard a quadrotor to estimate its attitude in flight. Our results show the robustness of neuromorphic attitude estimation and pave the way towards energy-efficient, fully autonomous control of quadrotors with dedicated neuromorphic computing systems.
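For context, a common non-neuromorphic baseline for pitch and roll estimation from 6-DoF IMU data is a complementary filter, which blends integrated gyroscope rates with the gravity direction measured by the accelerometer. The sketch below illustrates that baseline only; it is not the paper's SNN, and the function name, the blending factor `alpha`, and the axis convention (z up when level, small-angle rate integration, yaw ignored) are assumptions.

```python
import math


def complementary_filter(gyro, accel, dt, alpha=0.98):
    """Estimate (roll, pitch) in radians from gyro (rad/s) and accel (m/s^2) samples.

    gyro, accel: equally long sequences of (x, y, z) tuples.
    Assumption: body z axis points up when level; body rates are integrated
    directly (small-angle approximation) and yaw coupling is ignored.
    """
    roll, pitch = 0.0, 0.0
    estimates = []
    for (gx, gy, _gz), (ax, ay, az) in zip(gyro, accel):
        # Gravity direction gives drift-free but noisy absolute angles.
        roll_acc = math.atan2(ay, az)
        pitch_acc = math.atan2(-ax, math.sqrt(ay * ay + az * az))
        # Blend integrated gyro rates (smooth, drifting) with the accel angles.
        roll = alpha * (roll + gx * dt) + (1.0 - alpha) * roll_acc
        pitch = alpha * (pitch + gy * dt) + (1.0 - alpha) * pitch_acc
        estimates.append((roll, pitch))
    return estimates
```

A larger `alpha` trusts the gyroscope more (smoother, but slower to correct drift); a smaller one trusts the accelerometer more, which becomes unreliable during highly dynamic movements because the accelerometer then measures more than gravity.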

Fully neuromorphic vision and control for autonomous drone flight

Mar 15, 2023

Abstract:Biological sensing and processing are asynchronous and sparse, leading to low-latency and energy-efficient perception and action. In robotics, neuromorphic hardware for event-based vision and spiking neural networks promises similar characteristics. However, robotic implementations have been limited to basic tasks with low-dimensional sensory inputs and motor actions, owing to the restricted network size of current embedded neuromorphic processors and the difficulty of training spiking neural networks. Here, we present the first fully neuromorphic vision-to-control pipeline for controlling a freely flying drone. Specifically, we train a spiking neural network that accepts high-dimensional raw event-based camera data and outputs low-level control actions for autonomous vision-based flight. The vision part of the network, consisting of five layers and 28.8k neurons, maps incoming raw events to ego-motion estimates and is trained with self-supervised learning on real event data. The control part consists of a single decoding layer and is learned with an evolutionary algorithm in a drone simulator. Robotic experiments show a successful sim-to-real transfer of the fully learned neuromorphic pipeline. The drone can accurately follow different ego-motion setpoints, allowing for hovering, landing, and maneuvering sideways, even while yawing at the same time. The neuromorphic pipeline runs onboard on Intel's Loihi neuromorphic processor at an execution frequency of 200 Hz, spending only 27 µJ per inference. These results illustrate the potential of neuromorphic sensing and processing for enabling smaller, more intelligent robots.
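The two quoted figures imply an average inference power equal to the energy per inference times the execution frequency. A minimal sketch of that arithmetic, assuming the per-inference energy is the only cost counted (no idle, sensor, or I/O power):

```python
# Figures quoted in the abstract.
ENERGY_PER_INFERENCE_J = 27e-6   # 27 µJ
EXECUTION_FREQUENCY_HZ = 200.0   # 200 Hz


def average_inference_power_w(energy_j, frequency_hz):
    """Average power (W) spent on inference when running continuously at a fixed rate."""
    return energy_j * frequency_hz


power_w = average_inference_power_w(ENERGY_PER_INFERENCE_J, EXECUTION_FREQUENCY_HZ)
period_ms = 1000.0 / EXECUTION_FREQUENCY_HZ
print(f"{power_w * 1e3:.1f} mW, one inference every {period_ms:.1f} ms")
# prints "5.4 mW, one inference every 5.0 ms"
```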

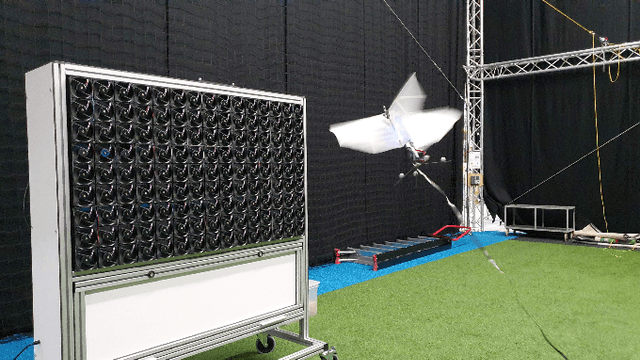

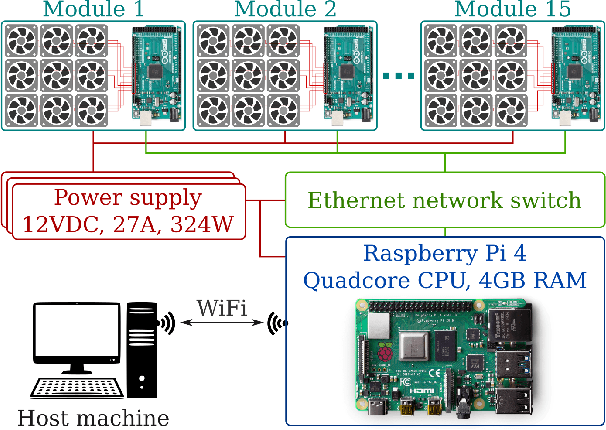

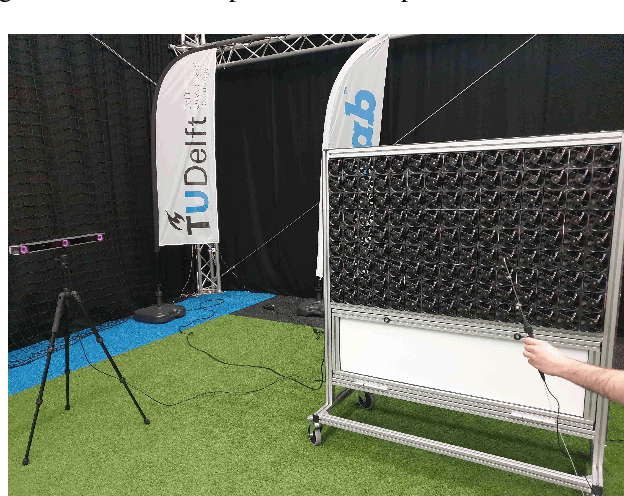

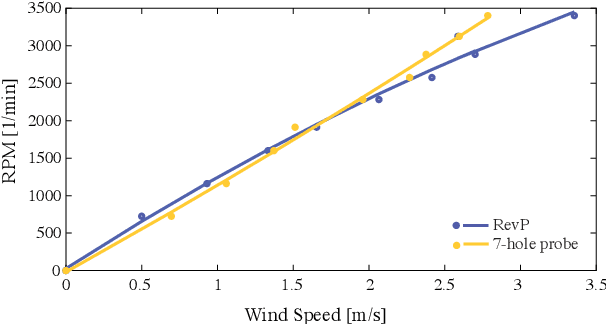

An Experimental Study of Wind Resistance and Power Consumption in MAVs with a Low-Speed Multi-Fan Wind System

Feb 14, 2022

Abstract:This paper presents the low-cost, open-source, open-hardware design and performance evaluation of a low-speed, multi-fan wind system dedicated to micro air vehicle (MAV) testing. In addition, a set of experiments with a flapping-wing MAV and a rotorcraft is presented, demonstrating the capabilities of the system and the properties of these different types of drones in response to various types of wind. We performed two sets of experiments in which an MAV flies into the wake of the fan system while its states, battery voltage, and current are logged. First, we focus on steady wind conditions with wind speeds ranging from 0.5 m/s to 3.4 m/s. In the second set of experiments, we introduce wind gusts by periodically modulating the wind speed between 1.3 m/s and 3.4 m/s at gust frequencies of 0.5 Hz, 0.25 Hz, and 0.125 Hz. Because of its larger wing surface, the "Flapper" flapping-wing MAV requires much larger pitch angles to counter the wind than the "CrazyFlie" quadrotor. In forward flight, however, its wings provide extra lift, considerably reducing its power consumption. In contrast, the CrazyFlie's power consumption remains more nearly constant across wind speeds. The varying-wind experiments show that the CrazyFlie responds to gusts more quickly than the Flapper, although both responses leave room for improvement. We expect that the proposed wind gust system will provide the community with a useful tool for achieving such improvements.
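The abstract does not state the shape of the gust modulation. Assuming a sinusoid between the quoted minimum and maximum speeds, the wind profile and the electrical power derived from the logged battery voltage and current (P = V·I) could be computed as below; the function names and the sinusoidal shape are assumptions, not the system's actual firmware.

```python
import math


def gust_speed(t, v_min=1.3, v_max=3.4, freq_hz=0.5):
    """Wind speed (m/s) at time t (s).

    Assumption: sinusoidal modulation between v_min and v_max at freq_hz.
    """
    mean = 0.5 * (v_min + v_max)
    amplitude = 0.5 * (v_max - v_min)
    return mean + amplitude * math.sin(2.0 * math.pi * freq_hz * t)


def electrical_power(voltages, currents):
    """Instantaneous battery power P = V * I (W) from logged voltage (V) and current (A)."""
    return [v * i for v, i in zip(voltages, currents)]
```

For example, `gust_speed(0.5)` is at the 3.4 m/s peak for the 0.5 Hz setting, a quarter of its 2 s period.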

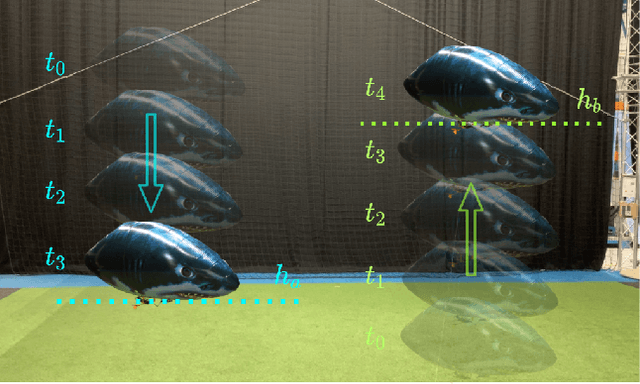

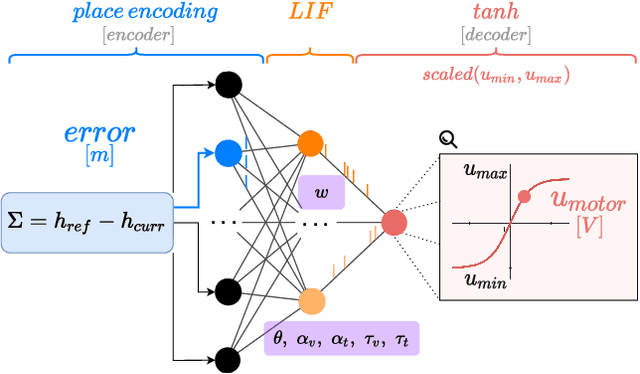

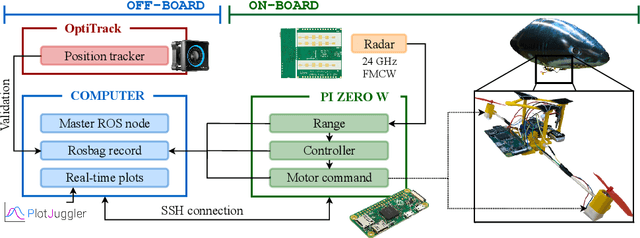

Evolved neuromorphic radar-based altitude controller for an autonomous open-source blimp

Oct 01, 2021

Abstract:Robotic airships offer significant advantages in terms of safety, mobility, and extended flight times. However, their highly restrictive weight constraints pose a major challenge regarding the computational power available to perform the required control tasks. Spiking neural networks (SNNs) are a promising research direction for addressing this problem. By mimicking the biological process of transferring information between neurons through spikes or impulses, they allow for low power consumption and asynchronous, event-driven processing. In this paper, we propose an evolved altitude controller based on an SNN for a robotic airship, which relies solely on the sensory feedback provided by an airborne radar. Starting from the design of a lightweight, low-cost, open-source airship, we also present an SNN-based controller architecture, an evolutionary framework for training the network in a simulated environment, and a control scheme for reducing the reality gap. The system's performance is evaluated through real-world experiments, demonstrating the advantages of our approach by comparing it with an artificial neural network and a linear controller. The results show accurate tracking of the altitude command with efficient control effort.
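Spiking neural networks of this kind are commonly built from leaky integrate-and-fire (LIF) neurons. The following is a generic discrete-time LIF sketch, not the evolved controller from the paper; the decay factor, threshold, and reset-to-zero rule are assumptions chosen for illustration.

```python
def lif_neuron(input_current, decay=0.9, threshold=1.0):
    """Simulate a discrete-time leaky integrate-and-fire neuron.

    input_current: iterable of input values, one per time step.
    Returns a list of output spikes (0 or 1), one per time step.
    Assumption: the membrane potential resets to zero after each spike.
    """
    v = 0.0
    spikes = []
    for i in input_current:
        v = decay * v + i          # leak the potential, then integrate the input
        if v >= threshold:         # fire and reset
            spikes.append(1)
            v = 0.0
        else:
            spikes.append(0)
    return spikes
```

With a constant input of 0.5, the potential needs three steps to cross the threshold, so the neuron fires every third step; stronger inputs raise the firing rate, which is one way a spiking controller can represent a quantity such as altitude error.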

Design and implementation of a parsimonious neuromorphic PID for onboard altitude control for MAVs using neuromorphic processors

Sep 21, 2021

Abstract:The great promise of neuromorphic sensing and processing for robotics has led researchers and engineers to investigate novel models for robust and reliable control of autonomous robots (navigation, obstacle detection and avoidance, etc.), especially quadrotors in challenging contexts such as drone racing and aggressive maneuvers. Using spiking neural networks, these models can run on neuromorphic hardware and benefit from outstanding update rates and high energy efficiency. Yet low-level controllers are often neglected and remain outside the neuromorphic loop. Designing low-level neuromorphic controllers is crucial for replacing the standard PID controller and thereby benefiting from all the advantages of closing the neuromorphic loop. In this paper, we propose a parsimonious and adjustable neuromorphic PID controller, comprising only 93 sparsely connected neurons, that achieves autonomous onboard altitude control of a quadrotor equipped with Intel's Loihi neuromorphic chip. We demonstrate the robustness of the proposed network in a set of experiments in which the quadrotor is commanded to reach a target altitude from take-off. Our results confirm the suitability of such low-level neuromorphic controllers, which can ultimately run at very high update frequencies.
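For reference, the conventional discrete-time PID that the proposed network aims to replace can be sketched as below. This is the standard non-spiking formulation, not the 93-neuron design; the class name, gains, and time step are placeholders.

```python
class PID:
    """Conventional discrete-time PID controller (non-spiking reference design)."""

    def __init__(self, kp, ki, kd, dt):
        self.kp, self.ki, self.kd, self.dt = kp, ki, kd, dt
        self.integral = 0.0
        self.prev_error = None

    def update(self, setpoint, measurement):
        """Return the control output for one time step."""
        error = setpoint - measurement
        self.integral += error * self.dt
        # Skip the derivative term on the first call to avoid a spurious kick.
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / self.dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative
```

For altitude control, `setpoint` would be the target altitude and `measurement` the current altitude estimate, with the output mapped to a thrust command.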

A toolbox for neuromorphic sensing in robotics

Mar 03, 2021

Abstract:The third generation of artificial intelligence (AI) introduced by neuromorphic computing is revolutionizing the way robots and autonomous systems can sense the world, process information, and interact with their environment. The promises of high flexibility, energy efficiency, and robustness of neuromorphic systems are widely supported by software tools for simulating spiking neural networks and by hardware integration (neuromorphic processors). Yet, while much effort has gone into neuromorphic vision (event-based cameras), most of the sensors available for robotics remain inherently incompatible with neuromorphic computing, in which information is encoded into spikes. To facilitate the use of traditional sensors, their output signals must be converted into streams of spikes, i.e., series of events (+1, -1) with their corresponding timestamps. In this paper, we review spike coding algorithms from a robotics perspective, supported by a benchmark that assesses their performance. We also introduce a ROS (Robot Operating System) toolbox to encode and decode input signals from any type of sensor available on a robot. This initiative is meant to stimulate and facilitate the robotic integration of neuromorphic AI, offering the opportunity to adapt traditional off-the-shelf sensors to spiking neural networks within one of the most powerful robotic tools, ROS.
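One of the simplest signal-to-spike schemes of the kind such reviews cover is threshold-based (temporal-contrast or send-on-delta) encoding: an event of polarity +1 or -1 is emitted, with its timestamp, whenever the signal has changed by a fixed threshold since the last event, and decoding accumulates the polarities. The sketch below is illustrative only; the function names are hypothetical and are not the API of the ROS toolbox.

```python
def encode_temporal_contrast(times, values, threshold):
    """Convert a sampled signal into (timestamp, polarity) events.

    An event with polarity +1 (-1) is emitted each time the signal has risen
    (fallen) by `threshold` since the last emitted event.
    """
    if threshold <= 0:
        raise ValueError("threshold must be positive")
    if not values:
        return []
    events = []
    reference = values[0]
    for t, v in zip(times, values):
        while v - reference >= threshold:
            reference += threshold
            events.append((t, +1))
        while reference - v >= threshold:
            reference -= threshold
            events.append((t, -1))
    return events


def decode_temporal_contrast(events, initial_value, threshold):
    """Reconstruct the signal level after each event by accumulating polarities."""
    level = initial_value
    reconstruction = []
    for t, polarity in events:
        level += polarity * threshold
        reconstruction.append((t, level))
    return reconstruction
```

At every sample, the decoded level stays within one threshold of the true signal, so a smaller threshold trades more events (and bandwidth) for a more faithful reconstruction.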

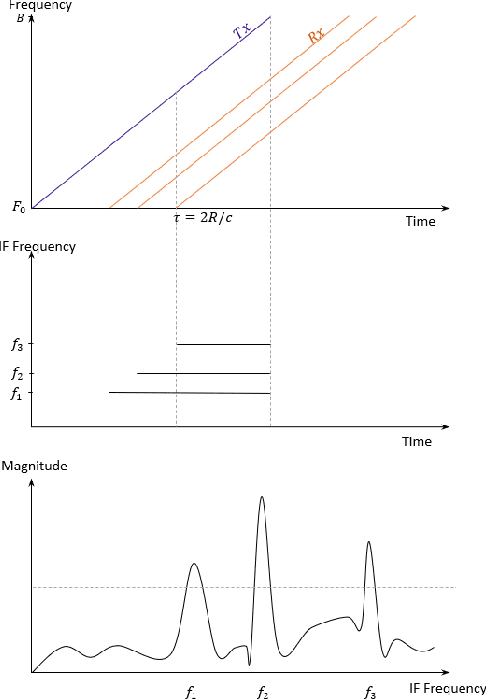

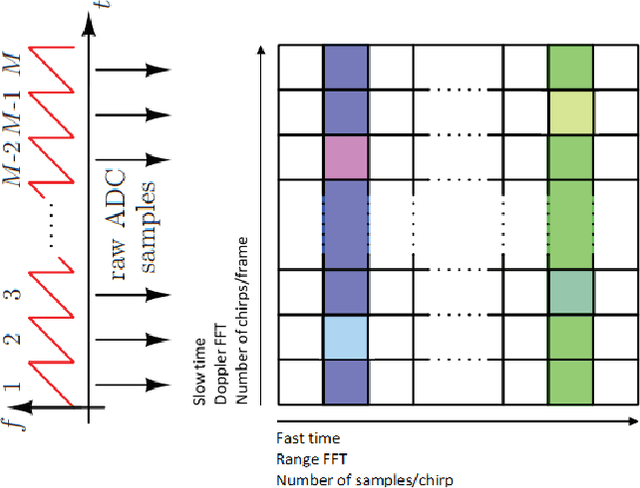

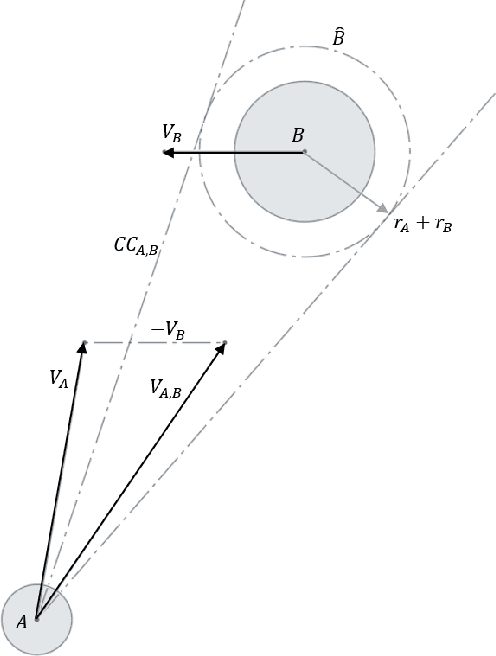

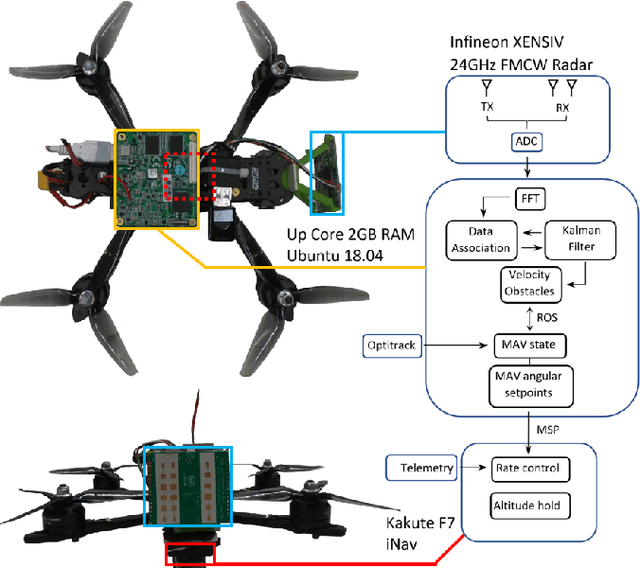

Obstacle Avoidance onboard MAVs using a FMCW RADAR

Mar 02, 2021

Abstract:Micro Air Vehicles (MAVs) are increasingly being used for complex or hazardous tasks in enclosed and cluttered environments, such as surveillance or search and rescue. This creates a need for sensors that can operate in poor visibility conditions to facilitate navigation and the avoidance of objects or people. Radar sensors in particular can provide more robust sensing of the environment when traditional sensors such as cameras fail in the presence of dust, fog, or smoke. While radars are extensively used in autonomous driving, miniature FMCW radars on MAVs remain relatively unexplored. This study investigates to what extent this sensor is useful in these environments, employing traditional signal processing techniques such as multi-target tracking and velocity obstacles. The viability of the solution is evaluated by implementing it onboard an MAV, running trials in an indoor environment containing obstacles, and comparing its performance with that of a human pilot, demonstrating the potential of the sensor to provide a more robust sense-and-avoid function in fully autonomous MAVs.
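Velocity obstacles flag any velocity that, if held constant, would bring the vehicle within a safety radius of an obstacle. A minimal 2D sketch for a single circular obstacle is shown below, assuming constant velocities and a relative position and velocity supplied by the tracker; it is not the paper's implementation, and the function name is hypothetical.

```python
import math


def in_velocity_obstacle(rel_pos, rel_vel, combined_radius):
    """Return True if holding rel_vel leads to a collision with a circular obstacle.

    rel_pos: obstacle position minus MAV position (m), 2D.
    rel_vel: MAV velocity minus obstacle velocity (m/s), 2D.
    combined_radius: MAV radius plus obstacle radius (m).
    """
    px, py = rel_pos
    vx, vy = rel_vel
    if math.hypot(px, py) <= combined_radius:
        return True  # already overlapping
    speed_sq = vx * vx + vy * vy
    if speed_sq == 0.0:
        return False  # no relative motion, so the distance never shrinks
    # Time of closest approach along the relative-velocity ray, clamped to t >= 0.
    t_star = max(0.0, (px * vx + py * vy) / speed_sq)
    dx, dy = px - vx * t_star, py - vy * t_star
    return math.hypot(dx, dy) < combined_radius
```

A planner would evaluate candidate velocities with this test against every tracked obstacle and pick the admissible one closest to the desired velocity.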

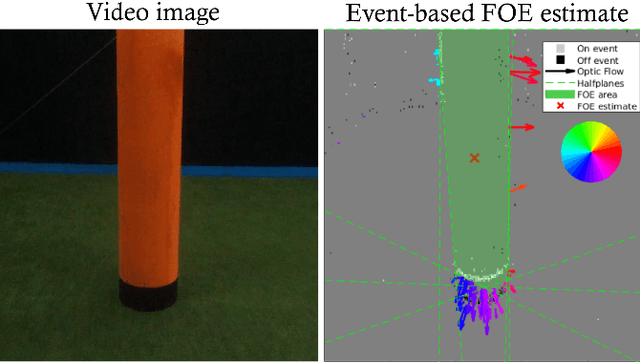

FAITH: Fast iterative half-plane focus of expansion estimation using event-based optic flow

Feb 25, 2021

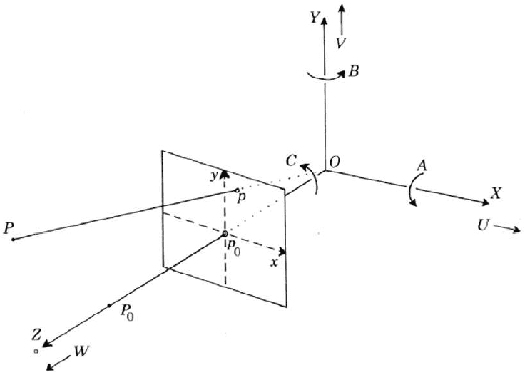

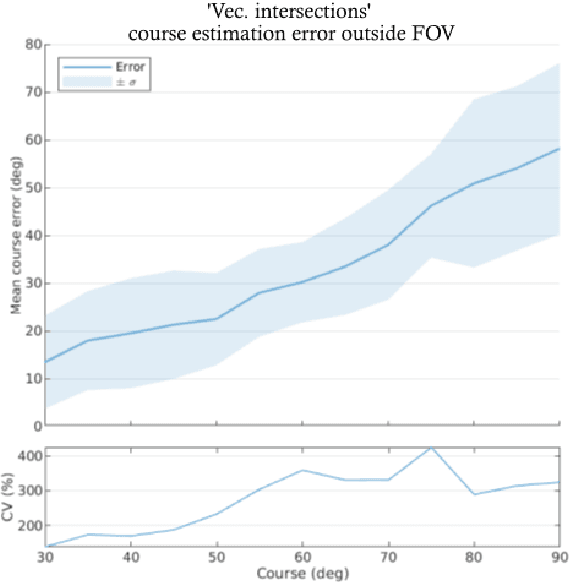

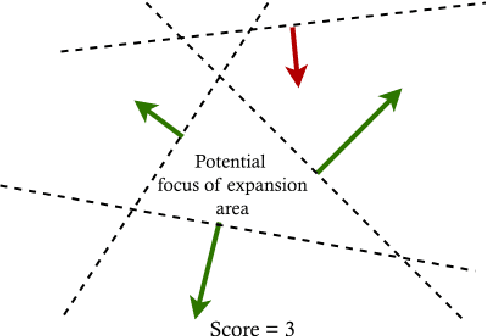

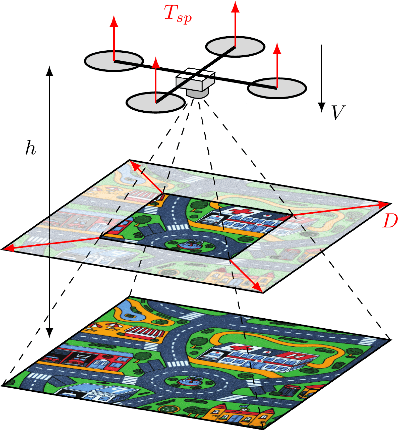

Abstract:Course estimation is a key component in the development of autonomous navigation systems for robots. State-of-the-art methods widely rely on vision-based algorithms, but these struggle with the complexity of the real world because they are computationally greedy and sometimes too slow. They also often require highly textured obstacles to perform well, particularly when the obstacle lies within the focus of expansion (FOE), where the optic flow (OF) is almost null. This study proposes the FAst ITerative Half-plane (FAITH) method to determine the course of a micro air vehicle (MAV). This is achieved using an event-based camera together with a fast RANSAC-based algorithm that uses event-based OF to determine the FOE. The performance is validated through a benchmark in a simulated environment and then tested on a dataset collected for indoor obstacle avoidance. Our results show that our solution is more computationally efficient than state-of-the-art methods while maintaining high accuracy. This was further demonstrated onboard an MAV equipped with an event-based camera, showing that our event-based FOE estimation can run online onboard tiny drones, thus opening the path towards fully neuromorphic solutions for autonomous obstacle avoidance and navigation onboard MAVs.
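The half-plane idea can be illustrated as follows: under pure forward translation, the flow at pixel p points away from the FOE e, so every flow vector f restricts e to the half-plane (p - e) · f >= 0. The sketch below simply scores integer grid points by how many constraints they satisfy and returns the centroid of the best ones. It is a brute-force simplification for illustration, not the iterative RANSAC-based FAITH algorithm, and all names are hypothetical.

```python
def half_plane_votes(candidate, flow):
    """Count flow vectors whose half-plane constraint the candidate FOE satisfies.

    flow: list of ((px, py), (fx, fy)) tuples, i.e. pixel position and flow vector.
    Assumption: pure forward translation, so (p - e) . f >= 0 for the true FOE e.
    """
    ex, ey = candidate
    return sum(1 for (px, py), (fx, fy) in flow
               if (px - ex) * fx + (py - ey) * fy >= 0.0)


def estimate_foe_grid(flow, width, height):
    """Return the centroid of the grid points satisfying the most constraints, and that count."""
    best_votes, best_points = -1, []
    for y in range(height):
        for x in range(width):
            votes = half_plane_votes((x, y), flow)
            if votes > best_votes:
                best_votes, best_points = votes, [(x, y)]
            elif votes == best_votes:
                best_points.append((x, y))
    cx = sum(p[0] for p in best_points) / len(best_points)
    cy = sum(p[1] for p in best_points) / len(best_points)
    return (cx, cy), best_votes
```

Flow near the FOE gives the tightest constraints, which is also where magnitude-based methods struggle because the flow there is almost null; a half-plane test only needs the flow direction.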

Neuromorphic control for optic-flow-based landings of MAVs using the Loihi processor

Nov 01, 2020

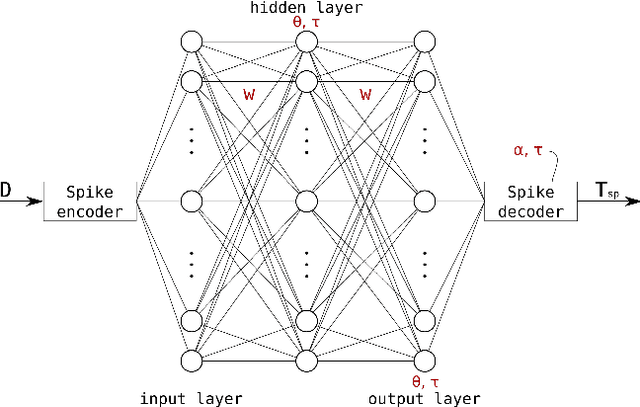

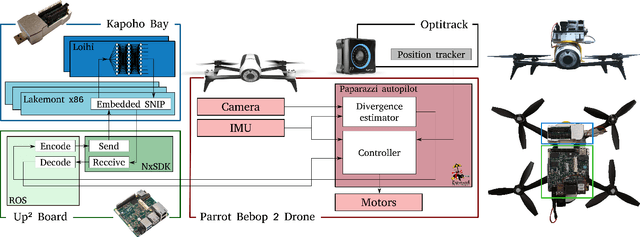

Abstract:Neuromorphic processors like Loihi offer a promising alternative to conventional computing modules for endowing constrained systems like micro air vehicles (MAVs) with robust, efficient, and autonomous skills such as take-off and landing, obstacle avoidance, and pursuit. However, a major challenge for using such processors on robotic platforms is the reality gap between simulation and the real world. In this study, we present the first fully embedded application of the Loihi neuromorphic chip prototype in a flying robot. A spiking neural network (SNN) was evolved to compute the thrust command from the divergence of the ventral optic flow field to perform autonomous landing. Evolution was performed in a Python-based simulator using the PySNN library. The resulting network architecture consists of only 35 neurons distributed among 3 layers. A quantitative comparison between simulation and Loihi reveals a root-mean-square error of the thrust setpoint as low as 0.005 g, along with 99.8% matching of the spike sequences in the hidden layer and 99.7% in the output layer. The proposed approach successfully bridges the reality gap, offering important insights for future neuromorphic applications in robotics. Supplementary material is available at https://mavlab.tudelft.nl/loihi/.
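For a downward-looking camera over flat ground, the divergence of the ventral optic flow field equals the vertical speed divided by the height, and the simulation-to-Loihi comparison above is expressed as a root-mean-square error. A minimal sketch of both quantities, assuming a flat ground plane and a sign convention in which descending speed is positive (function names are hypothetical):

```python
import math


def ventral_divergence(height_m, descent_speed_m_s):
    """Divergence (1/s) of the ventral optic flow for a downward-looking camera.

    Assumptions: flat ground; descent_speed_m_s > 0 when descending,
    so the divergence is positive while approaching the ground.
    """
    if height_m <= 0.0:
        raise ValueError("height must be positive")
    return descent_speed_m_s / height_m


def rmse(a, b):
    """Root-mean-square error between two equally long, non-empty sequences."""
    if len(a) != len(b) or not a:
        raise ValueError("sequences must be non-empty and of equal length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))
```

Holding the divergence constant at D during descent gives h(t) = h0·exp(-D·t), so the vehicle slows down smoothly as it approaches the ground.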
