Nikhil Wessendorp

Obstacle Avoidance onboard MAVs using a FMCW RADAR

Mar 02, 2021

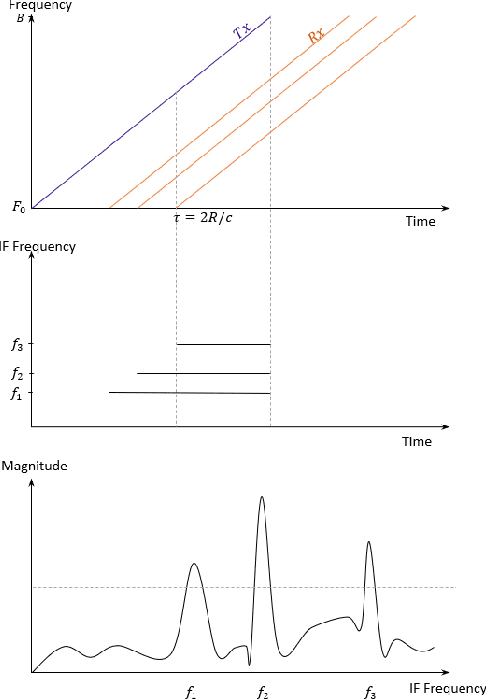

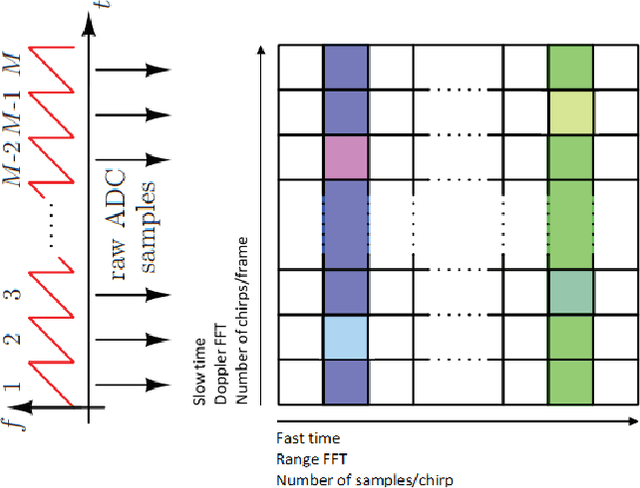

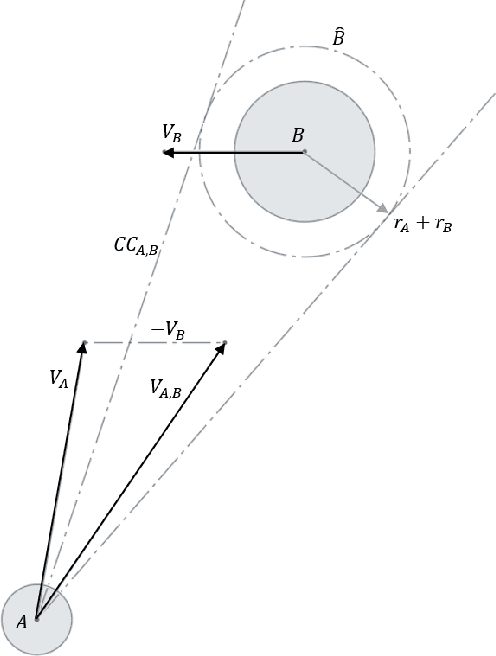

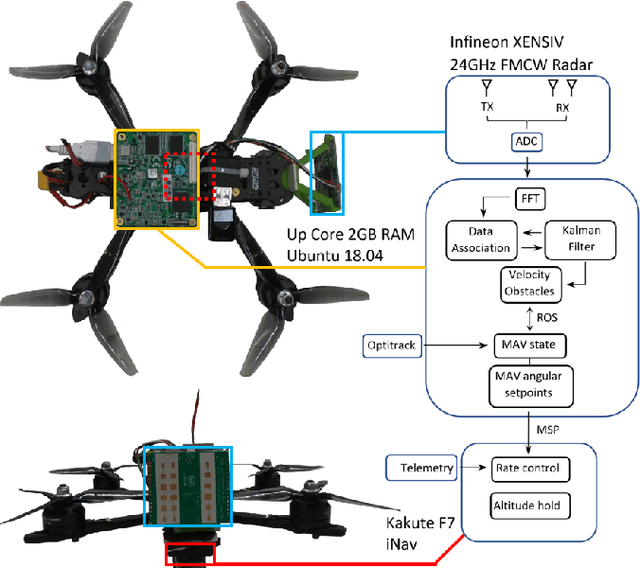

Abstract: Micro Air Vehicles (MAVs) are increasingly being used for complex or hazardous tasks in enclosed and cluttered environments, such as surveillance or search and rescue. With this comes the need for sensors that can operate in poor visibility conditions to facilitate navigation and the avoidance of objects or people. Radar sensors in particular can provide more robust sensing of the environment when traditional sensors such as cameras fail in the presence of dust, fog or smoke. While extensively used in autonomous driving, miniature FMCW radars on MAVs have been relatively unexplored. This study investigates to what extent this sensor is of use in these environments by employing traditional signal processing such as multi-target tracking and velocity obstacles. The viability of the solution is evaluated with an implementation on board an MAV, by running trial tests in an indoor environment containing obstacles and by comparison with a human pilot, demonstrating the potential for the sensor to provide a more robust sense-and-avoid function in fully autonomous MAVs.

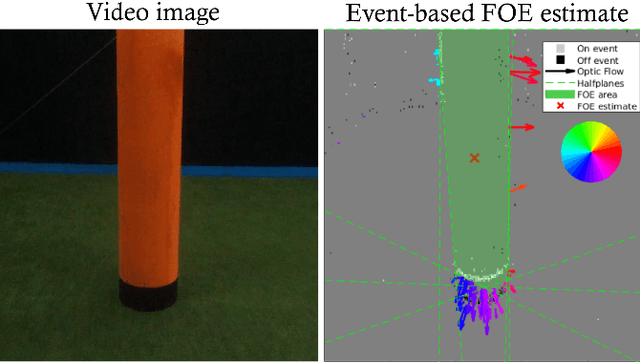

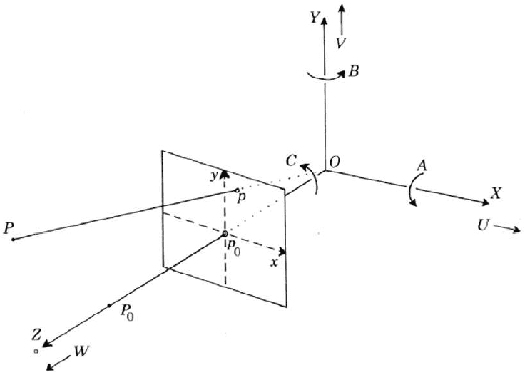

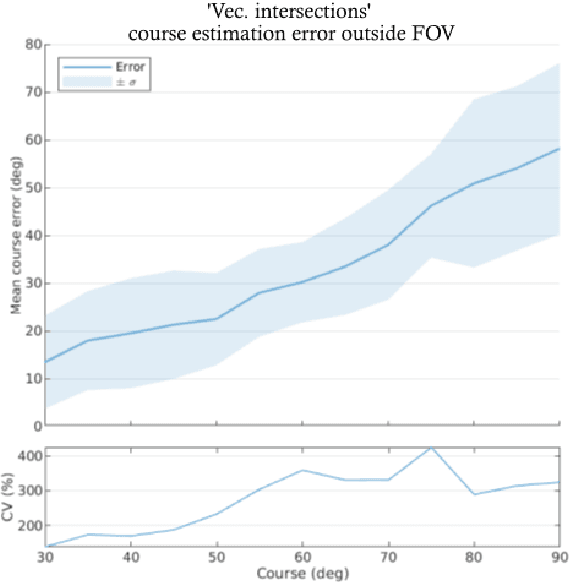

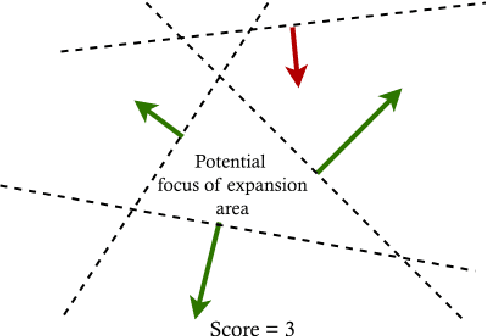

FAITH: Fast iterative half-plane focus of expansion estimation using event-based optic flow

Feb 25, 2021

Abstract: Course estimation is a key component in the development of autonomous navigation systems for robots. While state-of-the-art methods widely use vision-based algorithms, they all fail to deal with the complexity of the real world because they are computationally greedy and sometimes too slow. They often require obstacles to be highly textured to improve overall performance, particularly when the obstacle is located within the focus of expansion (FOE), where the optic flow (OF) is almost null. This study proposes the FAst ITerative Half-plane (FAITH) method to determine the course of a micro air vehicle (MAV). This is achieved by means of an event-based camera, along with a fast RANSAC-based algorithm that uses event-based OF to determine the FOE. The performance is validated by means of a benchmark in a simulated environment and then tested on a dataset collected for indoor obstacle avoidance. Our results show that the computational efficiency of our solution outperforms state-of-the-art methods while keeping a high level of accuracy. This has been further demonstrated onboard an MAV equipped with an event-based camera, showing that our event-based FOE estimation can be achieved online onboard tiny drones, thus opening the path towards fully neuromorphic solutions for autonomous obstacle avoidance and navigation onboard MAVs.
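To make the idea of estimating the FOE from optic flow concrete, here is a minimal sketch. It is not the FAITH half-plane RANSAC procedure described in the abstract; it swaps in a plain least-squares fit, assuming pure translation, full (not normal) flow vectors and no outliers. Under those assumptions each flow vector points directly away from the FOE, so every vector contributes one linear constraint on the FOE position. The function names `estimate_foe_least_squares` and `synthetic_radial_flow` are hypothetical and chosen only for this illustration.

```python
import numpy as np


def estimate_foe_least_squares(points, flows):
    """Estimate the focus of expansion (FOE) from optic flow vectors.

    Illustrative assumption: the camera undergoes pure translation, so the
    flow u at pixel p is parallel to (p - foe). The 2D cross product of u
    and (p - foe) is then zero, which gives one linear equation per vector:
        u_y * foe_x - u_x * foe_y = u_y * p_x - u_x * p_y
    """
    points = np.asarray(points, dtype=float)
    flows = np.asarray(flows, dtype=float)
    # One row per flow vector: [u_y, -u_x] @ foe = u_y * p_x - u_x * p_y
    A = np.column_stack((flows[:, 1], -flows[:, 0]))
    b = flows[:, 1] * points[:, 0] - flows[:, 0] * points[:, 1]
    foe, *_ = np.linalg.lstsq(A, b, rcond=None)
    return foe


def synthetic_radial_flow(foe, points, gain=0.1):
    """Generate ideal expanding flow around a known FOE (test helper)."""
    return gain * (np.asarray(points, dtype=float) - np.asarray(foe, dtype=float))


if __name__ == "__main__":
    pts = [[0, 0], [100, 0], [0, 80], [120, 90], [50, 10]]
    flow = synthetic_radial_flow([60.0, 40.0], pts)
    print(estimate_foe_least_squares(pts, flow))
```

A least-squares fit like this is sensitive to outliers and to regions with near-zero flow, which is one reason a robust, RANSAC-style scheme such as the one named in the abstract is attractive in practice.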
