Guido de Croon

Breaking the Circle: An Autonomous Control-Switching Strategy for Stable Orographic Soaring in MAVs

Oct 27, 2025

Abstract:Orographic soaring can significantly extend the endurance of micro aerial vehicles (MAVs), but circling behavior, arising from control conflicts between the longitudinal and vertical axes, increases energy consumption and the risk of divergence. We propose a control switching method, named SAOS: Switched Control for Autonomous Orographic Soaring, which mitigates circling behavior by selectively controlling either the horizontal or vertical axis, effectively transforming the system from underactuated to fully actuated during soaring. Additionally, the angle of attack is incorporated into the INDI controller to improve force estimation. Simulations with randomized initial positions and wind tunnel experiments on two MAVs demonstrate that the SAOS improves position convergence, reduces throttle usage, and mitigates roll oscillations caused by pitch-roll coupling. These improvements enhance energy efficiency and flight stability in constrained soaring environments.

On-Device Self-Supervised Learning of Low-Latency Monocular Depth from Only Events

Dec 09, 2024

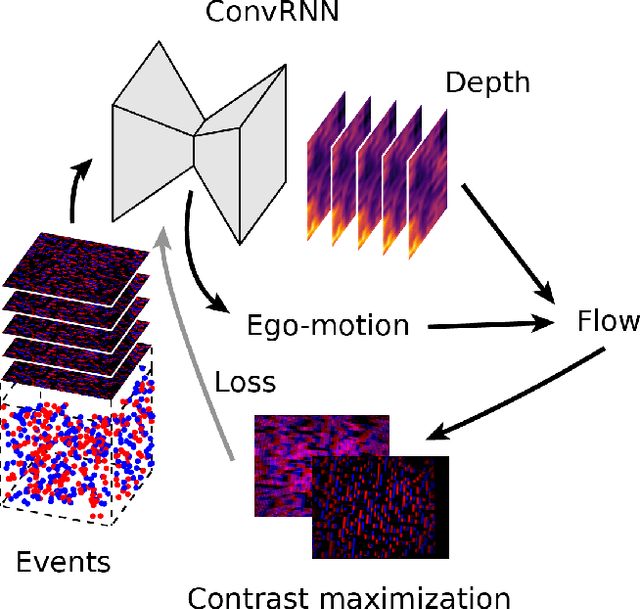

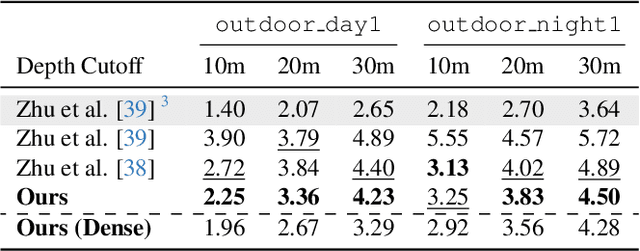

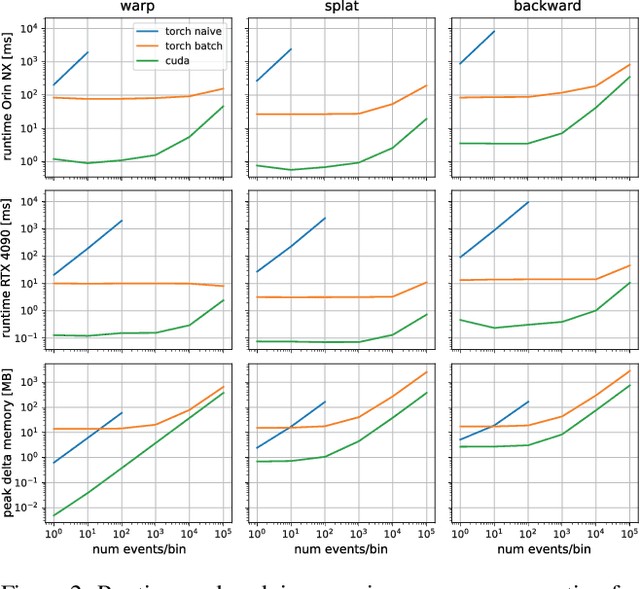

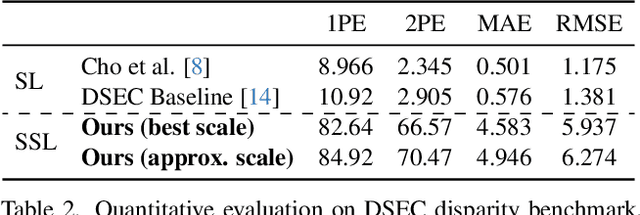

Abstract:Event cameras provide low-latency perception for only milliwatts of power. This makes them highly suitable for resource-restricted, agile robots such as small flying drones. Self-supervised learning based on contrast maximization holds great potential for event-based robot vision, as it forgoes the need for high-frequency ground truth and allows for online learning in the robot's operational environment. However, online, onboard learning raises the major challenge of achieving sufficient computational efficiency for real-time learning, while maintaining competitive visual perception performance. In this work, we improve the time and memory efficiency of the contrast maximization learning pipeline. Benchmarking experiments show that the proposed pipeline achieves competitive results with the state of the art on the task of depth estimation from events. Furthermore, we demonstrate the usability of the learned depth for obstacle avoidance through real-world flight experiments. Finally, we compare the performance of different combinations of pre-training and fine-tuning of the depth estimation networks, showing that on-board domain adaptation is feasible given a few minutes of flight.

Event-based Optical Flow on Neuromorphic Processor: ANN vs. SNN Comparison based on Activation Sparsification

Jul 29, 2024

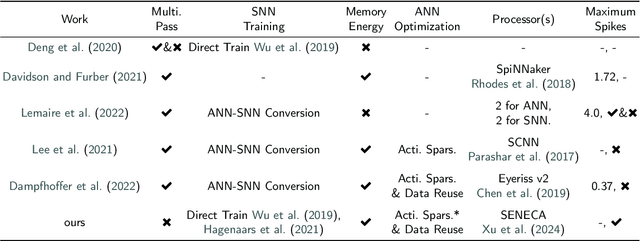

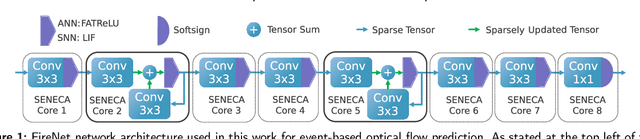

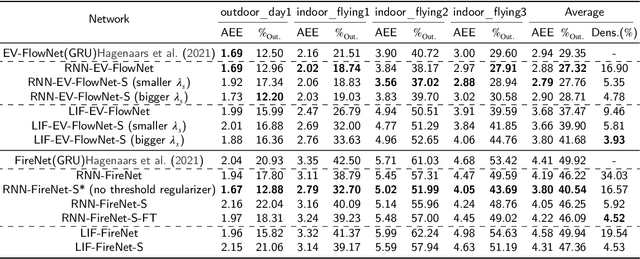

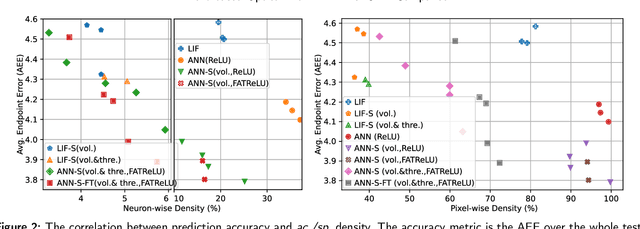

Abstract:Spiking neural networks (SNNs) for event-based optical flow are claimed to be computationally more efficient than their artificial neural network (ANN) counterparts, but a fair comparison is missing in the literature. In this work, we propose an event-based optical flow solution based on activation sparsification and a neuromorphic processor, SENECA. SENECA has an event-driven processing mechanism that can exploit the sparsity in ANN activations and SNN spikes to accelerate the inference of both types of neural networks. The ANN and the SNN for comparison have similar low activation/spike density (~5%) thanks to our novel sparsification-aware training. In the hardware-in-loop experiments designed to deduce the average time and energy consumption, the SNN consumes 44.9 ms and 927.0 microjoules, which are 62.5% and 75.2% of the ANN's consumption, respectively. We find that the SNN's higher efficiency is attributable to its lower pixel-wise spike density (43.5% vs. 66.5%), which requires fewer memory access operations for neuron states.
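As a back-of-the-envelope reading of the reported ratios, the sketch below derives the ANN time and energy implied by the SNN figures and percentages in the abstract. The helper name `implied_baseline` is hypothetical, and the resulting ANN values are derived here rather than quoted from the source.

```python
def implied_baseline(value: float, percent_of_baseline: float) -> float:
    """Return the baseline such that `value` is `percent_of_baseline` % of it."""
    return value / (percent_of_baseline / 100.0)


# Figures stated in the abstract for the SNN.
snn_time_ms = 44.9
snn_energy_uj = 927.0

# Derived (not reported) ANN figures, assuming the percentages are exact.
ann_time_ms = implied_baseline(snn_time_ms, 62.5)
ann_energy_uj = implied_baseline(snn_energy_uj, 75.2)

print(f"Implied ANN time:   {ann_time_ms:.1f} ms")
print(f"Implied ANN energy: {ann_energy_uj:.1f} uJ")
```

Because the published percentages are rounded, the derived ANN values should be read as approximate.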

Direct learning of home vector direction for insect-inspired robot navigation

May 06, 2024

Abstract:Insects have long been recognized for their ability to navigate and return home using visual cues from their nest's environment. However, the precise mechanism underlying this remarkable homing skill remains a subject of ongoing investigation. Drawing inspiration from the learning flights of honey bees and wasps, we propose a robot navigation method that directly learns the home vector direction from visual percepts during a learning flight in the vicinity of the nest. After learning, the robot will travel away from the nest, come back by means of odometry, and eliminate the resultant drift by inferring the home vector orientation from the currently experienced view. Using a compact convolutional neural network, we demonstrate successful learning in both simulated and real forest environments, as well as successful homing control of a simulated quadrotor. The average errors of the inferred home vectors in general stay well below the 90° required for successful homing, and below 24° if all images contain sufficient texture and illumination. Moreover, we show that the trajectory followed during the initial learning flight has a pronounced impact on the network's performance. A higher density of sample points in proximity to the nest results in a more consistent return. Code and data are available at https://mavlab.tudelft.nl/learning_to_home.
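The 90° bound mentioned in the abstract can be illustrated geometrically: a short step taken along an inferred home direction reduces the distance to the nest only if the direction error is below 90°. The following is a minimal sketch of that reasoning using the law of cosines; the function name, the starting distance, and the step size are illustrative assumptions, not values from the paper.

```python
import math


def distance_after_step(distance: float, heading_error_deg: float, step: float) -> float:
    """Distance to the nest after one step taken `heading_error_deg` off the true home direction."""
    err = math.radians(heading_error_deg)
    # Law of cosines on the triangle (old position, new position, nest).
    return math.sqrt(distance**2 + step**2 - 2.0 * distance * step * math.cos(err))


start_distance = 10.0  # assumed distance to the nest, arbitrary units
step_size = 0.1        # assumed step length, small relative to the distance

for error_deg in (0, 24, 60, 89, 120):
    new_distance = distance_after_step(start_distance, error_deg, step_size)
    trend = "closer" if new_distance < start_distance else "farther"
    print(f"error {error_deg:3d} deg -> distance {new_distance:.4f} ({trend})")
```

For a finite step the exact condition is cos(error) > step / (2 × distance), so the usable bound sits slightly below 90° and approaches it as the step shrinks.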

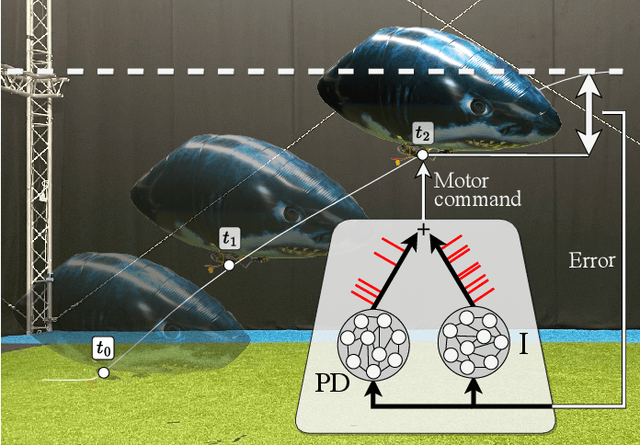

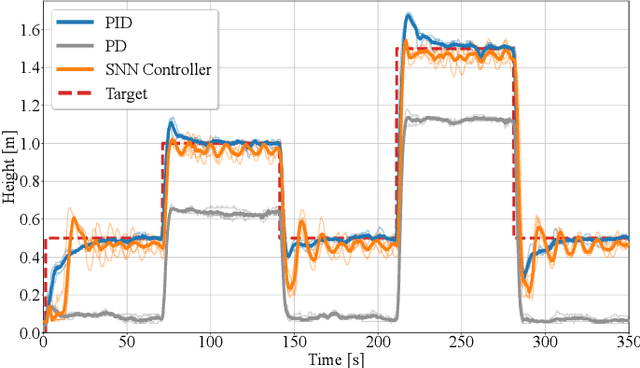

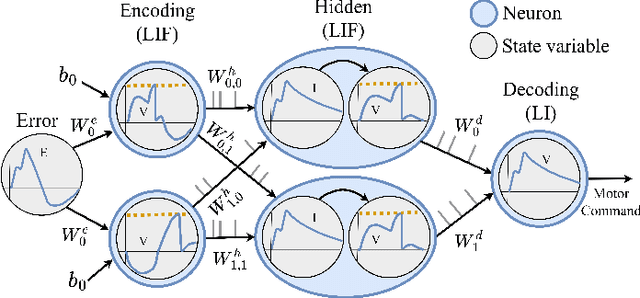

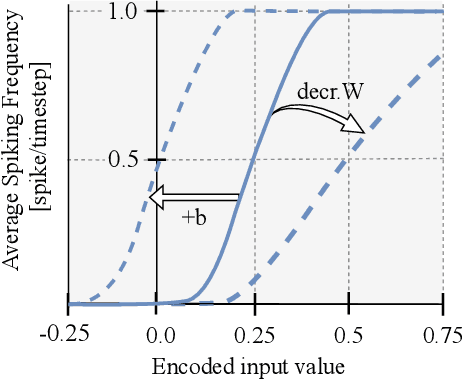

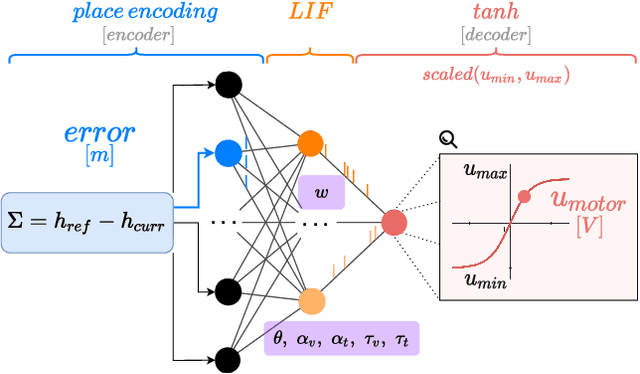

Evolving Spiking Neural Networks to Mimic PID Control for Autonomous Blimps

Sep 22, 2023

Abstract:In recent years, Artificial Neural Networks (ANN) have become a standard in robotic control. However, a significant drawback of large-scale ANNs is their increased power consumption. This becomes a critical concern when designing autonomous aerial vehicles, given the stringent constraints on power and weight. Especially in the case of blimps, known for their extended endurance, power-efficient control methods are essential. Spiking neural networks (SNN) can provide a solution, facilitating energy-efficient and asynchronous event-driven processing. In this paper, we have evolved SNNs for accurate altitude control of a non-neutrally buoyant indoor blimp, relying solely on onboard sensing and processing power. The blimp's altitude tracking performance significantly improved compared to prior research, showing reduced oscillations and a minimal steady-state error. The parameters of the SNNs were optimized via an evolutionary algorithm, using a Proportional-Integral-Derivative (PID) controller as the target signal. We developed two complementary SNN controllers while examining various hidden layer structures. The first controller responds swiftly to control errors, mitigating overshooting and oscillations, while the second minimizes steady-state errors due to non-neutral buoyancy-induced drift. Despite the blimp's drivetrain limitations, our SNN controllers ensured stable altitude control, employing only 160 spiking neurons.
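To make the idea of using a PID controller as the target signal concrete, here is a minimal sketch in which a discrete PID produces reference outputs and a candidate controller is scored against them. The gains, the error sequence, and the mean-squared-error fitness are all assumptions chosen for illustration; the abstract does not specify the actual fitness function, gains, or SNN encoding.

```python
from dataclasses import dataclass


@dataclass
class PID:
    """Textbook discrete PID controller (gains are placeholders)."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: float = 0.0

    def step(self, error: float, dt: float) -> float:
        self.integral += error * dt
        derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def pid_targets(errors, dt, gains=(1.0, 0.1, 0.5)):
    """Run the PID over an error sequence to obtain the target control signal."""
    pid = PID(*gains)
    return [pid.step(e, dt) for e in errors]


def fitness(candidate_outputs, targets):
    """Assumed fitness: mean squared error to the PID targets (lower is better)."""
    return sum((c - t) ** 2 for c, t in zip(candidate_outputs, targets)) / len(targets)


errors = [1.0, 0.8, 0.5, 0.2, 0.0]  # hypothetical altitude errors
targets = pid_targets(errors, dt=0.1)

# A candidate that reproduces the PID exactly scores 0; one that outputs nothing scores worse.
perfect_score = fitness(targets, targets)
silent_score = fitness([0.0] * len(targets), targets)
print(perfect_score, silent_score)
```

In an evolutionary loop, a score of this kind would be evaluated for each candidate network, with the decoded SNN output taking the place of `candidate_outputs`.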

NeuroBench: Advancing Neuromorphic Computing through Collaborative, Fair and Representative Benchmarking

Apr 15, 2023

Abstract:The field of neuromorphic computing holds great promise in terms of advancing computing efficiency and capabilities by following brain-inspired principles. However, the rich diversity of techniques employed in neuromorphic research has resulted in a lack of clear standards for benchmarking, hindering effective evaluation of the advantages and strengths of neuromorphic methods compared to traditional deep-learning-based methods. This paper presents a collaborative effort, bringing together members from academia and the industry, to define benchmarks for neuromorphic computing: NeuroBench. The goals of NeuroBench are to be a collaborative, fair, and representative benchmark suite developed by the community, for the community. In this paper, we discuss the challenges associated with benchmarking neuromorphic solutions, and outline the key features of NeuroBench. We believe that NeuroBench will be a significant step towards defining standards that can unify the goals of neuromorphic computing and drive its technological progress. Please visit neurobench.ai for the latest updates on the benchmark tasks and metrics.

Fully neuromorphic vision and control for autonomous drone flight

Mar 15, 2023

Abstract:Biological sensing and processing is asynchronous and sparse, leading to low-latency and energy-efficient perception and action. In robotics, neuromorphic hardware for event-based vision and spiking neural networks promises to exhibit similar characteristics. However, robotic implementations have been limited to basic tasks with low-dimensional sensory inputs and motor actions due to the restricted network size in current embedded neuromorphic processors and the difficulties of training spiking neural networks. Here, we present the first fully neuromorphic vision-to-control pipeline for controlling a freely flying drone. Specifically, we train a spiking neural network that accepts high-dimensional raw event-based camera data and outputs low-level control actions for performing autonomous vision-based flight. The vision part of the network, consisting of five layers and 28.8k neurons, maps incoming raw events to ego-motion estimates and is trained with self-supervised learning on real event data. The control part consists of a single decoding layer and is learned with an evolutionary algorithm in a drone simulator. Robotic experiments show a successful sim-to-real transfer of the fully learned neuromorphic pipeline. The drone can accurately follow different ego-motion setpoints, allowing for hovering, landing, and maneuvering sideways, even while yawing at the same time. The neuromorphic pipeline runs on board on Intel's Loihi neuromorphic processor with an execution frequency of 200 Hz, spending only 27 µJ per inference. These results illustrate the potential of neuromorphic sensing and processing for enabling smaller, more intelligent robots.
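The two figures at the end of the abstract (200 Hz and 27 µJ per inference) imply an average inference power, which the sketch below works out. It assumes one inference per cycle and counts only the stated per-inference energy; whether that figure includes static or idle power is not stated in the abstract. The function name is hypothetical.

```python
def average_power_mw(energy_per_inference_uj: float, rate_hz: float) -> float:
    """Average power in milliwatts for a given per-inference energy and inference rate."""
    # microjoules per inference * inferences per second = microwatts; /1000 gives milliwatts.
    return energy_per_inference_uj * rate_hz / 1000.0


power_mw = average_power_mw(energy_per_inference_uj=27.0, rate_hz=200.0)
print(f"Implied average inference power: {power_mw:.1f} mW")
```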

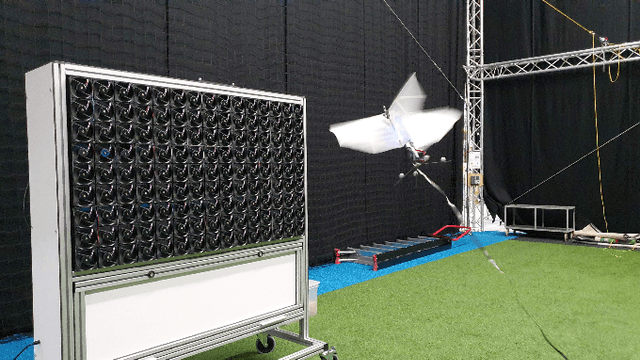

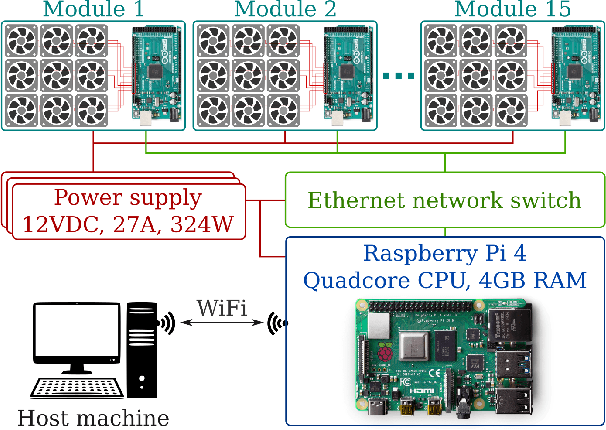

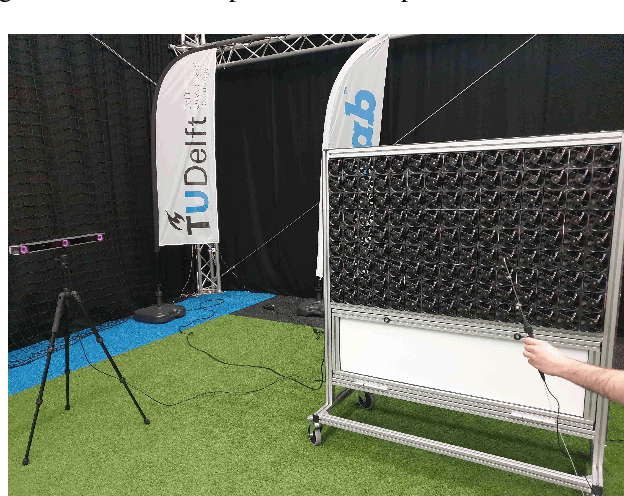

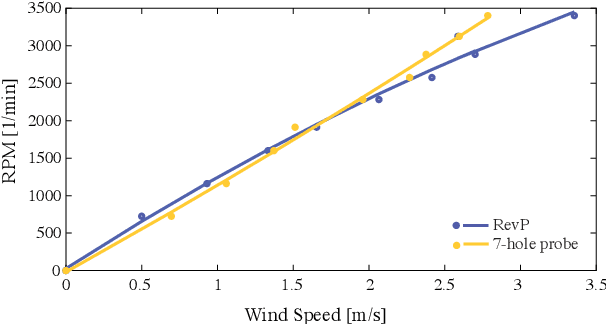

An Experimental Study of Wind Resistance and Power Consumption in MAVs with a Low-Speed Multi-Fan Wind System

Feb 14, 2022

Abstract:This paper discusses a low-cost, open-source and open-hardware design and performance evaluation of a low-speed, multi-fan wind system dedicated to micro air vehicle (MAV) testing. In addition, a set of experiments with a flapping wing MAV and rotorcraft is presented, demonstrating the capabilities of the system and the properties of these different types of drones in response to various types of wind. We performed two sets of experiments where a MAV is flying into the wake of the fan system, gathering data about states, battery voltage and current. Firstly, we focus on steady wind conditions with wind speeds ranging from 0.5 m/s to 3.4 m/s. During the second set of experiments, we introduce wind gusts, by periodically modulating the wind speed from 1.3 m/s to 3.4 m/s with wind gust oscillations of 0.5 Hz, 0.25 Hz and 0.125 Hz. The "Flapper" flapping wing MAV requires much larger pitch angles to counter wind than the "CrazyFlie" quadrotor. This is due to the Flapper's larger wing surface. In forward flight, its wings do provide extra lift, considerably reducing the power consumption. In contrast, the CrazyFlie's power consumption stays more constant for different wind speeds. The experiments with the varying wind show a quicker gust response by the CrazyFlie compared with the Flapper drone, but both their responses could be further improved. We expect that the proposed wind gust system will provide a useful tool to the community to achieve such improvements.

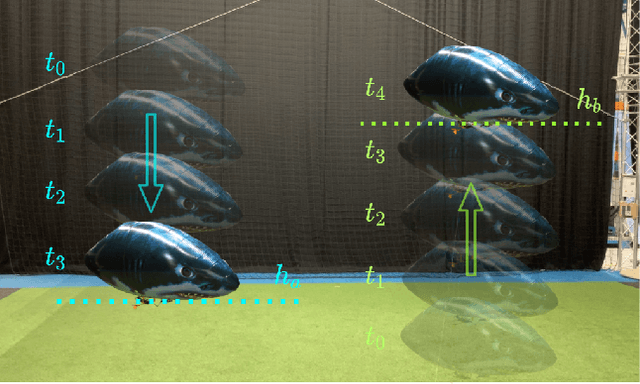

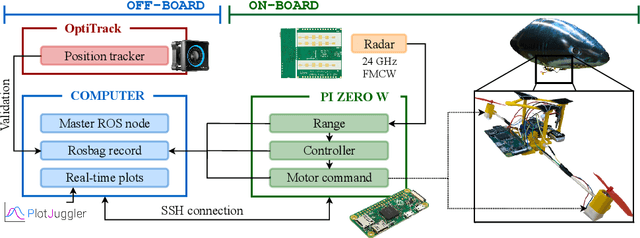

Evolved neuromorphic radar-based altitude controller for an autonomous open-source blimp

Oct 01, 2021

Abstract:Robotic airships offer significant advantages in terms of safety, mobility, and extended flight times. However, their highly restrictive weight constraints pose a major challenge regarding the available computational power to perform the required control tasks. Spiking neural networks (SNNs) are a promising research direction for addressing this problem. By mimicking the biological process for transferring information between neurons using spikes or impulses, they allow for low power consumption and asynchronous event-driven processing. In this paper, we propose an evolved altitude controller based on a SNN for a robotic airship which relies solely on the sensory feedback provided by an airborne radar. Starting from the design of a lightweight, low-cost, open-source airship, we also present a SNN-based controller architecture, an evolutionary framework for training the network in a simulated environment, and a control scheme for ameliorating the gap with reality. The system's performance is evaluated through real-world experiments, demonstrating the advantages of our approach by comparing it with an artificial neural network and a linear controller. The results show an accurate tracking of the altitude command with an efficient control effort.

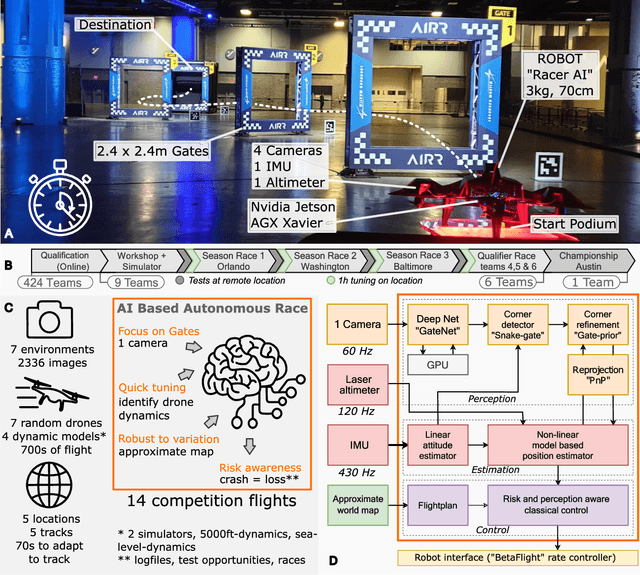

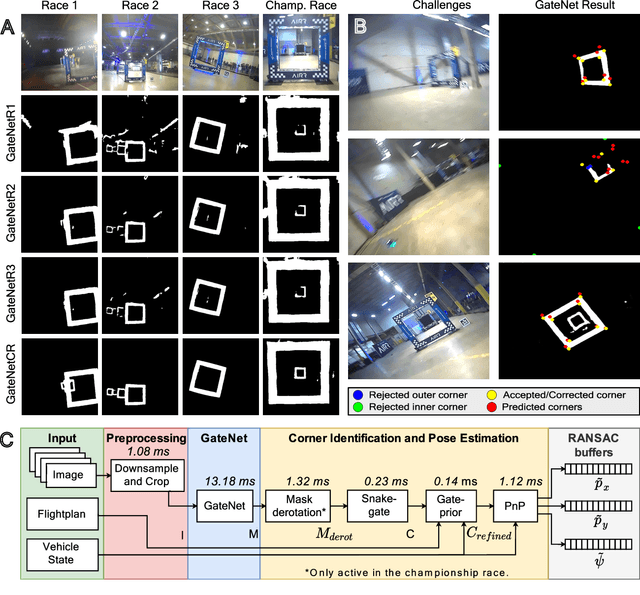

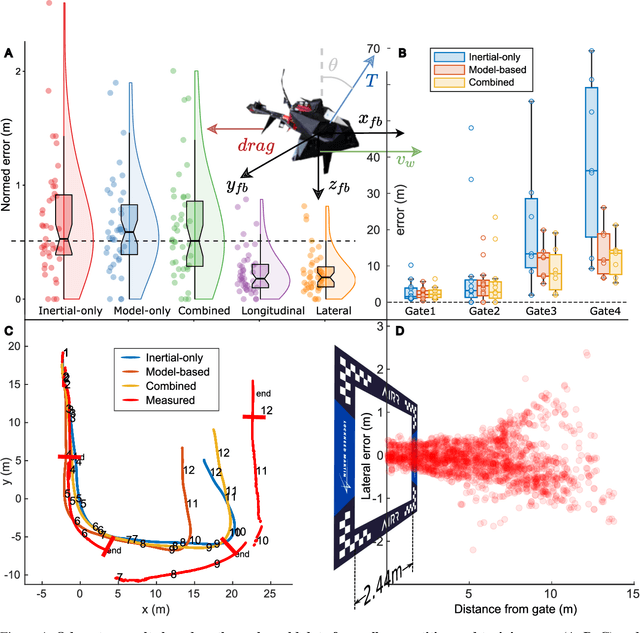

The Artificial Intelligence behind the winning entry to the 2019 AI Robotic Racing Competition

Sep 30, 2021

Abstract:Robotics is the next frontier in the progress of Artificial Intelligence (AI), as the real world in which robots operate represents an enormous, complex, continuous state space with inherent real-time requirements. One extreme challenge in robotics is currently formed by autonomous drone racing. Human drone racers can fly through complex tracks at speeds of up to 190 km/h. Achieving similar speeds with autonomous drones signifies tackling fundamental problems in AI under extreme restrictions in terms of resources. In this article, we present the winning solution of the first AI Robotic Racing (AIRR) Circuit, a competition consisting of four races in which all participating teams used the same drone, to which they had limited access. The core of our approach is inspired by how human pilots combine noisy observations of the race gates with their mental model of the drone's dynamics to achieve fast control. Our approach has a large focus on gate detection with an efficient deep neural segmentation network and active vision. Further, we make contributions to robust state estimation and risk-based control. This allowed us to reach speeds of ~9.2 m/s in the last race, unrivaled by previous autonomous drone race competitions. Although our solution was the fastest and most robust, it still lost against one of the best human pilots, Gab707. The presented approach indicates a promising direction to close the gap with human drone pilots, forming an important step in bringing AI to the real world.
