Stein Stroobants

On-Device Self-Supervised Learning of Low-Latency Monocular Depth from Only Events

Dec 09, 2024

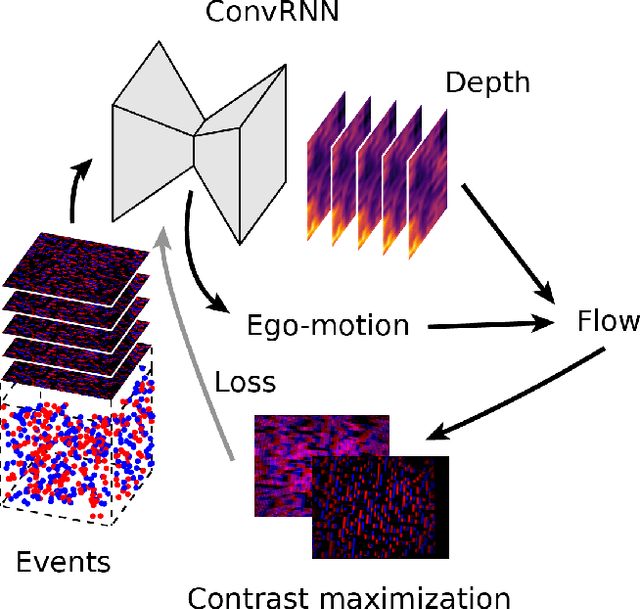

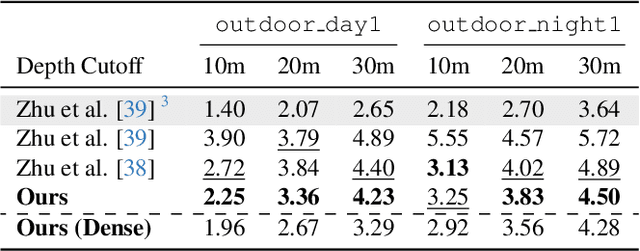

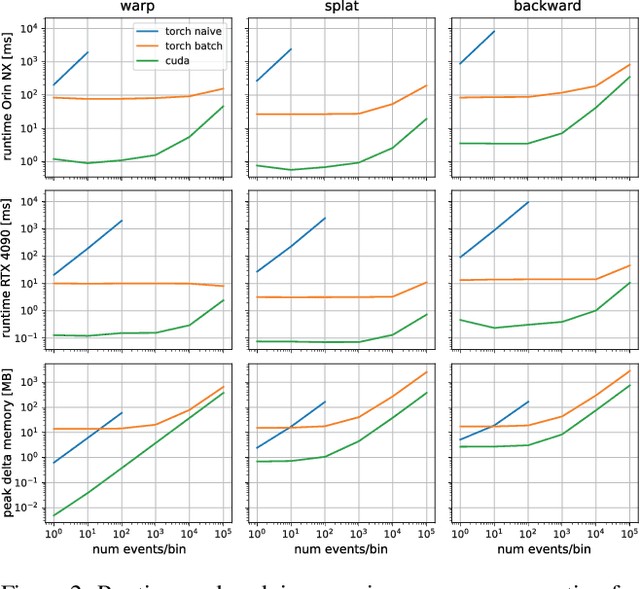

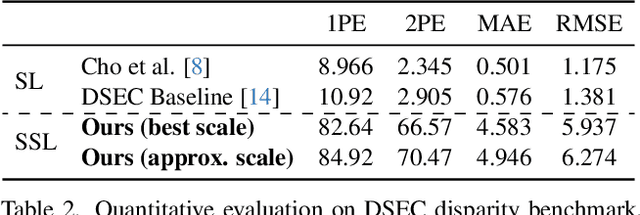

Abstract: Event cameras provide low-latency perception for only milliwatts of power. This makes them highly suitable for resource-restricted, agile robots such as small flying drones. Self-supervised learning based on contrast maximization holds great potential for event-based robot vision, as it forgoes the need for high-frequency ground truth and allows for online learning in the robot's operational environment. However, online, onboard learning raises the major challenge of achieving sufficient computational efficiency for real-time learning, while maintaining competitive visual perception performance. In this work, we improve the time and memory efficiency of the contrast maximization learning pipeline. Benchmarking experiments show that the proposed pipeline achieves competitive results with the state of the art on the task of depth estimation from events. Furthermore, we demonstrate the usability of the learned depth for obstacle avoidance through real-world flight experiments. Finally, we compare the performance of different combinations of pre-training and fine-tuning of the depth estimation networks, showing that on-board domain adaptation is feasible given a few minutes of flight.
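As a rough illustration of the contrast maximization objective named in the abstract above, the sketch below warps events to a reference time with a candidate flow and scores the result by the variance of the accumulated event image. It is a minimal, generic version of the idea and not the paper's pipeline: the function names, the single constant flow vector, and the nearest-pixel accumulation are all assumptions made for brevity. Learning pipelines typically need a differentiable accumulation instead of the rounding used here.

```python
def warp_events(events, flow, t_ref=0.0):
    """Move each event (x, y, t) back along the candidate flow to time t_ref."""
    vx, vy = flow
    return [(x - vx * (t - t_ref), y - vy * (t - t_ref)) for x, y, t in events]


def contrast(events, flow, width, height, t_ref=0.0):
    """Variance of the image of warped events; higher means sharper alignment."""
    image = [[0] * width for _ in range(height)]
    for x, y in warp_events(events, flow, t_ref):
        col, row = int(round(x)), int(round(y))  # nearest-pixel vote (assumption)
        if 0 <= col < width and 0 <= row < height:
            image[row][col] += 1
    pixels = [value for row in image for value in row]
    mean = sum(pixels) / len(pixels)
    return sum((value - mean) ** 2 for value in pixels) / len(pixels)


# Hypothetical data: one point moving at 1 pixel per time unit along x.
events = [(2.0 + t, 3.0, float(t)) for t in range(5)]
sharp = contrast(events, (1.0, 0.0), width=8, height=8)    # correct flow
blurred = contrast(events, (0.0, 0.0), width=8, height=8)  # no motion compensation
```

With the correct flow all events collapse onto one pixel, so `sharp` exceeds `blurred`. That difference is the signal a self-supervised learner can maximize without ground truth.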

Neuromorphic Attitude Estimation and Control

Nov 21, 2024

Abstract: The real-world application of small drones is mostly hampered by energy limitations. Neuromorphic computing promises extremely energy-efficient AI for autonomous flight, but is still challenging to train and deploy on real robots. In order to reap the maximal benefits from neuromorphic computing, it is desired to perform all autonomy functions end-to-end on a single neuromorphic chip, from low-level attitude control to high-level navigation. This research presents the first neuromorphic control system using a spiking neural network (SNN) to effectively map a drone's raw sensory input directly to motor commands. We apply this method to low-level attitude estimation and control for a quadrotor, deploying the SNN on a tiny Crazyflie. We propose a modular SNN, separately training and then merging estimation and control sub-networks. The SNN is trained with imitation learning, using a flight dataset of sensory-motor pairs. Post-training, the network is deployed on the Crazyflie, issuing control commands from sensor inputs at 500 Hz. Furthermore, for the training procedure we augmented training data by flying a controller with additional excitation and time-shifting the target data to enhance the predictive capabilities of the SNN. On the real drone the perception-to-control SNN tracks attitude commands with an average error of 3 degrees, compared to 2.5 degrees for the regular flight stack. We also show the benefits of the proposed learning modifications for reducing the average tracking error and reducing oscillations. Our work shows the feasibility of performing neuromorphic end-to-end control, laying the basis for highly energy-efficient and low-latency neuromorphic autopilots.
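The time-shifting of target data mentioned in the abstract above can be pictured as pairing each sensor sample with a motor target recorded a few steps later, so that the network learns to anticipate. The sketch below is one plausible reading only: the function name, the direction of the shift (targets taken from the future), and the shift size are assumptions, not details given in the abstract.

```python
def time_shift_pairs(inputs, targets, shift):
    """Pair inputs[i] with targets[i + shift], dropping the unmatched ends."""
    if len(inputs) != len(targets):
        raise ValueError("inputs and targets must have the same length")
    if shift < 0 or shift >= len(inputs):
        raise ValueError("shift must satisfy 0 <= shift < len(inputs)")
    if shift == 0:
        return list(inputs), list(targets)
    return list(inputs[:-shift]), list(targets[shift:])


# Hypothetical log: sensor samples s0..s3 and motor commands m0..m3.
sensors = ["s0", "s1", "s2", "s3"]
motors = ["m0", "m1", "m2", "m3"]
shifted_inputs, shifted_targets = time_shift_pairs(sensors, motors, shift=1)
# shifted_inputs  -> ["s0", "s1", "s2"]
# shifted_targets -> ["m1", "m2", "m3"]
```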

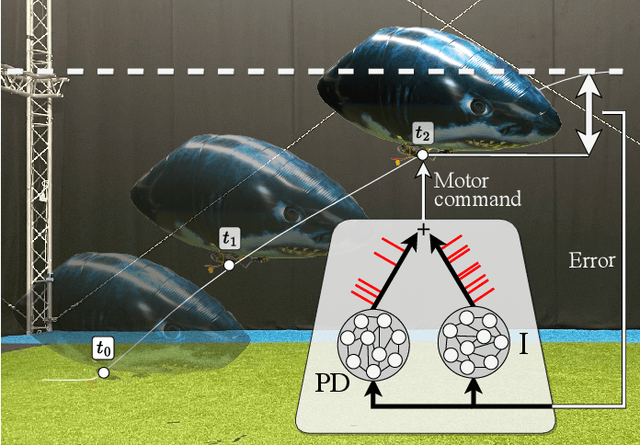

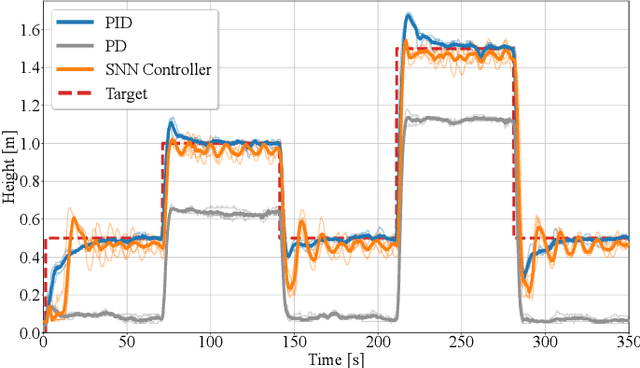

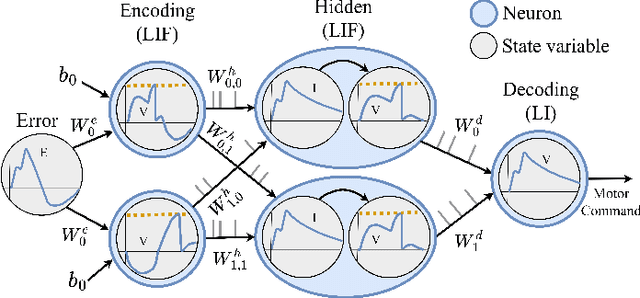

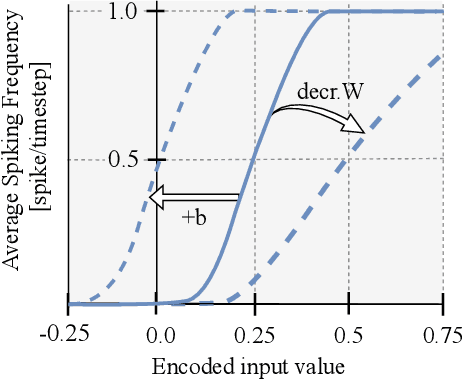

Evolving Spiking Neural Networks to Mimic PID Control for Autonomous Blimps

Sep 22, 2023

Abstract: In recent years, Artificial Neural Networks (ANN) have become a standard in robotic control. However, a significant drawback of large-scale ANNs is their increased power consumption. This becomes a critical concern when designing autonomous aerial vehicles, given the stringent constraints on power and weight. Especially in the case of blimps, known for their extended endurance, power-efficient control methods are essential. Spiking neural networks (SNN) can provide a solution, facilitating energy-efficient and asynchronous event-driven processing. In this paper, we have evolved SNNs for accurate altitude control of a non-neutrally buoyant indoor blimp, relying solely on onboard sensing and processing power. The blimp's altitude tracking performance significantly improved compared to prior research, showing reduced oscillations and a minimal steady-state error. The parameters of the SNNs were optimized via an evolutionary algorithm, using a Proportional-Integral-Derivative (PID) controller as the target signal. We developed two complementary SNN controllers while examining various hidden layer structures. The first controller responds swiftly to control errors, mitigating overshooting and oscillations, while the second minimizes steady-state errors due to non-neutral buoyancy-induced drift. Despite the blimp's drivetrain limitations, our SNN controllers ensured stable altitude control, employing only 160 spiking neurons.
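To make "using a PID controller as the target signal" concrete, the sketch below generates a reference output from a textbook discrete PID and scores a candidate controller by its mean squared deviation from that reference. The gains, time step, function names, and the choice of mean squared error as the cost are all assumptions for illustration; the abstract does not state the fitness function that was actually used.

```python
def pid_target_signal(errors, kp, ki, kd, dt):
    """Output of a discrete PID controller for a sequence of control errors."""
    outputs = []
    integral = 0.0
    previous_error = errors[0] if errors else 0.0
    for error in errors:
        integral += error * dt                       # integral term accumulates error
        derivative = (error - previous_error) / dt   # backward-difference derivative
        outputs.append(kp * error + ki * integral + kd * derivative)
        previous_error = error
    return outputs


def mimic_cost(candidate_outputs, target_outputs):
    """Mean squared error between a candidate controller and the PID target."""
    pairs = list(zip(candidate_outputs, target_outputs))
    return sum((c - t) ** 2 for c, t in pairs) / len(pairs)


# Hypothetical altitude errors and gains.
target = pid_target_signal([1.0, 1.0], kp=2.0, ki=1.0, kd=0.0, dt=0.5)
cost = mimic_cost([2.0, 3.0], target)
```

An evolutionary algorithm would then keep the SNN parameter sets with the lowest cost and mutate them for the next generation.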

Neuromorphic computing for attitude estimation onboard quadrotors

Apr 18, 2023

Abstract: Compelling evidence has been given for the high energy efficiency and update rates of neuromorphic processors, with performance beyond what standard Von Neumann architectures can achieve. Such promising features could be advantageous in critical embedded systems, especially in robotics. To date, the constraints inherent in robots (e.g., size and weight, battery autonomy, available sensors, computing resources, processing time, etc.), and particularly in aerial vehicles, severely hamper the performance of fully-autonomous on-board control, including sensor processing and state estimation. In this work, we propose a spiking neural network (SNN) capable of estimating the pitch and roll angles of a quadrotor in highly dynamic movements from 6-degree-of-freedom Inertial Measurement Unit (IMU) data. With only 150 neurons and a limited training dataset obtained using a quadrotor in a real-world setup, the network shows competitive results as compared to state-of-the-art, non-neuromorphic attitude estimators. The proposed architecture was successfully tested on the Loihi neuromorphic processor on-board a quadrotor to estimate the attitude when flying. Our results show the robustness of neuromorphic attitude estimation and pave the way towards energy-efficient, fully autonomous control of quadrotors with dedicated neuromorphic computing systems.

Neuromorphic Control using Input-Weighted Threshold Adaptation

Apr 18, 2023

Abstract: Neuromorphic processing promises high energy efficiency and rapid response rates, making it an ideal candidate for achieving autonomous flight of resource-constrained robots. It will be especially beneficial for complex neural networks such as those involved in high-level visual perception. However, fully neuromorphic solutions will also need to tackle low-level control tasks. Remarkably, it is currently still challenging to replicate even basic low-level controllers such as proportional-integral-derivative (PID) controllers. Specifically, it is difficult to incorporate the integral and derivative parts. To address this problem, we propose a neuromorphic controller that incorporates proportional, integral, and derivative pathways during learning. Our approach includes a novel input threshold adaptation mechanism for the integral pathway. This Input-Weighted Threshold Adaptation (IWTA) introduces an additional weight per synaptic connection, which is used to adapt the threshold of the post-synaptic neuron. We tackle the derivative term by employing neurons with different time constants. We first analyze the performance and limits of the proposed mechanisms and then put our controller to the test by implementing it on a microcontroller connected to the open-source tiny Crazyflie quadrotor, replacing the innermost rate controller. We demonstrate the stability of our bio-inspired algorithm with flights in the presence of disturbances. The current work represents a substantial step towards controlling highly dynamic systems with neuromorphic algorithms, thus advancing neuromorphic processing and robotics. In addition, integration is an important part of any temporal task, so the proposed Input-Weighted Threshold Adaptation (IWTA) mechanism may have implications well beyond control tasks.
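The abstract above describes IWTA only at a high level: each synapse carries a second weight that adapts the post-synaptic threshold. The sketch below is one possible reading of that sentence, not the paper's model. The leaky integrate-and-fire dynamics, the non-decaying threshold offset, the reset rule, and every name and constant are assumptions chosen to show how a persistent threshold shift could act like an integrator.

```python
from dataclasses import dataclass


@dataclass
class IWTANeuron:
    weights: list               # ordinary synaptic weights, drive the membrane potential
    threshold_weights: list     # extra per-synapse weights, drive the threshold
    base_threshold: float = 1.0
    leak: float = 0.9           # membrane decay per step (assumed value)
    potential: float = 0.0
    threshold_offset: float = 0.0  # accumulates input history; no decay (assumption)

    def step(self, pre_spikes):
        """Advance one time step; return True if the neuron fires."""
        drive = sum(w * s for w, s in zip(self.weights, pre_spikes))
        self.potential = self.leak * self.potential + drive
        # Input-weighted adaptation: incoming spikes shift the firing threshold.
        self.threshold_offset += sum(
            tw * s for tw, s in zip(self.threshold_weights, pre_spikes)
        )
        fired = self.potential >= self.base_threshold + self.threshold_offset
        if fired:
            self.potential = 0.0  # hard reset after a spike (assumption)
        return fired


# Hypothetical two-input neuron: input 0 raises the threshold, input 1 lowers it.
neuron = IWTANeuron(weights=[0.0, 0.0], threshold_weights=[0.25, -0.25])
for spikes in ([1, 0], [1, 0], [1, 0], [0, 1]):
    neuron.step(spikes)
```

Because the offset persists between steps in this sketch, the threshold ends up reflecting the running difference between the two input spike counts, which is the kind of memory an integral pathway needs.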

Fully neuromorphic vision and control for autonomous drone flight

Mar 15, 2023

Abstract: Biological sensing and processing is asynchronous and sparse, leading to low-latency and energy-efficient perception and action. In robotics, neuromorphic hardware for event-based vision and spiking neural networks promises to exhibit similar characteristics. However, robotic implementations have been limited to basic tasks with low-dimensional sensory inputs and motor actions due to the restricted network size in current embedded neuromorphic processors and the difficulties of training spiking neural networks. Here, we present the first fully neuromorphic vision-to-control pipeline for controlling a freely flying drone. Specifically, we train a spiking neural network that accepts high-dimensional raw event-based camera data and outputs low-level control actions for performing autonomous vision-based flight. The vision part of the network, consisting of five layers and 28.8k neurons, maps incoming raw events to ego-motion estimates and is trained with self-supervised learning on real event data. The control part consists of a single decoding layer and is learned with an evolutionary algorithm in a drone simulator. Robotic experiments show a successful sim-to-real transfer of the fully learned neuromorphic pipeline. The drone can accurately follow different ego-motion setpoints, allowing for hovering, landing, and maneuvering sideways, even while yawing at the same time. The neuromorphic pipeline runs on board on Intel's Loihi neuromorphic processor with an execution frequency of 200 Hz, spending only 27 µJ per inference. These results illustrate the potential of neuromorphic sensing and processing for enabling smaller, more intelligent robots.
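The two figures quoted at the end of the abstract above can be combined into an average power estimate. The short calculation below assumes one inference per execution cycle and counts only the reported per-inference energy. Any idle or static power of the chip, the camera, or the rest of the drone is outside this estimate, and the names are illustrative.

```python
ENERGY_PER_INFERENCE_J = 27e-6   # 27 µJ per inference, from the abstract
EXECUTION_RATE_HZ = 200          # 200 Hz execution frequency, from the abstract


def average_inference_power_w(energy_per_inference_j, rate_hz):
    """Average power spent on inference, assuming one inference per cycle."""
    return energy_per_inference_j * rate_hz


power_mw = average_inference_power_w(ENERGY_PER_INFERENCE_J, EXECUTION_RATE_HZ) * 1e3
print(f"{power_mw:.1f} mW")  # prints "5.4 mW"
```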

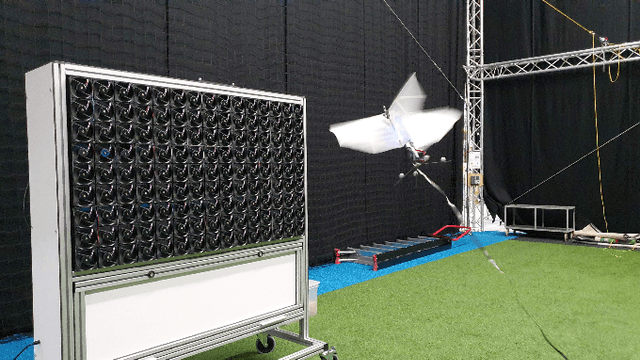

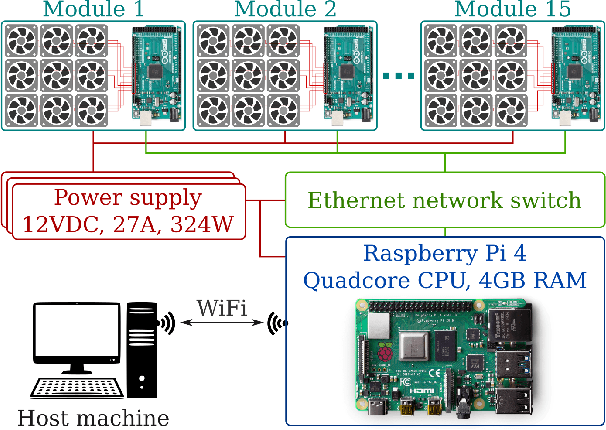

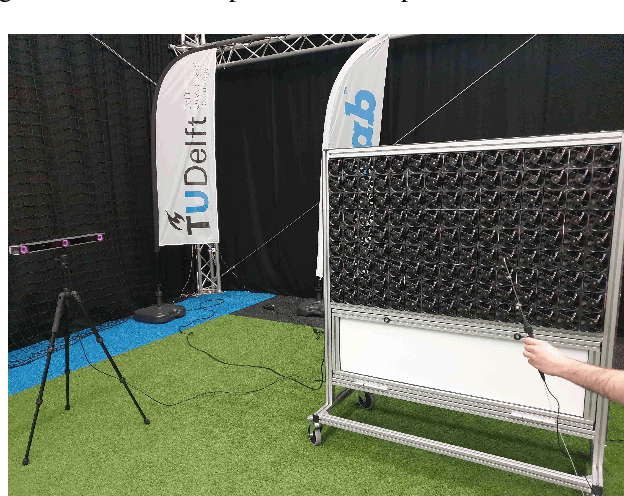

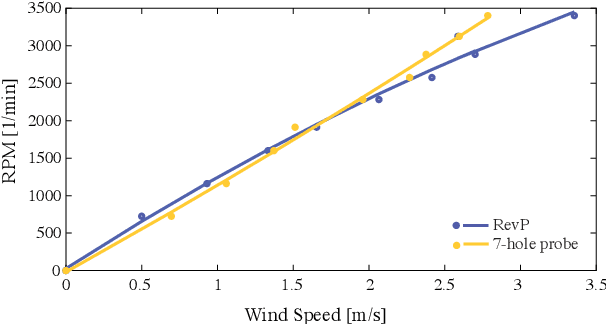

An Experimental Study of Wind Resistance and Power Consumption in MAVs with a Low-Speed Multi-Fan Wind System

Feb 14, 2022

Abstract: This paper discusses a low-cost, open-source and open-hardware design and performance evaluation of a low-speed, multi-fan wind system dedicated to micro air vehicle (MAV) testing. In addition, a set of experiments with a flapping wing MAV and rotorcraft is presented, demonstrating the capabilities of the system and the properties of these different types of drones in response to various types of wind. We performed two sets of experiments where an MAV is flying into the wake of the fan system, gathering data about states, battery voltage and current. Firstly, we focus on steady wind conditions with wind speeds ranging from 0.5 m/s to 3.4 m/s. During the second set of experiments, we introduce wind gusts, by periodically modulating the wind speed from 1.3 m/s to 3.4 m/s with wind gust oscillations of 0.5 Hz, 0.25 Hz and 0.125 Hz. The "Flapper" flapping wing MAV requires much larger pitch angles to counter wind than the "Crazyflie" quadrotor. This is due to the Flapper's larger wing surface. In forward flight, its wings do provide extra lift, considerably reducing the power consumption. In contrast, the Crazyflie's power consumption stays more constant for different wind speeds. The experiments with the varying wind show a quicker gust response by the Crazyflie compared with the Flapper drone, but both their responses could be further improved. We expect that the proposed wind gust system will provide a useful tool to the community to achieve such improvements.
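The gust experiments in the abstract above modulate the wind speed periodically between 1.3 m/s and 3.4 m/s at three frequencies. The sketch below generates such a setpoint. The sinusoidal waveform and the function name are assumptions, since the abstract does not say which periodic shape the fan system follows.

```python
import math

GUST_FREQUENCIES_HZ = (0.5, 0.25, 0.125)  # oscillation rates from the abstract


def gust_speed(t, freq_hz, v_min=1.3, v_max=3.4):
    """Wind-speed setpoint in m/s at time t (s), oscillating between v_min and v_max."""
    mean = (v_min + v_max) / 2.0
    amplitude = (v_max - v_min) / 2.0
    return mean + amplitude * math.sin(2.0 * math.pi * freq_hz * t)


# One full period per frequency, sampled at 10 Hz (hypothetical sampling rate).
profiles = {
    f: [gust_speed(k * 0.1, f) for k in range(int(round(10 / f)) + 1)]
    for f in GUST_FREQUENCIES_HZ
}
```

Each profile starts at the mean speed of 2.35 m/s and covers one gust period, lasting 2 s, 4 s and 8 s respectively.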

Design and implementation of a parsimonious neuromorphic PID for onboard altitude control for MAVs using neuromorphic processors

Sep 21, 2021

Abstract: The great promises of neuromorphic sensing and processing for robotics have led researchers and engineers to investigate novel models for robust and reliable control of autonomous robots (navigation, obstacle detection and avoidance, etc.), especially for quadrotors in challenging contexts such as drone racing and aggressive maneuvers. Using spiking neural networks, these models can be run on neuromorphic hardware to benefit from outstanding update rates and high energy efficiency. Yet, low-level controllers are often neglected and remain outside of the neuromorphic loop. Designing low-level neuromorphic controllers is crucial to remove the standard PID, and therefore benefit from all the advantages of closing the neuromorphic loop. In this paper, we propose a parsimonious and adjustable neuromorphic PID controller, endowed with a minimal number of 93 neurons sparsely connected to achieve autonomous, onboard altitude control of a quadrotor equipped with Intel's Loihi neuromorphic chip. We successfully demonstrate the robustness of our proposed network in a set of experiments where the quadrotor is requested to reach a target altitude from take-off. Our results confirm the suitability of such low-level neuromorphic controllers, ultimately with a very high update frequency.
