Christophe de Wagter

Neuromorphic Attitude Estimation and Control

Nov 21, 2024

Abstract: The real-world application of small drones is mostly hampered by energy limitations. Neuromorphic computing promises extremely energy-efficient AI for autonomous flight, but is still challenging to train and deploy on real robots. In order to reap the maximal benefits from neuromorphic computing, it is desired to perform all autonomy functions end-to-end on a single neuromorphic chip, from low-level attitude control to high-level navigation. This research presents the first neuromorphic control system using a spiking neural network (SNN) to effectively map a drone's raw sensory input directly to motor commands. We apply this method to low-level attitude estimation and control for a quadrotor, deploying the SNN on a tiny Crazyflie. We propose a modular SNN, separately training and then merging estimation and control sub-networks. The SNN is trained with imitation learning, using a flight dataset of sensory-motor pairs. Post-training, the network is deployed on the Crazyflie, issuing control commands from sensor inputs at $500$ Hz. Furthermore, for the training procedure we augmented training data by flying a controller with additional excitation and time-shifting the target data to enhance the predictive capabilities of the SNN. On the real drone the perception-to-control SNN tracks attitude commands with an average error of $3$ degrees, compared to $2.5$ degrees for the regular flight stack. We also show the benefits of the proposed learning modifications for reducing the average tracking error and reducing oscillations. Our work shows the feasibility of performing neuromorphic end-to-end control, laying the basis for highly energy-efficient and low-latency neuromorphic autopilots.
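The time-shifting of target data mentioned in the abstract can be illustrated with a minimal sketch. The abstract does not state the shift direction or size, so this sketch assumes that each sensor sample is paired with the motor command recorded a few steps later, which would push the network toward predicting upcoming commands. The function name `time_shift_pairs` and the `shift` parameter are hypothetical and not taken from the paper.

```python
from typing import List, Sequence, Tuple, TypeVar

S = TypeVar("S")  # sensor sample type
M = TypeVar("M")  # motor command type


def time_shift_pairs(
    inputs: Sequence[S], targets: Sequence[M], shift: int
) -> List[Tuple[S, M]]:
    """Pair the input at step t with the target at step t + shift.

    Assumption: a positive shift means the target comes from a later
    timestep. The last `shift` inputs have no target and are dropped.
    """
    if shift < 0:
        raise ValueError("shift must be non-negative")
    if len(inputs) != len(targets):
        raise ValueError("inputs and targets must have the same length")
    usable = len(inputs) - shift
    if usable <= 0:
        return []
    return [(inputs[t], targets[t + shift]) for t in range(usable)]


# Toy log: four sensor samples and the commands recorded at the same steps.
sensor_log = [0, 1, 2, 3]
command_log = [10, 11, 12, 13]
pairs = time_shift_pairs(sensor_log, command_log, shift=1)
```

With `shift=0` the pairs reduce to the plain imitation-learning dataset of simultaneous sensory-motor pairs.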

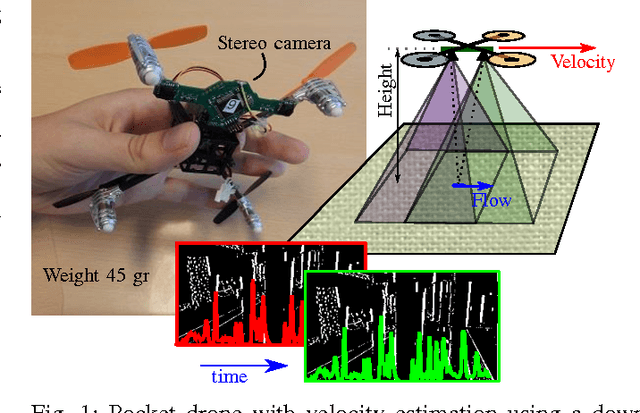

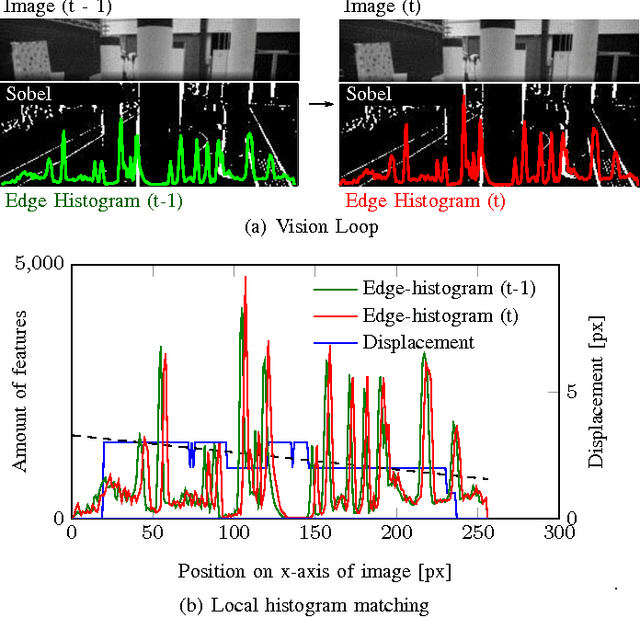

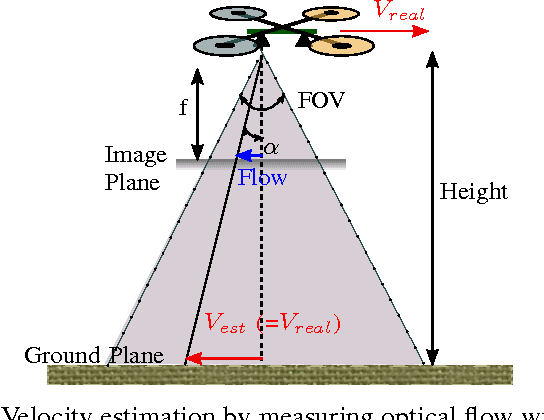

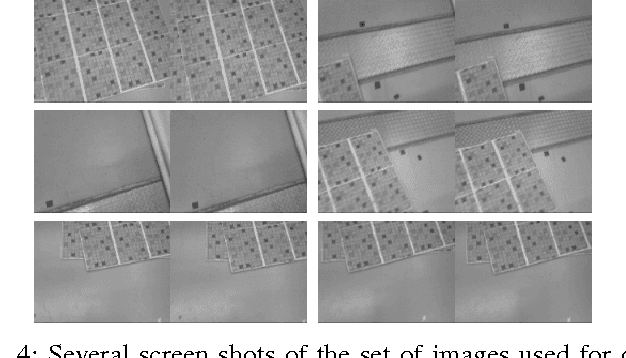

Local Histogram Matching for Efficient Optical Flow Computation Applied to Velocity Estimation on Pocket Drones

Mar 14, 2017

Abstract: Autonomous flight of pocket drones is challenging due to the severe limitations on on-board energy, sensing, and processing power. However, tiny drones have great potential, as their small size allows maneuvering through narrow spaces while their low weight provides significant safety advantages. This paper presents a computationally efficient algorithm for determining optical flow, which can be run on the STM32F4 microprocessor (168 MHz) of a 4-gram stereo camera. The optical flow algorithm is based on edge histograms. We propose a matching scheme to determine local optical flow. Moreover, the method allows for sub-pixel flow determination based on time horizon adaptation. We demonstrate velocity measurements in flight and use them within a velocity control loop on a pocket drone.

* 7 pages, 10 figures. Changes: format changed from one column to two columns; used url package for links
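The edge-histogram approach described in the abstract above can be sketched roughly as follows. The abstract does not give the exact matching scheme, so this sketch assumes a sum-of-absolute-differences (SAD) cost over a small window of histogram bins and handles only horizontal flow; the function names, `window`, and `max_shift` are hypothetical. Sub-pixel estimation through time horizon adaptation is not shown.

```python
from typing import Dict, List, Sequence


def edge_histogram(image: Sequence[Sequence[int]]) -> List[int]:
    """Sum the absolute horizontal gradient down each image column."""
    width = len(image[0])
    hist = [0] * width
    for row in image:
        # Central difference; the two border columns stay at zero.
        for x in range(1, width - 1):
            hist[x] += abs(row[x + 1] - row[x - 1])
    return hist


def local_flow(
    prev_hist: Sequence[int],
    curr_hist: Sequence[int],
    window: int = 4,
    max_shift: int = 3,
) -> Dict[int, int]:
    """For each column, find the shift that best aligns a local window.

    Assumption: the cost is the SAD between the previous histogram
    around column x and the current histogram around column x + d.
    """
    width = len(prev_hist)
    margin = window + max_shift
    flow: Dict[int, int] = {}
    for x in range(margin, width - margin):
        best_shift, best_cost = 0, None
        for d in range(-max_shift, max_shift + 1):
            cost = sum(
                abs(prev_hist[x + i] - curr_hist[x + i + d])
                for i in range(-window, window + 1)
            )
            if best_cost is None or cost < best_cost:
                best_cost, best_shift = cost, d
        flow[x] = best_shift
    return flow


# Toy frames: one vertical edge that moves 2 pixels to the right.
prev_frame = [[0] * 10 + [9] * 14 for _ in range(3)]
curr_frame = [[0] * 12 + [9] * 12 for _ in range(3)]
flow = local_flow(edge_histogram(prev_frame), edge_histogram(curr_frame))
```

Reducing each frame to a one-dimensional histogram keeps the matching cost linear in image width, which is the kind of saving that matters on a microcontroller-class processor.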
