Unsupervised Learning of a Hierarchical Spiking Neural Network for Optical Flow Estimation: From Events to Global Motion Perception

Jul 28, 2018

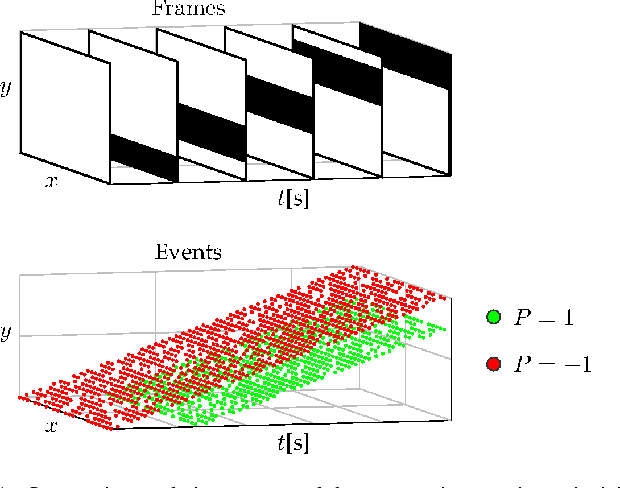

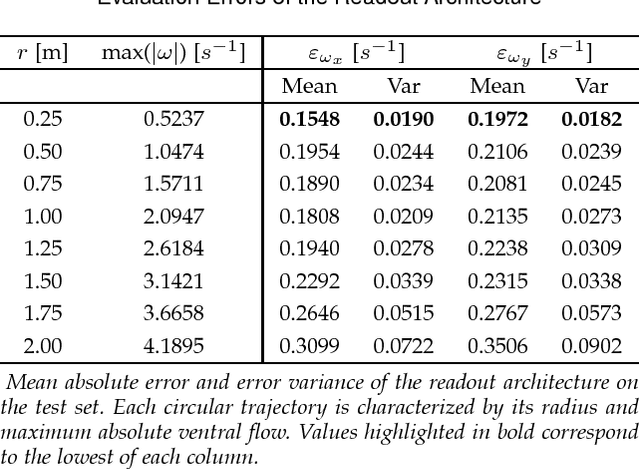

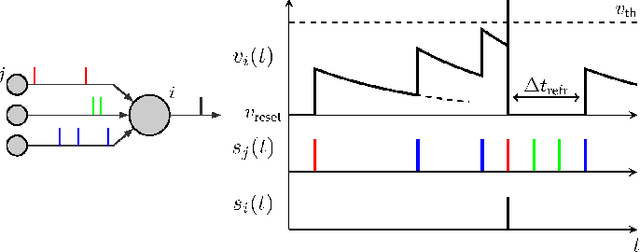

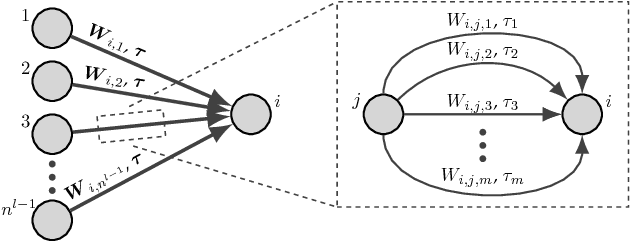

The combination of spiking neural networks and event-based vision sensors holds the potential for highly efficient, high-bandwidth optical flow estimation. This paper presents the first hierarchical spiking architecture in which motion (direction and speed) selectivity emerges in an unsupervised fashion from the raw stimuli generated by an event-based camera. A novel adaptive neuron model and a novel spike-timing-dependent plasticity (STDP) formulation are at the core of this neural network, governing its spike-based processing and learning, respectively. After convergence, the neural architecture exhibits the main properties of biological visual motion systems, namely feature extraction and local and global motion perception. To assess the outcome of the learning, a shallow conventional artificial neural network is trained to map the activation traces of the penultimate layer to the optical flow visual observables of ventral flow. The proposed solution is validated on simulated event sequences with ground-truth measurements. Experimental results show that accurate estimates of the ventral flow observables can be obtained over a wide range of speeds.
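The abstract does not specify the adaptive neuron model or the STDP formulation, so the following is only a minimal sketch of the two generic building blocks it names: a textbook leaky integrate-and-fire neuron (spike-based processing) and a textbook pair-based STDP rule (spike-based learning). It is not the paper's formulation. All function names, parameters, and values (`simulate_lif`, `stdp_delta_w`, `weight`, `tau_m`, `a_plus`, and so on) are assumptions chosen for illustration.

```python
import math


def simulate_lif(input_spike_steps, weight=0.6, tau_m=5.0, v_thresh=1.0, n_steps=50):
    """Textbook leaky integrate-and-fire neuron on a 1 ms time grid.

    input_spike_steps: integer time steps (ms) at which a presynaptic spike arrives.
    Returns the list of time steps at which the neuron fires.
    All parameter values are illustrative assumptions, not taken from the paper.
    """
    inputs = set(input_spike_steps)
    decay = math.exp(-1.0 / tau_m)  # membrane leak per 1 ms step
    v = 0.0
    output_spike_steps = []
    for t in range(n_steps):
        v *= decay                  # passive leak toward rest (0)
        if t in inputs:
            v += weight             # each input spike adds a fixed increment
        if v >= v_thresh:
            output_spike_steps.append(t)
            v = 0.0                 # reset after firing
    return output_spike_steps


def stdp_delta_w(t_pre, t_post, a_plus=0.05, a_minus=0.06, tau=10.0):
    """Textbook pair-based STDP weight change for one pre/post spike pair.

    Pre-before-post (causal) pairs potentiate; post-before-pre pairs depress.
    The magnitude decays exponentially with the spike-time difference.
    """
    dt = t_post - t_pre
    if dt >= 0:
        return a_plus * math.exp(-dt / tau)
    return -a_minus * math.exp(dt / tau)


if __name__ == "__main__":
    # Three closely spaced input spikes; a single spike would stay below threshold.
    post_spikes = simulate_lif([10, 11, 12])
    print("output spikes:", post_spikes)
    for t_pre in [10, 11, 12]:
        for t_post in post_spikes:
            print(t_pre, t_post, stdp_delta_w(t_pre, t_post))
```

In this sketch, temporally clustered inputs drive the neuron over threshold while isolated spikes leak away, and the STDP rule strengthens synapses whose spikes precede the output spike. This is the general mechanism by which unsupervised selectivity can emerge in spiking networks; the paper's own adaptive model and learning rule differ in ways the abstract does not detail.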
