Margarita Chli

LiDAR Loop Closure Detection using Semantic Graphs with Graph Attention Networks

Jan 31, 2025

Abstract:In this paper, we propose a novel loop closure detection algorithm that uses graph attention neural networks to encode semantic graphs to perform place recognition and then uses semantic registration to estimate the 6 DoF relative pose constraint. Our place recognition algorithm has two key modules, namely, a semantic graph encoder module and a graph comparison module. The semantic graph encoder employs graph attention networks to efficiently encode spatial, semantic and geometric information from the semantic graph of the input point cloud. We then use a self-attention mechanism in both node-embedding and graph-embedding steps to create distinctive graph vectors. The graph vectors of the current scan and a keyframe scan are then compared in the graph comparison module to identify a possible loop closure. Specifically, employing the difference of the two graph vectors showed a significant improvement in performance, as shown in ablation studies. Lastly, we implemented a semantic registration algorithm that takes in loop closure candidate scans and estimates the relative 6 DoF pose constraint for the LiDAR SLAM system. Extensive evaluation on public datasets shows that our model is more accurate and robust, achieving a 13% improvement in maximum F1 score on the SemanticKITTI dataset, when compared to the baseline semantic graph algorithm. For the benefit of the community, we open-source the complete implementation of our proposed algorithm and a custom implementation of semantic registration at https://github.com/crepuscularlight/SemanticLoopClosure
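The abstract above reports results as a maximum F1 score. As a rough illustration of that metric only, the sketch below sweeps a decision threshold over similarity scores and keeps the best F1. The function name `max_f1`, the score convention (higher means more likely a loop closure) and the toy data are assumptions made for illustration; this is not the authors' evaluation code.

```python
def max_f1(scores, labels):
    """Return (best_f1, best_threshold) over thresholds drawn from the scores.

    scores: hypothetical similarity scores, higher = more likely a loop closure.
    labels: ground-truth flags (truthy = true loop closure).
    """
    best_f1, best_thr = 0.0, None
    for thr in sorted(set(scores)):
        # A pair is predicted as a loop closure when its score reaches the threshold.
        tp = sum(1 for s, y in zip(scores, labels) if s >= thr and y)
        fp = sum(1 for s, y in zip(scores, labels) if s >= thr and not y)
        fn = sum(1 for s, y in zip(scores, labels) if s < thr and y)
        if tp == 0:
            continue  # precision and recall are both zero or undefined here
        precision = tp / (tp + fp)
        recall = tp / (tp + fn)
        f1 = 2 * precision * recall / (precision + recall)
        if f1 > best_f1:
            best_f1, best_thr = f1, thr
    return best_f1, best_thr


if __name__ == "__main__":
    # Toy data: the two true loop closures receive the highest scores.
    print(max_f1([0.9, 0.8, 0.4, 0.3], [1, 1, 0, 0]))
```

Sweeping only over the observed score values is sufficient, because the predicted set changes only when the threshold crosses one of them.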

Hyperion -- A fast, versatile symbolic Gaussian Belief Propagation framework for Continuous-Time SLAM

Jul 09, 2024

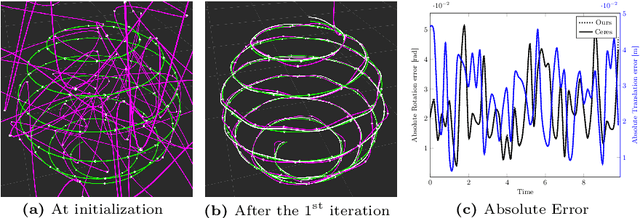

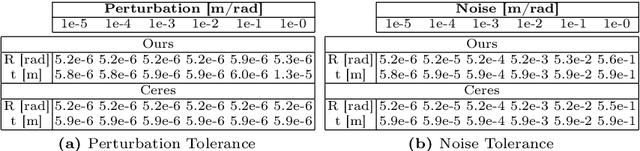

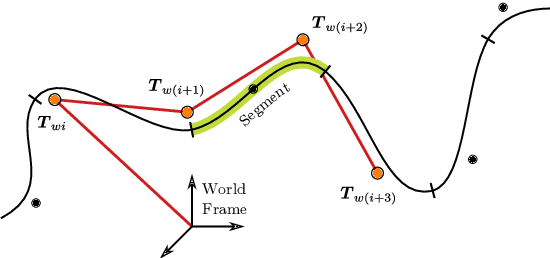

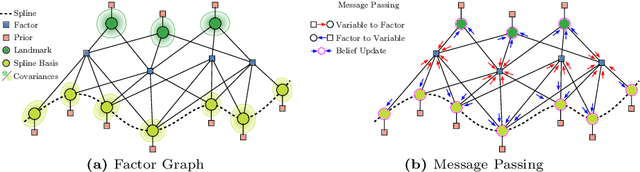

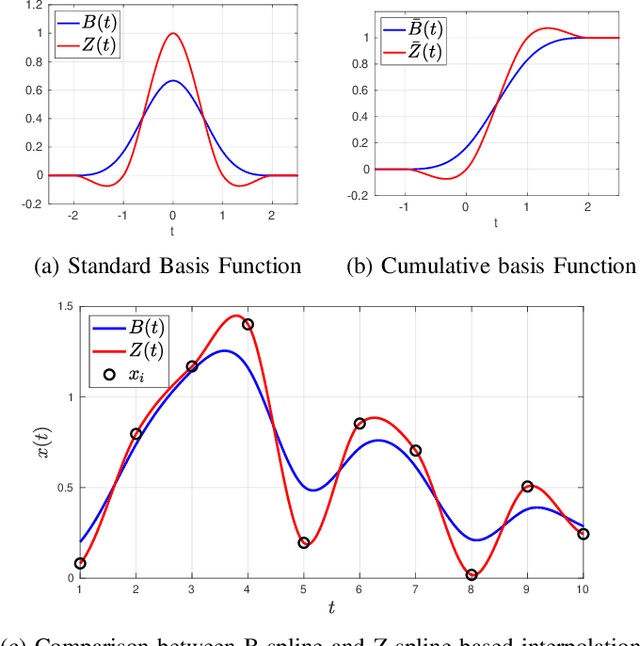

Abstract:Continuous-Time Simultaneous Localization And Mapping (CTSLAM) has become a promising approach for fusing asynchronous and multi-modal sensor suites. Unlike discrete-time SLAM, which estimates poses discretely, CTSLAM uses continuous-time motion parametrizations, facilitating the integration of a variety of sensors such as rolling-shutter cameras, event cameras and Inertial Measurement Units (IMUs). However, CTSLAM approaches remain computationally demanding and are conventionally posed as centralized Non-Linear Least Squares (NLLS) optimizations. Targeting these limitations, we not only present the fastest SymForce-based [Martiros et al., RSS 2022] B- and Z-Spline implementations achieving speedups between 2.43x and 110.31x over Sommer et al. [CVPR 2020] but also implement a novel continuous-time Gaussian Belief Propagation (GBP) framework, coined Hyperion, which targets decentralized probabilistic inference across agents. We demonstrate the efficacy of our method in motion tracking and localization settings, complemented by empirical ablation studies.
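To make the idea of a continuous-time motion parametrization concrete, here is a minimal sketch of one uniform cubic B-spline segment evaluated on scalar control points. It is a simplified Euclidean illustration under assumed names (`cubic_bspline`, `ctrl`, `u`); it is not the SymForce-based B- or Z-spline implementation described in the abstract, which is not reproduced here.

```python
def cubic_bspline(ctrl, u):
    """Evaluate one uniform cubic B-spline segment at u in [0, 1).

    ctrl: four consecutive scalar control points (hypothetical example input).
    u:    normalized time within the segment.
    """
    p0, p1, p2, p3 = ctrl
    # Uniform cubic B-spline basis functions; they sum to 1 for every u.
    b0 = (1 - u) ** 3 / 6
    b1 = (3 * u**3 - 6 * u**2 + 4) / 6
    b2 = (-3 * u**3 + 3 * u**2 + 3 * u + 1) / 6
    b3 = u**3 / 6
    return b0 * p0 + b1 * p1 + b2 * p2 + b3 * p3


if __name__ == "__main__":
    # Equally spaced control points reproduce a straight line: value = 1 + u.
    for u in (0.0, 0.25, 0.5, 0.75):
        print(u, cubic_bspline((0.0, 1.0, 2.0, 3.0), u))
```

Because the trajectory can be queried at any `u`, measurements from sensors with different or irregular timestamps can each be related to the state at their own capture time, which is the property the abstract highlights for asynchronous sensor suites.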

Towards Multi-robot Exploration: A Decentralized Strategy for UAV Forest Exploration

Jan 20, 2023

Abstract:Efficient exploration strategies are vital in tasks such as search-and-rescue missions and disaster surveying. Unmanned Aerial Vehicles (UAVs) have become particularly popular in such applications, promising to cover large areas at high speeds. Moreover, with the increasing maturity of onboard UAV perception, research focus has been shifting toward higher-level reasoning for single- and multi-robot missions. However, autonomous navigation and exploration of previously unknown large spaces still constitutes an open challenge, especially when the environment is cluttered and exhibits large and frequent occlusions due to high obstacle density, as is the case in forests. Moreover, the problem of long-distance wireless communication in such scenes can become a limiting factor, especially when automating the navigation of a UAV swarm. In this spirit, this work proposes an exploration strategy that enables UAVs, both individually and in small swarms, to quickly explore complex scenes in a decentralized fashion. By providing the decision-making capabilities to each UAV to switch between different execution modes, the proposed strategy strikes a great balance between cautious exploration of yet completely unknown regions and more aggressive exploration of smaller areas of unknown space. This results in full coverage of forest areas of variable density, consistently faster than the state of the art. Demonstrating successful deployment with a single UAV as well as a swarm of up to three UAVs, this work sets out the basic principles for multi-robot exploration of cluttered scenes, with up to 65% speed up in the single UAV case and 40% increase in explored area for the same mission time in multi-UAV setups.

COVINS-G: A Generic Back-end for Collaborative Visual-Inertial SLAM

Jan 17, 2023

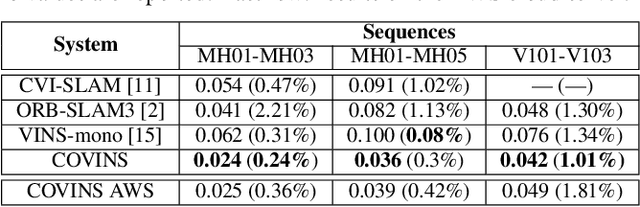

Abstract:Collaborative SLAM is at the core of perception in multi-robot systems as it enables the co-localization of the team of robots in a common reference frame, which is of vital importance for any coordination amongst them. The paradigm of a centralized architecture is well established, with the robots (i.e. agents) running Visual-Inertial Odometry (VIO) onboard while communicating relevant data, such as Keyframes (KFs), to a central back-end (i.e. server), which then merges and optimizes the joint maps of the agents. While these frameworks have proven to be successful, their capability and performance are highly dependent on the choice of the VIO front-end, thus limiting their flexibility. In this work, we present COVINS-G, a generalized back-end building upon the COVINS framework, enabling the compatibility of the server back-end with any arbitrary VIO front-end, including, for example, off-the-shelf cameras with odometry capabilities, such as the Realsense T265. The COVINS-G back-end deploys a multi-camera relative pose estimation algorithm for computing the loop-closure constraints, allowing the system to work purely on 2D image data. In the experimental evaluation, we show on-par accuracy with state-of-the-art multi-session and collaborative SLAM systems, while demonstrating the flexibility and generality of our approach by employing different front-ends onboard collaborating agents within the same mission. The COVINS-G codebase, along with a generalized front-end wrapper to allow any existing VIO front-end to be readily used in combination with the proposed collaborative back-end, is open-sourced. Video: https://youtu.be/FoJfXCfaYDw

COVINS: Visual-Inertial SLAM for Centralized Collaboration

Aug 12, 2021

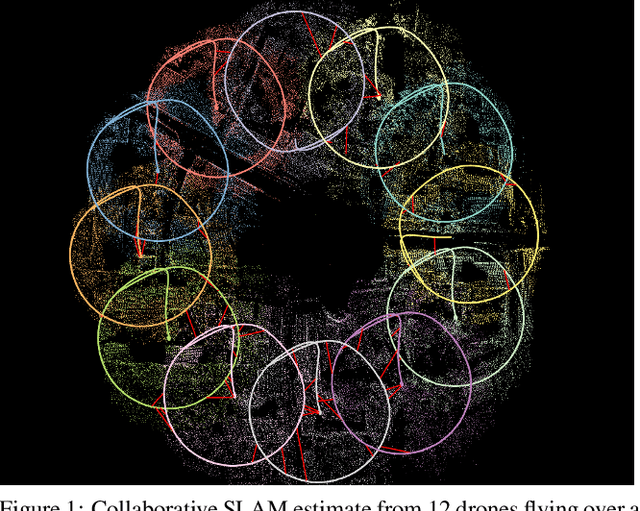

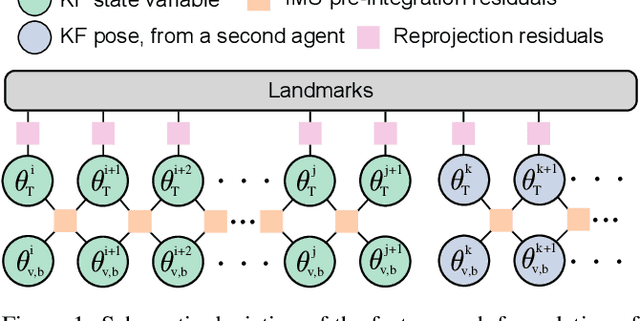

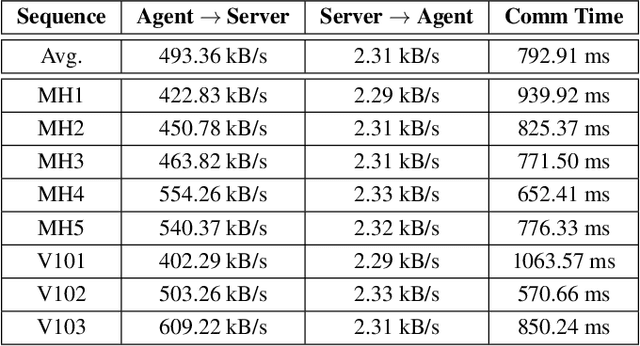

Abstract:Collaborative SLAM enables a group of agents to simultaneously co-localize and jointly map an environment, thus paving the way to wide-ranging applications of multi-robot perception and multi-user AR experiences by eliminating the need for external infrastructure or pre-built maps. This article presents COVINS, a novel collaborative SLAM system that enables multi-agent, scalable SLAM in large environments and for large teams of more than 10 agents. The paradigm here is that each agent runs visual-inertial odometry independently onboard in order to ensure its autonomy, while sharing map information with the COVINS server back-end running on a powerful local PC or a remote cloud server. The server back-end establishes an accurate collaborative global estimate from the contributed data, refining the joint estimate by means of place recognition, global optimization and removal of redundant data, in order to ensure an accurate, but also efficient SLAM process. A thorough evaluation of COVINS reveals increased accuracy of the collaborative SLAM estimates, as well as efficiency in both removing redundant information and reducing the coordination overhead, and demonstrates successful operation in a large-scale mission with 12 agents jointly performing SLAM.

Hough2Map -- Iterative Event-based Hough Transform for High-Speed Railway Mapping

Feb 18, 2021

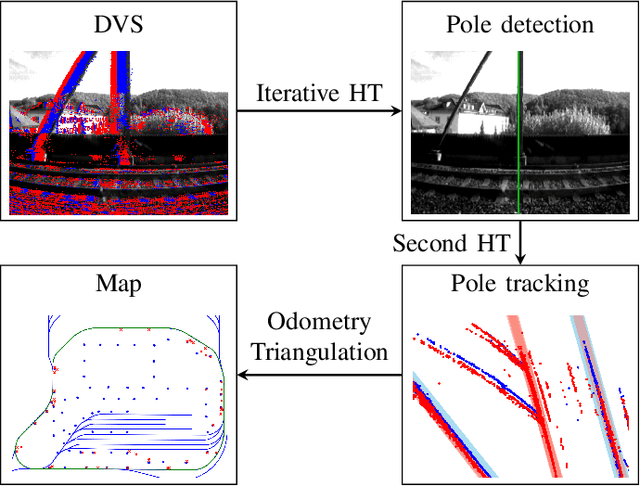

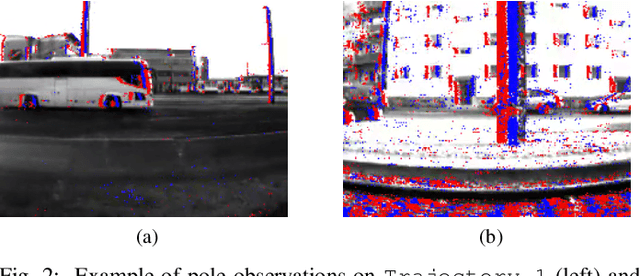

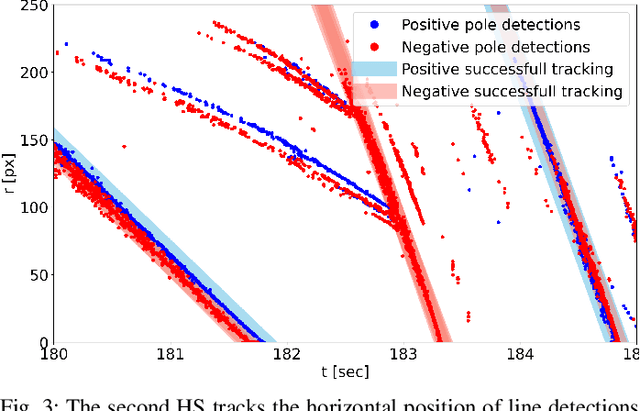

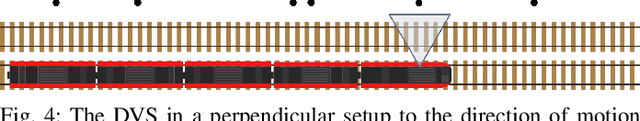

Abstract:To cope with the growing demand for transportation on the railway system, accurate, robust, and high-frequency positioning is required to enable a safe and efficient utilization of the existing railway infrastructure. As a basis for a localization system we propose a complete on-board mapping pipeline able to map robust meaningful landmarks, such as poles from power lines, in the vicinity of the vehicle. Such poles are good candidates for reliable and long term landmarks even through difficult weather conditions or seasonal changes. To address the challenges of motion blur and illumination changes in railway scenarios we employ a Dynamic Vision Sensor, a novel event-based camera. Using a sideways oriented on-board camera, poles appear as vertical lines. To map such lines in a real-time event stream, we introduce Hough2Map, a novel consecutive iterative event-based Hough transform framework capable of detecting, tracking, and triangulating close-by structures. We demonstrate the mapping reliability and accuracy of Hough2Map on real-world data in typical usage scenarios and evaluate using surveyed infrastructure ground truth maps. Hough2Map achieves a detection reliability of up to 92% and a mapping root mean square error accuracy of 1.1518m.

Distributed Variable-Baseline Stereo SLAM from two UAVs

Sep 10, 2020

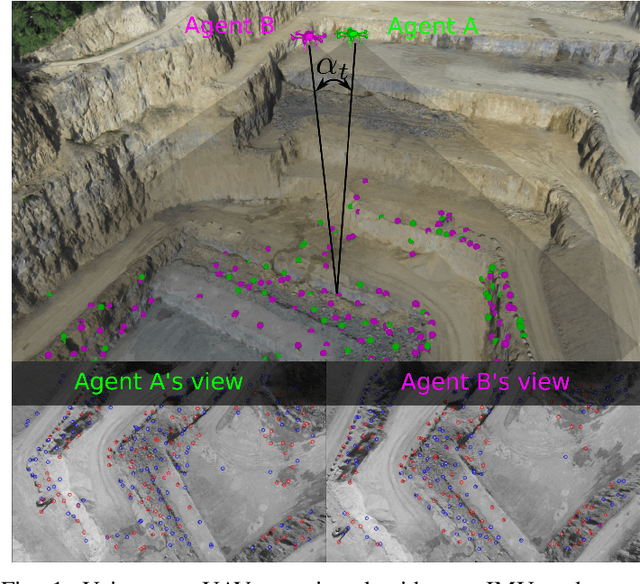

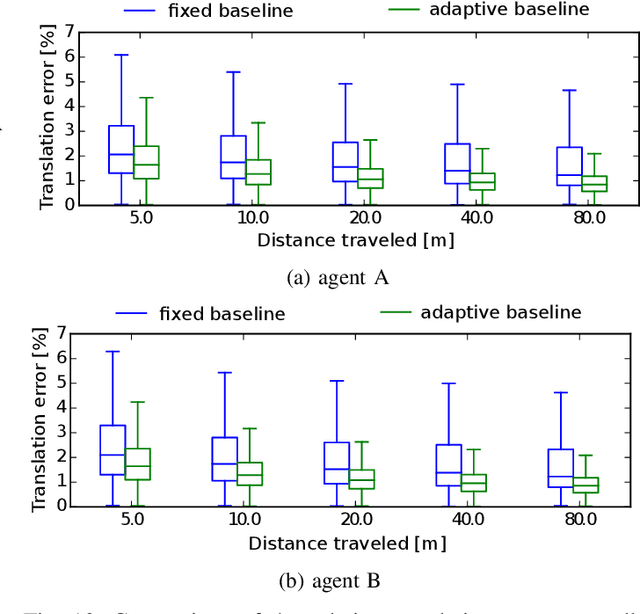

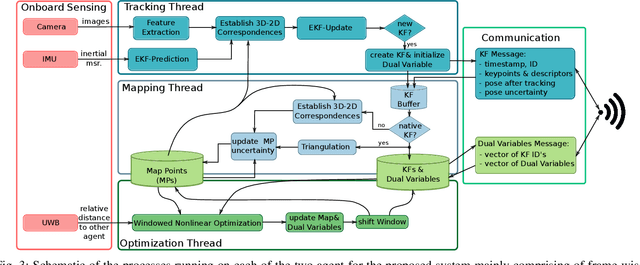

Abstract:VIO has been widely used and researched to control and aid the automation of navigation of robots, especially in the absence of absolute position measurements, such as GPS. However, when observable landmarks in the scene lie far away from the robot's sensor suite, as is the case in high-altitude flights, the fidelity of estimates and the observability of the metric scale degrade greatly for these methods. Aiming to tackle this issue, in this article, we employ two UAVs equipped with one monocular camera and one IMU each, to exploit their view overlap and relative distance measurements between them using UWB modules onboard to enable collaborative VIO. In particular, we propose a novel, distributed fusion scheme enabling the formation of a virtual stereo camera rig with adjustable baseline from the two UAVs. In order to control the UAV agents autonomously, we propose a decentralized collaborative estimation scheme, where each agent holds its own local map, achieving an average pose estimation latency of 11ms, while ensuring consistency of the agents' estimates via consensus-based optimization. Following a thorough evaluation on photorealistic simulations, we demonstrate the effectiveness of the approach at high-altitude flights of up to 160m, going significantly beyond the capabilities of state-of-the-art VIO methods. Finally, we show the advantage of actively adjusting the baseline on-the-fly over a fixed, target baseline, reducing the error in our experiments by a factor of two.

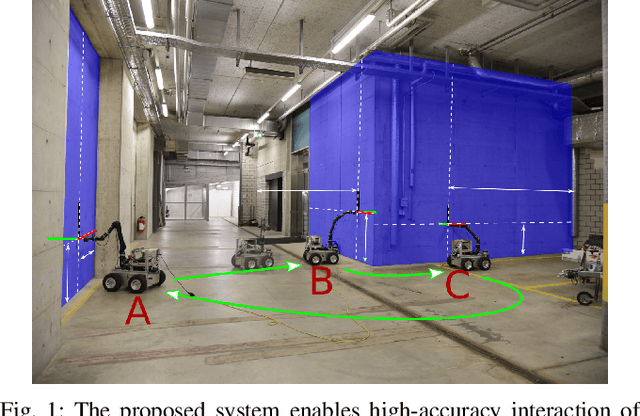

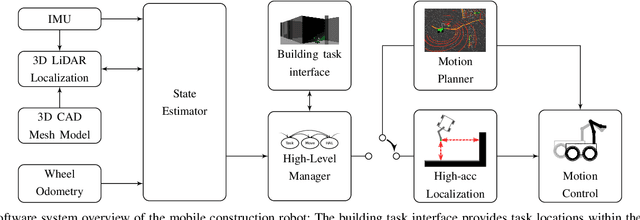

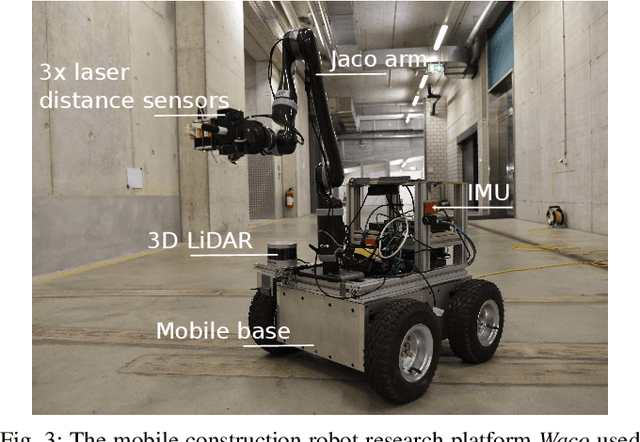

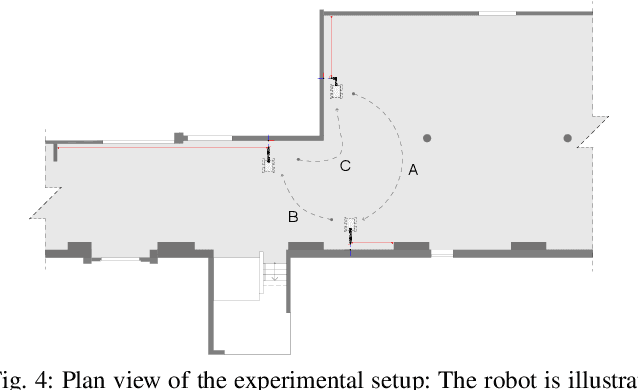

A Fully-Integrated Sensing and Control System for High-Accuracy Mobile Robotic Building Construction

Dec 04, 2019

Abstract:We present a fully-integrated sensing and control system which enables mobile manipulator robots to execute building tasks with millimeter-scale accuracy on building construction sites. The approach leverages multi-modal sensing capabilities for state estimation, tight integration with digital building models, and integrated trajectory planning and whole-body motion control. A novel method for high-accuracy localization updates relative to the known building structure is proposed. The approach is implemented on a real platform and tested under realistic construction conditions. We show that the system can achieve sub-cm end-effector positioning accuracy during fully autonomous operation using solely on-board sensing.
