Marco Karrer

LiDAR Loop Closure Detection using Semantic Graphs with Graph Attention Networks

Jan 31, 2025

Abstract: In this paper, we propose a novel loop closure detection algorithm that uses graph attention neural networks to encode semantic graphs for place recognition and then uses semantic registration to estimate the 6 DoF relative pose constraint. Our place recognition algorithm has two key modules, namely, a semantic graph encoder module and a graph comparison module. The semantic graph encoder employs graph attention networks to efficiently encode spatial, semantic and geometric information from the semantic graph of the input point cloud. We then use a self-attention mechanism in both the node-embedding and graph-embedding steps to create distinctive graph vectors. The graph vectors of the current scan and a keyframe scan are then compared in the graph comparison module to identify a possible loop closure. Specifically, employing the difference of the two graph vectors showed a significant improvement in performance, as shown in ablation studies. Lastly, we implemented a semantic registration algorithm that takes in loop closure candidate scans and estimates the relative 6 DoF pose constraint for the LiDAR SLAM system. Extensive evaluation on public datasets shows that our model is more accurate and robust, achieving a 13% improvement in maximum F1 score on the SemanticKITTI dataset when compared to the baseline semantic graph algorithm. For the benefit of the community, we open-source the complete implementation of our proposed algorithm and custom implementation of semantic registration at https://github.com/crepuscularlight/SemanticLoopClosure
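The abstract reports results as a maximum F1 score. As a rough illustration of that metric only (not the authors' evaluation code), the sketch below sweeps a decision threshold over loop closure scores and keeps the best F1. The function name `max_f1`, the score convention (higher means more likely a loop) and the sample values are assumptions made for this example.

```python
import numpy as np

def max_f1(scores, labels):
    """Return (best_f1, best_threshold) over all thresholds taken from the scores.

    scores: hypothetical similarity scores, higher = more likely a loop closure.
    labels: ground-truth flags, True where the scan pair is a real loop closure.
    """
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    best_f1, best_thr = 0.0, None
    for thr in np.unique(scores):
        pred = scores >= thr              # pairs accepted as loop closures
        tp = np.sum(pred & labels)        # true positives
        fp = np.sum(pred & ~labels)       # false positives
        fn = np.sum(~pred & labels)       # false negatives
        denom = 2 * tp + fp + fn
        f1 = 2 * tp / denom if denom > 0 else 0.0
        if f1 > best_f1:
            best_f1, best_thr = float(f1), float(thr)
    return best_f1, best_thr

if __name__ == "__main__":
    # Made-up scores and labels, for illustration only.
    f1, thr = max_f1([0.9, 0.8, 0.4, 0.3], [True, True, False, True])
    print(f"max F1 = {f1:.3f} at threshold {thr}")
```

Reporting the maximum over thresholds makes the comparison between methods independent of any single hand-picked threshold.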

COVINS-G: A Generic Back-end for Collaborative Visual-Inertial SLAM

Jan 17, 2023

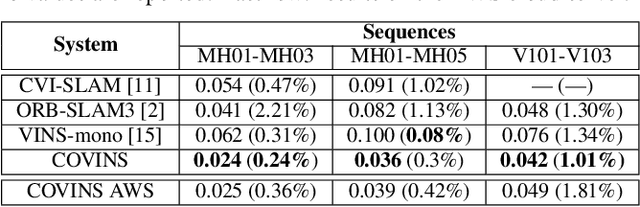

Abstract: Collaborative SLAM is at the core of perception in multi-robot systems as it enables the co-localization of the team of robots in a common reference frame, which is of vital importance for any coordination amongst them. The paradigm of a centralized architecture is well established, with the robots (i.e. agents) running Visual-Inertial Odometry (VIO) onboard while communicating relevant data, such as Keyframes (KFs), to a central back-end (i.e. server), which then merges and optimizes the joint maps of the agents. While these frameworks have proven to be successful, their capability and performance are highly dependent on the choice of the VIO front-end, thus limiting their flexibility. In this work, we present COVINS-G, a generalized back-end building upon the COVINS framework, which makes the server back-end compatible with any VIO front-end, including, for example, off-the-shelf cameras with odometry capabilities, such as the Realsense T265. The COVINS-G back-end deploys a multi-camera relative pose estimation algorithm for computing the loop-closure constraints, allowing the system to work purely on 2D image data. In the experimental evaluation, we show on-par accuracy with state-of-the-art multi-session and collaborative SLAM systems, while demonstrating the flexibility and generality of our approach by employing different front-ends onboard collaborating agents within the same mission. The COVINS-G codebase is open-sourced, along with a generalized front-end wrapper that allows any existing VIO front-end to be readily used in combination with the proposed collaborative back-end. Video: https://youtu.be/FoJfXCfaYDw
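To make the idea of co-localization in a common reference frame concrete, the following minimal sketch shows how a single inter-agent loop-closure constraint could align one agent's odometry frame with another's. It is plain rigid-transform composition under assumed conventions, not the COVINS-G implementation: the helper names `make_pose` and `align_frames`, the `T_x_y` naming (maps coordinates from frame `y` into frame `x`) and all numeric values are hypothetical.

```python
import numpy as np

def make_pose(yaw, translation):
    """Build a 4x4 homogeneous transform from a yaw angle (rad) and a translation."""
    c, s = np.cos(yaw), np.sin(yaw)
    T = np.eye(4)
    T[:3, :3] = [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
    T[:3, 3] = translation
    return T

def align_frames(T_a_ka, T_b_kb, T_ka_kb):
    """Return T_a_b, mapping agent B's odometry frame into agent A's frame.

    T_a_ka : pose of keyframe ka in agent A's odometry frame
    T_b_kb : pose of keyframe kb in agent B's odometry frame
    T_ka_kb: relative pose between the two keyframes (the loop-closure constraint)
    """
    return T_a_ka @ T_ka_kb @ np.linalg.inv(T_b_kb)

if __name__ == "__main__":
    # Assumed ground truth, used only to synthesize a consistent constraint.
    T_a_b_true = make_pose(0.7, [5.0, -2.0, 1.0])
    T_a_ka = make_pose(0.2, [1.0, 0.5, 0.0])
    T_b_kb = make_pose(-0.4, [3.0, 1.0, 0.2])
    T_ka_kb = np.linalg.inv(T_a_ka) @ T_a_b_true @ T_b_kb
    T_a_b = align_frames(T_a_ka, T_b_kb, T_ka_kb)
    print(np.allclose(T_a_b, T_a_b_true))
```

In a real back-end, many such constraints would be fused in a joint optimization rather than applied one at a time.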

COVINS: Visual-Inertial SLAM for Centralized Collaboration

Aug 12, 2021

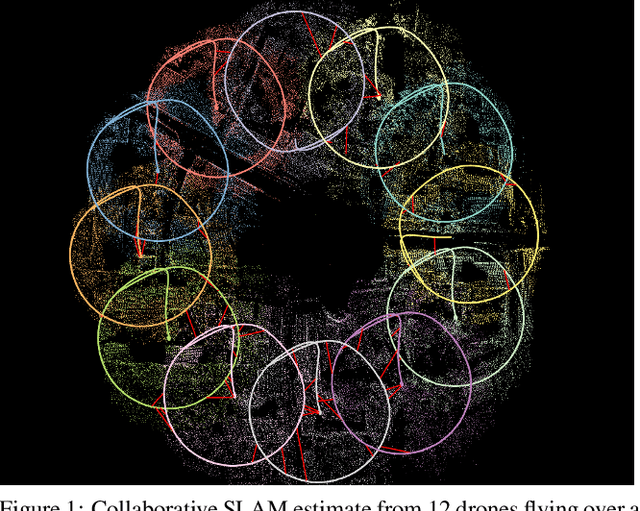

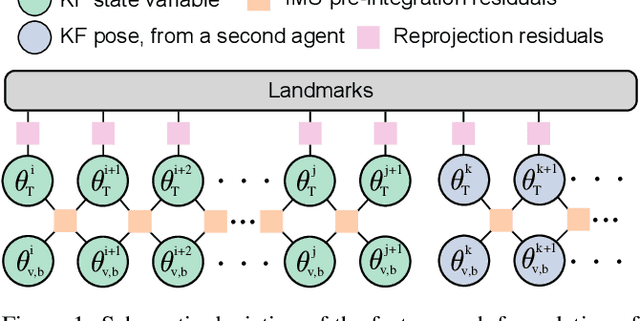

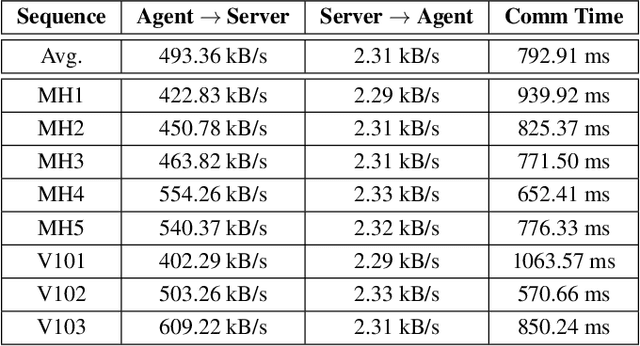

Abstract: Collaborative SLAM enables a group of agents to simultaneously co-localize and jointly map an environment, thus paving the way to wide-ranging applications of multi-robot perception and multi-user AR experiences by eliminating the need for external infrastructure or pre-built maps. This article presents COVINS, a novel collaborative SLAM system that enables multi-agent, scalable SLAM in large environments and for large teams of more than 10 agents. The paradigm here is that each agent runs visual-inertial odometry independently onboard in order to ensure its autonomy, while sharing map information with the COVINS server back-end running on a powerful local PC or a remote cloud server. The server back-end establishes an accurate collaborative global estimate from the contributed data, refining the joint estimate by means of place recognition, global optimization and removal of redundant data, in order to ensure an accurate, but also efficient SLAM process. A thorough evaluation of COVINS reveals increased accuracy of the collaborative SLAM estimates, as well as efficiency in both removing redundant information and reducing the coordination overhead, and demonstrates successful operation in a large-scale mission with 12 agents jointly performing SLAM.

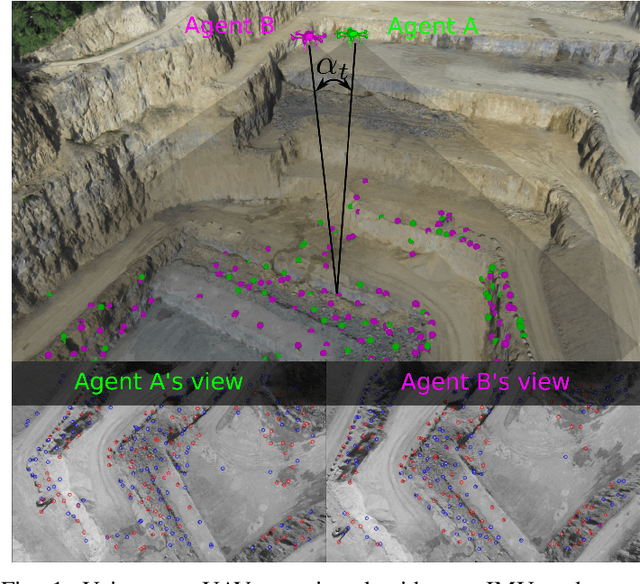

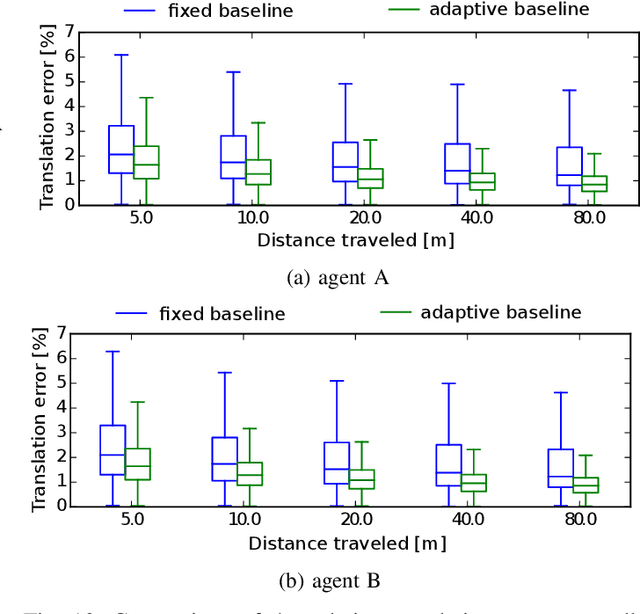

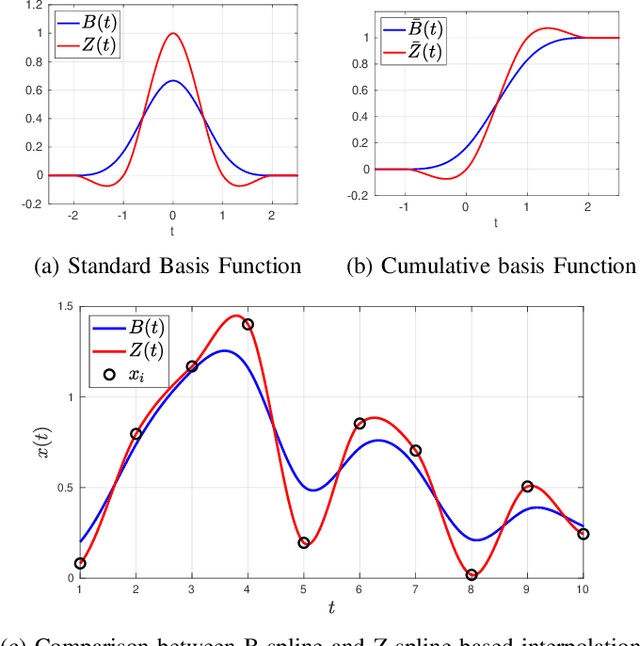

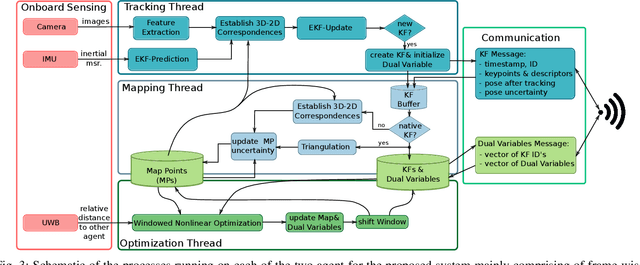

Distributed Variable-Baseline Stereo SLAM from two UAVs

Sep 10, 2020

Abstract: VIO has been widely used and researched to control and aid the automation of navigation of robots, especially in the absence of absolute position measurements, such as GPS. However, when observable landmarks in the scene lie far away from the robot's sensor suite, as is the case in high-altitude flights, the fidelity of estimates and the observability of the metric scale degrade greatly for these methods. Aiming to tackle this issue, in this article, we employ two UAVs equipped with one monocular camera and one IMU each, to exploit their view overlap and relative distance measurements between them using UWB modules onboard to enable collaborative VIO. In particular, we propose a novel, distributed fusion scheme enabling the formation of a virtual stereo camera rig with adjustable baseline from the two UAVs. In order to control the UAV agents autonomously, we propose a decentralized collaborative estimation scheme, where each agent holds its own local map, achieving an average pose estimation latency of 11ms, while ensuring consistency of the agents' estimates via consensus-based optimization. Following a thorough evaluation on photorealistic simulations, we demonstrate the effectiveness of the approach at high-altitude flights of up to 160m, going significantly beyond the capabilities of state-of-the-art VIO methods. Finally, we show the advantage of actively adjusting the baseline on-the-fly over a fixed, target baseline, reducing the error in our experiments by a factor of two.
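The benefit of a wider baseline at high altitude can be illustrated with the standard first-order pinhole stereo model, where depth is z = f·b/d and a disparity error δd produces a depth error of roughly z²/(f·b)·δd. The sketch below is a textbook approximation rather than the paper's fusion scheme; the function names, the focal length and the disparity noise are assumed values chosen for illustration.

```python
def depth_std(depth_m, baseline_m, focal_px, disparity_std_px):
    """First-order depth uncertainty of an ideal rectified stereo pair.

    From z = f * b / d it follows that dz ~= z**2 / (f * b) * dd.
    """
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_std_px

def baseline_for_relative_error(depth_m, focal_px, disparity_std_px, rel_err):
    """Baseline needed so that depth_std / depth equals rel_err (same model)."""
    return depth_m * disparity_std_px / (focal_px * rel_err)

if __name__ == "__main__":
    focal_px = 500.0   # assumed focal length in pixels
    noise_px = 0.5     # assumed disparity noise in pixels
    altitude = 160.0   # scene depth in metres
    for b in (1.0, 2.0, 8.0):
        sigma = depth_std(altitude, b, focal_px, noise_px)
        print(f"baseline {b:4.1f} m -> depth std {sigma:5.2f} m")
    needed = baseline_for_relative_error(altitude, focal_px, noise_px, 0.02)
    print(f"baseline for 2% depth error: {needed:.1f} m")
```

Under this model the depth uncertainty grows with the square of the distance but shrinks in proportion to the baseline, which is why a baseline that adapts to scene depth is attractive.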
