Linh Nguyen

University of Michigan

Scalable AI Framework for Defect Detection in Metal Additive Manufacturing

Nov 01, 2024

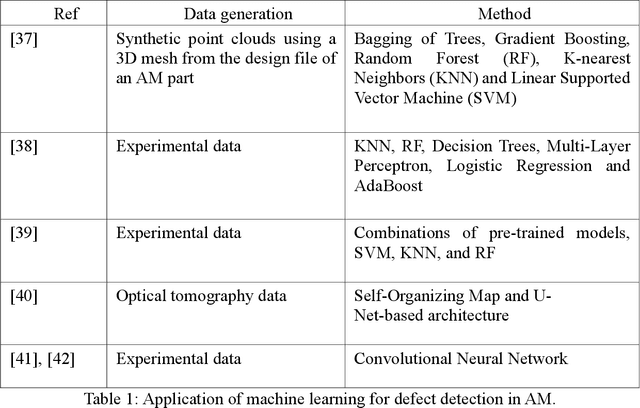

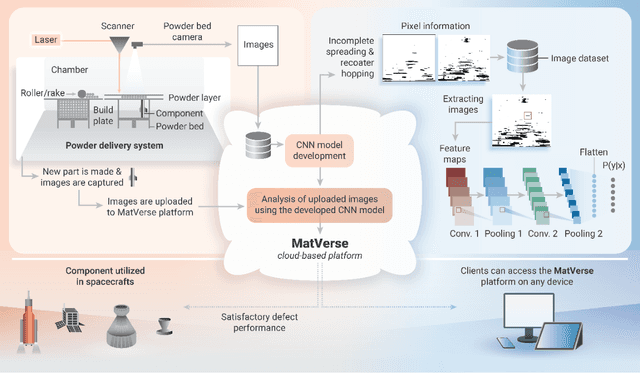

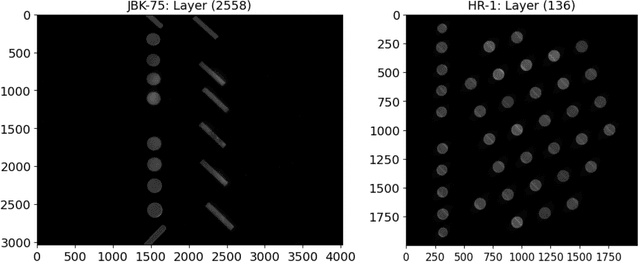

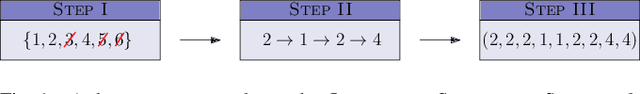

Abstract: Additive Manufacturing (AM) is transforming the manufacturing sector by enabling efficient production of intricately designed products and small-batch components. However, metal parts produced via AM can include flaws that degrade mechanical properties, including fatigue response, yield strength, and fracture toughness. To address this issue, we leverage convolutional neural networks (CNNs) to analyze thermal images of printed layers, automatically identifying anomalies that impact these properties. We also investigate various synthetic data generation techniques to address limited and imbalanced AM training data. Our models' defect detection capabilities were assessed using images of Nickel alloy 718 layers produced on a laser powder bed fusion AM machine and synthetic datasets with and without added noise. Our results show significant accuracy improvements with synthetic data, emphasizing the importance of expanding training sets for reliable defect detection. Specifically, datasets generated by Generative Adversarial Networks (GANs) streamlined data preparation by eliminating human intervention while maintaining high performance, thereby enhancing defect detection capabilities. Additionally, our denoising approach effectively improves image quality, ensuring reliable defect detection. Finally, our work integrates these models into the CLoud ADditive MAnufacturing (CLADMA) module, a user-friendly interface, to enhance their accessibility and practicality for AM applications. This integration supports broader adoption and practical implementation of advanced defect detection in AM processes.

Provable Methods for Searching with an Imperfect Sensor

Oct 08, 2024

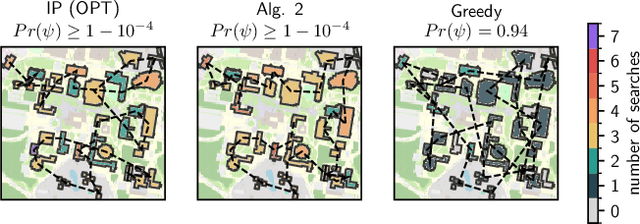

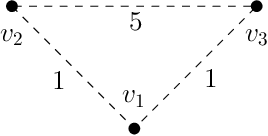

Abstract: Assume that a target is known to be present at an unknown point among a finite set of locations in the plane. We search for it using a mobile robot that has imperfect sensing capabilities. It takes time for the robot to move between locations and search a location; we have a total time budget within which to conduct the search. We study the problem of computing a search path/strategy for the robot that maximizes the probability of detection of the target. Considering non-uniform travel times between points (e.g., based on the distance between them) is crucial for search and rescue applications; such problems have been investigated to a limited extent due to their inherent complexity. In this paper, we describe fast algorithms with performance guarantees for this search problem and some variants, complement them with complexity results, and perform experiments to observe their performance.
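To make the objective concrete, the following is a minimal sketch of a brute-force baseline for tiny instances. It is not one of the paper's algorithms. The sensor model is an assumption: each look at the correct location detects the target independently with probability `q`, and there are no false positives. The names `detection_probability` and `best_search_plan`, the fixed `search_time`, and Euclidean travel times are also illustrative choices.

```python
from math import dist


def detection_probability(visits, prior, q):
    """P(detect) when location i has been searched visits[i] times.

    Assumes independent looks, each detecting a present target with
    probability q, and no false positives.
    """
    return sum(p * (1.0 - (1.0 - q) ** k) for p, k in zip(prior, visits))


def best_search_plan(points, prior, q, search_time, budget, start=0):
    """Exhaustively enumerate visit sequences that fit in the time budget.

    The robot starts at points[start] without having searched it. Each step
    costs the Euclidean travel time plus search_time. Runtime is exponential,
    so this is only usable on very small instances.
    """
    if search_time <= 0:
        raise ValueError("search_time must be positive so the recursion ends")
    n = len(points)
    best = (0.0, [])

    def explore(current, time_left, visits, path):
        nonlocal best
        value = detection_probability(visits, prior, q)
        if value > best[0]:
            best = (value, list(path))
        for nxt in range(n):
            cost = dist(points[current], points[nxt]) + search_time
            if cost <= time_left:
                visits[nxt] += 1
                path.append(nxt)
                explore(nxt, time_left - cost, visits, path)
                path.pop()
                visits[nxt] -= 1

    explore(start, budget, [0] * n, [])
    return best
```

Even this toy version shows why travel time matters: with a tight budget it can be better to search a likely location twice than to travel to a second one.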

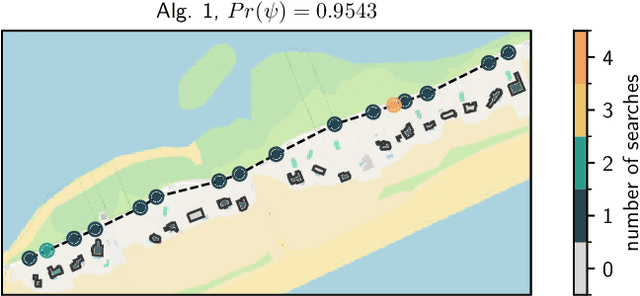

Coverage Path Planning For Minimizing Expected Time to Search For an Object With Continuous Sensing

Aug 01, 2024

Abstract: In this paper, we present several results of both theoretical and practical interest. First, we propose the quota lawn mowing problem, an extension of the classic lawn mowing problem in computational geometry, as follows: given a quota of coverage, compute the shortest lawn mowing route to achieve said quota. We give constant-factor approximations for the quota lawn mowing problem. Second, we investigate the expected detection time minimization problem in geometric coverage path planning with local, continuous sensory information. We provide the first approximation algorithm with provable error bounds and pseudopolynomial running time. Our ideas also extend to another search mechanism, namely visibility-based search, which is related to the watchman route problem. We complement our theoretical analysis with some simple but effective heuristics for finding an object in minimum expected time, on which we provide simulation results.

Revisiting the Disequilibrium Issues in Tackling Heart Disease Classification Tasks

Jul 19, 2024

Abstract: In the field of heart disease classification, two primary obstacles arise. Firstly, existing Electrocardiogram (ECG) datasets consistently demonstrate imbalances and biases across various modalities. Secondly, these time-series data consist of diverse lead signals, causing Convolutional Neural Networks (CNNs) to overfit to the lead with higher power, hence diminishing the performance of the Deep Learning (DL) process. In addition, when facing an imbalanced dataset, performance from such high-dimensional data may be susceptible to overfitting. Despite these evident challenges, current efforts predominantly focus on enhancing DL models by designing novel architectures, seemingly overlooking the core issues and therefore hindering advancements in heart disease classification. To address these obstacles, our proposed approach introduces two straightforward and direct methods to enhance the classification tasks. To address the high dimensionality issue, we employ a Channel-wise Magnitude Equalizer (CME) on signal-encoded images. This approach reduces redundancy in the feature data range, highlighting changes in the dataset. Simultaneously, to counteract data imbalance, we propose the Inverted Weight Logarithmic Loss (IWL) to alleviate imbalances among the data. When applying IWL loss, the accuracy of state-of-the-art (SOTA) models increases by up to 5% on the CPSC2018 dataset. CME in combination with IWL also surpasses the classification results of other baseline models by 5% to 10%.

Large language models can be zero-shot anomaly detectors for time series?

May 23, 2024

Abstract: Recent studies have shown the ability of large language models to perform a variety of tasks, including time series forecasting. The flexible nature of these models allows them to be used for many applications. In this paper, we present a novel study of large language models used for the challenging task of time series anomaly detection. This problem entails two aspects novel for LLMs: the need for the model to identify part of the input sequence (or multiple parts) as anomalous; and the need for it to work with time series data rather than the traditional text input. We introduce sigllm, a framework for time series anomaly detection using large language models. Our framework includes a time-series-to-text conversion module, as well as end-to-end pipelines that prompt language models to perform time series anomaly detection. We investigate two paradigms for testing the abilities of large language models to perform the detection task. First, we present a prompt-based detection method that directly asks a language model to indicate which elements of the input are anomalies. Second, we leverage the forecasting capability of a large language model to guide the anomaly detection process. We evaluated our framework on 11 datasets spanning various sources and 10 pipelines. We show that the forecasting method significantly outperformed the prompting method on all 11 datasets with respect to the F1 score. Moreover, while large language models are capable of finding anomalies, state-of-the-art deep learning models are still superior in performance, achieving results 30% better than large language models.
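The two ingredients named in the abstract, converting a time series to text and using forecasts to guide detection, can be illustrated with a small sketch. This is not the sigllm API: the function names, the shift-and-scale encoding, and the mean-plus-`k`-standard-deviations threshold are all assumptions made for illustration, and the forecast is taken as a given list rather than produced by a language model.

```python
from statistics import mean, pstdev


def series_to_text(values, decimals=2):
    """Encode a numeric series as comma-separated non-negative integers.

    Hypothetical encoding: subtract the minimum so all values are
    non-negative, scale by 10**decimals, and round to integers.
    """
    low = min(values)
    scaled = [round((v - low) * 10 ** decimals) for v in values]
    return ",".join(str(s) for s in scaled)


def flag_by_forecast_error(actual, forecast, k=2.0):
    """Return indices whose absolute forecast error is unusually large.

    An index is flagged when its error exceeds mean + k * std of all
    errors. The forecast would come from a model; here it is an input.
    """
    errors = [abs(a - f) for a, f in zip(actual, forecast)]
    threshold = mean(errors) + k * pstdev(errors)
    return [i for i, e in enumerate(errors) if e > threshold]
```

For example, `series_to_text([1.5, 2.25, 1.0])` yields a string that can be placed directly in a prompt, and `flag_by_forecast_error` turns any forecaster into a simple detector by thresholding its residuals.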

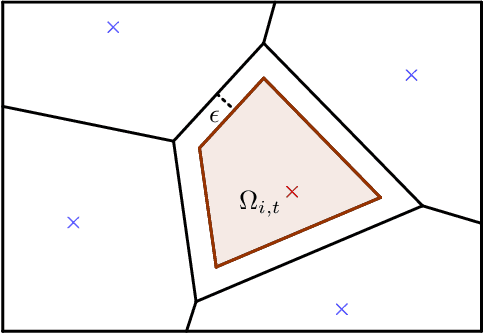

Spatially temporally distributed informative path planning for multi-robot systems

Mar 25, 2024

Abstract: This paper investigates the problem of informative path planning for a mobile robotic sensor network in spatially temporally distributed mapping. The robots are able to gather noisy measurements from an area of interest during their movements to build a Gaussian Process (GP) model of a spatio-temporal field. The model is then utilized to predict the spatio-temporal phenomenon at different points of interest. To spatially and temporally navigate the group of robots so that they acquire maximal information gain while their connectivity is preserved, we propose a novel multistep prediction informative path planning optimization strategy employing our newly defined local cost functions. By using the dual decomposition method, it is feasible and practical to solve the optimization problem in a distributed manner. The proposed method was validated through synthetic experiments utilizing real-world data sets.

Depth-based Sampling and Steering Constraints for Memoryless Local Planners

Nov 06, 2022

Abstract: By utilizing only depth information, the paper introduces a novel and efficient local planning approach that enhances not only computational efficiency but also planning performance for memoryless local planners. The sampling is first proposed to be based on the depth data, which can identify and eliminate a specific type of in-collision trajectories in the sampled motion primitive library. More specifically, all the obscured primitives' endpoints are found through querying the depth values and excluded from the sampled set, which can significantly reduce the computational workload required in collision checking. In addition, we propose a steering mechanism, also based on the depth information, to effectively prevent an autonomous vehicle from getting stuck when facing a large convex obstacle, providing a higher level of autonomy for a planning system. Our steering technique is theoretically proved to be complete in scenarios of convex obstacles. To evaluate the effectiveness of the proposed DEpth-based Sampling and Steering (DESS) methods, we implemented them in synthetic environments where a quadrotor was simulated flying through a cluttered region with multiple obstacles of different sizes. The obtained results demonstrate that the proposed approach can considerably decrease computing time in local planners, where more trajectories can be evaluated while the best path with much lower cost can be found. More importantly, the success rates, measured as the fraction of runs in which the robot navigated to its destination across different testing scenarios, exceed 99.6% on average.
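The endpoint-exclusion step can be sketched as a depth lookup per primitive. This is an illustration under stated assumptions, not the DESS implementation: endpoints are assumed to be expressed in the camera frame with `z` pointing forward, the camera is modelled as a pinhole with hypothetical intrinsics `fx`, `fy`, `cx`, `cy`, the depth image is a nested list in metres, and endpoints that fall outside the image are kept because the depth data says nothing about them.

```python
def is_obscured(endpoint, depth_image, fx, fy, cx, cy, margin=0.0):
    """True if the endpoint lies behind the surface seen at its pixel."""
    x, y, z = endpoint
    if z <= 0:
        return False  # behind the camera: the depth image cannot decide
    # Pinhole projection of the 3D endpoint to integer pixel coordinates.
    u = int(round(fx * x / z + cx))
    v = int(round(fy * y / z + cy))
    rows, cols = len(depth_image), len(depth_image[0])
    if not (0 <= v < rows and 0 <= u < cols):
        return False  # outside the field of view: keep for later checks
    # Obscured when the endpoint is farther away than the measured depth.
    return z > depth_image[v][u] - margin


def filter_primitives(endpoints, depth_image, fx, fy, cx, cy, margin=0.0):
    """Indices of primitives whose endpoints are not obscured."""
    return [
        i
        for i, p in enumerate(endpoints)
        if not is_obscured(p, depth_image, fx, fy, cx, cy, margin)
    ]
```

Only the surviving indices would then go through full collision checking, which is where the reduction in workload described above would come from.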

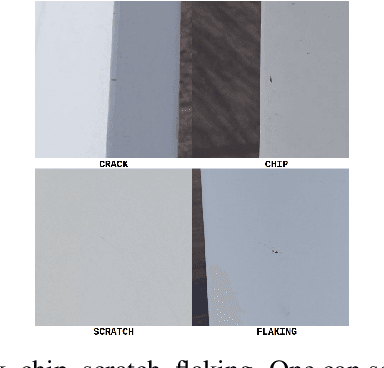

Learning to identify cracks on wind turbine blade surfaces using drone-based inspection images

Jul 20, 2022

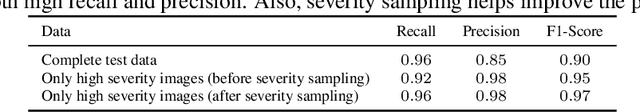

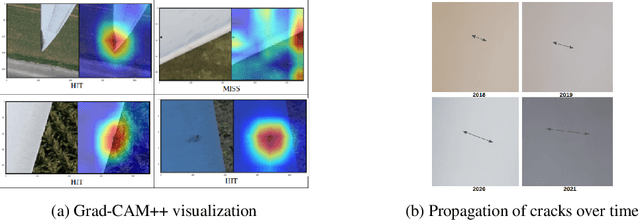

Abstract: Wind energy is expected to be one of the leading ways to achieve the goals of the Paris Agreement, but it in turn heavily depends on effective management of its operations and maintenance (O&M) costs. Blade failures account for one-third of all O&M costs, making accurate detection of blade damages, especially cracks, very important for sustained operations and cost savings. Traditionally, damage inspection has been a completely manual process, making it subjective, error-prone, and time-consuming. Hence, in this work, we use deep learning to bring more objectivity, scalability, and repeatability to our damage inspection process and to miss fewer cracks. We build a deep learning model trained on a large dataset of blade damages, collected by our drone-based inspection, to correctly detect cracks. Our model is already in production and has processed more than a million damages with a recall of 0.96. We also focus on model interpretability using class activation maps to get a peek into the model workings. The model not only performs as well as human experts but also better in certain tricky cases. Thus, in this work, we aim to increase wind energy adoption by decreasing one of its major hurdles: the O&M costs resulting from missing blade failures like cracks.

An Automated System for Detecting Visual Damages of Wind Turbine Blades

May 22, 2022

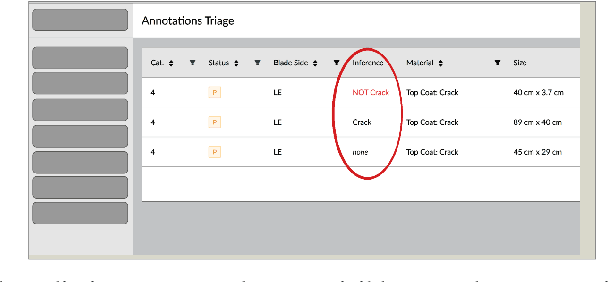

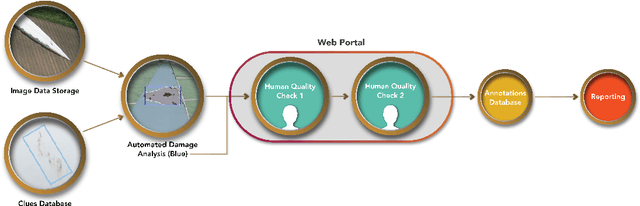

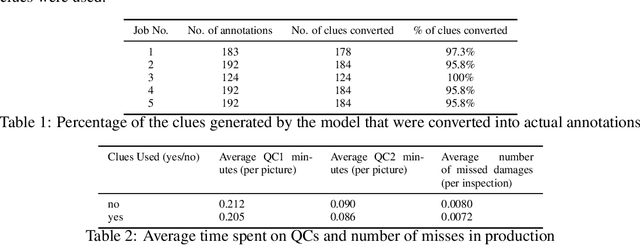

Abstract: Wind energy's ability to compete with fossil fuels on a market level depends on lowering wind's high operational costs. Since damages on wind turbine blades are the leading cause of these operational problems, identifying blade damages is critical. However, recent works in visual identification of blade damages are still experimental and focus on optimizing traditional machine learning metrics such as IoU. In this paper, we argue that pushing models to production long before achieving the "optimal" model performance can still generate real value for this use case. We discuss the performance of our damage suggestion model in production, how this system works in coordination with humans as part of a commercialized product, and how it can contribute towards lowering wind energy's operational costs.

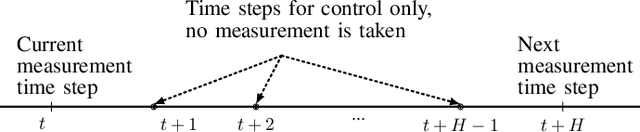

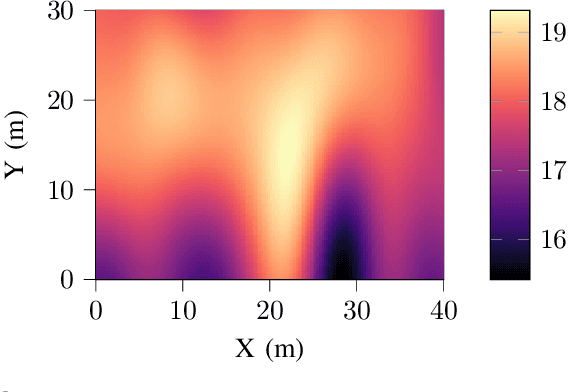

ADMM-based Adaptive Sampling Strategy for Nonholonomic Mobile Robotic Sensor Networks

Jan 27, 2021

Abstract: This paper discusses the adaptive sampling problem in a nonholonomic mobile robotic sensor network for efficiently monitoring a spatial field. It is proposed to employ a Gaussian process to model a spatial phenomenon and predict it at unmeasured positions, which enables the sampling optimization problem to be formulated using the log determinant of a predicted covariance matrix at the next sampling locations. The control, movement and nonholonomic dynamics constraints of the mobile sensors are also considered in the adaptive sampling optimization problem. In order to tackle the nonlinearity and nonconvexity of the objective function in the optimization problem, we first exploit the linearized alternating direction method of multipliers (L-ADMM), which can effectively simplify the objective function, though it is computationally expensive since a nonconvex problem needs to be solved exactly in each iteration. We then propose a novel approach called the successive convexified ADMM (SC-ADMM) that sequentially convexifies the nonlinear dynamic constraints so that the original optimization problem can be split into convex subproblems. It is noted that both the L-ADMM algorithm and our SC-ADMM approach can solve the sampling optimization problem in either a centralized or a distributed manner. We validated the proposed approaches in 1000 experiments in a synthetic environment with a real-world dataset, where the obtained results suggest that both the L-ADMM and SC-ADMM techniques can provide good accuracy for the monitoring purpose. However, our proposed SC-ADMM approach computationally outperforms the L-ADMM counterpart, demonstrating its better practicality.
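The objective mentioned above, the log determinant of the predicted covariance at candidate sampling locations, can be computed as in the sketch below. It only evaluates the quantity and does not implement L-ADMM or SC-ADMM. The squared-exponential kernel, its hyperparameters, the noise level, and all function names are assumptions chosen for illustration; the standard GP posterior covariance K** - K*ᵀ(K + σ²I)⁻¹K* is used.

```python
import math


def rbf(a, b, length=1.0, variance=1.0):
    """Squared-exponential kernel (an assumed choice of covariance)."""
    return variance * math.exp(-0.5 * math.dist(a, b) ** 2 / length ** 2)


def cholesky(m):
    """Lower-triangular Cholesky factor of a symmetric positive-definite matrix."""
    n = len(m)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = m[i][j] - sum(low[i][k] * low[j][k] for k in range(j))
            low[i][j] = math.sqrt(s) if i == j else s / low[j][j]
    return low


def forward_solve(low, b):
    """Solve low @ x = b for lower-triangular low."""
    x = []
    for i, row in enumerate(low):
        x.append((b[i] - sum(row[k] * x[k] for k in range(i))) / row[i])
    return x


def posterior_logdet(measured, candidates, noise=0.1):
    """log det of the GP posterior covariance at the candidate locations."""
    k_mm = [
        [rbf(a, b) + (noise ** 2 if i == j else 0.0) for j, b in enumerate(measured)]
        for i, a in enumerate(measured)
    ]
    low = cholesky(k_mm)
    # One column per candidate: L^{-1} k(measured, candidate).
    cols = [forward_solve(low, [rbf(m, c) for m in measured]) for c in candidates]
    post = [
        [
            rbf(ci, cj) - sum(p * q for p, q in zip(cols[i], cols[j]))
            for j, cj in enumerate(candidates)
        ]
        for i, ci in enumerate(candidates)
    ]
    post_low = cholesky(post)
    return 2.0 * sum(math.log(post_low[i][i]) for i in range(len(candidates)))
```

A candidate far from existing measurements keeps a posterior variance close to the prior, so its log determinant is larger than that of a candidate next to a measured point, which is what makes this quantity usable as an informativeness score.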
