Laura Zheng

GM3: A General Physical Model for Micro-Mobility Vehicles

Oct 09, 2025

Abstract:Modeling the dynamics of micro-mobility vehicles (MMV) is becoming increasingly important for training autonomous vehicle systems and building urban traffic simulations. However, mainstream tools rely on variants of the Kinematic Bicycle Model (KBM) or mode-specific physics that miss tire slip, load transfer, and rider/vehicle lean. To our knowledge, no unified, physics-based model captures these dynamics across the full range of common MMVs and wheel layouts. We propose the "Generalized Micro-mobility Model" (GM3), a tire-level formulation based on the tire brush representation that supports arbitrary wheel configurations, including single/double track and multi-wheel platforms. We introduce an interactive model-agnostic simulation framework that decouples vehicle/layout specification from dynamics to compare the GM3 with the KBM and other models, consisting of fixed step RK4 integration, human-in-the-loop and scripted control, real-time trajectory traces and logging for analysis. We also empirically validate the GM3 on the Stanford Drone Dataset's deathCircle (roundabout) scene for biker, skater, and cart classes.
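For orientation, the baseline that the abstract compares against can be sketched in a few lines. The following is a minimal illustration of a rear-axle Kinematic Bicycle Model advanced with a fixed-step RK4 integrator. It is not the GM3 formulation or the authors' framework; the function names, the state layout `(x, y, heading, speed)`, and the constant-control assumption within a step are all choices made for this sketch.

```python
import math

def kbm_derivative(state, accel, steer, wheelbase):
    """Time derivative of a rear-axle kinematic bicycle model.

    state = (x, y, heading, speed); accel and steer are held constant.
    All names here are illustrative assumptions, not the paper's API.
    """
    _, _, theta, v = state
    return (
        v * math.cos(theta),              # dx/dt
        v * math.sin(theta),              # dy/dt
        v * math.tan(steer) / wheelbase,  # dheading/dt
        accel,                            # dspeed/dt
    )

def rk4_step(state, accel, steer, wheelbase, dt):
    """Advance the state by one fixed step dt with classical RK4."""
    def shifted(k, h):
        # State displaced by h along slope k.
        return tuple(s + h * ki for s, ki in zip(state, k))

    k1 = kbm_derivative(state, accel, steer, wheelbase)
    k2 = kbm_derivative(shifted(k1, dt / 2), accel, steer, wheelbase)
    k3 = kbm_derivative(shifted(k2, dt / 2), accel, steer, wheelbase)
    k4 = kbm_derivative(shifted(k3, dt), accel, steer, wheelbase)
    return tuple(
        s + dt / 6 * (a + 2 * b + 2 * c + d)
        for s, a, b, c, d in zip(state, k1, k2, k3, k4)
    )
```

With zero acceleration and a constant steering angle, repeated calls to `rk4_step` trace a circle of radius `wheelbase / tan(steer)`. The model has no notion of tire slip, load transfer, or lean, which are the effects the abstract says the KBM misses.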

Quantifying and Modeling Driving Styles in Trajectory Forecasting

Mar 06, 2025

Abstract:Trajectory forecasting has become a popular deep learning task due to its relevance for scenario simulation for autonomous driving. Specifically, trajectory forecasting predicts the trajectory of a short-horizon future for specific human drivers in a particular traffic scenario. Robust and accurate future predictions can enable autonomous driving planners to optimize for low-risk and predictable outcomes for human drivers around them. Although some work has been done to model driving style in planning and personalized autonomous policies, a gap exists in explicitly modeling human driving styles for trajectory forecasting of human behavior. Human driving style is most certainly a correlating factor to decision making, especially in edge-case scenarios where risk is nontrivial, as justified by the large amount of traffic psychology literature on risky driving. So far, the current real-world datasets for trajectory forecasting lack insight on the variety of represented driving styles. While the datasets may represent real-world distributions of driving styles, we posit that fringe driving style types may also be correlated with edge-case safety scenarios. In this work, we conduct analyses on existing real-world trajectory datasets for driving and dissect these works through the lens of driving styles, which are often intangible and non-standardized.

Gradient-based Trajectory Optimization with Parallelized Differentiable Traffic Simulation

Dec 21, 2024

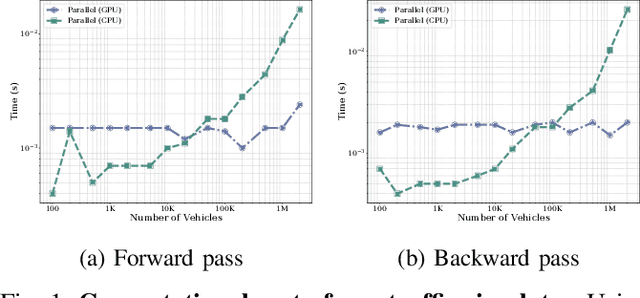

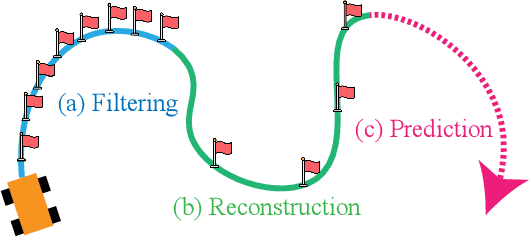

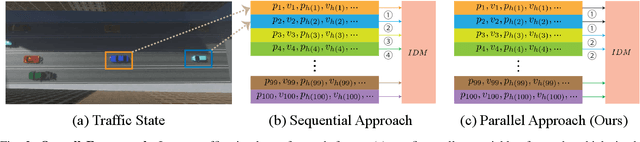

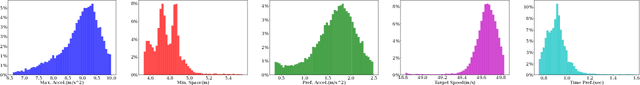

Abstract:We present a parallelized differentiable traffic simulator based on the Intelligent Driver Model (IDM), a car-following framework that incorporates driver behavior as key variables. Our simulator efficiently models vehicle motion, generating trajectories that can be supervised to fit real-world data. By leveraging its differentiable nature, IDM parameters are optimized using gradient-based methods. With the capability to simulate up to 2 million vehicles in real time, the system is scalable for large-scale trajectory optimization. We show that we can use the simulator to filter noise in the input trajectories (trajectory filtering), reconstruct dense trajectories from sparse ones (trajectory reconstruction), and predict future trajectories (trajectory prediction), with all generated trajectories adhering to physical laws. We validate our simulator and algorithm on several datasets including NGSIM and Waymo Open Dataset.
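As background for the abstract above, the standard IDM acceleration law can be written as a short function. This is a plain-Python sketch of the textbook car-following formula only, not the parallelized differentiable simulator described in the paper. The parameter names and default values are illustrative assumptions, and clamping the dynamic part of the desired gap at zero is a common convention adopted here.

```python
import math

def idm_acceleration(v, v_lead, gap,
                     v0=30.0,    # desired speed (m/s), illustrative default
                     T=1.5,      # desired time headway (s)
                     a_max=1.0,  # maximum acceleration (m/s^2)
                     b=2.0,      # comfortable deceleration (m/s^2)
                     s0=2.0,     # minimum standstill gap (m)
                     delta=4.0): # acceleration exponent
    """Acceleration of a follower under the Intelligent Driver Model.

    v: follower speed, v_lead: leader speed, gap: bumper-to-bumper distance.
    """
    dv = v - v_lead  # approach rate; positive when closing in on the leader
    # Desired gap: standstill distance plus a speed-dependent term.
    s_star = s0 + max(0.0, v * T + v * dv / (2.0 * math.sqrt(a_max * b)))
    # Free-road term minus interaction term.
    return a_max * (1.0 - (v / v0) ** delta - (s_star / gap) ** 2)
```

Every operation in the formula is smooth in the parameters `v0`, `T`, `a_max`, `b`, and `s0` away from the clamp, which is what makes fitting them by gradient descent plausible once the same expression is written in an automatic-differentiation framework.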

TRAVERSE: Traffic-Responsive Autonomous Vehicle Experience & Rare-event Simulation for Enhanced safety

Jul 12, 2024

Abstract:Data for training learning-enabled self-driving cars in the physical world are typically collected in a safe, normal environment. Such data distribution often engenders a strong bias towards safe driving, making self-driving cars unprepared when encountering adversarial scenarios like unexpected accidents. Due to a dearth of such adverse data that is unrealistic for drivers to collect, autonomous vehicles can perform poorly when experiencing such rare events. This work addresses much-needed research by having participants drive a VR vehicle simulator going through simulated traffic with various types of accidental scenarios. It aims to understand human responses and behaviors in simulated accidents, contributing to our understanding of driving dynamics and safety. The simulation framework adopts a robust traffic simulation and is rendered using the Unity Game Engine. Furthermore, the simulation framework is built with portable, light-weight immersive driving simulator hardware, lowering the resource barrier for studies in autonomous driving research.

Keywords: Rare Events, Traffic Simulation, Autonomous Driving, Virtual Reality, User Studies

Sensitivity-Informed Augmentation for Robust Segmentation

Jun 04, 2024

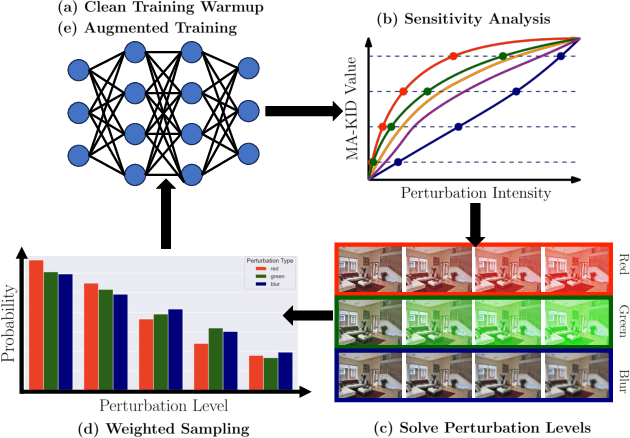

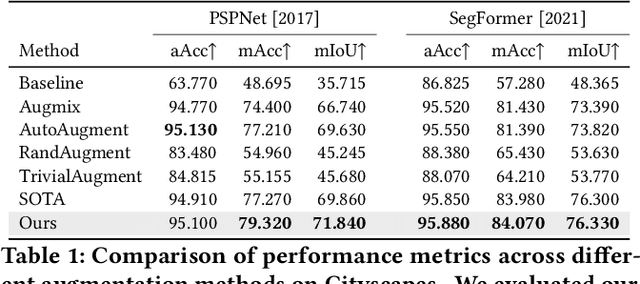

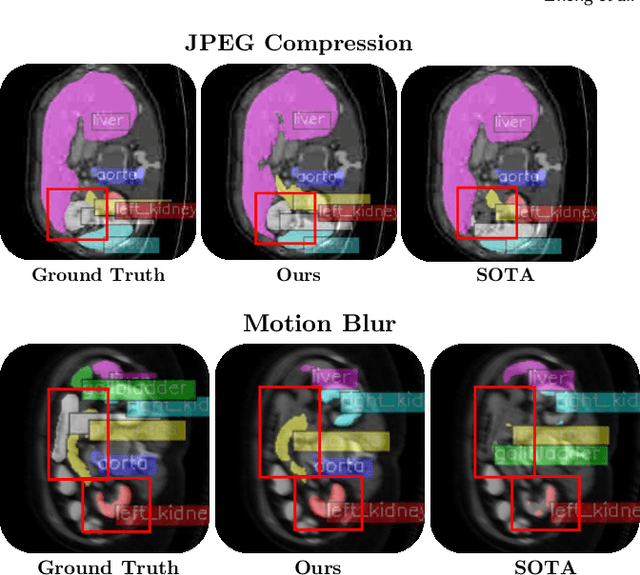

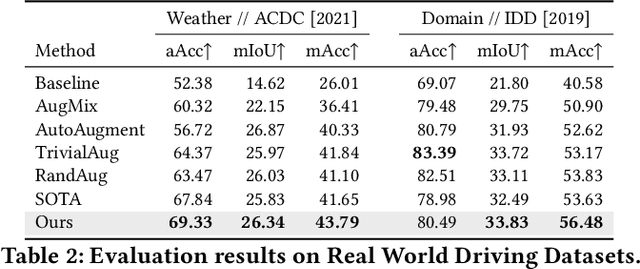

Abstract:Segmentation is an integral module in many visual computing applications such as virtual try-on, medical imaging, autonomous driving, and agricultural automation. These applications often involve either widespread consumer use or highly variable environments, both of which can degrade the quality of visual sensor data, whether from a common mobile phone or an expensive satellite imaging camera. In addition to external noises like user difference or weather conditions, internal noises such as variations in camera quality or lens distortion can affect the performance of segmentation models during both development and deployment. In this work, we present an efficient, adaptable, and gradient-free method to enhance the robustness of learning-based segmentation models across training. First, we introduce a novel adaptive sensitivity analysis (ASA) using Kernel Inception Distance (KID) on basis perturbations to benchmark perturbation sensitivity of pre-trained segmentation models. Then, we model the sensitivity curve using the adaptive SA and sample perturbation hyperparameter values accordingly. Finally, we conduct adversarial training with the selected perturbation values and dynamically re-evaluate robustness during online training. Our method, implemented end-to-end with minimal fine-tuning required, consistently outperforms state-of-the-art data augmentation techniques for segmentation. It shows significant improvement in both clean data evaluation and real-world adverse scenario evaluation across various segmentation datasets used in visual computing and computer graphics applications.

Deep Stochastic Kinematic Models for Probabilistic Motion Forecasting in Traffic

Jun 03, 2024

Abstract:Kinematic priors have been shown to boost generalization and performance in prior work on trajectory forecasting. Specifically, kinematic priors have been applied such that models predict a set of actions instead of future output trajectories. By unrolling predicted trajectories via time integration and models of kinematic dynamics, predicted trajectories are not only kinematically feasible on average but also relate uncertainty from one timestep to the next. With benchmarks now supporting multiple trajectory predictions, deterministic kinematic priors are less and less applicable to current models. We propose a method for integrating probabilistic kinematic priors into modern probabilistic trajectory forecasting architectures. The primary difference between our work and previous techniques is the analytical quantification of variance, or uncertainty, in predicted trajectories. With negligible additional computational overhead, our method can be generalized and easily implemented with any modern probabilistic method that models candidate trajectories as Gaussian distributions. In particular, our method works especially well in suboptimal settings, such as with small datasets or in the presence of noise. Our method achieves up to a 50% performance boost in small dataset settings and up to an 8% performance boost in large-scale learning compared to previous kinematic prediction methods on SOTA trajectory forecasting architectures out-of-the-box, with minimal fine-tuning. In this paper, we show four analytical formulations of probabilistic kinematic priors which can be used for any Gaussian Mixture Model (GMM)-based deep learning models, quantify the error bound on linear approximations applied during trajectory unrolling, and show results to evaluate each formulation in trajectory forecasting.
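To make the idea of carrying uncertainty through time integration concrete, here is a deliberately simplified one-dimensional sketch. It is not one of the paper's four formulations. It assumes the model predicts an independent Gaussian velocity at each timestep and that position is obtained by Euler integration, in which case means and variances both accumulate in closed form. The function name and argument layout are hypothetical.

```python
def unroll_gaussian_velocity(pos_mean, pos_var, vel_means, vel_vars, dt):
    """Unroll 1D positions from per-step Gaussian velocity predictions.

    Assumes each velocity is independent of the current position, so for
    x_next = x + v * dt:
        mean(x_next) = mean(x) + mean(v) * dt
        var(x_next)  = var(x)  + var(v) * dt**2
    Returns two lists: position means and position variances per step.
    """
    means, variances = [], []
    for v_mean, v_var in zip(vel_means, vel_vars):
        pos_mean = pos_mean + v_mean * dt
        pos_var = pos_var + v_var * dt ** 2
        means.append(pos_mean)
        variances.append(pos_var)
    return means, variances
```

Under these assumptions the position variance can only grow along the horizon, which matches the intuition that uncertainty at one timestep feeds into the next. Nonlinear kinematic models would need an approximation step, which is the kind of linearization error the abstract says the paper bounds.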

Towards Driving Policies with Personality: Modeling Behavior and Style in Risky Scenarios via Data Collection in Virtual Reality

Mar 08, 2023

Abstract:Autonomous driving research currently faces data sparsity in representation of risky scenarios. Such data is both difficult to obtain ethically in the real world, and unreliable to obtain via simulation. Recent advances in virtual reality (VR) driving simulators lower barriers to tackling this problem in simulation. We propose the first data collection framework for risky scenario driving data from real humans using VR, as well as accompanying numerical driving personality characterizations. We validate the resulting dataset with statistical analyses and model driving behavior with an eight-factor personality vector based on the Multi-dimensional Driving Style Inventory (MDSI). Our method, dataset, and analyses show that realistic driving personalities can be modeled without deep learning or large datasets to complement autonomous driving research.

Traffic-Aware Autonomous Driving with Differentiable Traffic Simulation

Oct 07, 2022

Abstract:While there have been advancements in autonomous driving control and traffic simulation, there has been little to no work exploring the unification of both with deep learning. Works in both areas seem to focus on entirely different exclusive problems, yet traffic and driving have inherent semantic relations in the real world. In this paper, we present a generalizable distillation-style method for traffic-informed imitation learning that directly optimizes an autonomous driving policy for the overall benefit of faster traffic flow and lower energy consumption. We capitalize on improving the arbitrarily defined supervision of speed control in imitation learning systems, as most driving research focuses on perception and steering. Moreover, our method addresses the lack of co-simulation between traffic and driving simulators and lays groundwork for directly involving traffic simulation with autonomous driving in future work. Our results show that, with information from traffic simulation involved in supervision of imitation learning methods, an autonomous vehicle can learn how to accelerate in a fashion that is beneficial for traffic flow and overall energy consumption for all nearby vehicles.

Improving Generalization of Transfer Learning Across Domains Using Spatio-Temporal Features in Autonomous Driving

Mar 15, 2021

Abstract:Training vision-based autonomous driving in the real world can be inefficient and impractical. Vehicle simulation can be used to learn in the virtual world, and the acquired skills can be transferred to handle real-world scenarios more effectively. Between virtual and real visual domains, common features such as relative distance to road edges and other vehicles over time are consistent. These visual elements are intuitively crucial for human decision making during driving. We hypothesize that these spatio-temporal factors can also be used in transfer learning to improve generalization across domains. First, we propose a CNN+LSTM transfer learning framework to extract the spatio-temporal features representing vehicle dynamics from scenes. Next, we conduct an ablation study to quantitatively estimate the significance of various features in the decisions of driving systems. We observe that physically interpretable factors are highly correlated with network decisions, while representational differences between scenes are not. Finally, based on the results of our ablation study, we propose a transfer learning pipeline that uses saliency maps and physical features extracted from a source model to enhance the performance of a target model. Training of our network is initialized with the learned weights from CNN and LSTM latent features (capturing the intrinsic physics of the moving vehicle w.r.t. its surroundings) transferred from one domain to another. Our experiments show that this proposed transfer learning framework better generalizes across unseen domains compared to a baseline CNN model on a binary classification learning task.

Improving Robustness of Learning-based Autonomous Steering Using Adversarial Images

Feb 26, 2021

Abstract:For safety of autonomous driving, vehicles need to be able to drive under various lighting, weather, and visibility conditions in different environments. These external and environmental factors, along with internal factors associated with sensors, can pose significant challenges to perceptual data processing, hence affecting the decision-making and control of the vehicle. In this work, we address this critical issue by introducing a framework for analyzing robustness of the learning algorithm w.r.t. varying quality in the image input for autonomous driving. Using the results of sensitivity analysis, we further propose an algorithm to improve the overall performance of the task of "learning to steer". The results show that our approach is able to enhance the learning outcomes by up to 48%. A comparative study drawn between our approach and other related techniques, such as data augmentation and adversarial training, confirms the effectiveness of our algorithm as a way to improve the robustness and generalization of neural network training for autonomous driving.
