Kory W. Mathewson

A Robot Walks into a Bar: Can Language Models Serve as Creativity Support Tools for Comedy? An Evaluation of LLMs' Humour Alignment with Comedians

Jun 03, 2024

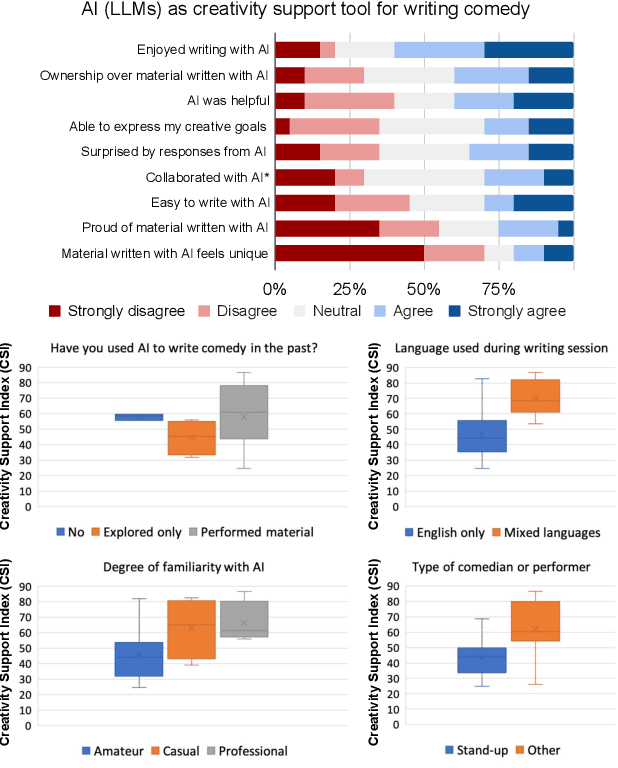

Abstract: We interviewed twenty professional comedians who perform live shows in front of audiences and who use artificial intelligence in their artistic process as part of 3-hour workshops on "AI x Comedy" conducted at the Edinburgh Festival Fringe in August 2023 and online. The workshop consisted of a comedy writing session with large language models (LLMs), a human-computer interaction questionnaire to assess the Creativity Support Index of AI as a writing tool, and a focus group interrogating the comedians' motivations for and processes of using AI, as well as their ethical concerns about bias, censorship and copyright. Participants noted that existing moderation strategies used in safety filtering and instruction-tuned LLMs reinforced hegemonic viewpoints by erasing minority groups and their perspectives, and qualified this as a form of censorship. At the same time, most participants felt the LLMs did not succeed as a creativity support tool, by producing bland and biased comedy tropes, akin to "cruise ship comedy material from the 1950s, but a bit less racist". Our work extends scholarship about the subtle difference between, on the one hand, harmful speech, and on the other hand, "offensive" language as a practice of resistance, satire and "punching up". We also interrogate the global value alignment behind such language models, and discuss the importance of community-based value alignment and data ownership to build AI tools that better suit artists' needs.

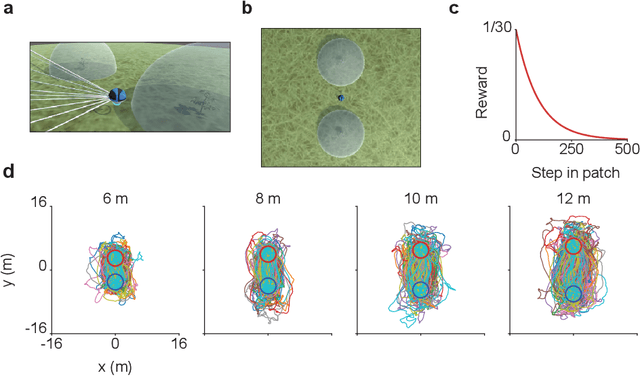

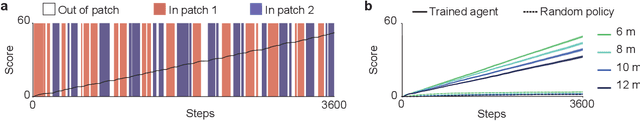

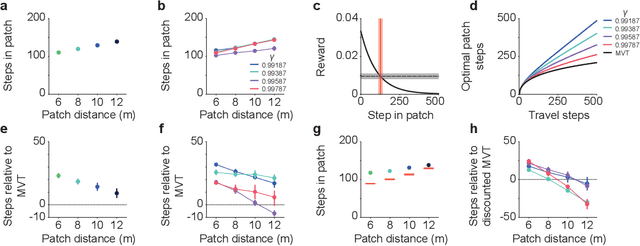

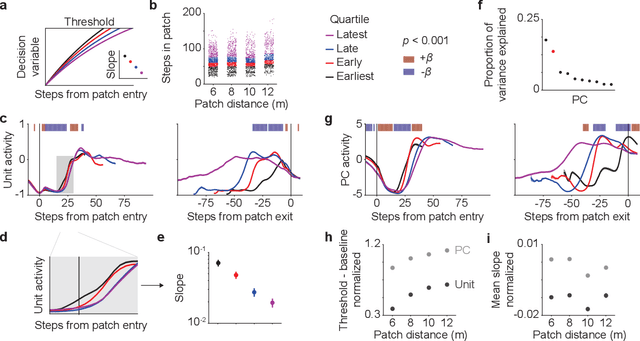

Adaptive patch foraging in deep reinforcement learning agents

Oct 14, 2022

Abstract: Patch foraging is one of the most heavily studied behavioral optimization challenges in biology. However, despite its importance to biological intelligence, this behavioral optimization problem is understudied in artificial intelligence research. Patch foraging is especially amenable to study given that it has a known optimal solution, which may be difficult to discover given current techniques in deep reinforcement learning. Here, we investigate deep reinforcement learning agents in an ecological patch foraging task. For the first time, we show that machine learning agents can learn to patch forage adaptively in patterns similar to biological foragers, and approach optimal patch foraging behavior when accounting for temporal discounting. Finally, we show emergent internal dynamics in these agents that resemble single-cell recordings from foraging non-human primates, which complements experimental and theoretical work on the neural mechanisms of biological foraging. This work suggests that agents interacting in complex environments with ecologically valid pressures arrive at common solutions, pointing to the emergence of foundational computations behind adaptive, intelligent behavior in both biological and artificial agents.

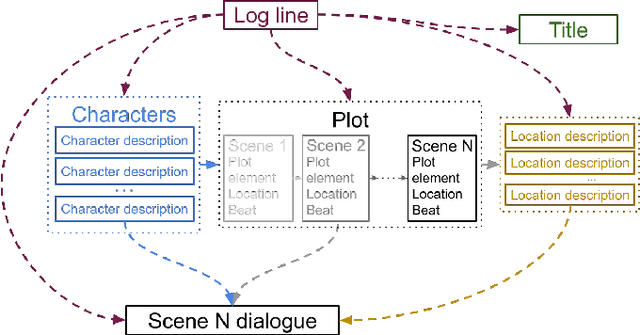

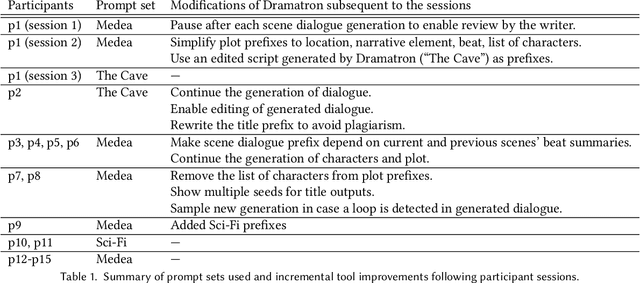

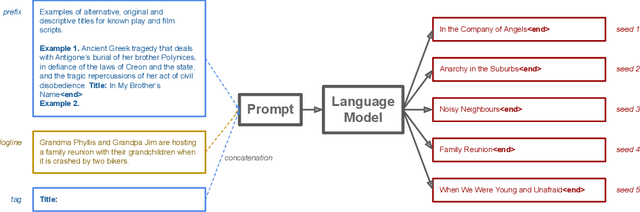

Co-Writing Screenplays and Theatre Scripts with Language Models: An Evaluation by Industry Professionals

Sep 29, 2022

Abstract: Language models are increasingly attracting interest from writers. However, such models lack long-range semantic coherence, limiting their usefulness for longform creative writing. We address this limitation by applying language models hierarchically, in a system we call Dramatron. By building structural context via prompt chaining, Dramatron can generate coherent scripts and screenplays complete with title, characters, story beats, location descriptions, and dialogue. We illustrate Dramatron's usefulness as an interactive co-creative system with a user study of 15 theatre and film industry professionals. Participants co-wrote theatre scripts and screenplays with Dramatron and engaged in open-ended interviews. We report critical reflections both from our interviewees and from independent reviewers who watched stagings of the works to illustrate how both Dramatron and hierarchical text generation could be useful for human-machine co-creativity. Finally, we discuss the suitability of Dramatron for co-creativity, ethical considerations -- including plagiarism and bias -- and participatory models for the design and deployment of such tools.

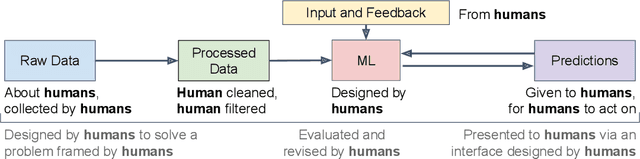

A Brief Guide to Designing and Evaluating Human-Centered Interactive Machine Learning

Apr 20, 2022

Abstract: Interactive machine learning (IML) is a field of research that explores how to leverage both human and computational abilities in decision making systems. IML represents a collaboration between multiple complementary human and machine intelligent systems working as a team, each with their own unique abilities and limitations. This teamwork might mean that both systems take actions at the same time, or in sequence. Two major open research questions in the field of IML are: "How should we design systems that can learn to make better decisions over time with human interaction?" and "How should we evaluate the design and deployment of such systems?" A lack of appropriate consideration for the humans involved can lead to problematic system behaviour, and issues of fairness, accountability, and transparency. Thus, our goal with this work is to present a human-centred guide to designing and evaluating IML systems while mitigating risks. This guide is intended to be used by machine learning practitioners who are responsible for the health, safety, and well-being of interacting humans. An obligation of responsibility for public interaction means acting with integrity, honesty, fairness, and abiding by applicable legal statutes. With these values and principles in mind, we as a machine learning research community can better achieve goals of augmenting human skills and abilities. This practical guide therefore aims to support many of the responsible decisions necessary throughout the iterative design, development, and dissemination of IML systems.

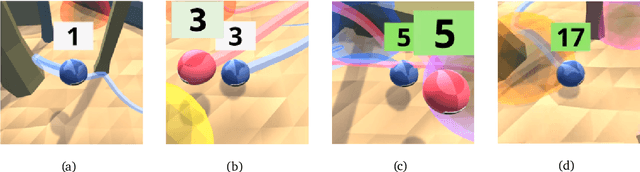

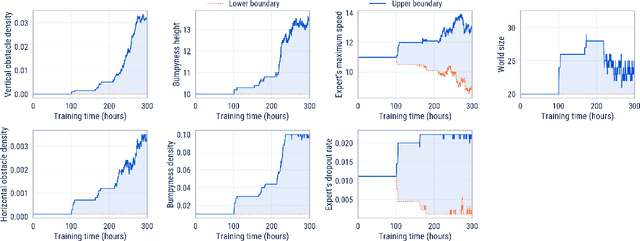

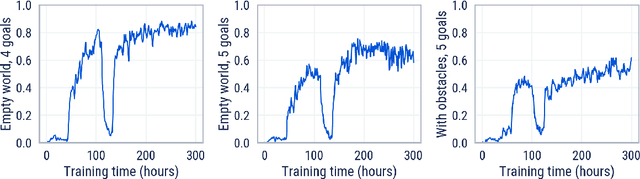

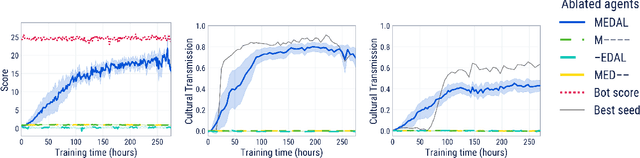

Learning Robust Real-Time Cultural Transmission without Human Data

Mar 01, 2022

Abstract: Cultural transmission is the domain-general social skill that allows agents to acquire and use information from each other in real-time with high fidelity and recall. In humans, it is the inheritance process that powers cumulative cultural evolution, expanding our skills, tools and knowledge across generations. We provide a method for generating zero-shot, high recall cultural transmission in artificially intelligent agents. Our agents succeed at real-time cultural transmission from humans in novel contexts without using any pre-collected human data. We identify a surprisingly simple set of ingredients sufficient for generating cultural transmission and develop an evaluation methodology for rigorously assessing it. This paves the way for cultural evolution as an algorithm for developing artificial general intelligence.

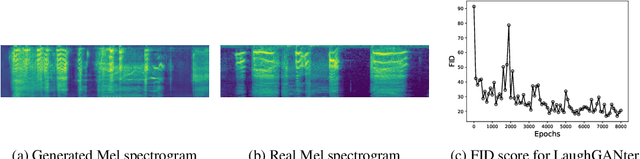

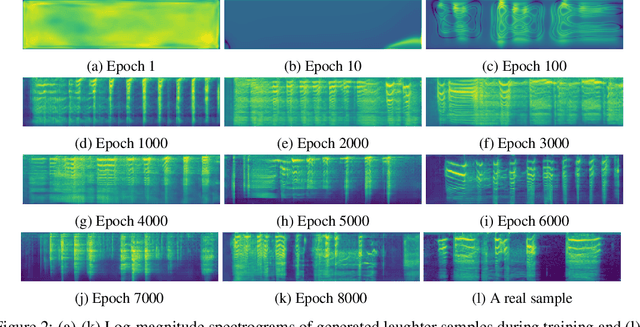

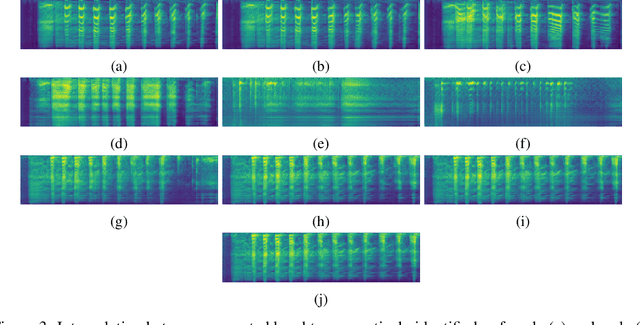

Generating Diverse Realistic Laughter for Interactive Art

Nov 04, 2021

Abstract: We propose an interactive art project to make those rendered invisible by the COVID-19 crisis and its concomitant solitude reappear through the welcome melody of laughter, and connections created and explored through advanced laughter synthesis approaches. However, the unconditional generation of the diversity of human emotional responses in high-quality auditory synthesis remains an open problem, with important implications for the application of these approaches in artistic settings. We developed LaughGANter, an approach to reproduce the diversity of human laughter using generative adversarial networks (GANs). When trained on a dataset of diverse laughter samples, LaughGANter generates diverse, high quality laughter samples, and learns a latent space suitable for emotional analysis and novel artistic applications such as latent mixing/interpolation and emotional transfer.

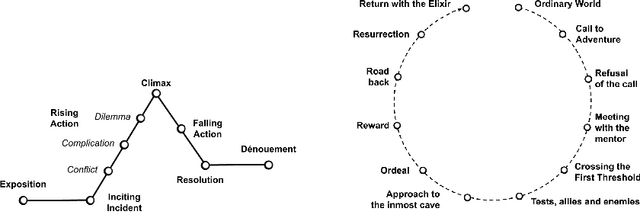

Automatic Embedding of Stories Into Collections of Independent Media

Nov 03, 2021

Abstract: We look at how machine learning techniques that derive properties of items in a collection of independent media can be used to automatically embed stories into such collections. To do so, we use models that extract the tempo of songs to make a music playlist follow a narrative arc. Our work specifies an open-source tool that uses pre-trained neural network models to extract the global tempo of a set of raw audio files and applies these measures to create a narrative-following playlist. This tool is available at https://github.com/dylanashley/playlist-story-builder/releases/tag/v1.0.0

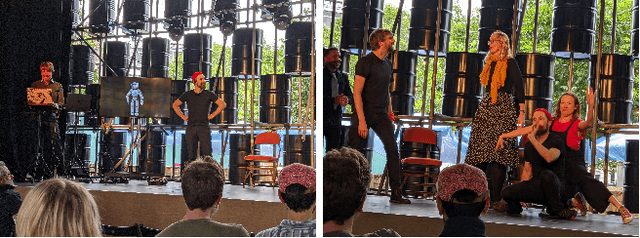

Collaborative Storytelling with Human Actors and AI Narrators

Sep 29, 2021

Abstract: Large language models can be used for collaborative storytelling. In this work we report on using GPT-3 (Brown et al., 2020) to co-narrate stories. The AI system must track plot progression and character arcs while the human actors perform scenes. This event report details how a novel conversational agent was employed as a creative partner with a team of professional improvisers to explore long-form spontaneous story narration in front of a live public audience. We introduced novel constraints on our language model to produce longer narrative text and tested the model in rehearsals with a team of professional improvisers. We then field tested the model with two live performances for public audiences as part of a live theatre festival in Europe. We surveyed audience members after each performance as well as performers to evaluate how well the AI performed in its role as narrator. Audiences and performers responded positively to AI narration and indicated a preference for AI narration over AI characters within a scene. Performers also expressed enthusiasm for the creative and meaningful novel narrative directions introduced to the scenes. Our findings support improvisational theatre as a useful test-bed to explore how different language models can collaborate with humans in a variety of social contexts.

Conceptualization and Framework of Hybrid Intelligence Systems

Dec 11, 2020

Abstract: As artificial intelligence (AI) systems become ubiquitous within our society, issues related to their fairness, accountability, and transparency are increasing rapidly. As a result, researchers are integrating humans with AI systems to build robust and reliable hybrid intelligence systems. However, a proper conceptualization of these systems does not underpin this rapid growth. This article provides a precise definition of hybrid intelligence systems and explains their relation to other similar concepts through our proposed framework and examples from contemporary literature. The framework breaks down the relationship between a human and a machine in terms of the degree of coupling and the directive authority of each party. Finally, we argue that all AI systems are hybrid intelligence systems, so human factors need to be examined at every stage of such systems' lifecycle.

A Human-Centered Approach to Interactive Machine Learning

May 15, 2019

Abstract: The interactive machine learning (IML) community aims to augment humans' ability to learn and make decisions over time through the development of automated decision-making systems. This interaction represents a collaboration between multiple intelligent systems: humans and machines. A lack of appropriate consideration for the humans involved can lead to problematic system behaviour, and issues of fairness, accountability, and transparency. This work presents a human-centred thinking approach to applying IML methods. This guide is intended to be used by AI practitioners who incorporate human factors in their work. These practitioners are responsible for the health, safety, and well-being of interacting humans. An obligation of responsibility for public interaction means acting with integrity, honesty, fairness, and abiding by applicable legal statutes. With these values and principles in mind, we as a research community can better achieve the collective goal of augmenting human ability. This practical guide aims to support many of the responsible decisions necessary throughout iterative design, development, and dissemination of IML systems.
