Karteek Popuri

Automated Body Composition Analysis Using DAFS Express on 2D MRI Slices at L3 Vertebral Level

Sep 11, 2024

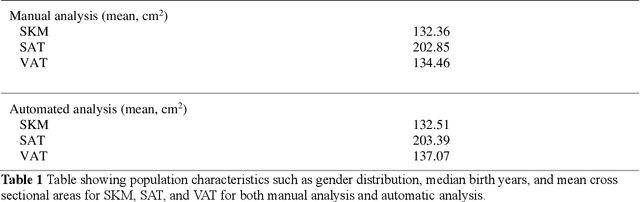

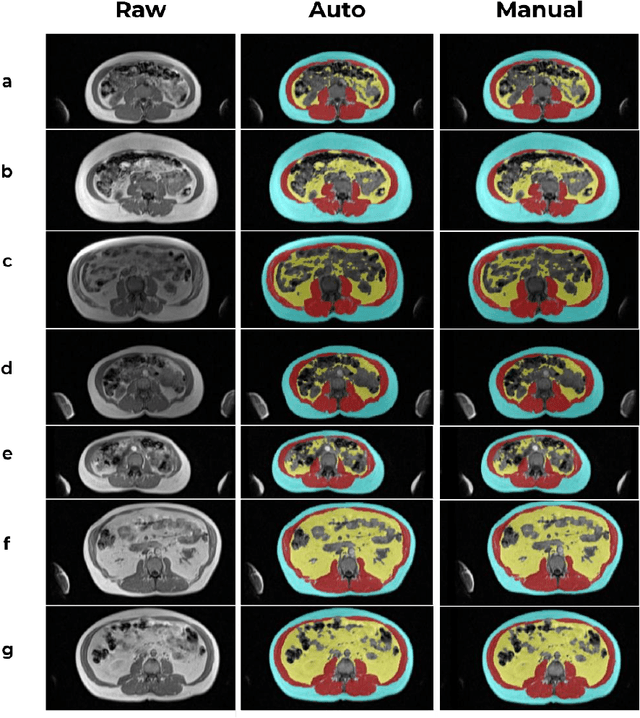

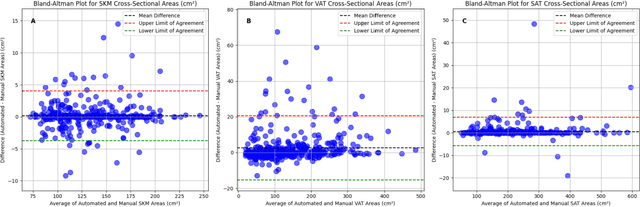

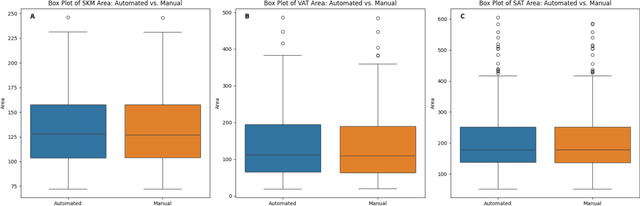

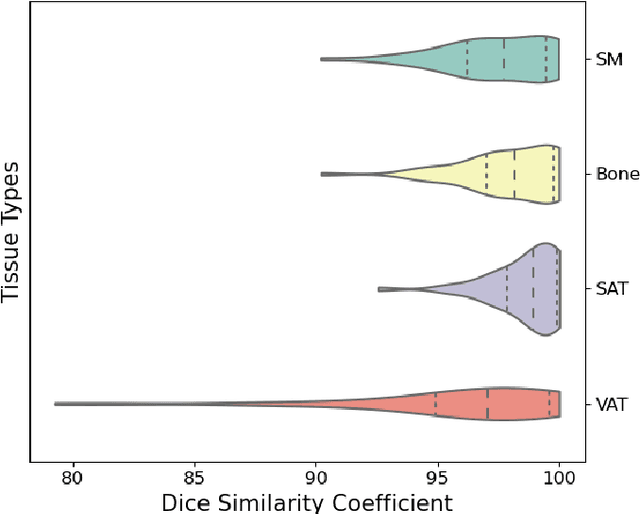

Abstract: Body composition analysis is vital in assessing health conditions such as obesity, sarcopenia, and metabolic syndromes. MRI provides detailed images of skeletal muscle (SKM), visceral adipose tissue (VAT), and subcutaneous adipose tissue (SAT), but their manual segmentation is labor-intensive and limits clinical applicability. This study validates an automated tool for MRI-based 2D body composition analysis, Data Analysis Facilitation Suite (DAFS) Express, by comparing its automated measurements with expert manual segmentations using UK Biobank data. A cohort of 399 participants from the UK Biobank dataset was selected, yielding 423 single L3 slices for analysis. DAFS Express performed automated segmentations of SKM, VAT, and SAT, which were then manually corrected by expert raters for validation. Evaluation metrics included Jaccard coefficients, Dice scores, intraclass correlation coefficients (ICCs), and Bland-Altman plots to assess segmentation agreement and reliability. High agreement was observed between automated and manual segmentations, with mean Jaccard scores of SKM 99.03%, VAT 95.25%, and SAT 99.57%, and mean Dice scores of SKM 99.51%, VAT 97.41%, and SAT 99.78%. Cross-sectional area comparisons showed consistent measurements, with automated values closely matching manual values for SKM and SAT and slightly higher for VAT (SKM: Auto 132.51 cm^2, Manual 132.36 cm^2; VAT: Auto 137.07 cm^2, Manual 134.46 cm^2; SAT: Auto 203.39 cm^2, Manual 202.85 cm^2). ICCs confirmed strong reliability (SKM: 0.998, VAT: 0.994, SAT: 0.994). Bland-Altman plots revealed minimal biases, and boxplots illustrated distribution similarities across SKM, VAT, and SAT areas. On average, DAFS Express took 18 seconds per DICOM. This underscores its potential to streamline image analysis processes in research and clinical settings, enhancing diagnostic accuracy and efficiency.
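
The Dice and Jaccard overlap scores named in this abstract can be illustrated with a minimal Python sketch. It assumes each segmentation is a binary mask given as a nested list of 0/1 values; the function name `dice_and_jaccard` and the toy masks are hypothetical and are not taken from DAFS Express or the study.

```python
def dice_and_jaccard(auto_mask, manual_mask):
    """Return (dice, jaccard) for two equal-size binary masks (nested lists)."""
    # Flatten both masks to 1D lists of booleans.
    a = [bool(v) for row in auto_mask for v in row]
    m = [bool(v) for row in manual_mask for v in row]
    if len(a) != len(m):
        raise ValueError("masks must contain the same number of pixels")

    intersection = sum(1 for x, y in zip(a, m) if x and y)
    size_a, size_m = sum(a), sum(m)
    union = size_a + size_m - intersection

    # Convention chosen for this sketch: two empty masks agree perfectly.
    if union == 0:
        return 1.0, 1.0

    dice = 2.0 * intersection / (size_a + size_m)
    jaccard = intersection / union
    return dice, jaccard


# Toy 2x3 masks (hypothetical): the automated mask has one extra pixel.
auto = [[1, 1, 0],
        [0, 1, 0]]
manual = [[1, 1, 0],
          [0, 0, 0]]
dice, jaccard = dice_and_jaccard(auto, manual)
```

For a single pair of masks the two scores are tied by J = D / (2 - D), so Dice is never lower than Jaccard. Means taken over many slices, as reported in the abstract, need not satisfy that identity exactly.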

Segmentation-guided Domain Adaptation and Data Harmonization of Multi-device Retinal Optical Coherence Tomography using Cycle-Consistent Generative Adversarial Networks

Aug 31, 2022

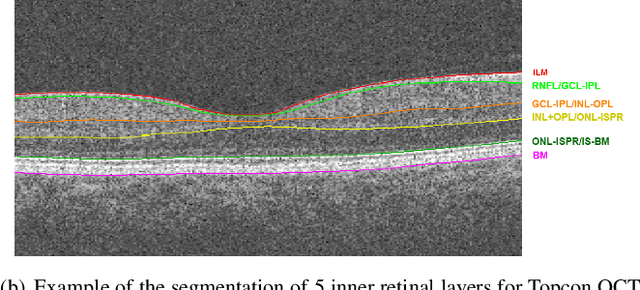

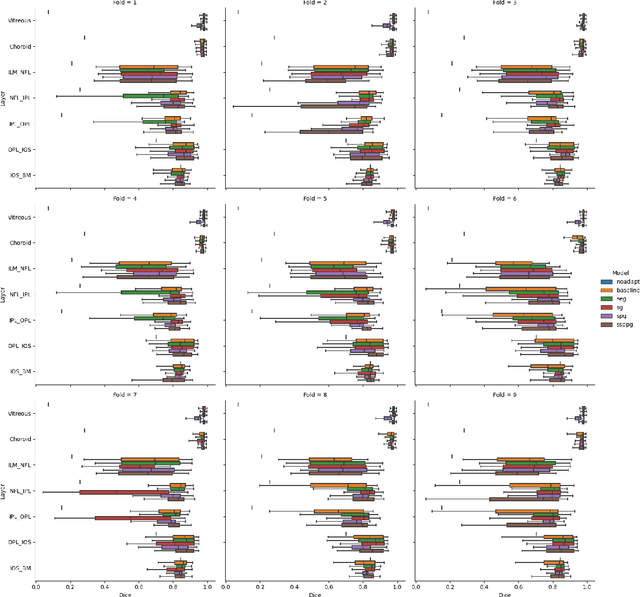

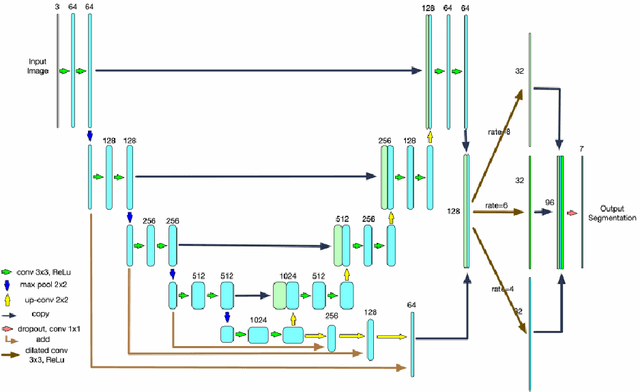

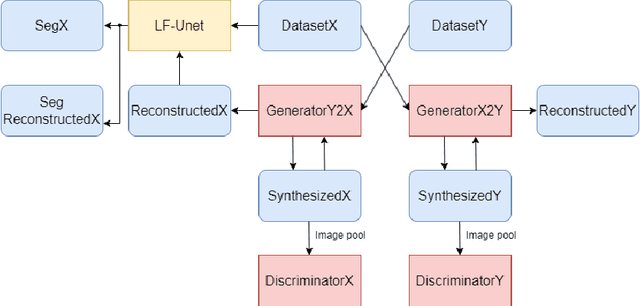

Abstract: Optical Coherence Tomography (OCT) is a non-invasive technique that captures cross-sectional images of the retina at micrometer resolution. It has been widely used as an auxiliary imaging reference to detect eye-related pathology and to predict the longitudinal progression of disease characteristics. Retinal layer segmentation is one of the crucial feature extraction techniques, because variations in retinal layer thickness and retinal layer deformation due to the presence of fluid are highly correlated with multiple epidemic eye diseases such as Diabetic Retinopathy (DR) and Age-related Macular Degeneration (AMD). However, these images are acquired from different devices, which have different intensity distributions; in other words, they belong to different imaging domains. This paper proposes a segmentation-guided domain-adaptation method that adapts images from multiple devices into a single image domain for which a state-of-the-art pre-trained segmentation model is available. It avoids both the time-consuming manual labelling of each new dataset and the re-training of the existing network. The semantic consistency and global feature consistency of the network minimize the hallucination effect that many researchers have reported for the Cycle-Consistent Generative Adversarial Network (CycleGAN) architecture.

Predicting Time-to-conversion for Dementia of Alzheimer's Type using Multi-modal Deep Survival Analysis

May 02, 2022

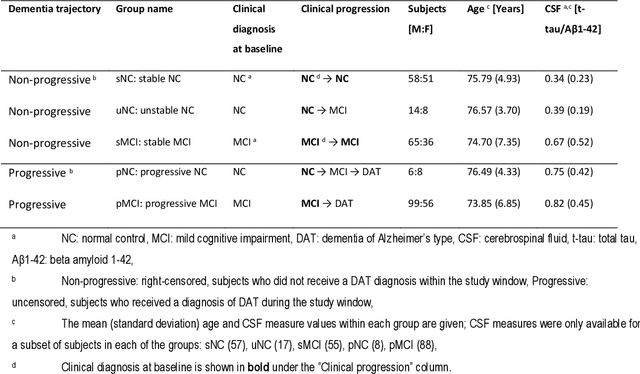

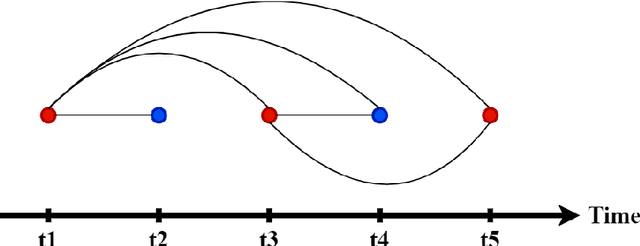

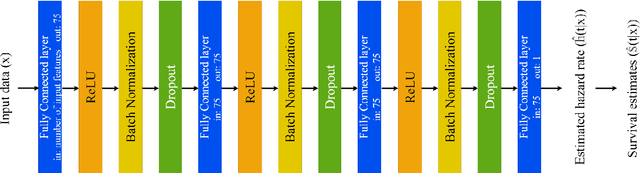

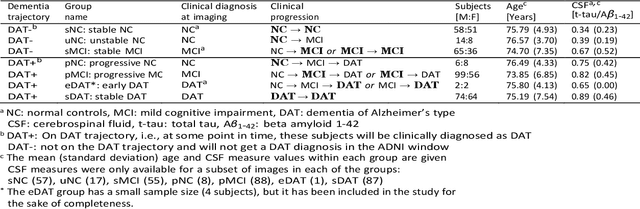

Abstract: Dementia of Alzheimer's Type (DAT) is a complex disorder influenced by numerous factors, but it is unclear how each factor contributes to disease progression. An in-depth examination of these factors may yield an accurate estimate of time-to-conversion to DAT for patients at various disease stages. We used 401 subjects with 63 features from MRI, genetic, and CDC (Cognitive tests, Demographic, and CSF) data modalities in the Alzheimer's Disease Neuroimaging Initiative (ADNI) database. We used a deep learning-based survival analysis model that extends the classic Cox regression model to predict time-to-conversion to DAT. Our findings showed that genetic features contributed the least to survival analysis, while CDC features contributed the most. Combining MRI and genetic features improved survival prediction over using either modality alone, but adding CDC to any combination of features only worked as well as using only CDC features. Consequently, our study demonstrated that using the current clinical procedure, which includes gathering cognitive test results, can outperform survival analysis results produced using costly genetic or CSF data.
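
For readers unfamiliar with how a deep model can extend Cox regression, the following Python sketch shows the negative Cox partial log-likelihood that such models commonly minimize. It is an illustration under assumptions, not the paper's implementation: `risk_scores` stands in for whatever per-subject score the network outputs (in classic Cox regression it is a linear function of the features), ties in event times are handled naively, and all names and data are hypothetical.

```python
import math


def neg_cox_partial_log_likelihood(risk_scores, times, events):
    """Negative Cox partial log-likelihood.

    risk_scores: one real-valued score per subject (higher = higher hazard)
    times:       observed time to conversion or to censoring
    events:      1 if conversion was observed, 0 if the subject was censored
    """
    total = 0.0
    for i, (t_i, event_i) in enumerate(zip(times, events)):
        if not event_i:
            continue  # censored subjects only appear inside risk sets
        # Risk set: everyone still under observation at time t_i.
        risk_set_sum = sum(
            math.exp(risk_scores[j])
            for j, t_j in enumerate(times)
            if t_j >= t_i
        )
        total += risk_scores[i] - math.log(risk_set_sum)
    return -total


# Hypothetical toy cohort: two observed conversions, one censored subject.
times = [1.0, 2.0, 3.0]
events = [1, 1, 0]
loss_good = neg_cox_partial_log_likelihood([2.0, 1.0, 0.0], times, events)
loss_bad = neg_cox_partial_log_likelihood([0.0, 1.0, 2.0], times, events)
```

Scores that rank earlier converters as higher risk (`loss_good`) give a lower loss than scores that rank them in reverse (`loss_bad`), which is the signal a survival network would be trained on.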

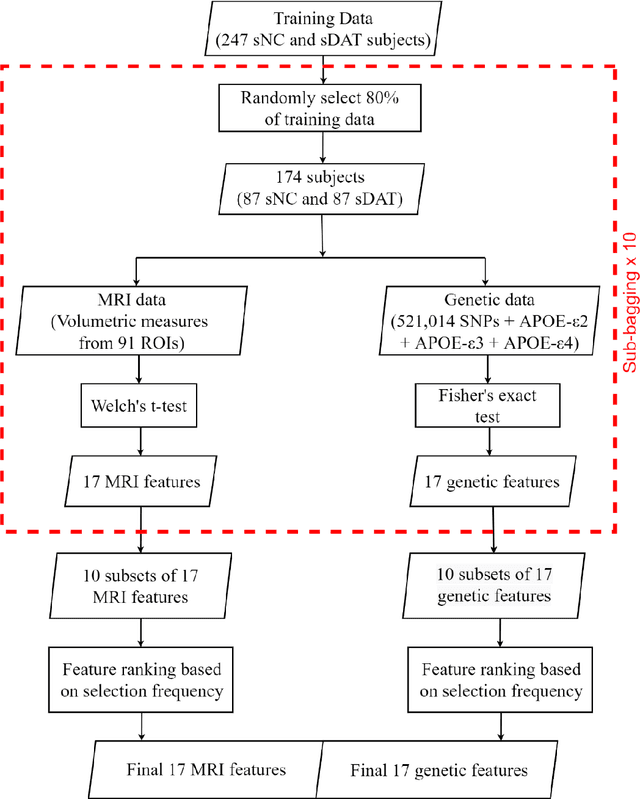

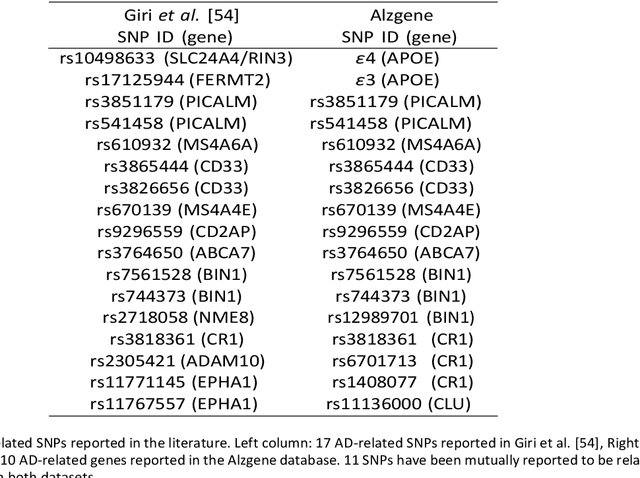

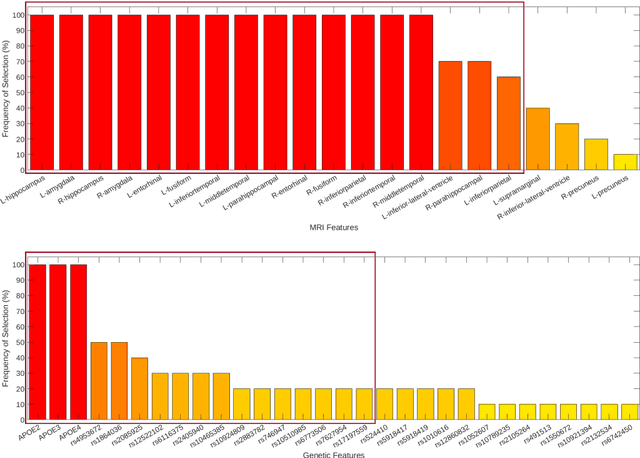

Machine Learning Based Multimodal Neuroimaging Genomics Dementia Score for Predicting Future Conversion to Alzheimer's Disease

Mar 11, 2022

Abstract: Background: The increasing availability of databases containing both magnetic resonance imaging (MRI) and genetic data allows researchers to utilize multimodal data to better understand the characteristics of dementia of Alzheimer's type (DAT). Objective: The goal of this study was to develop and analyze novel biomarkers that can help predict the development and progression of DAT. Methods: We used feature selection and an ensemble learning classifier to develop an image/genotype-based DAT score that represents a subject's likelihood of developing DAT in the future. Three feature types were used: MRI only, genetic only, and combined multimodal data. We used a novel data stratification method to better represent different stages of DAT. Using a pre-defined 0.5 threshold on DAT scores, we predicted whether or not a subject would develop DAT in the future. Results: Our results on the Alzheimer's Disease Neuroimaging Initiative (ADNI) database showed that dementia scores using genetic data could better predict future DAT progression for currently normal control subjects (Accuracy=0.857) compared to MRI (Accuracy=0.143), while MRI could better characterize subjects with stable mild cognitive impairment (Accuracy=0.614) compared to genetics (Accuracy=0.356). Combining MRI and genetic data showed improved classification performance in the remaining stratified groups. Conclusion: MRI and genetic data can contribute to DAT prediction in different ways. MRI data reflects anatomical changes in the brain, while genetic data can detect the risk of DAT progression prior to symptomatic onset. Combining information from multimodal data in the right way can improve prediction performance.

Differential Diagnosis of Frontotemporal Dementia and Alzheimer's Disease using Generative Adversarial Network

Sep 29, 2021

Abstract: Frontotemporal dementia (FTD) and Alzheimer's disease (AD) are two common forms of dementia and are easily misdiagnosed as each other due to their similar patterns of clinical symptoms. Differentiating between the two dementia types is crucial for determining disease-specific intervention and treatment. Recent deep-learning-based approaches in the field of medical image computing are delivering some of the best performance on many binary classification tasks, although their application to differential diagnosis, such as neuroimage-based differentiation of multiple types of dementia, has not been explored. In this study, a novel framework was proposed that uses the Generative Adversarial Network technique to distinguish FTD, AD, and normal control subjects, using volumetric features extracted at coarse-to-fine structural scales from Magnetic Resonance Imaging scans. Experiments with 10-fold cross-validation on 1,954 images achieved high accuracy. With the proposed framework, we have demonstrated that the combination of multi-scale structural features and synthetic data augmentation based on a generative adversarial network can improve performance on challenging tasks such as differentiating dementia subtypes.

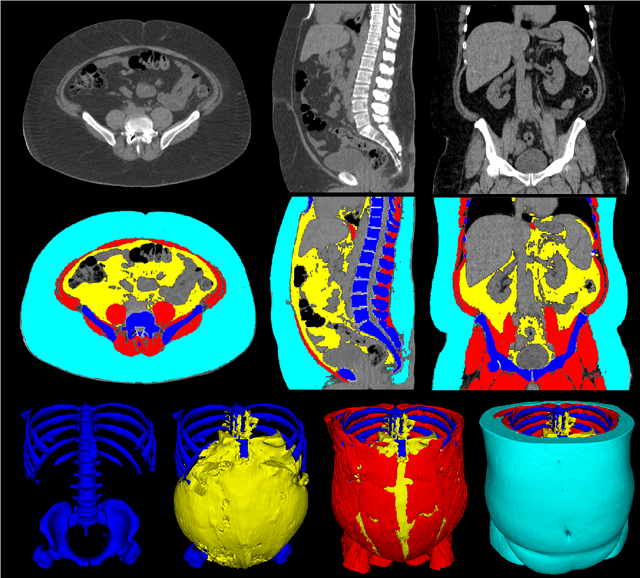

Comprehensive Validation of Automated Whole Body Skeletal Muscle, Adipose Tissue, and Bone Segmentation from 3D CT images for Body Composition Analysis: Towards Extended Body Composition

Jun 03, 2021

Abstract: The latest advances in computer-assisted precision medicine are making it feasible to move from population-wide models, which are useful for discovering aggregate patterns in group-based analysis, to patient-specific models that can drive patient-specific decisions about treatment choices and predictions of treatment outcomes. Body composition is recognized as an important driver and risk factor for a wide variety of diseases, as well as a predictor of individual patient-specific clinical outcomes of treatment choices or surgical interventions. 3D CT images are routinely acquired in oncological workflows and deliver an accurate rendering of internal anatomy, and can therefore be used opportunistically to assess the amount of skeletal muscle and adipose tissue compartments. Powerful artificial intelligence tools such as deep learning are now making it feasible to segment the entire 3D image and generate accurate measurements of all internal anatomy. These will overcome the severe bottleneck that existed previously, namely the need for manual segmentation, which was prohibitive to scale to the hundreds of 2D axial slices that make up a 3D volumetric image. Automated tools such as the one presented here will now enable harvesting whole-body measurements from 3D CT or MRI images, leading to a new era of discovery of the drivers of various diseases based on individual tissue, organ volume, shape, and functional status. These measurements were hitherto unavailable, limiting the field to a very small subset. These discoveries, and the potential to perform individual image segmentation with high speed and accuracy, are likely to lead to the incorporation of these 3D measures into individual-specific treatment planning models related to nutrition, aging, chemotoxicity, surgery, and survival after the onset of a major disease such as cancer.

On the Ambiguity of Registration Uncertainty

Mar 20, 2018

Abstract: Estimating the uncertainty in image registration is an area of current research that is aimed at providing information that will enable surgeons to assess the operative risk based on registered image data and the estimated registration uncertainty. If they receive inaccurately calculated registration uncertainty and misplace confidence in the alignment solutions, severe consequences may result. For probabilistic image registration (PIR), most research quantifies the registration uncertainty using summary statistics of the transformation distributions. In this paper, we study a rarely examined topic: whether those summary statistics of the transformation distribution truly represent the registration uncertainty. Using concrete examples, we show that there are two types of uncertainties: the transformation uncertainty, Ut, and the label uncertainty, Ul. Ut indicates the doubt concerning transformation parameters and can be estimated by conventional uncertainty measures, while Ul is strongly linked to the goal of registration. Further, we show that using Ut to quantify Ul is inappropriate and can be misleading. In addition, we present some potentially critical findings regarding PIR.
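
One way to picture the gap between Ut and Ul is a 1D toy example, sketched below in Python. The choices here are assumptions made for illustration and are not taken from the paper: Ut is summarized as the variance of a voxel's discrete displacement distribution, Ul as the entropy of the tissue labels that those displacements land on, and the two-tissue image `label_at` is hypothetical.

```python
import math
from collections import defaultdict


def transformation_variance(displacements, probs):
    """Variance of a discrete displacement distribution (a stand-in for Ut)."""
    mean = sum(p * d for d, p in zip(displacements, probs))
    return sum(p * (d - mean) ** 2 for d, p in zip(displacements, probs))


def label_entropy(voxel, displacements, probs, label_at):
    """Entropy of the labels reached by the displacements (a stand-in for Ul)."""
    mass = defaultdict(float)
    for d, p in zip(displacements, probs):
        mass[label_at(voxel + d)] += p
    # Sum of p * log(1/p), which is the Shannon entropy in nats.
    return sum(p * math.log(1.0 / p) for p in mass.values() if p > 0)


def label_at(position):
    """Hypothetical 1D image: tissue A left of position 10, tissue B from 10 on."""
    return "A" if position < 10 else "B"


# Voxel deep inside tissue A: widely spread displacements, one possible label.
ut_inside = transformation_variance([-4, 0, 4], [0.25, 0.5, 0.25])
ul_inside = label_entropy(0, [-4, 0, 4], [0.25, 0.5, 0.25], label_at)

# Voxel next to the boundary: tightly clustered displacements, two labels.
ut_boundary = transformation_variance([0, 1], [0.5, 0.5])
ul_boundary = label_entropy(9, [0, 1], [0.5, 0.5], label_at)
```

In this toy setting the interior voxel has the larger transformation variance but zero label entropy, while the boundary voxel has a small variance but maximal two-class label entropy, so ranking voxels by the first quantity would misorder them with respect to the second.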

Development and validation of a novel dementia of Alzheimer's type score based on metabolism FDG-PET imaging

Nov 02, 2017

Abstract: Fluorodeoxyglucose positron emission tomography (FDG-PET) imaging based 3D topographic brain glucose metabolism patterns from normal controls (NC) and individuals with dementia of Alzheimer's type (DAT) are used to train a novel multi-scale ensemble classification model. This ensemble model outputs an FDG-PET DAT score (FPDS) between 0 and 1 denoting the probability of a subject being clinically diagnosed with DAT based on their metabolism profile. A novel 7-group image stratification scheme is devised that groups images not only by their associated clinical diagnosis but also by the past and future trajectories of the clinical diagnoses, yielding a more continuous representation of the different stages of the DAT spectrum that mimics a real-world clinical setting. The potential for using FPDS as a DAT biomarker was validated on a large number of FDG-PET images (N=2984) obtained from the Alzheimer's Disease Neuroimaging Initiative (ADNI) database and taken across the proposed stratification, and a good classification AUC (area under the curve) of 0.78 was achieved in distinguishing between images belonging to subjects on a DAT trajectory and images taken from subjects not progressing to a DAT diagnosis. Further, the FPDS biomarker achieved state-of-the-art performance on the mild cognitive impairment (MCI) to DAT conversion prediction task, with AUCs of 0.81, 0.80, and 0.77 for the 2-, 3-, and 5-year time-to-conversion windows, respectively.
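
The AUC figures quoted above can be read as the probability that a randomly chosen image from the positive group receives a higher score than a randomly chosen image from the negative group. The Python sketch below computes AUC through that pairwise definition; the function name and the scores are hypothetical, and the study's own evaluation code is not shown in the abstract.

```python
def pairwise_auc(positive_scores, negative_scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly.

    Ties count as half a correct pair (the Mann-Whitney U convention).
    """
    if not positive_scores or not negative_scores:
        raise ValueError("both groups need at least one score")
    correct = 0.0
    for pos in positive_scores:
        for neg in negative_scores:
            if pos > neg:
                correct += 1.0
            elif pos == neg:
                correct += 0.5
    return correct / (len(positive_scores) * len(negative_scores))


# Hypothetical scores in [0, 1] for converters and non-converters.
converters = [0.9, 0.8, 0.4]
non_converters = [0.7, 0.3]
auc = pairwise_auc(converters, non_converters)  # 5 of 6 pairs ranked correctly
```

The pairwise loop is quadratic in the number of images, which is fine for illustration. A rank-based computation gives the same value more efficiently on a dataset of several thousand images.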

Multimodal and Multiscale Deep Neural Networks for the Early Diagnosis of Alzheimer's Disease using structural MR and FDG-PET images

Oct 13, 2017

Abstract: Alzheimer's Disease (AD) is a progressive neurodegenerative disease. Amnestic mild cognitive impairment (MCI) is a common first symptom before the conversion to clinical impairment, where the individual becomes unable to perform activities of daily living independently. Although there is currently no treatment available, the earlier a conclusive diagnosis is made, the earlier the potential for interventions to delay or perhaps even prevent progression to full-blown AD. Neuroimaging scans acquired from MRI and metabolism images obtained by FDG-PET provide an in vivo view into the structure and function (glucose metabolism) of the living brain. It is hypothesized that combining different image modalities could better characterize changes in the human brain and result in a more accurate early diagnosis of AD. In this paper, we propose a novel framework to discriminate normal control (NC) subjects from subjects with AD pathology (AD, and NC or MCI subjects who convert to AD in the future). Our novel approach, utilizing a multimodal and multiscale deep neural network, was found to deliver an 85.68% accuracy in the prediction of subjects within 3 years to conversion. Cross-validation experiments proved that it has better discrimination ability compared with results in the existing published literature.

Misdirected Registration Uncertainty

May 17, 2017

Abstract: As a task of establishing spatial correspondences, medical image registration is often formalized as finding the optimal transformation that best aligns two images. Since the transformation is such an essential component of registration, most existing research conventionally quantifies the registration uncertainty, which is the confidence in the estimated spatial correspondences, by the transformation uncertainty. In this paper, we give concrete examples and reveal that using the transformation uncertainty to quantify the registration uncertainty is inappropriate and sometimes misleading. Based on this finding, we also draw attention to an important yet subtle aspect of probabilistic image registration, namely whether it is reasonable to determine the correspondence of a registered voxel solely by the mode of its transformation distribution.
