Jihwan Kim

Expanding Foundational Language Capabilities in Open-Source LLMs through a Korean Case Study

Sep 04, 2025

Abstract: We introduce Llama-3-Motif, a language model consisting of 102 billion parameters, specifically designed to enhance Korean capabilities while retaining strong performance in English. Developed on the Llama 3 architecture, Llama-3-Motif employs advanced training techniques, including LlamaPro and Masked Structure Growth, to effectively scale the model without altering its core Transformer architecture. Using the MoAI platform for efficient training across hyperscale GPU clusters, we optimized Llama-3-Motif using a carefully curated dataset that maintains a balanced ratio of Korean and English data. Llama-3-Motif shows decent performance on Korean-specific benchmarks, outperforming existing models and achieving results comparable to GPT-4.

Generating Animated Layouts as Structured Text Representations

May 02, 2025

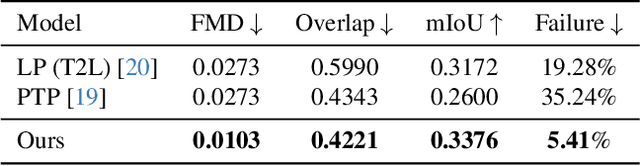

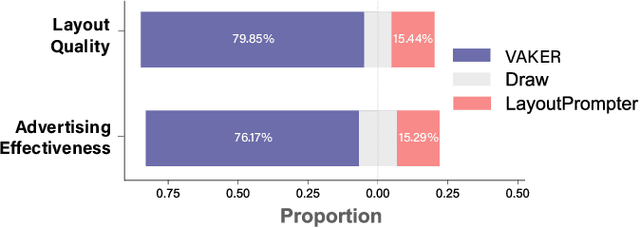

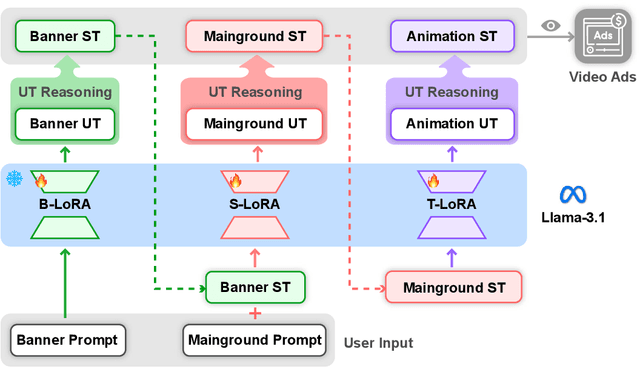

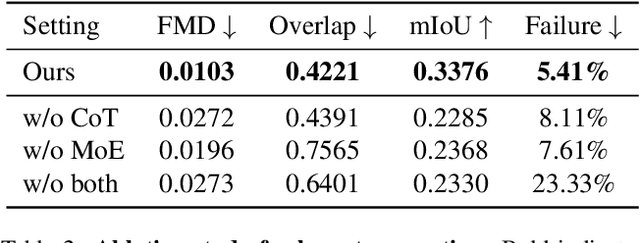

Abstract: Despite the remarkable progress in text-to-video models, achieving precise control over text elements and animated graphics remains a significant challenge, especially in applications such as video advertisements. To address this limitation, we introduce Animated Layout Generation, a novel approach to extend static graphic layouts with temporal dynamics. We propose a Structured Text Representation for fine-grained video control through hierarchical visual elements. To demonstrate the effectiveness of our approach, we present VAKER (Video Ad maKER), a text-to-video advertisement generation pipeline that combines a three-stage generation process with Unstructured Text Reasoning for seamless integration with LLMs. VAKER fully automates video advertisement generation by incorporating dynamic layout trajectories for objects and graphics across specific video frames. Through extensive evaluations, we demonstrate that VAKER significantly outperforms existing methods in generating video advertisements. Project Page: https://yeonsangshin.github.io/projects/Vaker

CrashFixer: A crash resolution agent for the Linux kernel

Apr 29, 2025

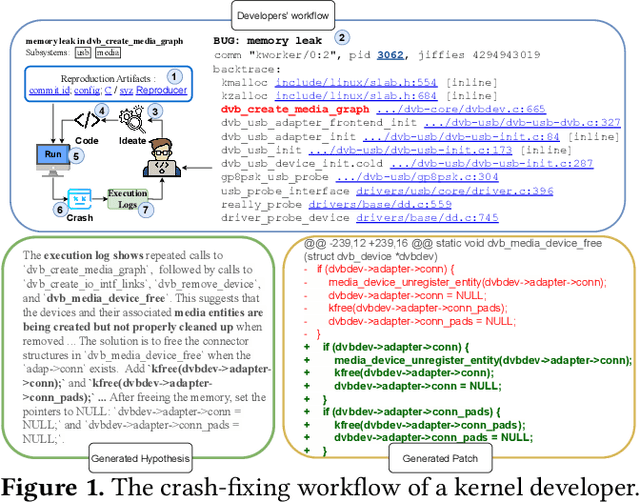

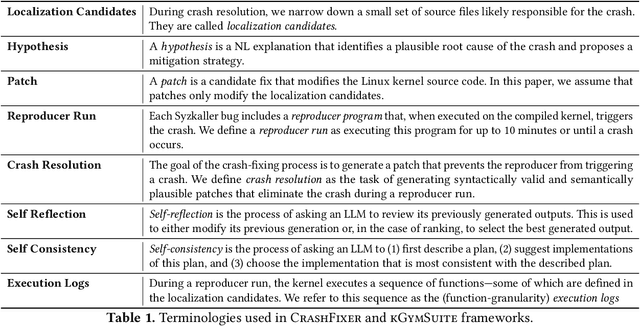

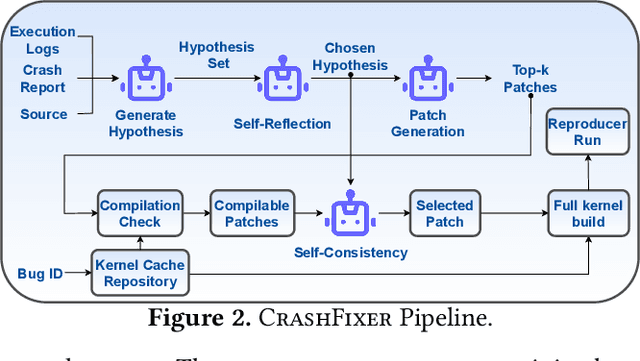

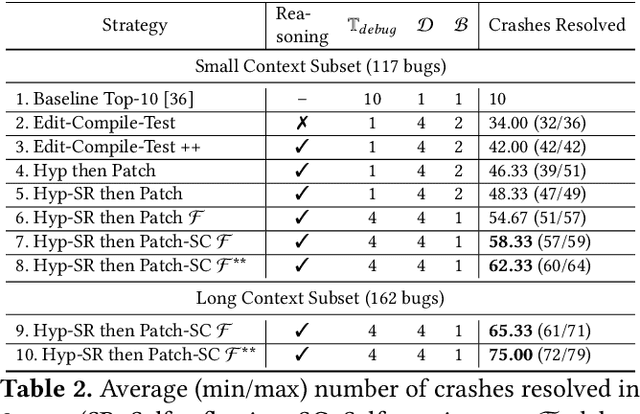

Abstract: Code large language models (LLMs) have shown impressive capabilities on a multitude of software engineering tasks. In particular, they have demonstrated remarkable utility in the task of code repair. However, common benchmarks used to evaluate the performance of code LLMs are often limited to small-scale settings. In this work, we build upon kGym, which provides a benchmark for system-level Linux kernel bugs and a platform to run experiments on the Linux kernel. This paper introduces CrashFixer, the first LLM-based software repair agent that is applicable to Linux kernel bugs. Inspired by the typical workflow of a kernel developer, we identify the key capabilities an expert developer leverages to resolve a kernel crash. Using this as our guide, we revisit the kGym platform and identify key system improvements needed to practically run LLM-based agents at the scale of the Linux kernel (50K files and 20M lines of code). We implement these changes by extending kGym to create an improved platform called kGymSuite, which will be open-sourced. Finally, the paper presents an evaluation of various repair strategies for such complex kernel bugs and showcases the value of explicitly generating a hypothesis before attempting to fix bugs in complex systems such as the Linux kernel. We also evaluated CrashFixer's capabilities on still-open bugs and found at least two patch suggestions considered plausible to resolve the reported bug.

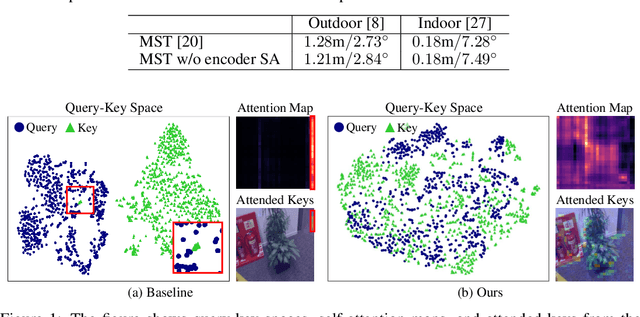

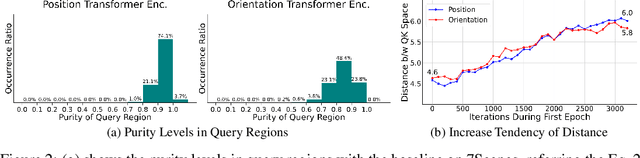

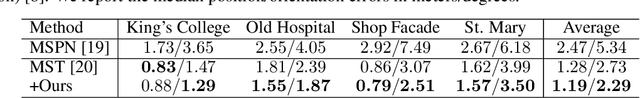

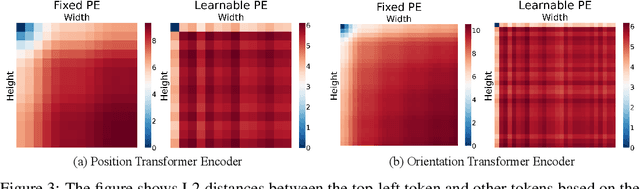

Activating Self-Attention for Multi-Scene Absolute Pose Regression

Nov 03, 2024

Abstract: Multi-scene absolute pose regression addresses the demand for fast and memory-efficient camera pose estimation across various real-world environments. Recently, transformer-based models have been devised to regress the camera pose directly across multiple scenes. Despite their potential, transformer encoders are underutilized because the self-attention map collapses, leaving it with low representation capacity. This work highlights the problem and investigates it from a new perspective: distortion of the query-key embedding space. Based on statistical analysis, we reveal that queries and keys are mapped into completely different spaces, while only a few keys are blended into the query region. This leads to the collapse of the self-attention map, as all queries are considered similar to those few keys. Therefore, we propose simple but effective solutions to activate self-attention. Concretely, we present an auxiliary loss that aligns queries and keys, preventing the distortion of the query-key space and encouraging the model to find global relations through self-attention. In addition, fixed sinusoidal positional encoding is adopted instead of an undertrained learnable one, so that appropriate positional clues are reflected in the inputs of self-attention. As a result, our approach resolves the aforementioned problem effectively, thus outperforming existing methods in both outdoor and indoor scenes.
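For readers unfamiliar with the fixed sinusoidal positional encoding mentioned in this abstract, the following is a minimal sketch of the standard one-dimensional formulation used in Transformers. It is illustrative only: the function name and the 1D layout are assumptions, and the abstract does not specify which variant (for example, a 2D encoding over image features) the paper actually uses.

```python
import math


def sinusoidal_positional_encoding(num_positions, dim):
    """Return a [num_positions][dim] table of fixed (non-learned) encodings.

    Standard formulation: even channels hold sin(pos / 10000^(i/dim)) and the
    following odd channel holds the cosine of the same angle.
    The function name and 1D layout are illustrative assumptions.
    """
    if dim % 2 != 0:
        raise ValueError("dim must be even")
    table = []
    for pos in range(num_positions):
        row = []
        for i in range(0, dim, 2):  # i is the even channel index
            angle = pos / (10000 ** (i / dim))
            row.append(math.sin(angle))
            row.append(math.cos(angle))
        table.append(row)
    return table


pe = sinusoidal_positional_encoding(num_positions=4, dim=8)
```

Because the table is computed rather than trained, it cannot be "undertrained"; every position receives a well-defined code regardless of how much data the model has seen.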

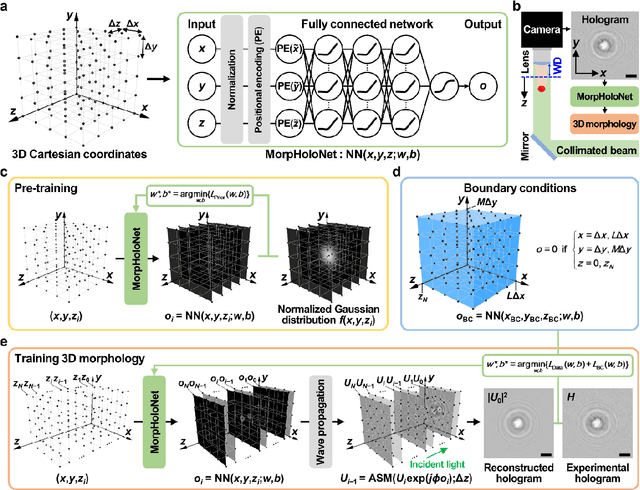

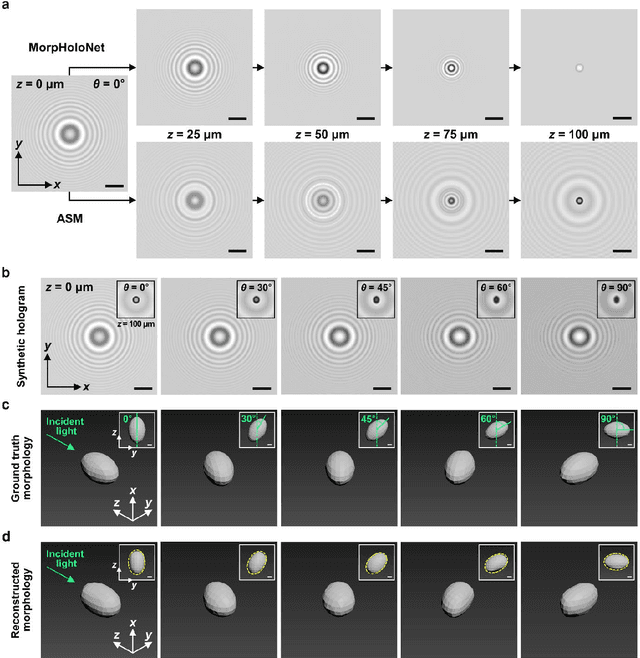

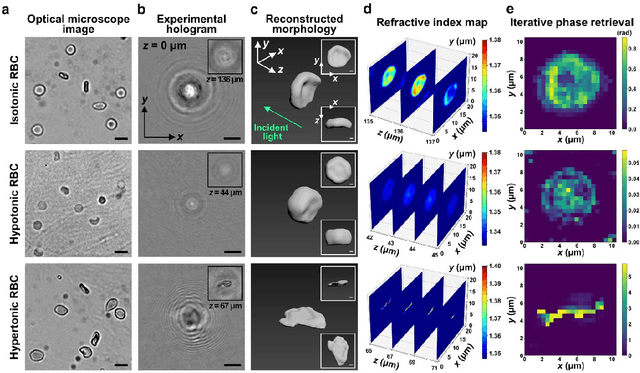

Single-shot reconstruction of three-dimensional morphology of biological cells in digital holographic microscopy using a physics-driven neural network

Sep 30, 2024

Abstract: Recent advances in deep learning-based image reconstruction techniques have led to significant progress in phase retrieval using digital in-line holographic microscopy (DIHM). However, existing deep learning-based phase retrieval methods have technical limitations in generalization performance and three-dimensional (3D) morphology reconstruction from a single-shot hologram of biological cells. In this study, we propose a novel deep learning model, named MorpHoloNet, for single-shot reconstruction of 3D morphology by integrating physics-driven and coordinate-based neural networks. By simulating the optical diffraction of coherent light through a 3D phase shift distribution, the proposed MorpHoloNet is optimized by minimizing the loss between the simulated and input holograms on the sensor plane. Compared to existing DIHM methods that face challenges with twin image and phase retrieval problems, MorpHoloNet enables direct reconstruction of 3D complex light field and 3D morphology of a test sample from its single-shot hologram without requiring multiple phase-shifted holograms or angle scanning. The performance of the proposed MorpHoloNet is validated by reconstructing 3D morphologies and refractive index distributions from synthetic holograms of ellipsoids and experimental holograms of biological cells. The proposed deep learning model is utilized to reconstruct spatiotemporal variations in 3D translational and rotational behaviors and morphological deformations of biological cells from consecutive single-shot holograms captured using DIHM. MorpHoloNet would pave the way for advancing label-free, real-time 3D imaging and dynamic analysis of biological cells under various cellular microenvironments in biomedical and engineering fields.

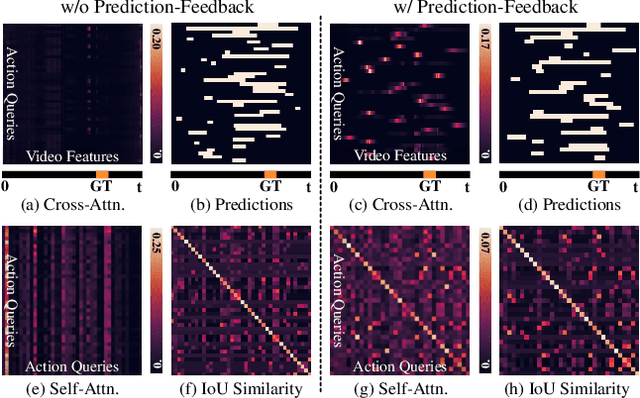

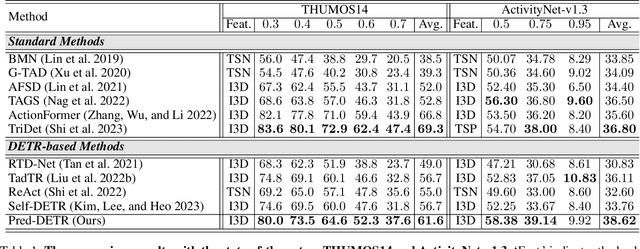

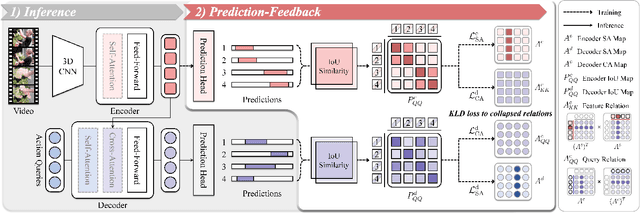

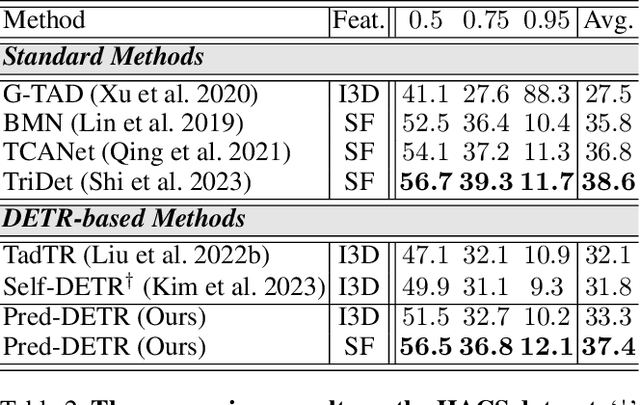

Prediction-Feedback DETR for Temporal Action Detection

Aug 29, 2024

Abstract: Temporal Action Detection (TAD) is fundamental yet challenging for real-world video applications. Leveraging the unique benefits of transformers, various DETR-based approaches have been adopted in TAD. However, it has recently been identified that attention collapse in self-attention degrades the performance of DETR for TAD. Building upon previous research, this paper newly addresses the attention collapse problem in cross-attention within DETR-based TAD methods. Moreover, our findings reveal that cross-attention exhibits patterns distinct from predictions, indicating a short-cut phenomenon. To resolve this, we propose a new framework, Prediction-Feedback DETR (Pred-DETR), which utilizes predictions to recover from the collapse and align the cross- and self-attention with predictions. Specifically, we devise novel prediction-feedback objectives using guidance from the relations of the predictions. As a result, Pred-DETR significantly alleviates the collapse and achieves state-of-the-art performance among DETR-based methods on various challenging benchmarks including THUMOS14, ActivityNet-v1.3, HACS, and FineAction.

Long-Term Pre-training for Temporal Action Detection with Transformers

Aug 23, 2024

Abstract: Temporal action detection (TAD) is challenging, yet fundamental for real-world video applications. Recently, DETR-based models for TAD have become prevalent thanks to their unique benefits. However, transformers demand a huge dataset, and data scarcity in TAD causes severe degradation. In this paper, we identify two crucial problems arising from data scarcity: attention collapse and imbalanced performance. To address them, we propose a new pre-training strategy, Long-Term Pre-training (LTP), tailored for transformers. LTP has two main components: 1) class-wise synthesis and 2) long-term pretext tasks. First, we synthesize long-form video features by merging video snippets of a target class and non-target classes. They are analogous to the untrimmed data used in TAD, despite being created from trimmed data. In addition, we devise two types of long-term pretext tasks to learn long-term dependency. They impose long-term conditions such as finding second-to-fourth or short-duration actions. Our extensive experiments show state-of-the-art performance among DETR-based methods on ActivityNet-v1.3 and THUMOS14 by a large margin. Moreover, we demonstrate that LTP significantly relieves the data scarcity issues in TAD.

TWLV-I: Analysis and Insights from Holistic Evaluation on Video Foundation Models

Aug 21, 2024

Abstract: In this work, we discuss evaluating video foundation models in a fair and robust manner. Unlike language or image foundation models, many video foundation models are evaluated with differing parameters (such as sampling rate, number of frames, pretraining steps, etc.), making fair and robust comparisons challenging. Therefore, we present a carefully designed evaluation framework for measuring two core capabilities of video comprehension: appearance and motion understanding. Our findings reveal that existing video foundation models, whether text-supervised like UMT or InternVideo2, or self-supervised like V-JEPA, exhibit limitations in at least one of these capabilities. As an alternative, we introduce TWLV-I, a new video foundation model that constructs robust visual representations for both motion- and appearance-based videos. Based on the average top-1 accuracy of linear probing on five action recognition benchmarks, pretrained only on publicly accessible datasets, our model shows a 4.6%p improvement compared to V-JEPA (ViT-L) and a 7.7%p improvement compared to UMT (ViT-L). Even when compared to much larger models, our model demonstrates a 7.2%p improvement compared to DFN (ViT-H), a 2.7%p improvement compared to V-JEPA (ViT-H) and a 2.8%p improvement compared to InternVideo2 (ViT-g). We provide embedding vectors obtained by TWLV-I from videos of several commonly used video benchmarks, along with evaluation source code that can directly utilize these embeddings. The code is available at https://github.com/twelvelabs-io/video-embeddings-evaluation-framework.
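The "%p" figures in this abstract are percentage-point differences between average top-1 accuracies, that is, absolute gaps rather than relative gains. The short sketch below shows the arithmetic under that reading. The per-benchmark accuracies are made-up placeholders chosen only to make the calculation easy to follow; they are not results from the paper, and the function names are hypothetical.

```python
def mean_top1(accuracies):
    """Average top-1 accuracy (in percent) across benchmarks."""
    return sum(accuracies) / len(accuracies)


def percentage_point_gap(model_a, model_b):
    """Absolute difference of the two averages, in percentage points (%p)."""
    return mean_top1(model_a) - mean_top1(model_b)


# HYPOTHETICAL placeholder accuracies on five benchmarks (not from the paper).
model_a = [60.0, 70.0, 80.0, 50.0, 90.0]
model_b = [58.0, 68.0, 78.0, 48.0, 88.0]

gap = percentage_point_gap(model_a, model_b)  # absolute gap in %p
relative_gain = gap / mean_top1(model_b)      # relative gain, a different quantity
```

Reporting the absolute gap avoids inflating improvements over weak baselines, which is one reason percentage points are common when comparing averaged accuracies.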

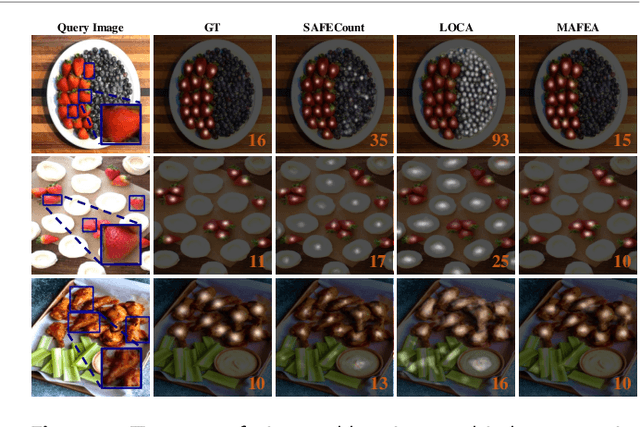

Mutually-Aware Feature Learning for Few-Shot Object Counting

Aug 19, 2024

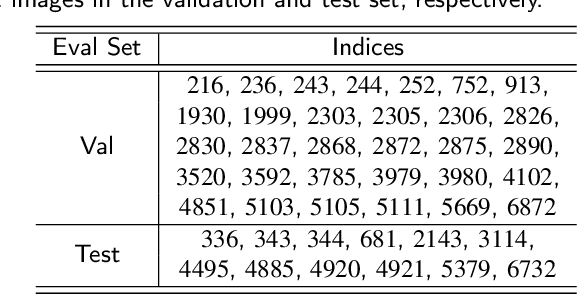

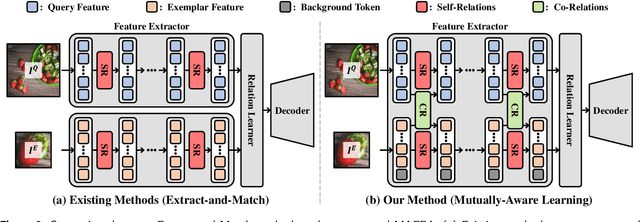

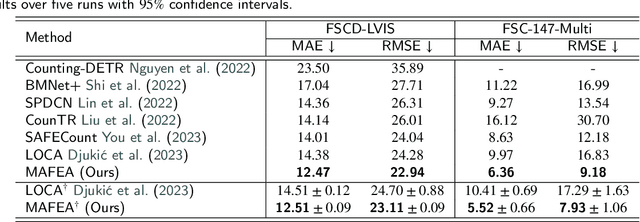

Abstract: Few-shot object counting has garnered significant attention for its practicality, as it aims to count target objects in a query image based on given exemplars without the need for additional training. However, there is a shortcoming in the prevailing extract-and-match approach: query and exemplar features lack interaction during feature extraction, since they are extracted unaware of each other and only later correlated based on similarity. This can lead to insufficient target awareness in the extracted features, resulting in target confusion, where the model fails to precisely identify the actual target when objects of multiple classes coexist. To address this limitation, we propose a novel framework, Mutually-Aware FEAture learning (MAFEA), which encodes query and exemplar features mutually aware of each other from the outset. By encouraging interaction between query and exemplar features throughout the entire pipeline, we can obtain target-aware features that are robust to a multi-category scenario. Furthermore, we introduce a background token to effectively associate the target region of the query with exemplars and decouple its background region from them. Our extensive experiments demonstrate that our model reaches a new state-of-the-art performance on the two challenging benchmarks, FSCD-LVIS and FSC-147, with a remarkably reduced degree of the target confusion problem.

Boundary-Recovering Network for Temporal Action Detection

Aug 18, 2024

Abstract: Temporal action detection (TAD) is challenging, yet fundamental for real-world video applications. Large temporal scale variation of actions is one of the primary difficulties in TAD. Naturally, multi-scale features have potential for localizing actions of diverse lengths, as they are widely used in object detection. Nevertheless, unlike objects in images, actions have more ambiguity in their boundaries. That is, small neighboring objects are not considered to be one large object, while short adjoining actions can be mistaken for one long action. In the coarse-to-fine feature pyramid via pooling, these vague action boundaries can fade out, which we call the 'vanishing boundary problem'. To this end, we propose Boundary-Recovering Network (BRN) to address the vanishing boundary problem. BRN constructs scale-time features by introducing a new axis, called the scale dimension, obtained by interpolating multi-scale features to the same temporal length. On top of scale-time features, scale-time blocks learn to exchange features across scale levels, which can effectively resolve the issue. Our extensive experiments demonstrate that our model outperforms the state-of-the-art on the two challenging benchmarks, ActivityNet-v1.3 and THUMOS14, with a remarkably reduced degree of the vanishing boundary problem.
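The construction of scale-time features described in this abstract (interpolating each pyramid level to a common temporal length and stacking the results along a new scale axis) can be sketched roughly as follows. This is a minimal illustration, not the paper's implementation: linear interpolation, the function names, and the toy two-level pyramid are all assumptions, since the abstract only says that features are interpolated.

```python
def resize_temporal(features, target_len):
    """Linearly interpolate a [T][C] feature sequence to [target_len][C].

    Linear interpolation is an assumption made for this sketch.
    """
    src_len = len(features)
    if src_len == 1 or target_len == 1:
        return [list(features[0]) for _ in range(target_len)]
    out = []
    for t in range(target_len):
        # Map the output index onto the source time axis (endpoints aligned).
        pos = t * (src_len - 1) / (target_len - 1)
        lo = int(pos)
        hi = min(lo + 1, src_len - 1)
        w = pos - lo
        out.append([(1 - w) * a + w * b
                    for a, b in zip(features[lo], features[hi])])
    return out


def build_scale_time(pyramid, target_len):
    """Stack all levels along a new scale axis: result is [S][target_len][C]."""
    return [resize_temporal(level, target_len) for level in pyramid]


# Toy pyramid: a fine level (T=4) and a coarser level pooled by 2 (T=2), C=1.
fine = [[0.0], [1.0], [2.0], [3.0]]
coarse = [[0.5], [2.5]]
scale_time = build_scale_time([fine, coarse], target_len=4)
```

Once every level shares the same temporal length, features at the same time index can be compared or mixed across scales, which is the precondition for the cross-scale exchange that the scale-time blocks are said to learn.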
