Jiancong Chen

VL-SAFE: Vision-Language Guided Safety-Aware Reinforcement Learning with World Models for Autonomous Driving

May 22, 2025

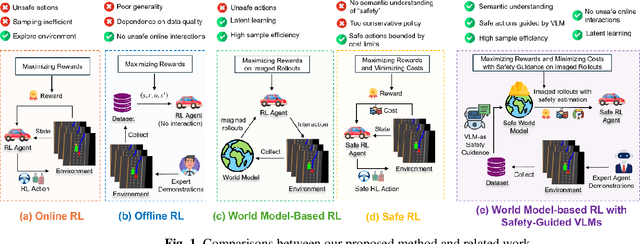

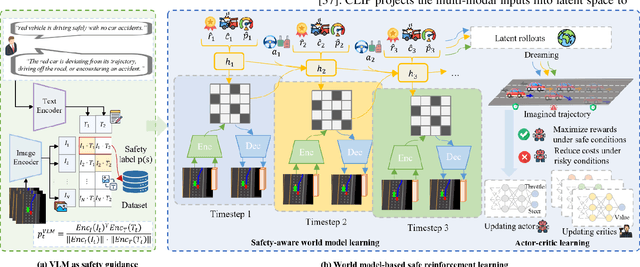

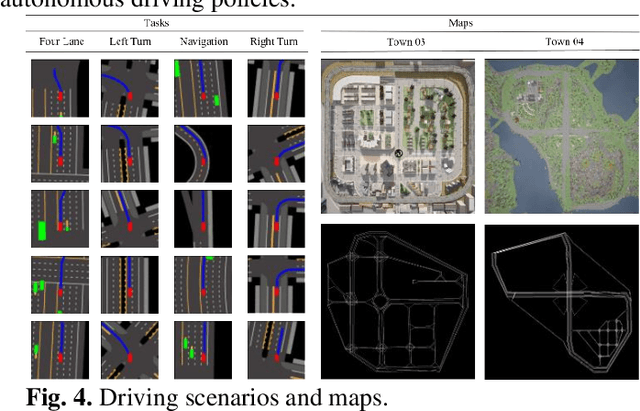

Abstract: Reinforcement learning (RL)-based autonomous driving policy learning faces critical limitations such as low sample efficiency and poor generalization; its reliance on online interactions and trial-and-error learning is especially unacceptable in safety-critical scenarios. Existing methods, including safe RL, often fail to capture the true semantic meaning of "safety" in complex driving contexts, leading to either overly conservative driving behavior or constraint violations. To address these challenges, we propose VL-SAFE, a world model-based safe RL framework with a Vision-Language model (VLM)-as-safety-guidance paradigm, designed for offline safe policy learning. Specifically, we construct offline datasets containing data collected by expert agents and labeled with safety scores derived from VLMs. A world model is trained to generate imagined rollouts together with safety estimations, allowing the agent to perform safe planning without interacting with the real environment. Based on these imagined trajectories and safety evaluations, actor-critic learning is conducted under VLM-based safety guidance to optimize the driving policy more safely and efficiently. Extensive evaluations demonstrate that VL-SAFE achieves superior sample efficiency, generalization, safety, and overall performance compared to existing baselines. To the best of our knowledge, this is the first work that introduces a VLM-guided world model-based approach for safe autonomous driving. The demo video and code can be accessed at: https://ys-qu.github.io/vlsafe-website/

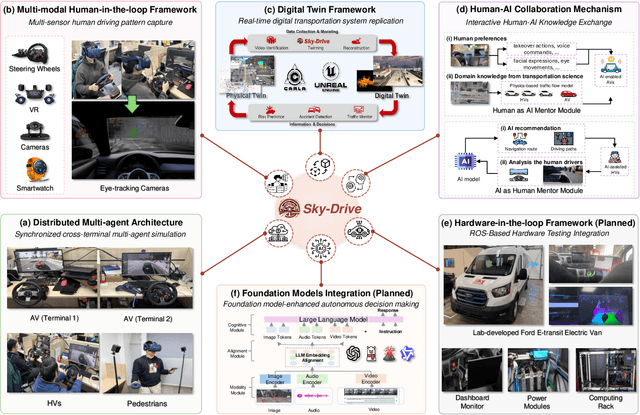

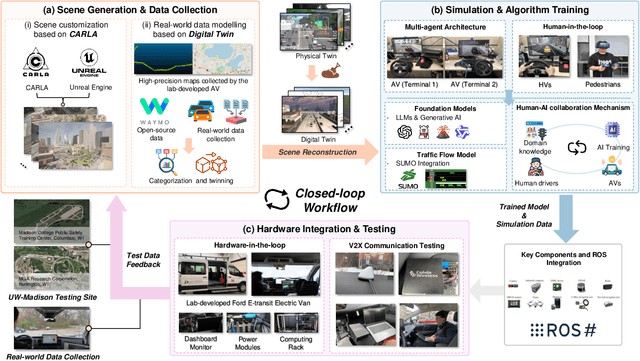

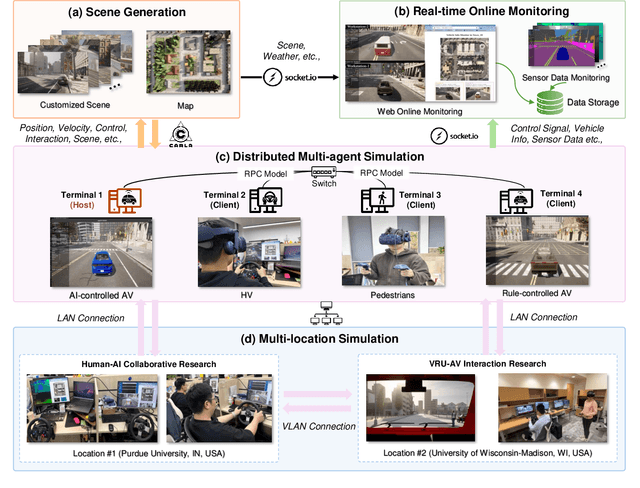

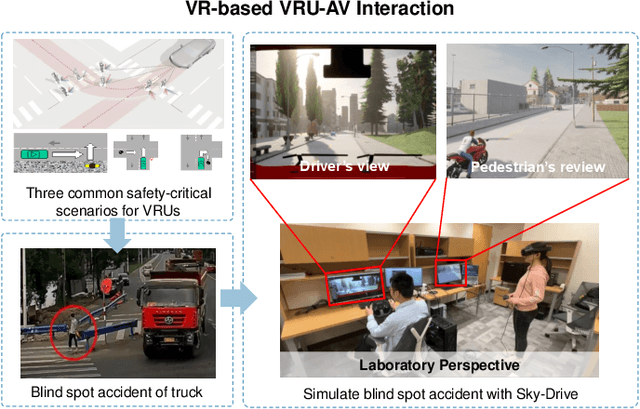

Sky-Drive: A Distributed Multi-Agent Simulation Platform for Socially-Aware and Human-AI Collaborative Future Transportation

Apr 25, 2025

Abstract: Recent advances in autonomous system simulation platforms have significantly enhanced the safe and scalable testing of driving policies. However, existing simulators do not yet fully meet the needs of future transportation research, particularly in modeling socially-aware driving agents and enabling effective human-AI collaboration. This paper introduces Sky-Drive, a novel distributed multi-agent simulation platform that addresses these limitations through four key innovations: (a) a distributed architecture for synchronized simulation across multiple terminals; (b) a multi-modal human-in-the-loop framework integrating diverse sensors to collect rich behavioral data; (c) a human-AI collaboration mechanism supporting continuous and adaptive knowledge exchange; and (d) a digital twin (DT) framework for constructing high-fidelity virtual replicas of real-world transportation environments. Sky-Drive supports diverse applications such as autonomous vehicle (AV)-vulnerable road user (VRU) interaction modeling, human-in-the-loop training, socially-aware reinforcement learning, personalized driving policy, and customized scenario generation. Future extensions will incorporate foundation models for context-aware decision support and hardware-in-the-loop (HIL) testing for real-world validation. By bridging scenario generation, data collection, algorithm training, and hardware integration, Sky-Drive has the potential to become a foundational platform for the next generation of socially-aware and human-centered autonomous transportation research. The demo video and code are available at: https://sky-lab-uw.github.io/Sky-Drive-website/

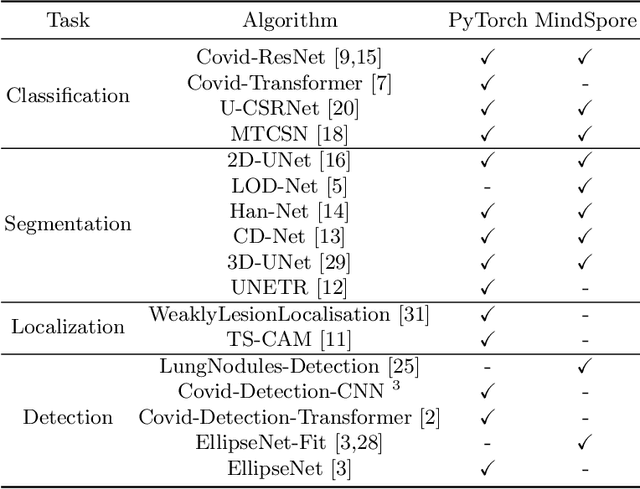

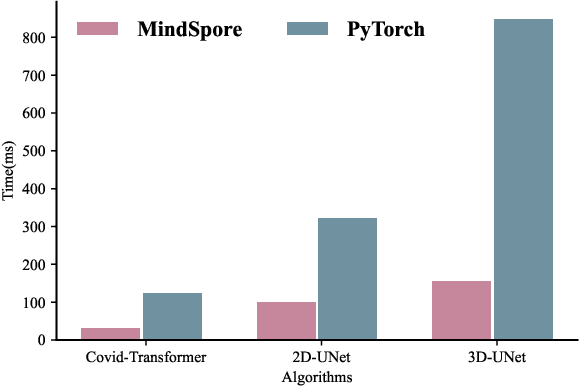

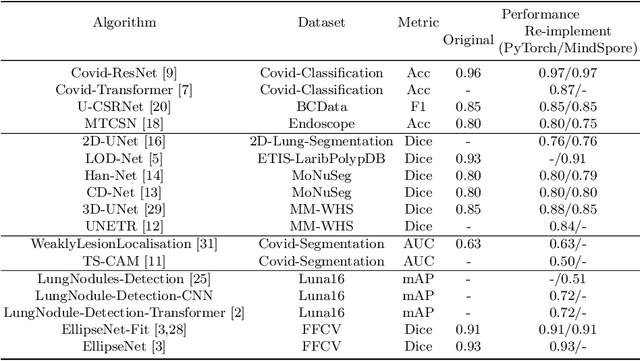

OpenMedIA: Open-Source Medical Image Analysis Toolbox and Benchmark under Heterogeneous AI Computing Platforms

Aug 11, 2022

Abstract: In this paper, we present OpenMedIA, an open-source toolbox library containing a rich set of deep learning methods for medical image analysis under heterogeneous Artificial Intelligence (AI) computing platforms. Various medical image analysis methods, including 2D/3D medical image classification, segmentation, localisation, and detection, have been included in the toolbox with PyTorch and/or MindSpore implementations under heterogeneous NVIDIA and Huawei Ascend computing systems. To our best knowledge, OpenMedIA is the first open-source algorithm library providing compared PyTorch and MindSp

EllipseNet: Anchor-Free Ellipse Detection for Automatic Cardiac Biometrics in Fetal Echocardiography

Sep 26, 2021

Abstract: As an important scan plane, the four-chamber view is routinely acquired in both second-trimester perinatal screening and fetal echocardiographic examinations. The biometrics in this plane, including the cardio-thoracic ratio (CTR) and cardiac axis, are usually measured by sonographers for diagnosing congenital heart disease. However, due to common artifacts such as acoustic shadowing, traditional manual measurements not only suffer from low efficiency but also yield inconsistent results that depend on the operator's skill. In this paper, we present an anchor-free ellipse detection network, namely EllipseNet, which detects the cardiac and thoracic regions as ellipses and automatically calculates the CTR and cardiac axis for fetal cardiac biometrics in the four-chamber view. In particular, we formulate the network so that it detects the center of each object as a point and regresses the ellipse parameters simultaneously. We define an intersection-over-union loss to further regulate the regression procedure. We evaluate EllipseNet on a clinical echocardiogram dataset with more than 2000 subjects. Experimental results show that the proposed framework outperforms several state-of-the-art methods. Source code will be available at https://git.openi.org.cn/capepoint/EllipseNet .
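As a rough illustration of how biometrics could be derived once the two ellipses are detected, the sketch below computes a CTR and an axis angle from ellipse parameters. It is not taken from the EllipseNet code: the `Ellipse` class, the area-ratio definition of CTR, and the definition of the cardiac axis as the angle between the two major axes are all assumptions made for this example, and the paper's exact definitions may differ.

```python
import math
from dataclasses import dataclass


@dataclass
class Ellipse:
    """Hypothetical container for a detected ellipse (not the paper's API)."""
    cx: float     # center x, in pixels
    cy: float     # center y, in pixels
    a: float      # semi-major axis length
    b: float      # semi-minor axis length
    theta: float  # orientation of the major axis, in radians

    def area(self) -> float:
        return math.pi * self.a * self.b


def cardio_thoracic_ratio(cardiac: Ellipse, thoracic: Ellipse) -> float:
    # Assumption: CTR is taken as the ratio of the ellipse areas.
    return cardiac.area() / thoracic.area()


def axis_angle_deg(cardiac: Ellipse, thoracic: Ellipse) -> float:
    # Assumption: the axis is the angle between the two major axes.
    # An ellipse orientation is only defined modulo pi, so the
    # difference is folded into the range [0, 90] degrees.
    diff = abs(cardiac.theta - thoracic.theta) % math.pi
    diff = min(diff, math.pi - diff)
    return math.degrees(diff)


if __name__ == "__main__":
    heart = Ellipse(cx=120.0, cy=110.0, a=2.0, b=1.0, theta=math.pi / 4)
    thorax = Ellipse(cx=128.0, cy=128.0, a=4.0, b=2.0, theta=0.0)
    print(f"CTR: {cardio_thoracic_ratio(heart, thorax):.3f}")
    print(f"Axis angle: {axis_angle_deg(heart, thorax):.1f} degrees")
```

Because both quantities depend only on the regressed ellipse parameters, any error in the semi-axis lengths or orientation carries directly into the measurement, which is one reason a dedicated loss on the ellipse regression is useful.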

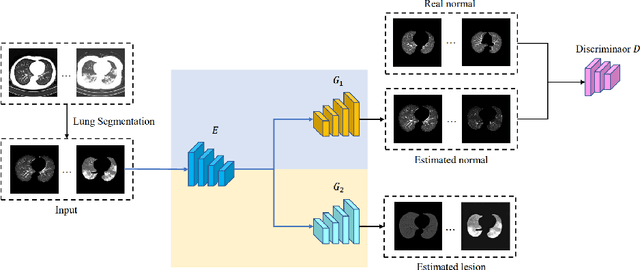

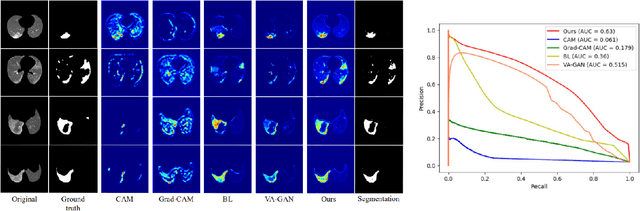

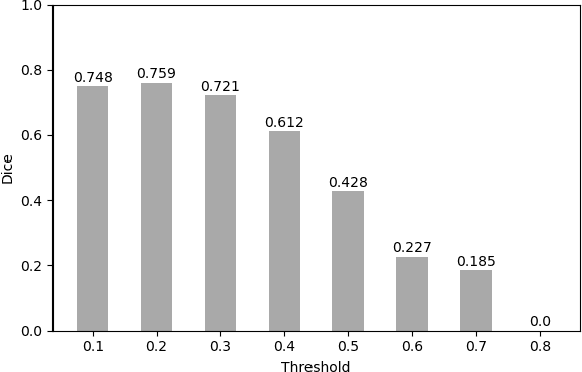

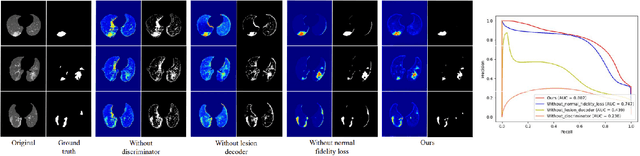

Towards Unbiased COVID-19 Lesion Localisation and Segmentation via Weakly Supervised Learning

Mar 01, 2021

Abstract: Despite tremendous efforts, it is very challenging to generate a robust model to assist in the accurate quantitative assessment of COVID-19 on chest CT images. Because lesion boundaries are blurred, supervised segmentation methods usually suffer from annotation biases. To support unbiased lesion localisation and to minimise the labeling costs, we propose a data-driven framework supervised by only image-level labels. The framework can explicitly separate potential lesions from original images, with the help of a generative adversarial network and a lesion-specific decoder. Experiments on two COVID-19 datasets demonstrate the effectiveness of the proposed framework and its superior performance to several existing methods.
