Keke Long

Robustness and Resilience Evaluation of Eco-Driving Strategies at Signalized Intersections

Jan 19, 2026

Abstract:Eco-driving strategies have demonstrated substantial potential for improving energy efficiency and reducing emissions, especially at signalized intersections. However, evaluations of eco-driving methods typically rely on simplified simulation or experimental conditions, where certain assumptions are made to manage complexity and experimental control. This study introduces a unified framework to evaluate eco-driving strategies through the lens of two complementary criteria: control robustness and environmental resilience. We define formal indicators that quantify performance degradation caused by internal execution variability and external environmental disturbances, respectively. These indicators are then applied to assess multiple eco-driving controllers through real-world vehicle experiments. The results reveal key tradeoffs between tracking accuracy and adaptability, showing that optimization-based controllers offer more consistent performance across varying disturbance levels, while analytical controllers may perform comparably under nominal conditions but exhibit greater sensitivity to execution and timing variability.

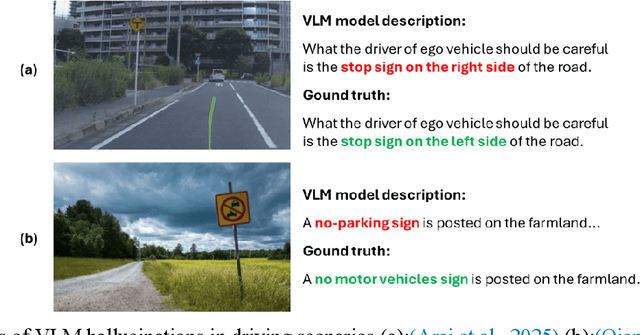

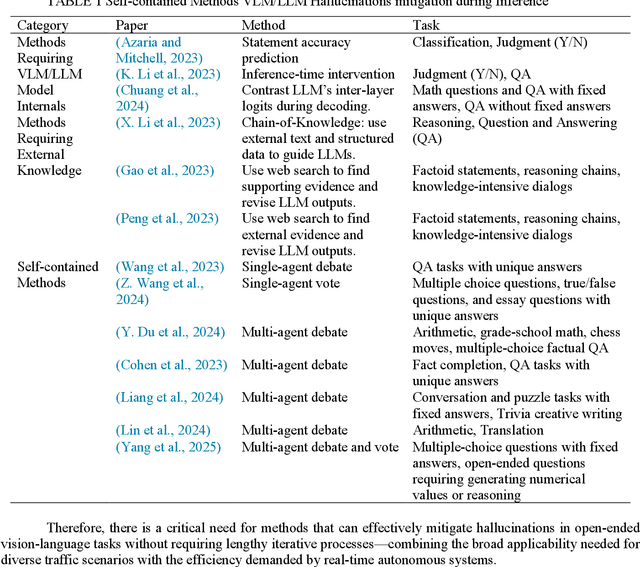

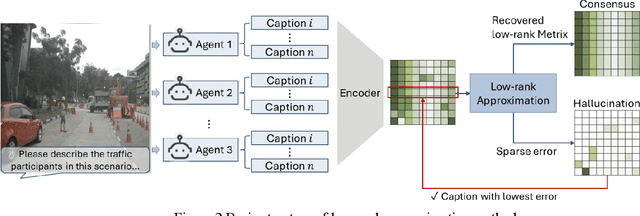

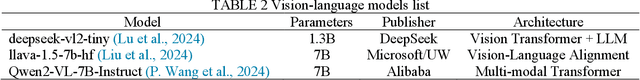

A Low-Rank Method for Vision Language Model Hallucination Mitigation in Autonomous Driving

Nov 09, 2025

Abstract:Vision Language Models (VLMs) are increasingly used in autonomous driving to help understand traffic scenes, but they sometimes produce hallucinations, which are false details not grounded in the visual input. Detecting and mitigating hallucinations is challenging when ground-truth references are unavailable and model internals are inaccessible. This paper proposes a novel self-contained low-rank approach to automatically rank multiple candidate captions generated by multiple VLMs based on their hallucination levels, using only the captions themselves without requiring external references or model access. By constructing a sentence-embedding matrix and decomposing it into a low-rank consensus component and a sparse residual, we use the residual magnitude to rank captions, selecting the one with the smallest residual as the most hallucination-free. Experiments on the nuScenes dataset demonstrate that our approach achieves 87% selection accuracy in identifying hallucination-free captions, representing a 19% improvement over the unfiltered baseline and a 6-10% improvement over the multi-agent debate method. The ordering produced by sparse error magnitudes shows strong correlation with human judgments of hallucinations, validating our scoring mechanism. Additionally, our method, which can be easily parallelized, reduces inference time by 51-67% compared to debate approaches, making it practical for real-time autonomous driving applications.
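The decomposition described in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the abstract does not name a solver, so the code below assumes a simple alternating scheme (truncated SVD for the low-rank part, soft-thresholding for the sparse part). The function names `low_rank_sparse_split` and `rank_captions`, the parameters `rank`, `lam`, and `n_iter`, and the synthetic matrix standing in for real sentence embeddings are all hypothetical.

```python
import numpy as np

def low_rank_sparse_split(M, rank=1, lam=None, n_iter=50):
    """Split M into a low-rank part L and a sparse part S (M ~ L + S).

    Assumed solver: alternate a rank-truncated SVD with elementwise
    soft-thresholding. This is one possible scheme, not the paper's.
    """
    M = np.asarray(M, dtype=float)
    if lam is None:
        # Threshold borrowed from common robust-PCA practice (an assumption).
        lam = 1.0 / np.sqrt(max(M.shape))
    S = np.zeros_like(M)
    for _ in range(n_iter):
        # Low-rank step: best rank-`rank` approximation of M - S.
        U, s, Vt = np.linalg.svd(M - S, full_matrices=False)
        L = (U[:, :rank] * s[:rank]) @ Vt[:rank]
        # Sparse step: shrink the remaining residual toward zero.
        R = M - L
        S = np.sign(R) * np.maximum(np.abs(R) - lam, 0.0)
    return L, S

def rank_captions(embeddings, **kwargs):
    """Return caption indices sorted by sparse-residual norm (smallest first)."""
    _, S = low_rank_sparse_split(embeddings, **kwargs)
    scores = np.linalg.norm(S, axis=1)
    return np.argsort(scores), scores

# Synthetic stand-in for sentence embeddings: 5 captions, 8 dimensions.
consensus = np.linspace(1.0, 2.0, 8)
E = np.tile(consensus, (5, 1))
E[3, :2] += 3.0  # caption 3 carries content the other captions do not share
order, scores = rank_captions(E)
print("captions from smallest to largest residual:", order)
```

In this toy setup each row is one caption, the shared row pattern plays the role of the consensus, and the caption whose embedding departs from it receives the largest residual score. Real use would replace `E` with embeddings from an actual sentence encoder.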

Simulating the Unseen: Crash Prediction Must Learn from What Did Not Happen

May 27, 2025

Abstract:Traffic safety science has long been hindered by a fundamental data paradox: the crashes we most wish to prevent are precisely those events we rarely observe. Existing crash-frequency models and surrogate safety metrics rely heavily on sparse, noisy, and under-reported records, while even sophisticated, high-fidelity simulations undersample the long-tailed situations that trigger catastrophic outcomes such as fatalities. We argue that the path to achieving Vision Zero, i.e., the complete elimination of traffic fatalities and severe injuries, requires a paradigm shift from traditional crash-only learning to a new form of counterfactual safety learning: reasoning not only about what happened, but also about the vast set of plausible yet perilous scenarios that could have happened under slightly different circumstances. To operationalize this shift, our proposed agenda bridges macro to micro. Guided by crash-rate priors, generative scene engines, diverse driver models, and causal learning, near-miss events are synthesized and explained. A crash-focused digital twin testbed links micro scenes to macro patterns, while a multi-objective validator ensures that simulations maintain statistical realism. This pipeline transforms sparse crash data into rich signals for crash prediction, enabling the stress-testing of vehicles, roads, and policies before deployment. By learning from crashes that almost happened, we can shift traffic safety from reactive forensics to proactive prevention, advancing Vision Zero.

Sky-Drive: A Distributed Multi-Agent Simulation Platform for Socially-Aware and Human-AI Collaborative Future Transportation

Apr 25, 2025

Abstract:Recent advances in autonomous system simulation platforms have significantly enhanced the safe and scalable testing of driving policies. However, existing simulators do not yet fully meet the needs of future transportation research, particularly in modeling socially-aware driving agents and enabling effective human-AI collaboration. This paper introduces Sky-Drive, a novel distributed multi-agent simulation platform that addresses these limitations through four key innovations: (a) a distributed architecture for synchronized simulation across multiple terminals; (b) a multi-modal human-in-the-loop framework integrating diverse sensors to collect rich behavioral data; (c) a human-AI collaboration mechanism supporting continuous and adaptive knowledge exchange; and (d) a digital twin (DT) framework for constructing high-fidelity virtual replicas of real-world transportation environments. Sky-Drive supports diverse applications such as autonomous vehicle (AV)-vulnerable road user (VRU) interaction modeling, human-in-the-loop training, socially-aware reinforcement learning, personalized driving policy, and customized scenario generation. Future extensions will incorporate foundation models for context-aware decision support and hardware-in-the-loop (HIL) testing for real-world validation. By bridging scenario generation, data collection, algorithm training, and hardware integration, Sky-Drive has the potential to become a foundational platform for the next generation of socially-aware and human-centered autonomous transportation research. The demo video and code are available at: https://sky-lab-uw.github.io/Sky-Drive-website/

Planning Safety Trajectories with Dual-Phase, Physics-Informed, and Transportation Knowledge-Driven Large Language Models

Apr 06, 2025

Abstract:Foundation models have demonstrated strong reasoning and generalization capabilities in driving-related tasks, including scene understanding, planning, and control. However, they still face challenges in hallucinations, uncertainty, and long inference latency. While existing foundation models have general knowledge of avoiding collisions, they often lack transportation-specific safety knowledge. To overcome these limitations, we introduce LetsPi, a physics-informed, dual-phase, knowledge-driven framework for safe, human-like trajectory planning. To prevent hallucinations and minimize uncertainty, this hybrid framework integrates Large Language Model (LLM) reasoning with physics-informed social force dynamics. LetsPi leverages the LLM to analyze driving scenes and historical information, providing appropriate parameters and target destinations (goals) for the social force model, which then generates the future trajectory. Moreover, the dual-phase architecture balances reasoning and computational efficiency through its Memory Collection phase and Fast Inference phase. The Memory Collection phase leverages the physics-informed LLM to process and refine planning results through reasoning, reflection, and memory modules, storing safe, high-quality driving experiences in a memory bank. Surrogate safety measures and physics-informed prompt techniques are introduced to enhance the LLM's knowledge of transportation safety and physical force, respectively. The Fast Inference phase extracts similar driving experiences as few-shot examples for new scenarios, while simplifying input-output requirements to enable rapid trajectory planning without compromising safety. Extensive experiments using the HighD dataset demonstrate that LetsPi outperforms baseline models across five safety metrics. See PDF for project GitHub link.

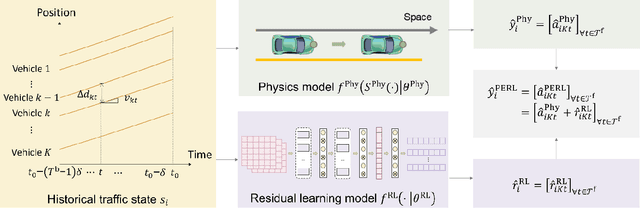

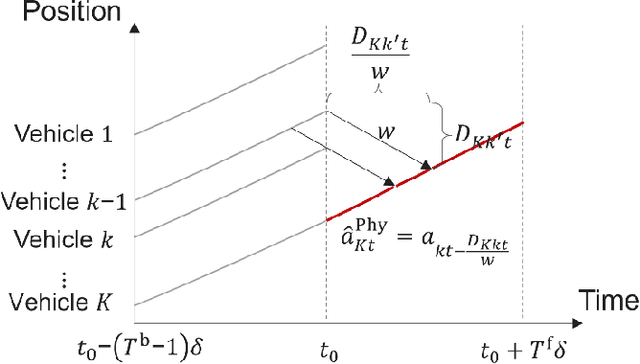

Online Adaptive Platoon Control for Connected and Automated Vehicles via Physics Enhanced Residual Learning

Dec 30, 2024

Abstract:This paper introduces a physics enhanced residual learning (PERL) framework for connected and automated vehicle (CAV) platoon control, addressing the dynamics and unpredictability inherent to platoon systems. The framework first develops a physics-based controller to model vehicle dynamics, using driving speed as input to optimize safety and efficiency. Then the residual controller, based on neural network (NN) learning, enriches the prior knowledge of the physical model and corrects residuals caused by vehicle dynamics. By integrating the physical model with data-driven online learning, the PERL framework retains the interpretability and transparency of physics-based models and enhances the adaptability and precision of data-driven learning, achieving significant improvements in computational efficiency and control accuracy in dynamic scenarios. Simulation and robot car platform tests demonstrate that PERL significantly outperforms pure physical and learning models, reducing average cumulative absolute position and speed errors by up to 58.5% and 40.1% (physical model) and 58.4% and 47.7% (NN model). The reduced-scale robot car platform tests further validate the adaptive PERL framework's superior accuracy and rapid convergence under dynamic disturbances, reducing position and speed cumulative errors by 72.73% and 99.05% (physical model) and 64.71% and 72.58% (NN model). PERL enhances platoon control performance through online parameter updates when external disturbances are detected. Results demonstrate the advanced framework's exceptional accuracy and rapid convergence capabilities, proving its effectiveness in maintaining platoon stability under diverse conditions.

FollowGen: A Scaled Noise Conditional Diffusion Model for Car-Following Trajectory Prediction

Nov 23, 2024

Abstract:Vehicle trajectory prediction is crucial for advancing autonomous driving and advanced driver assistance systems (ADAS). Although deep learning-based approaches - especially those utilizing transformer-based and generative models - have markedly improved prediction accuracy by capturing complex, non-linear patterns in vehicle dynamics and traffic interactions, they frequently overlook detailed car-following behaviors and the inter-vehicle interactions critical for real-world driving applications, particularly in fully autonomous or mixed traffic scenarios. To address the issue, this study introduces a scaled noise conditional diffusion model for car-following trajectory prediction, which integrates detailed inter-vehicular interactions and car-following dynamics into a generative framework, improving both the accuracy and plausibility of predicted trajectories. The model utilizes a novel pipeline to capture historical vehicle dynamics by scaling noise with encoded historical features within the diffusion process. Particularly, it employs a cross-attention-based transformer architecture to model intricate inter-vehicle dependencies, effectively guiding the denoising process and enhancing prediction accuracy. Experimental results on diverse real-world driving scenarios demonstrate the state-of-the-art performance and robustness of the proposed method.

VLM-MPC: Vision Language Foundation Model (VLM)-Guided Model Predictive Controller (MPC) for Autonomous Driving

Aug 09, 2024

Abstract:Motivated by the emergent reasoning capabilities of Vision Language Models (VLMs) and their potential to improve the comprehensibility of autonomous driving systems, this paper introduces a closed-loop autonomous driving controller called VLM-MPC, which combines a VLM for high-level decision-making and a Model Predictive Controller (MPC) for low-level vehicle control. The proposed VLM-MPC system is structurally divided into two asynchronous components: an upper-level VLM and a lower-level MPC. The upper-level VLM generates driving parameters for lower-level control based on front camera images, ego vehicle state, traffic environment conditions, and reference memory. The lower-level MPC controls the vehicle in real-time using these parameters, considering engine lag and providing state feedback to the entire system. Experiments based on the nuScenes dataset validated the effectiveness of the proposed VLM-MPC system across various scenarios (e.g., night, rain, intersections). Results showed that the VLM-MPC system consistently outperformed baseline models in terms of safety and driving comfort. By comparing behaviors under different weather conditions and scenarios, we demonstrated the VLM's ability to understand the environment and make reasonable inferences.

Crossfusor: A Cross-Attention Transformer Enhanced Conditional Diffusion Model for Car-Following Trajectory Prediction

Jun 17, 2024

Abstract:Vehicle trajectory prediction is crucial for advancing autonomous driving and advanced driver assistance systems (ADAS), enhancing road safety and traffic efficiency. While traditional methods have laid foundational work, modern deep learning techniques, particularly transformer-based models and generative approaches, have significantly improved prediction accuracy by capturing complex and non-linear patterns in vehicle motion and traffic interactions. However, these models often overlook the detailed car-following behaviors and inter-vehicle interactions essential for real-world driving scenarios. This study introduces a Cross-Attention Transformer Enhanced Conditional Diffusion Model (Crossfusor) specifically designed for car-following trajectory prediction. Crossfusor integrates detailed inter-vehicular interactions and car-following dynamics into a robust diffusion framework, improving both the accuracy and realism of predicted trajectories. The model leverages a novel temporal feature encoding framework combining GRU, location-based attention mechanisms, and Fourier embedding to capture historical vehicle dynamics. It employs noise scaled by these encoded historical features in the forward diffusion process, and uses a cross-attention transformer to model intricate inter-vehicle dependencies in the reverse denoising process. Experimental results on the NGSIM dataset demonstrate that Crossfusor outperforms state-of-the-art models, particularly in long-term predictions, showcasing its potential for enhancing the predictive capabilities of autonomous driving systems.

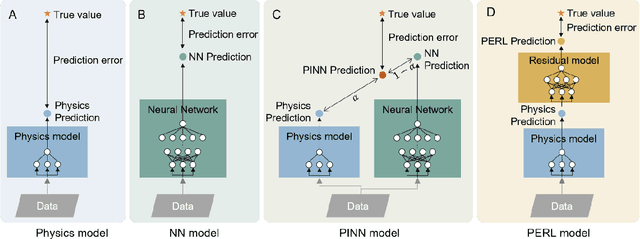

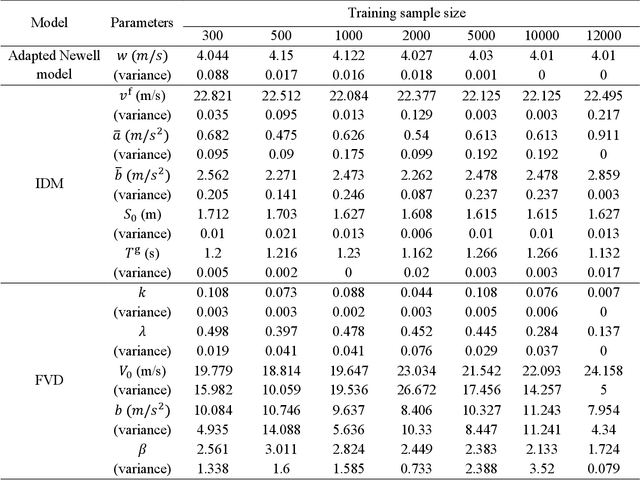

A Physics Enhanced Residual Learning (PERL) Framework for Traffic State Prediction

Sep 26, 2023

Abstract:In vehicle trajectory prediction, physics models and data-driven models are two predominant methodologies. However, each approach presents its own set of challenges: physics models fall short in predictability, while data-driven models lack interpretability. Addressing these identified shortcomings, this paper proposes a novel framework, the Physics-Enhanced Residual Learning (PERL) model. PERL integrates the strengths of physics-based and data-driven methods for traffic state prediction. PERL contains a physics model and a residual learning model. Its prediction is the sum of the physics model result and a predicted residual as a correction to it. It preserves the interpretability inherent to physics-based models and has reduced data requirements compared to data-driven methods. Experiments were conducted using a real-world vehicle trajectory dataset. We propose a PERL model with the Intelligent Driver Model (IDM) as its physics car-following model and Long Short-Term Memory (LSTM) as its residual learning model. We compare this PERL model with the physics car-following model, data-driven model, and other physics-informed neural network (PINN) models. First, the results reveal that PERL achieves better prediction with a small dataset, compared to the physics model, data-driven model, and PINN model. Second, the PERL model showed faster convergence during training, offering comparable performance with fewer training samples than the data-driven model and PINN model. Sensitivity analysis also shows comparable performance of PERL using another residual learning model and a physics car-following model.
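The "physics result plus predicted residual" structure described in this abstract can be sketched as follows. This is an illustration under stated assumptions, not the paper's model: the physics part uses the standard IDM acceleration formula with placeholder parameter values, and the residual learner is swapped from the LSTM named in the abstract to an ordinary least-squares regressor so the example stays short and dependency-free. The class name `ResidualPERL`, the feature layout, and the synthetic data are hypothetical.

```python
import numpy as np

def idm_accel(v, gap, dv, v0=30.0, T=1.5, s0=2.0, a_max=1.0, b=2.0):
    """Intelligent Driver Model acceleration.

    v: follower speed, gap: bumper-to-bumper spacing,
    dv: follower speed minus leader speed. Parameter values are placeholders.
    """
    s_star = s0 + v * T + v * dv / (2.0 * np.sqrt(a_max * b))
    return a_max * (1.0 - (v / v0) ** 4 - (s_star / gap) ** 2)

class ResidualPERL:
    """Physics prediction plus a learned correction (linear stand-in for an LSTM)."""

    @staticmethod
    def _design(X):
        # Features [v, gap, dv] plus an intercept column.
        return np.column_stack([X, np.ones(len(X))])

    def fit(self, X, y):
        physics = idm_accel(X[:, 0], X[:, 1], X[:, 2])
        # The learner is trained on what the physics model fails to explain.
        self.w, *_ = np.linalg.lstsq(self._design(X), y - physics, rcond=None)
        return self

    def predict(self, X):
        physics = idm_accel(X[:, 0], X[:, 1], X[:, 2])
        return physics + self._design(X) @ self.w

# Synthetic data: IDM plus a speed-dependent bias the physics model misses.
rng = np.random.default_rng(0)
X = np.column_stack([
    rng.uniform(5.0, 25.0, 300),   # follower speed
    rng.uniform(10.0, 60.0, 300),  # gap
    rng.uniform(-3.0, 3.0, 300),   # speed difference
])
y = idm_accel(X[:, 0], X[:, 1], X[:, 2]) + 0.3 - 0.02 * X[:, 0]
y += rng.normal(0.0, 0.01, 300)

model = ResidualPERL().fit(X[:200], y[:200])
rmse = lambda e: float(np.sqrt(np.mean(e ** 2)))
print("physics-only RMSE:", rmse(idm_accel(X[200:, 0], X[200:, 1], X[200:, 2]) - y[200:]))
print("PERL-style RMSE:  ", rmse(model.predict(X[200:]) - y[200:]))
```

Because the learner only has to fit the gap between the physics output and the observations, it can be much simpler than a model that learns the full mapping, which is consistent with the reduced data requirement the abstract reports.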
