Jason Hickey

WAXAL: A Large-Scale Multilingual African Language Speech Corpus

Feb 02, 2026

Abstract:The advancement of speech technology has predominantly favored high-resource languages, creating a significant digital divide for speakers of most Sub-Saharan African languages. To address this gap, we introduce WAXAL, a large-scale, openly accessible speech dataset for 21 languages representing over 100 million speakers. The collection consists of two main components: an Automated Speech Recognition (ASR) dataset containing approximately 1,250 hours of transcribed, natural speech from a diverse range of speakers, and a Text-to-Speech (TTS) dataset with over 180 hours of high-quality, single-speaker recordings reading phonetically balanced scripts. This paper details our methodology for data collection, annotation, and quality control, which involved partnerships with four African academic and community organizations. We provide a detailed statistical overview of the dataset and discuss its potential limitations and ethical considerations. The WAXAL datasets are released at https://huggingface.co/datasets/google/WaxalNLP under the permissive CC-BY-4.0 license to catalyze research, enable the development of inclusive technologies, and serve as a vital resource for the digital preservation of these languages.

Oya: Deep Learning for Accurate Global Precipitation Estimation

Nov 13, 2025

Abstract:Accurate precipitation estimation is critical for hydrological applications, especially in the Global South where ground-based observation networks are sparse and forecasting skill is limited. Existing satellite-based precipitation products often rely on the longwave infrared channel alone or are calibrated with data that can introduce significant errors, particularly at sub-daily timescales. This study introduces Oya, a novel real-time precipitation retrieval algorithm utilizing the full spectrum of visible and infrared (VIS-IR) observations from geostationary (GEO) satellites. Oya employs a two-stage deep learning approach, combining two U-Net models: one for precipitation detection and another for quantitative precipitation estimation (QPE), to address the inherent data imbalance between rain and no-rain events. The models are trained using high-resolution GPM Combined Radar-Radiometer Algorithm (CORRA) v07 data as ground truth and pre-trained on IMERG-Final retrievals to enhance robustness and mitigate overfitting due to the limited temporal sampling of CORRA. By leveraging multiple GEO satellites, Oya achieves quasi-global coverage and demonstrates superior performance compared to existing competitive regional and global precipitation baselines, offering a promising pathway to improved precipitation monitoring and forecasting.
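
The two-stage idea in the abstract above (a detection model followed by a QPE model) can be illustrated with a minimal sketch. The abstract does not say how the two outputs are merged, so the gating rule, the function name `two_stage_precip`, and the 0.5 threshold below are assumptions for illustration only, not Oya's actual procedure.

```python
from typing import List

Grid = List[List[float]]


def two_stage_precip(detect_prob: Grid, rate_mm_h: Grid,
                     threshold: float = 0.5) -> Grid:
    """Gate a rain-rate map with a rain/no-rain probability map.

    Hypothetical combination rule: pixels whose detection probability is
    below `threshold` are set to 0; the remaining pixels keep the estimated
    rate, clipped so it is never negative.
    """
    if len(detect_prob) != len(rate_mm_h):
        raise ValueError("maps must have the same number of rows")
    combined: Grid = []
    for prob_row, rate_row in zip(detect_prob, rate_mm_h):
        if len(prob_row) != len(rate_row):
            raise ValueError("maps must have the same number of columns")
        combined.append([
            max(rate, 0.0) if prob >= threshold else 0.0
            for prob, rate in zip(prob_row, rate_row)
        ])
    return combined
```

Separating detection from estimation lets the second model focus on raining pixels, which is one common way to handle the rain/no-rain imbalance the abstract mentions.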

High-Resolution Building and Road Detection from Sentinel-2

Oct 17, 2023

Abstract:Mapping buildings and roads automatically with remote sensing typically requires high-resolution imagery, which is expensive to obtain and often sparsely available. In this work we demonstrate how multiple 10 m resolution Sentinel-2 images can be used to generate 50 cm resolution building and road segmentation masks. This is done by training a 'student' model with access to Sentinel-2 images to reproduce the predictions of a 'teacher' model which has access to corresponding high-resolution imagery. While the predictions do not have all the fine detail of the teacher model, we find that we are able to retain much of the performance: for building segmentation we achieve 78.3% mIoU, compared to the high-resolution teacher model accuracy of 85.3% mIoU. We also describe a related method for counting individual buildings in a Sentinel-2 patch which achieves $R^2 = 0.91$ against true counts. This work opens up new possibilities for using freely available Sentinel-2 imagery for a range of tasks that previously could only be done with high-resolution satellite imagery.
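
For readers unfamiliar with the mIoU figures quoted above, the following sketch shows one common definition: the intersection-over-union of each class, averaged over classes. It assumes flattened integer masks with 0 for background and 1 for building; the abstract does not state which classes are averaged, so this is an illustration rather than the paper's evaluation code.

```python
from typing import Optional, Sequence


def class_iou(pred: Sequence[int], target: Sequence[int], cls: int) -> Optional[float]:
    """IoU for one class; None if the class is absent from both masks."""
    intersection = 0
    union = 0
    for p, t in zip(pred, target):
        in_pred = p == cls
        in_target = t == cls
        intersection += in_pred and in_target
        union += in_pred or in_target
    return intersection / union if union else None


def mean_iou(pred: Sequence[int], target: Sequence[int],
             classes: Sequence[int] = (0, 1)) -> float:
    """Average the per-class IoU over the classes that actually occur."""
    if len(pred) != len(target):
        raise ValueError("masks must have the same length")
    scores = [s for s in (class_iou(pred, target, c) for c in classes)
              if s is not None]
    if not scores:
        raise ValueError("none of the requested classes occur in the masks")
    return sum(scores) / len(scores)
```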

A Machine Learning Outlook: Post-processing of Global Medium-range Forecasts

Mar 28, 2023

Abstract:Post-processing typically takes the outputs of a Numerical Weather Prediction (NWP) model and applies linear statistical techniques to produce improved localized forecasts, by including additional observations, or determining systematic errors at a finer scale. In this pilot study, we investigate the benefits and challenges of using non-linear neural network (NN) based methods to post-process multiple weather features -- temperature, moisture, wind, geopotential height, precipitable water -- at 30 vertical levels, globally and at lead times up to 7 days. We show that we can achieve accuracy improvements of up to 12% (RMSE) in a field such as temperature at 850hPa for a 7 day forecast. However, we recognize the need to strengthen foundational work on objectively measuring a sharp and correct forecast. We discuss the challenges of using standard metrics such as root mean squared error (RMSE) or anomaly correlation coefficient (ACC) as we move from linear statistical models to more complex non-linear machine learning approaches for post-processing global weather forecasts.
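
As a reading aid for the "12% (RMSE)" figure above, here is a small sketch of RMSE and of a relative RMSE improvement. Interpreting the percentage as the relative reduction in RMSE of the post-processed forecast versus the raw forecast is an assumption; the abstract does not spell out the formula, and the function names are illustrative.

```python
import math
from typing import Sequence


def rmse(forecast: Sequence[float], truth: Sequence[float]) -> float:
    """Root mean squared error between a forecast and the verifying values."""
    if len(forecast) != len(truth) or not truth:
        raise ValueError("inputs must be non-empty and of equal length")
    return math.sqrt(
        sum((f - t) ** 2 for f, t in zip(forecast, truth)) / len(truth)
    )


def rmse_improvement(raw: Sequence[float], post: Sequence[float],
                     truth: Sequence[float]) -> float:
    """Fractional RMSE reduction of `post` relative to `raw` (0.12 means 12%)."""
    baseline = rmse(raw, truth)
    if baseline == 0.0:
        raise ValueError("raw forecast is already perfect; improvement undefined")
    return (baseline - rmse(post, truth)) / baseline
```

Because RMSE rewards smooth forecasts, a gain on this measure alone does not show that the post-processed forecast is sharper, which is the concern the abstract raises.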

Global Extreme Heat Forecasting Using Neural Weather Models

May 23, 2022

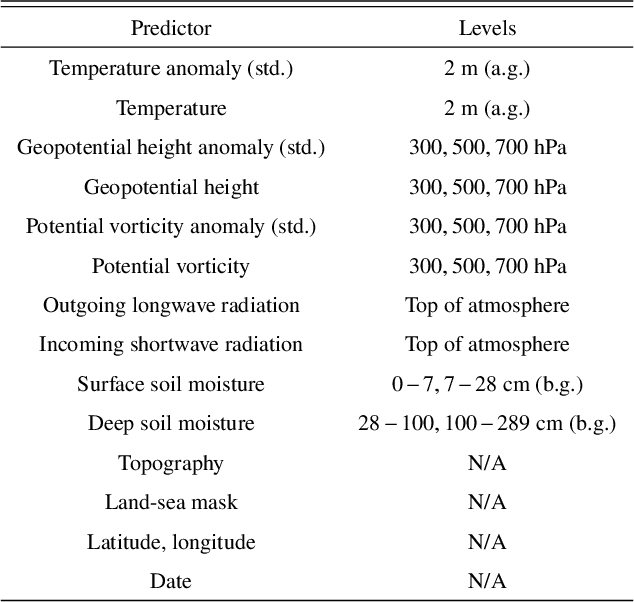

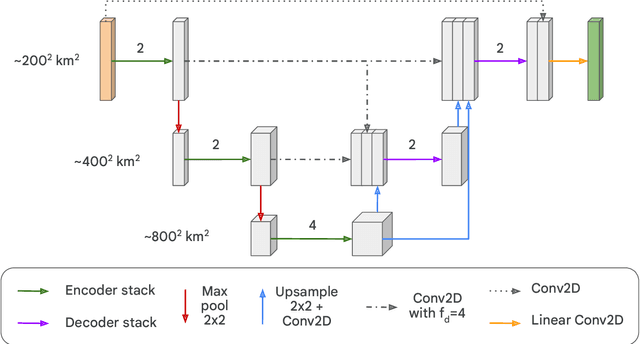

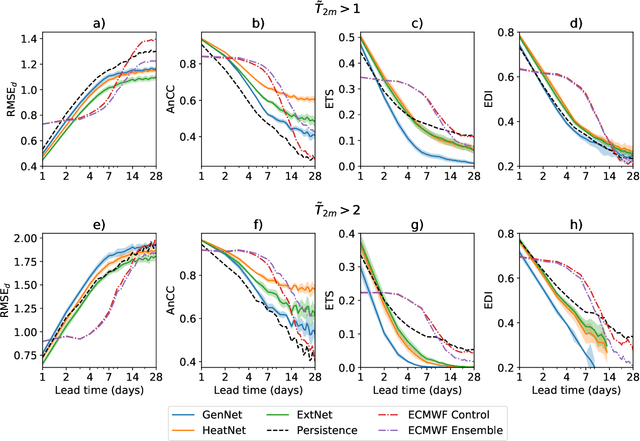

Abstract:Heat waves are projected to increase in frequency and severity with global warming. Improved warning systems would help reduce the associated loss of lives, wildfires, power disruptions, and reduction in crop yields. In this work, we explore the potential for deep learning systems trained on historical data to forecast extreme heat on short, medium and subseasonal timescales. To this purpose, we train a set of neural weather models (NWMs) with convolutional architectures to forecast surface temperature anomalies globally, 1 to 28 days ahead, at $\sim200~\mathrm{km}$ resolution and on the cubed sphere. The NWMs are trained using the ERA5 reanalysis product and a set of candidate loss functions, including the mean squared error and exponential losses targeting extremes. We find that training models to minimize custom losses tailored to emphasize extremes leads to significant skill improvements in the heat wave prediction task, compared to NWMs trained on the mean squared error loss. This improvement is accomplished with almost no skill reduction in the general temperature prediction task, and it can be efficiently realized through transfer learning, by re-training NWMs with the custom losses for a few epochs. In addition, we find that the use of a symmetric exponential loss reduces the smoothing of NWM forecasts with lead time. Our best NWM is able to outperform persistence in a regressive sense for all lead times and temperature anomaly thresholds considered, and shows positive regressive skill compared to the ECMWF subseasonal-to-seasonal control forecast within the first two forecast days and after two weeks.

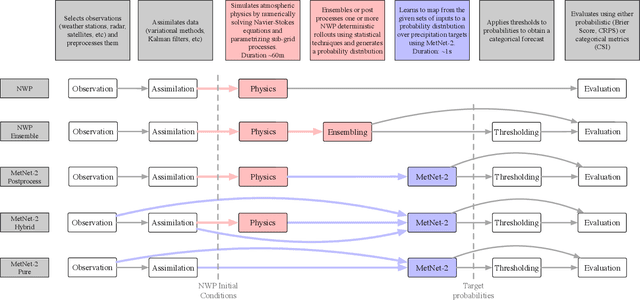

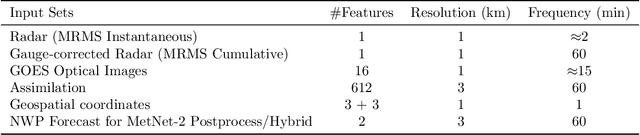

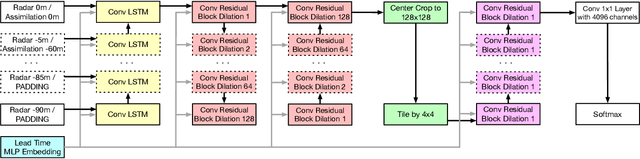

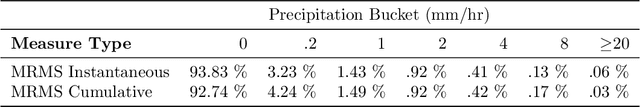

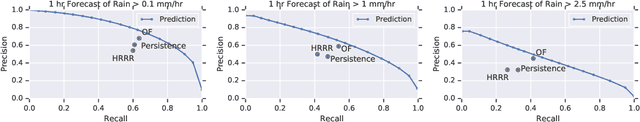

Skillful Twelve Hour Precipitation Forecasts using Large Context Neural Networks

Nov 14, 2021

Abstract:The problem of forecasting weather has been scientifically studied for centuries due to its high impact on human lives, transportation, food production and energy management, among others. Current operational forecasting models are based on physics and use supercomputers to simulate the atmosphere to make forecasts hours and days in advance. Better physics-based forecasts require improvements in the models themselves, which can be a substantial scientific challenge, as well as improvements in the underlying resolution, which can be computationally prohibitive. An emerging class of weather models based on neural networks represents a paradigm shift in weather forecasting: the models learn the required transformations from data instead of relying on hand-coded physics and are computationally efficient. For neural models, however, each additional hour of lead time poses a substantial challenge as it requires capturing ever larger spatial contexts and increases the uncertainty of the prediction. In this work, we present a neural network that is capable of large-scale precipitation forecasting up to twelve hours ahead and, starting from the same atmospheric state, the model achieves greater skill than the state-of-the-art physics-based models HRRR and HREF that currently operate in the Continental United States. Interpretability analyses reinforce the observation that the model learns to emulate advanced physics principles. These results represent a substantial step towards establishing a new paradigm of efficient forecasting with neural networks.

Deep Learning Models for Predicting Wildfires from Historical Remote-Sensing Data

Oct 15, 2020

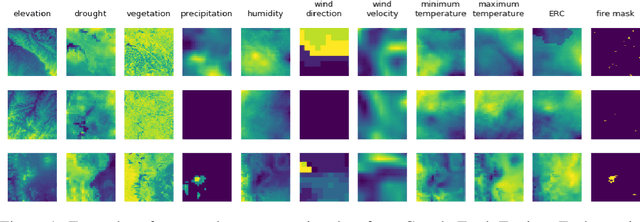

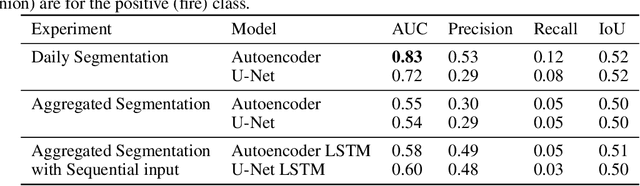

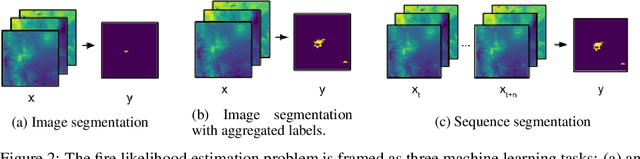

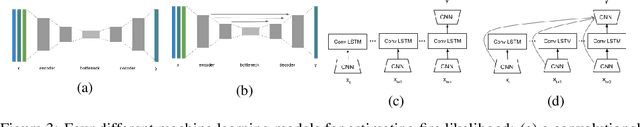

Abstract:Identifying regions that have high likelihood for wildfires is a key component of land and forestry management and disaster preparedness. We create a data set by aggregating nearly a decade of remote-sensing data and historical fire records to predict wildfires. This prediction problem is framed as three machine learning tasks. Results are compared and analyzed for four different deep learning models to estimate wildfire likelihood. The results demonstrate that deep learning models can successfully identify areas of high fire likelihood using aggregated data about vegetation, weather, and topography with an AUC of 83%.
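
The AUC reported above can be read as the probability that a randomly chosen fire sample receives a higher score than a randomly chosen non-fire sample. The sketch below computes it by direct pairwise comparison; it assumes binary labels (1 for fire, 0 for no fire) and is an illustration of the metric, not the paper's evaluation pipeline.

```python
from typing import Sequence


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Area under the ROC curve via pairwise comparison; ties count as half."""
    if len(labels) != len(scores):
        raise ValueError("labels and scores must have the same length")
    positives = [s for l, s in zip(labels, scores) if l == 1]
    negatives = [s for l, s in zip(labels, scores) if l == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative sample")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))
```

The pairwise form is quadratic in the number of samples, so for large datasets a sort-based implementation is preferable; it is used here because it makes the definition explicit.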

MetNet: A Neural Weather Model for Precipitation Forecasting

Mar 30, 2020

Abstract:Weather forecasting is a long standing scientific challenge with direct social and economic impact. The task is suitable for deep neural networks due to vast amounts of continuously collected data and a rich spatial and temporal structure that presents long range dependencies. We introduce MetNet, a neural network that forecasts precipitation up to 8 hours into the future at the high spatial resolution of 1 km$^2$ and at the temporal resolution of 2 minutes with a latency in the order of seconds. MetNet takes as input radar and satellite data and forecast lead time and produces a probabilistic precipitation map. The architecture uses axial self-attention to aggregate the global context from a large input patch corresponding to a million square kilometers. We evaluate the performance of MetNet at various precipitation thresholds and find that MetNet outperforms Numerical Weather Prediction at forecasts of up to 7 to 8 hours on the scale of the continental United States.

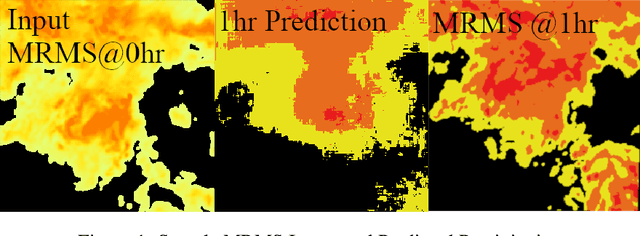

Machine Learning for Precipitation Nowcasting from Radar Images

Dec 11, 2019

Abstract:High-resolution nowcasting is an essential tool needed for effective adaptation to climate change, particularly for extreme weather. As Deep Learning (DL) techniques have shown dramatic promise in many domains, including the geosciences, we present an application of DL to the problem of precipitation nowcasting, i.e., high-resolution (1 km x 1 km) short-term (1 hour) predictions of precipitation. We treat forecasting as an image-to-image translation problem and leverage the power of the ubiquitous UNET convolutional neural network. We find this performs favorably when compared to three commonly used models: optical flow, persistence and NOAA's numerical one-hour HRRR nowcasting prediction.

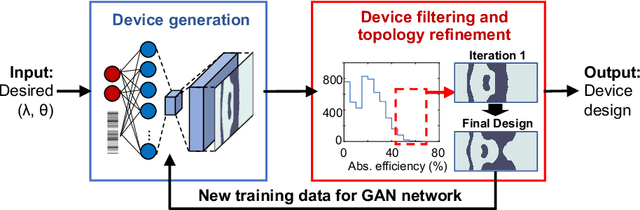

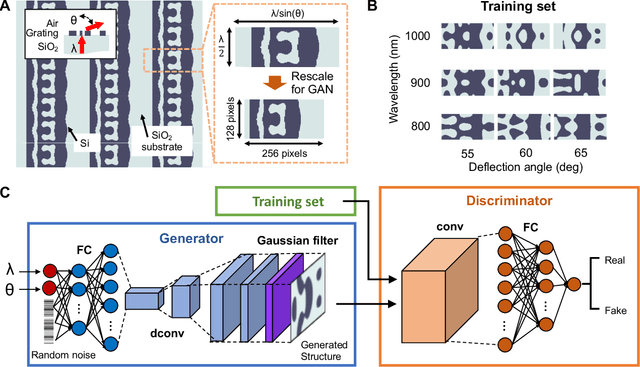

Data-driven metasurface discovery

Nov 29, 2018

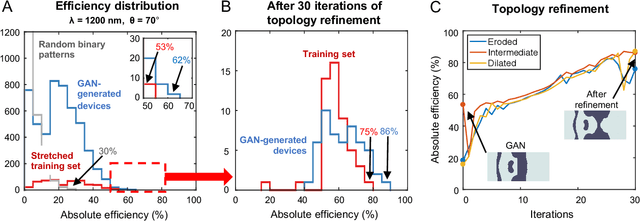

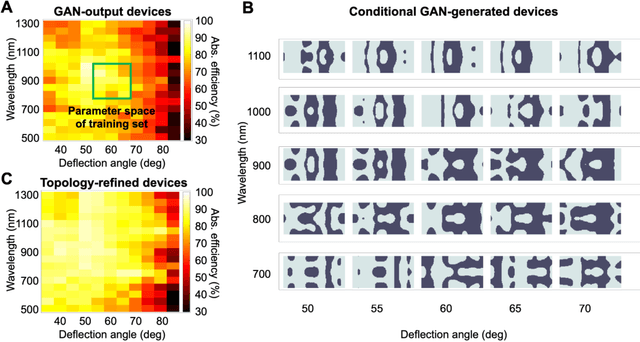

Abstract:A long-standing challenge with metasurface design is identifying computationally efficient methods that produce high performance devices. Design methods based on iterative optimization push the performance limits of metasurfaces, but they require extensive computational resources that limit their implementation to small numbers of microscale devices. We show that generative neural networks can learn from a small set of topology-optimized metasurfaces to produce large numbers of high-efficiency, topologically-complex metasurfaces operating across a large parameter space. This approach enables considerable savings in computation cost compared to brute force optimization. As a model system, we employ conditional generative adversarial networks to design highly-efficient metagratings over a broad range of deflection angles and operating wavelengths. Generated device designs can be further locally optimized and serve as additional training data for network refinement. Our design concept utilizes a relatively small initial training set of just a few hundred devices, and it serves as a more general blueprint for the AI-based analysis of physical systems where access to large datasets is limited. We envision that such data-driven design tools can be broadly utilized in other domains of optics, acoustics, mechanics, and electronics.
