Cenk Gazen

Fetch Technologies

A Machine Learning Outlook: Post-processing of Global Medium-range Forecasts

Mar 28, 2023

Abstract: Post-processing typically takes the outputs of a Numerical Weather Prediction (NWP) model and applies linear statistical techniques to produce improved localized forecasts, by including additional observations or by determining systematic errors at a finer scale. In this pilot study, we investigate the benefits and challenges of using non-linear neural network (NN) based methods to post-process multiple weather features (temperature, moisture, wind, geopotential height, precipitable water) at 30 vertical levels, globally and at lead times up to 7 days. We show that we can achieve accuracy improvements of up to 12% (RMSE) in a field such as temperature at 850 hPa for a 7-day forecast. However, we recognize the need to strengthen foundational work on objectively measuring a sharp and correct forecast. We discuss the challenges of using standard metrics such as root mean squared error (RMSE) or anomaly correlation coefficient (ACC) as we move from linear statistical models to more complex non-linear machine learning approaches for post-processing global weather forecasts.
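
The two metrics named in this abstract can be illustrated with a minimal sketch. It is not taken from the paper: the function names, the uncentered form of the ACC, and all sample values below are assumptions chosen for illustration only.

```python
import math


def rmse(forecast, observed):
    """Root mean squared error between two equal-length sequences."""
    if len(forecast) != len(observed):
        raise ValueError("forecast and observed must have the same length")
    squared = [(f - o) ** 2 for f, o in zip(forecast, observed)]
    return math.sqrt(sum(squared) / len(squared))


def anomaly_correlation(forecast, observed, climatology):
    """Uncentered anomaly correlation coefficient (one common form).

    Anomalies are departures from a climatological reference value.
    """
    f_anom = [f - c for f, c in zip(forecast, climatology)]
    o_anom = [o - c for o, c in zip(observed, climatology)]
    numerator = sum(a * b for a, b in zip(f_anom, o_anom))
    denominator = math.sqrt(
        sum(a * a for a in f_anom) * sum(b * b for b in o_anom)
    )
    return numerator / denominator


def rmse_improvement_percent(baseline_rmse, new_rmse):
    """Relative RMSE reduction of a new forecast over a baseline, in percent."""
    return 100.0 * (baseline_rmse - new_rmse) / baseline_rmse


# Hypothetical temperatures in kelvin at four grid points (made-up values).
observed = [280.0, 282.0, 285.0, 279.0]
climatology = [281.0, 281.0, 283.0, 280.0]
raw_forecast = [282.0, 283.0, 283.0, 281.0]
post_processed = [281.0, 282.5, 284.0, 280.0]

raw_rmse = rmse(raw_forecast, observed)
post_rmse = rmse(post_processed, observed)
improvement = rmse_improvement_percent(raw_rmse, post_rmse)
raw_acc = anomaly_correlation(raw_forecast, observed, climatology)
post_acc = anomaly_correlation(post_processed, observed, climatology)
```

Both metrics reward forecasts that stay close to the observations on average, so a smoothed forecast can score well on them. That is one reason an objective measure of a sharp and correct forecast is needed alongside RMSE and ACC.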

Skillful Twelve Hour Precipitation Forecasts using Large Context Neural Networks

Nov 14, 2021

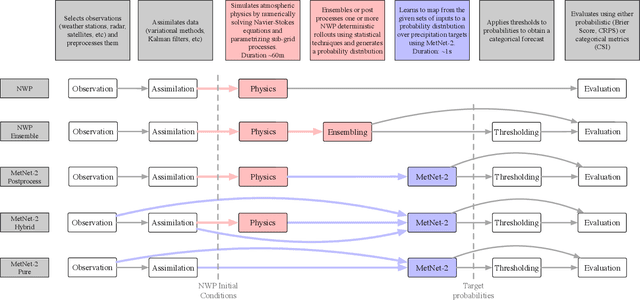

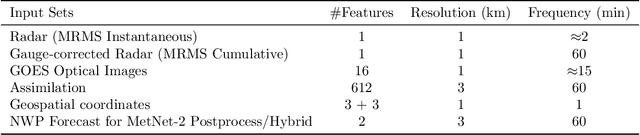

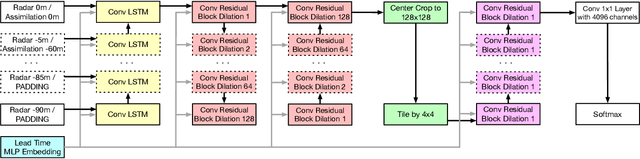

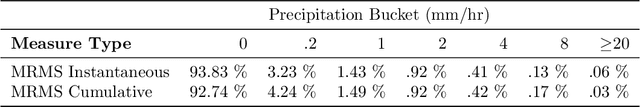

Abstract: The problem of forecasting weather has been scientifically studied for centuries due to its high impact on human lives, transportation, food production and energy management, among others. Current operational forecasting models are based on physics and use supercomputers to simulate the atmosphere to make forecasts hours and days in advance. Better physics-based forecasts require improvements in the models themselves, which can be a substantial scientific challenge, as well as improvements in the underlying resolution, which can be computationally prohibitive. An emerging class of weather models based on neural networks represents a paradigm shift in weather forecasting: the models learn the required transformations from data instead of relying on hand-coded physics and are computationally efficient. For neural models, however, each additional hour of lead time poses a substantial challenge as it requires capturing ever larger spatial contexts and increases the uncertainty of the prediction. In this work, we present a neural network that is capable of large-scale precipitation forecasting up to twelve hours ahead and, starting from the same atmospheric state, the model achieves greater skill than the state-of-the-art physics-based models HRRR and HREF that currently operate in the Continental United States. Interpretability analyses reinforce the observation that the model learns to emulate advanced physics principles. These results represent a substantial step towards establishing a new paradigm of efficient forecasting with neural networks.

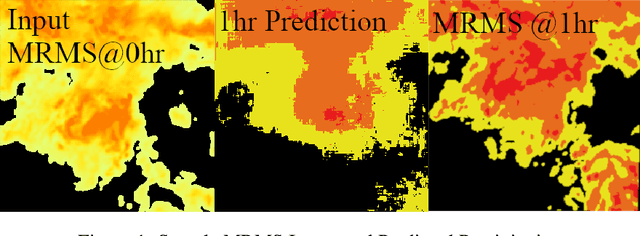

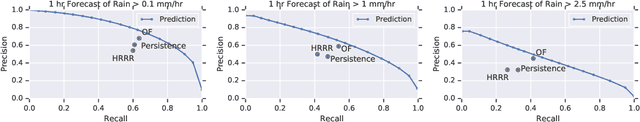

Machine Learning for Precipitation Nowcasting from Radar Images

Dec 11, 2019

Abstract: High-resolution nowcasting is an essential tool needed for effective adaptation to climate change, particularly for extreme weather. As Deep Learning (DL) techniques have shown dramatic promise in many domains, including the geosciences, we present an application of DL to the problem of precipitation nowcasting, i.e., high-resolution (1 km x 1 km) short-term (1 hour) predictions of precipitation. We treat forecasting as an image-to-image translation problem and leverage the power of the ubiquitous UNET convolutional neural network. We find this performs favorably when compared to three commonly used models: optical flow, persistence and NOAA's numerical one-hour HRRR nowcasting prediction.
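
Of the three comparison models named in this abstract, persistence is the simplest: it predicts that the next frame equals the most recent observation. The sketch below is illustrative only. The function names, the toy 2x2 grids, and the use of mean squared error as the score are assumptions, not details from the paper.

```python
def persistence_forecast(latest_frame):
    """Return a copy of the latest observed frame as the forecast."""
    return [row[:] for row in latest_frame]


def mean_squared_error(predicted, truth):
    """Mean squared error over all cells of two equally shaped 2D grids."""
    total = 0.0
    count = 0
    for pred_row, true_row in zip(predicted, truth):
        for p, t in zip(pred_row, true_row):
            total += (p - t) ** 2
            count += 1
    return total / count


# Hypothetical precipitation rates on a tiny 2x2 grid (made-up values).
latest_observation = [[0.0, 1.0], [2.0, 0.0]]
observation_one_hour_later = [[0.0, 2.0], [2.0, 1.0]]

forecast = persistence_forecast(latest_observation)
persistence_mse = mean_squared_error(forecast, observation_one_hour_later)
```

A learned model is only useful for nowcasting if it scores better than this baseline on held-out data.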

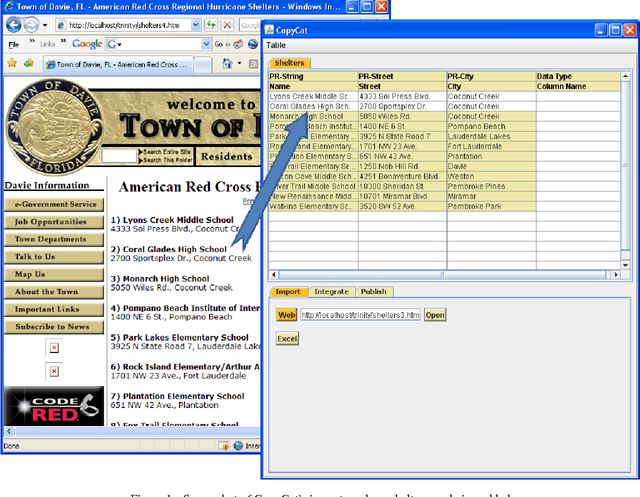

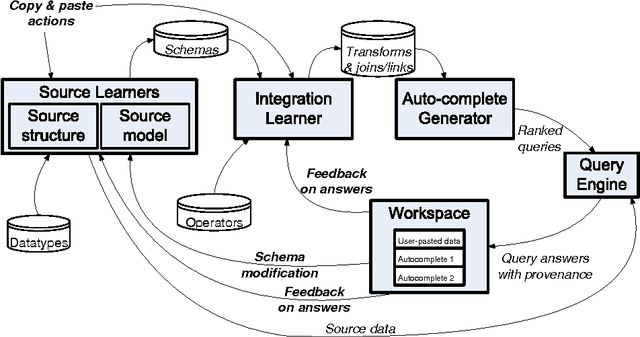

Interactive Data Integration through Smart Copy & Paste

Sep 09, 2009

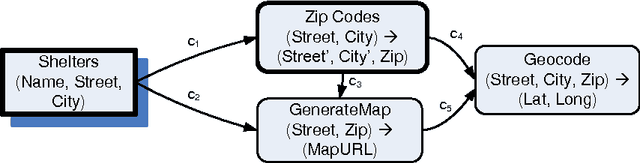

Abstract: In many scenarios, such as emergency response or ad hoc collaboration, it is critical to reduce the overhead in integrating data. Ideally, one could perform the entire process interactively under one unified interface: defining extractors and wrappers for sources, creating a mediated schema, and adding schema mappings, while seeing how these impact the integrated view of the data and refining the design accordingly. We propose a novel smart copy and paste (SCP) model and architecture for seamlessly combining the design-time and run-time aspects of data integration, and we describe an initial prototype, the CopyCat system. In CopyCat, the user does not need special tools for the different stages of integration: instead, the system watches as the user copies data from applications (including the Web browser) and pastes them into CopyCat's spreadsheet-like workspace. CopyCat generalizes these actions and presents proposed auto-completions, each with an explanation in the form of provenance. The user provides feedback on these suggestions, through either direct interactions or further copy-and-paste operations, and the system learns from this feedback. This paper provides an overview of our prototype system, and identifies key research challenges in achieving SCP in its full generality.
