Data-driven metasurface discovery


Nov 29, 2018

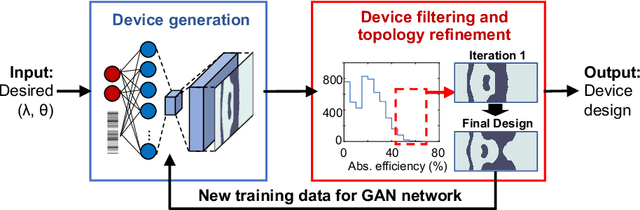

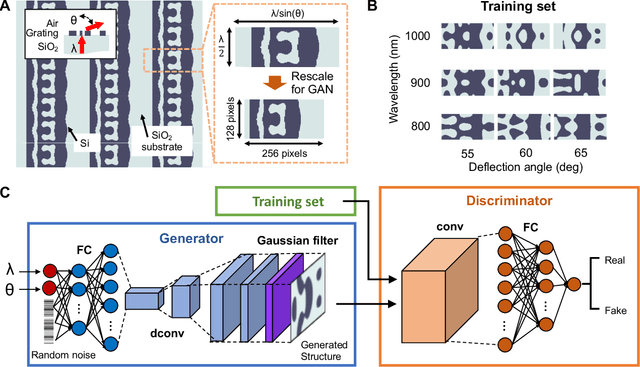

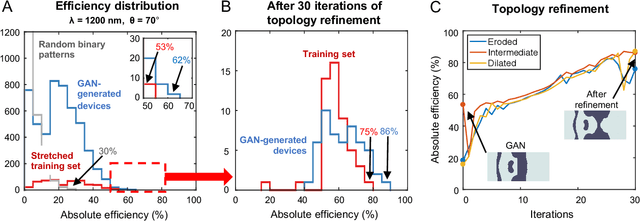

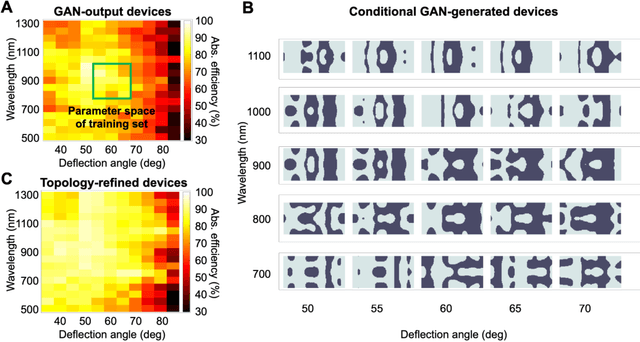

A long-standing challenge in metasurface design is identifying computationally efficient methods that produce high-performance devices. Design methods based on iterative optimization push the performance limits of metasurfaces, but they require extensive computational resources that limit their implementation to small numbers of microscale devices. We show that generative neural networks can learn from a small set of topology-optimized metasurfaces to produce large numbers of high-efficiency, topologically complex metasurfaces operating across a large parameter space. This approach enables considerable savings in computation cost compared to brute-force optimization. As a model system, we employ conditional generative adversarial networks to design highly efficient metagratings over a broad range of deflection angles and operating wavelengths. Generated device designs can be further locally optimized and serve as additional training data for network refinement. Our design concept utilizes a relatively small initial training set of just a few hundred devices, and it serves as a more general blueprint for the AI-based analysis of physical systems where access to large datasets is limited. We envision that such data-driven design tools can be broadly utilized in other domains of optics, acoustics, mechanics, and electronics.
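To make the conditioning idea concrete, the following is a minimal sketch of how a generator input for a conditional network might be assembled from a target wavelength and deflection angle. It is not the authors' implementation. The function names, the noise dimension, and the wavelength and angle ranges are all hypothetical placeholders. The period calculation assumes first-order diffraction at normal incidence with deflection into air, which is the standard grating equation and not something stated in the abstract.

```python
import math
import random


def grating_period(wavelength_nm, angle_deg):
    """Grating period from the first-order grating equation.

    Assumes normal incidence and deflection into air:
    period = wavelength / sin(angle).
    """
    return wavelength_nm / math.sin(math.radians(angle_deg))


def scale_to_unit(value, low, high):
    """Linearly map value from [low, high] to [-1, 1]."""
    return 2.0 * (value - low) / (high - low) - 1.0


def generator_input(wavelength_nm, angle_deg, noise_dim=8,
                    wl_range=(500.0, 1300.0),    # hypothetical range, nm
                    angle_range=(35.0, 85.0),    # hypothetical range, degrees
                    rng=None):
    """Concatenate a random noise vector with the normalized condition labels.

    A conditional generator would map this vector to a device layout;
    different noise draws give different candidate designs for the same
    (wavelength, angle) target.
    """
    rng = rng or random.Random(0)
    noise = [rng.uniform(-1.0, 1.0) for _ in range(noise_dim)]
    labels = [
        scale_to_unit(wavelength_nm, *wl_range),
        scale_to_unit(angle_deg, *angle_range),
    ]
    return noise + labels


if __name__ == "__main__":
    z = generator_input(900.0, 60.0)
    print(len(z), z[-2:])
    print(grating_period(900.0, 60.0))
```

Sampling `generator_input` repeatedly with fresh random generators for the same target would, in this sketch, yield many distinct inputs for a trained generator, which mirrors the abstract's point that one network can produce large numbers of candidate devices across the parameter space.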
