Ismail Alkhouri

NERD: Network-Regularized Diffusion Sampling For 3D Computed Tomography

Nov 18, 2025

Abstract:Numerous diffusion model (DM)-based methods have been proposed for solving inverse imaging problems. Among these, a recent line of work has demonstrated strong performance by formulating sampling as an optimization procedure that enforces measurement consistency, forward diffusion consistency, and both step-wise and backward diffusion consistency. However, these methods have only considered 2D reconstruction tasks and do not directly extend to 3D image reconstruction problems, such as in Computed Tomography (CT). To bridge this gap, we propose NEtwork-Regularized diffusion sampling for 3D CT (NERD) by incorporating an L1 regularization into the optimization objective. This regularizer encourages spatial continuity across adjacent slices, reducing inter-slice artifacts and promoting coherent volumetric reconstructions. Additionally, we introduce two efficient optimization strategies to solve the resulting objective: one based on the Alternating Direction Method of Multipliers (ADMM) and another based on the Primal-Dual Hybrid Gradient (PDHG) method. Experiments on medical 3D CT data demonstrate that our approach achieves either state-of-the-art or highly competitive results.
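To make the inter-slice regularizer concrete, here is a minimal sketch of one plausible form: the sum of absolute differences between adjacent slices. The abstract does not state the exact penalty, so this form is an assumption, and the names `inter_slice_l1` and `soft_threshold` are hypothetical. The second function is the standard proximal operator of the absolute value, the kind of elementwise update that ADMM and PDHG solvers typically apply to an L1 term.

```python
def inter_slice_l1(volume):
    """Sum of absolute differences between vertically adjacent slices.

    `volume` is a list of 2D slices (each a list of rows of floats).
    Assumed form of the regularizer, not necessarily the paper's.
    """
    total = 0.0
    for lower, upper in zip(volume, volume[1:]):
        for row_lower, row_upper in zip(lower, upper):
            total += sum(abs(u - l) for l, u in zip(row_lower, row_upper))
    return total


def soft_threshold(value, lam):
    """Proximal operator of lam * |value| (shrinks toward zero by lam)."""
    if value > lam:
        return value - lam
    if value < -lam:
        return value + lam
    return 0.0


if __name__ == "__main__":
    # Three 2x2 slices: a jump between slices 0 and 1, a small change after.
    volume = [
        [[0.0, 0.0], [0.0, 0.0]],
        [[1.0, 1.0], [1.0, 1.0]],
        [[1.0, 1.0], [1.0, 3.0]],
    ]
    print(inter_slice_l1(volume))
    print(soft_threshold(3.0, 1.0), soft_threshold(-0.5, 1.0))
```

A volume whose slices change smoothly along the stacking axis yields a small penalty, which is the behavior the abstract attributes to the regularizer.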

A Dataless Reinforcement Learning Approach to Rounding Hyperplane Optimization for Max-Cut

May 19, 2025

Abstract:The Maximum Cut (MaxCut) problem is NP-Complete, and obtaining its optimal solution is NP-hard in the worst case. As a result, heuristic-based algorithms are commonly used, though their design often requires significant domain expertise. More recently, learning-based methods trained on large (un)labeled datasets have been proposed; however, these approaches often struggle with generalizability and scalability. A well-known approximation algorithm for MaxCut is the Goemans-Williamson (GW) algorithm, which relaxes the Quadratic Unconstrained Binary Optimization (QUBO) formulation into a semidefinite program (SDP). The GW algorithm then applies hyperplane rounding by uniformly sampling a random hyperplane to convert the SDP solution into binary node assignments. In this paper, we propose a training-data-free approach based on a non-episodic reinforcement learning formulation, in which an agent learns to select improved rounding hyperplanes that yield better cuts than those produced by the GW algorithm. By optimizing over a Markov Decision Process (MDP), our method consistently achieves better cuts across large-scale graphs with varying densities and degree distributions.
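The baseline rounding step described above can be sketched as follows. This is only the random hyperplane rounding of GW, not the learned hyperplane selection the paper proposes. The sketch assumes the SDP has already been solved and its solution is available as one vector per node; the function names are hypothetical.

```python
import random


def hyperplane_round(vectors, rng):
    """Assign each node +1 or -1 by the side of a random hyperplane it falls on."""
    dim = len(vectors[0])
    # I.i.d. Gaussian entries give a uniformly random hyperplane normal direction.
    normal = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    return [
        1 if sum(a * b for a, b in zip(v, normal)) >= 0 else -1
        for v in vectors
    ]


def cut_value(edges, assignment):
    """Number of edges whose endpoints land on different sides."""
    return sum(1 for u, v in edges if assignment[u] != assignment[v])


def best_of_k_roundings(vectors, edges, k=20, seed=0):
    """Repeat random rounding k times and keep the largest cut found."""
    rng = random.Random(seed)
    best_assignment, best_cut = None, -1
    for _ in range(k):
        assignment = hyperplane_round(vectors, rng)
        value = cut_value(edges, assignment)
        if value > best_cut:
            best_assignment, best_cut = assignment, value
    return best_assignment, best_cut


if __name__ == "__main__":
    # 4-cycle with a hand-made "SDP solution": alternating antipodal vectors.
    vectors = [[1.0, 0.0], [-1.0, 0.0], [1.0, 0.0], [-1.0, 0.0]]
    edges = [(0, 1), (1, 2), (2, 3), (3, 0)]
    print(best_of_k_roundings(vectors, edges, k=5))
```

Because each rounding draws a fresh hyperplane, the cut quality varies from draw to draw. That variability is the room for improvement that a hyperplane-selecting agent would target.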

Understanding Untrained Deep Models for Inverse Problems: Algorithms and Theory

Feb 25, 2025

Abstract:In recent years, deep learning methods have been extensively developed for inverse imaging problems (IIPs), encompassing supervised, self-supervised, and generative approaches. Most of these methods require large amounts of labeled or unlabeled training data to learn effective models. However, in many practical applications, such as medical image reconstruction, extensive training datasets are often unavailable or limited. A significant milestone in addressing this challenge came in 2018 with the work of Ulyanov et al., which introduced the Deep Image Prior (DIP)--the first training-data-free neural network method for IIPs. Unlike conventional deep learning approaches, DIP requires only a convolutional neural network, the noisy measurements, and a forward operator. By leveraging the implicit regularization of deep networks initialized with random noise, DIP can learn and restore image structures without relying on external datasets. However, a well-known limitation of DIP is its susceptibility to overfitting, primarily due to the over-parameterization of the network. In this tutorial paper, we provide a comprehensive review of DIP, including a theoretical analysis of its training dynamics. We also categorize and discuss recent advancements in DIP-based methods aimed at mitigating overfitting, including techniques such as regularization, network re-parameterization, and early stopping. Furthermore, we discuss approaches that combine DIP with pre-trained neural networks, present empirical comparison results against data-centric methods, and highlight open research questions and future directions.

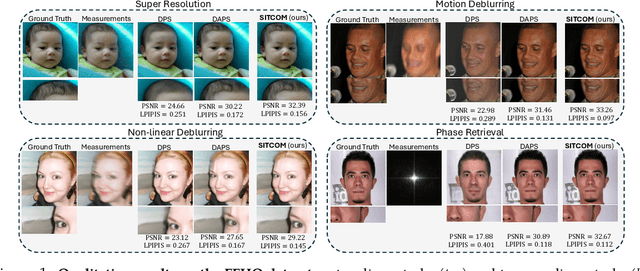

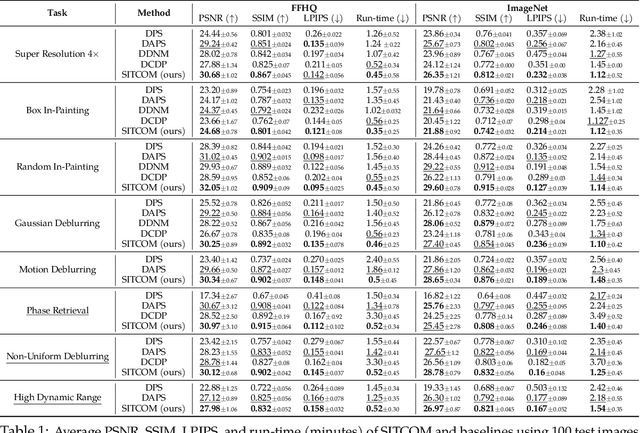

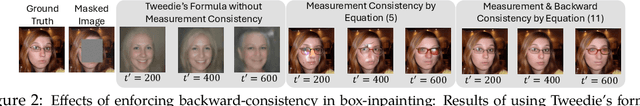

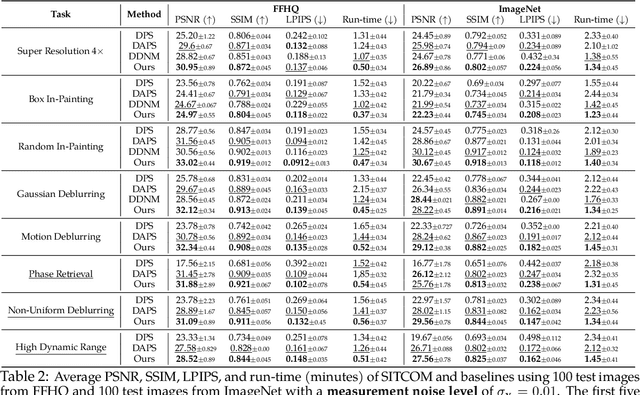

SITCOM: Step-wise Triple-Consistent Diffusion Sampling for Inverse Problems

Oct 06, 2024

Abstract:Diffusion models (DMs) are a class of generative models that allow sampling from a distribution learned over a training set. When applied to solving inverse imaging problems (IPs), the reverse sampling steps of DMs are typically modified to approximately sample from a measurement-conditioned distribution in the image space. However, these modifications may be unsuitable for certain settings (such as in the presence of measurement noise) and non-linear tasks, as they often struggle to correct errors from earlier sampling steps and generally require a large number of optimization and/or sampling steps. To address these challenges, we state three conditions for achieving measurement-consistent diffusion trajectories. Building on these conditions, we propose a new optimization-based sampling method that not only enforces the standard data manifold measurement consistency and forward diffusion consistency, as seen in previous studies, but also incorporates backward diffusion consistency that maintains a diffusion trajectory by optimizing over the input of the pre-trained model at every sampling step. By enforcing these conditions, either implicitly or explicitly, our sampler requires significantly fewer reverse steps. Therefore, we refer to our accelerated method as Step-wise Triple-Consistent Sampling (SITCOM). Compared to existing state-of-the-art baseline methods, under different levels of measurement noise, our extensive experiments across five linear and three non-linear image restoration tasks demonstrate that SITCOM achieves competitive or superior results in terms of standard image similarity metrics while requiring a significantly reduced run-time across all considered tasks.

uDiG-DIP: Unrolled Diffusion-Guided Deep Image Prior For Medical Image Reconstruction

Oct 06, 2024

Abstract:Deep learning (DL) methods have been extensively applied to various image recovery problems, including magnetic resonance imaging (MRI) and computed tomography (CT) reconstruction. Beyond supervised models, other approaches have been recently explored including two key recent schemes: Deep Image Prior (DIP) that is an unsupervised scan-adaptive method that leverages the network architecture as implicit regularization but can suffer from noise overfitting, and diffusion models (DMs), where the sampling procedure of a pre-trained generative model is modified to allow sampling from the measurement-conditioned distribution through approximations. In this paper, we propose combining DIP and DMs for MRI and CT reconstruction, motivated by (i) the impact of the DIP network input and (ii) the use of DMs as diffusion purifiers (DPs). Specifically, we propose an unrolled procedure that iteratively optimizes the DIP network with a DM-refined adaptive input using a loss with data consistency and autoencoding terms. We term the approach unrolled Diffusion-Guided DIP (uDiG-DIP). Our experimental results demonstrate that uDiG-DIP achieves superior reconstruction results compared to leading DM-based baselines and the original DIP for MRI and CT tasks.

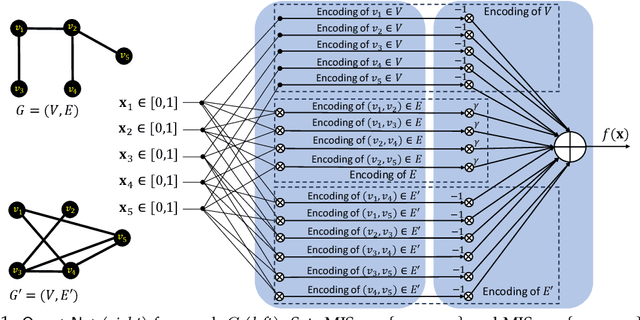

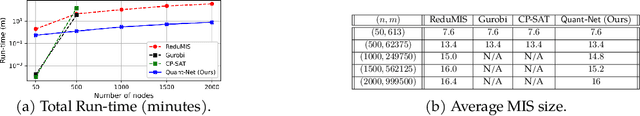

Dataless Quadratic Neural Networks for the Maximum Independent Set Problem

Jun 27, 2024

Abstract:Combinatorial Optimization (CO) plays a crucial role in addressing various significant problems, among them the challenging Maximum Independent Set (MIS) problem. In light of recent advancements in deep learning methods, efforts have been directed towards leveraging data-driven learning approaches, typically rooted in supervised learning and reinforcement learning, to tackle the NP-hard MIS problem. However, these approaches rely on labeled datasets, exhibit weak generalization, and often depend on problem-specific heuristics. Recently, ReLU-based dataless neural networks were introduced to address combinatorial optimization problems. This paper introduces a novel dataless quadratic neural network formulation, featuring a continuous quadratic relaxation for the MIS problem. Notably, our method eliminates the need for training data by treating the given MIS instance as a trainable entity. More specifically, the graph structure and constraints of the MIS instance are used to define the structure and parameters of the neural network such that training it on a fixed input provides a solution to the problem, thereby setting it apart from traditional supervised or reinforcement learning approaches. By employing a gradient-based optimization algorithm like ADAM and leveraging an efficient off-the-shelf GPU parallel implementation, our straightforward yet effective approach demonstrates competitive or superior performance compared to state-of-the-art learning-based methods. Another significant advantage of our approach is that, unlike exact and heuristic solvers, the running time of our method scales only with the number of nodes in the graph, not the number of edges.
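As a rough illustration of solving MIS through a continuous quadratic relaxation with gradient steps, the sketch below minimizes a generic penalty objective, `-sum(x) + gamma * sum(x[u] * x[v] for edges (u, v))`, over the box `[0, 1]^n` with projected gradient descent. This textbook-style objective, the plain gradient update (instead of ADAM), and all names and default values are assumptions for illustration; the paper's exact formulation may differ.

```python
def mis_projected_gradient(n, edges, gamma=2.0, lr=0.1, steps=200, init=None):
    """Projected gradient descent on -sum(x) + gamma * sum_{(u,v)} x[u] * x[v]."""
    x = list(init) if init is not None else [0.5] * n
    neighbors = [[] for _ in range(n)]
    for u, v in edges:
        neighbors[u].append(v)
        neighbors[v].append(u)
    for _ in range(steps):
        # Gradient: -1 rewards selecting a node, gamma * neighbor mass penalizes conflicts.
        grad = [-1.0 + gamma * sum(x[j] for j in neighbors[i]) for i in range(n)]
        # Step, then project back onto the box [0, 1].
        x = [min(1.0, max(0.0, xi - lr * g)) for xi, g in zip(x, grad)]
    chosen = [i for i in range(n) if x[i] > 0.5]
    return x, chosen


def is_independent(edges, chosen):
    """Check that no edge has both endpoints selected."""
    selected = set(chosen)
    return not any(u in selected and v in selected for u, v in edges)


if __name__ == "__main__":
    # Path graph 0 - 1 - 2: the maximum independent set is {0, 2}.
    edges = [(0, 1), (1, 2)]
    x, chosen = mis_projected_gradient(3, edges)
    print(chosen, is_independent(edges, chosen))
```

The graph itself defines the objective, so no training data is involved. The result depends on the initialization: a perfectly symmetric start on a symmetric graph (for example, a single edge) can stall at a saddle point, so a randomized `init` is usually preferable in practice.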

Improving Efficiency of Diffusion Models via Multi-Stage Framework and Tailored Multi-Decoder Architectures

Dec 14, 2023

Abstract:Diffusion models, emerging as powerful deep generative tools, excel in various applications. They operate through a two-step process: introducing noise into training samples and then employing a model to convert random noise into new samples (e.g., images). However, their remarkable generative performance is hindered by slow training and sampling. This is due to the necessity of tracking extensive forward and reverse diffusion trajectories, and employing a large model with numerous parameters across multiple timesteps (i.e., noise levels). To tackle these challenges, we present a multi-stage framework inspired by our empirical findings. These observations indicate the advantages of employing distinct parameters tailored to each timestep while retaining universal parameters shared across all timesteps. Our approach involves segmenting the time interval into multiple stages where we employ a custom multi-decoder U-net architecture that blends time-dependent models with a universally shared encoder. Our framework enables the efficient distribution of computational resources and mitigates inter-stage interference, which substantially improves training efficiency. Extensive numerical experiments affirm the effectiveness of our framework, showcasing significant training and sampling efficiency enhancements on three state-of-the-art diffusion models, including large-scale latent diffusion models. Furthermore, our ablation studies illustrate the impact of two important components in our framework: (i) a novel timestep clustering algorithm for stage division, and (ii) an innovative multi-decoder U-net architecture, seamlessly integrating universal and customized hyperparameters.

Robust MRI Reconstruction by Smoothed Unrolling (SMUG)

Dec 12, 2023

Abstract:As the popularity of deep learning (DL) in the field of magnetic resonance imaging (MRI) continues to rise, recent research has indicated that DL-based MRI reconstruction models might be excessively sensitive to minor input disturbances, including worst-case additive perturbations. This sensitivity often leads to unstable, aliased images. This raises the question of how to devise DL techniques for MRI reconstruction that can be robust to train-test variations. To address this problem, we propose a novel image reconstruction framework, termed Smoothed Unrolling (SMUG), which advances a deep unrolling-based MRI reconstruction model using a randomized smoothing (RS)-based robust learning approach. RS, which improves the tolerance of a model against input noises, has been widely used in the design of adversarial defense approaches for image classification tasks. Yet, we find that the conventional design that applies RS to the entire DL-based MRI model is ineffective. In this paper, we show that SMUG and its variants address the above issue by customizing the RS process based on the unrolling architecture of a DL-based MRI reconstruction model. Compared to the vanilla RS approach, we show that SMUG improves the robustness of MRI reconstruction with respect to a diverse set of instability sources, including worst-case and random noise perturbations to input measurements, varying measurement sampling rates, and different numbers of unrolling steps. Furthermore, we theoretically analyze the robustness of our method in the presence of perturbations.
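For readers unfamiliar with randomized smoothing, the generic (vanilla) idea is to average a model's outputs over Gaussian-perturbed copies of the input. The sketch below shows only that generic averaging, not the SMUG customization to unrolled architectures. The `model` callable, the function name, and the default values are hypothetical.

```python
import random


def randomized_smoothing(model, x, sigma, num_samples=32, seed=0):
    """Average model outputs over Gaussian perturbations of the input vector x."""
    rng = random.Random(seed)
    accum = None
    for _ in range(num_samples):
        # Perturb every input entry with independent N(0, sigma^2) noise.
        noisy = [xi + rng.gauss(0.0, sigma) for xi in x]
        out = model(noisy)
        accum = list(out) if accum is None else [a + o for a, o in zip(accum, out)]
    return [a / num_samples for a in accum]


if __name__ == "__main__":
    # Toy "reconstruction model": doubles each entry.
    def double(v):
        return [2.0 * t for t in v]

    print(randomized_smoothing(double, [1.0, -2.0], sigma=0.1))
```

With `sigma=0` the smoothed model reduces to the original one. Larger values trade fidelity for stability against input perturbations.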

Diffusion-based Adversarial Purification for Robust Deep MRI Reconstruction

Sep 11, 2023

Abstract:Deep learning (DL) methods have been extensively employed in magnetic resonance imaging (MRI) reconstruction, demonstrating remarkable performance improvements compared to traditional non-DL methods. However, recent studies have uncovered the susceptibility of these models to carefully engineered adversarial perturbations. In this paper, we tackle this issue by leveraging diffusion models. Specifically, we introduce a defense strategy that enhances the robustness of DL-based MRI reconstruction methods through the utilization of pre-trained diffusion models as adversarial purifiers. Unlike conventional state-of-the-art adversarial defense methods (e.g., adversarial training), our proposed approach eliminates the need to solve a minimax optimization problem to train the image reconstruction model from scratch, and only requires fine-tuning on purified adversarial examples. Our experimental findings underscore the effectiveness of our proposed technique when benchmarked against leading defense methodologies for MRI reconstruction such as adversarial training and randomized smoothing.

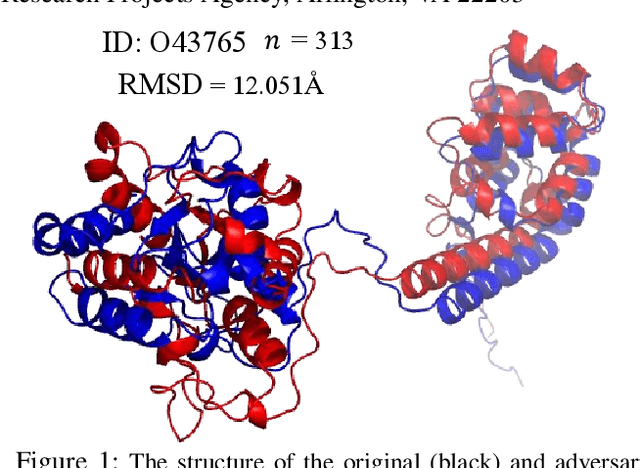

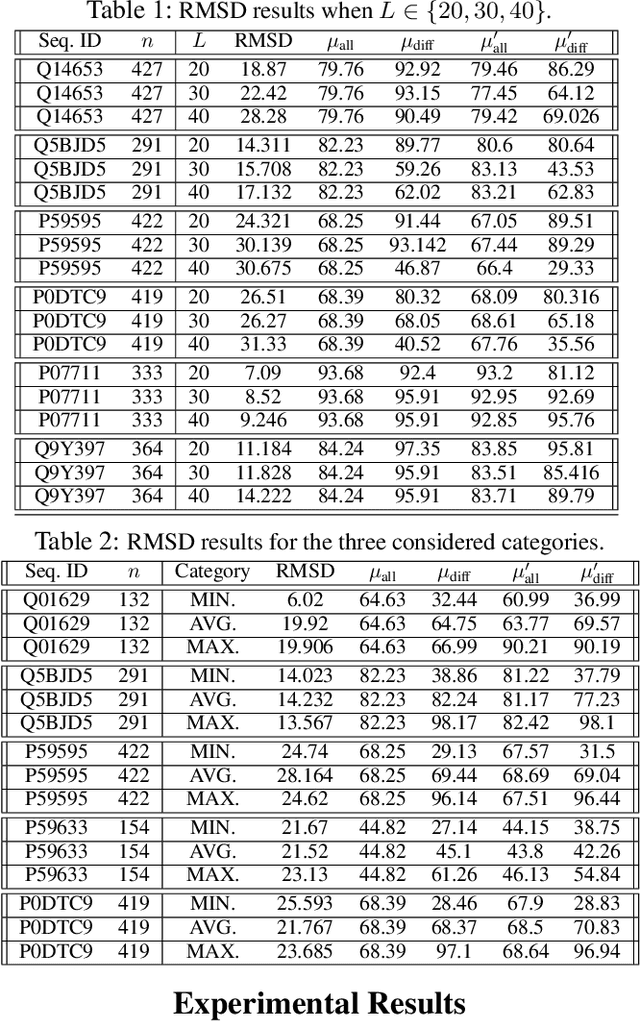

On the Robustness of AlphaFold: A COVID-19 Case Study

Jan 12, 2023

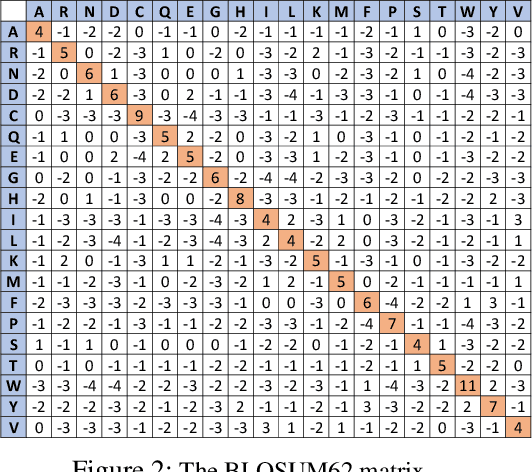

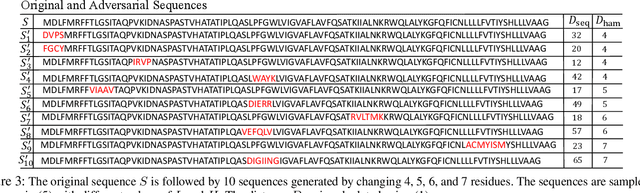

Abstract:Protein folding neural networks (PFNNs) such as AlphaFold predict remarkably accurate structures of proteins compared to other approaches. However, the robustness of such networks has heretofore not been explored. This is particularly relevant given the broad social implications of such technologies and the fact that biologically small perturbations in the protein sequence do not generally lead to drastic changes in the protein structure. In this paper, we demonstrate that AlphaFold does not exhibit such robustness despite its high accuracy. This raises the challenge of detecting and quantifying the extent to which these predicted protein structures can be trusted. To measure the robustness of the predicted structures, we utilize (i) the root-mean-square deviation (RMSD) and (ii) the Global Distance Test (GDT) similarity measure between the predicted structure of the original sequence and the structure of its adversarially perturbed version. We prove that the problem of minimally perturbing protein sequences to fool protein folding neural networks is NP-complete. Based on the well-established BLOSUM62 sequence alignment scoring matrix, we generate adversarial protein sequences and show that the RMSD between the predicted protein structure and the structure of the original sequence is very large when the adversarial changes are bounded by (i) 20 units in the BLOSUM62 distance, and (ii) five residues (out of hundreds or thousands of residues) in the given protein sequence. In our experimental evaluation, we consider 111 COVID-19 proteins in the Universal Protein Resource (UniProt), a central resource for protein data managed by the European Bioinformatics Institute, Swiss Institute of Bioinformatics, and the US Protein Information Resource. These result in an overall GDT similarity test score average of around 34%, demonstrating a substantial drop in the performance of AlphaFold.
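The two structure-similarity measures named above can be illustrated with a simplified sketch. It assumes both structures are given as equal-length lists of 3D coordinates that are already superimposed. Real evaluations usually search for an optimal superposition first, which is omitted here. The GDT variant shown averages the fraction of positions within 1, 2, 4, and 8 angstroms (the common GDT_TS cutoffs), and the function names are hypothetical.

```python
import math


def rmsd(coords_a, coords_b):
    """Root-mean-square deviation between paired 3D points (no alignment step)."""
    if len(coords_a) != len(coords_b) or not coords_a:
        raise ValueError("structures must be non-empty and of equal length")
    total = sum(
        sum((p - q) ** 2 for p, q in zip(a, b))
        for a, b in zip(coords_a, coords_b)
    )
    return math.sqrt(total / len(coords_a))


def gdt_ts_fixed_superposition(coords_a, coords_b, cutoffs=(1.0, 2.0, 4.0, 8.0)):
    """Mean percentage of positions within each distance cutoff, for a fixed superposition."""
    distances = [math.dist(a, b) for a, b in zip(coords_a, coords_b)]
    fractions = [sum(d <= c for d in distances) / len(distances) for c in cutoffs]
    return 100.0 * sum(fractions) / len(cutoffs)


if __name__ == "__main__":
    original = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
    perturbed = [(0.0, 0.0, 0.0), (1.0, 0.0, 3.0)]
    print(rmsd(original, perturbed))
    print(gdt_ts_fixed_superposition(original, perturbed))
```

A low RMSD and a high GDT score both indicate similar structures, so a robust predictor should keep RMSD small and GDT high under small sequence perturbations.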
