Shijun Liang

NERD: Network-Regularized Diffusion Sampling For 3D Computed Tomography

Nov 18, 2025

Abstract:Numerous diffusion model (DM)-based methods have been proposed for solving inverse imaging problems. Among these, a recent line of work has demonstrated strong performance by formulating sampling as an optimization procedure that enforces measurement consistency, forward diffusion consistency, and both step-wise and backward diffusion consistency. However, these methods have only considered 2D reconstruction tasks and do not directly extend to 3D image reconstruction problems, such as in Computed Tomography (CT). To bridge this gap, we propose NEtwork-Regularized diffusion sampling for 3D CT (NERD) by incorporating an L1 regularization into the optimization objective. This regularizer encourages spatial continuity across adjacent slices, reducing inter-slice artifacts and promoting coherent volumetric reconstructions. Additionally, we introduce two efficient optimization strategies to solve the resulting objective: one based on the Alternating Direction Method of Multipliers (ADMM) and another based on the Primal-Dual Hybrid Gradient (PDHG) method. Experiments on medical 3D CT data demonstrate that our approach achieves either state-of-the-art or highly competitive results.

UGoDIT: Unsupervised Group Deep Image Prior Via Transferable Weights

May 16, 2025

Abstract:Recent advances in data-centric deep generative models have led to significant progress in solving inverse imaging problems. However, these models (e.g., diffusion models (DMs)) typically require large amounts of fully sampled (clean) training data, which is often impractical in medical and scientific settings such as dynamic imaging. On the other hand, training-data-free approaches like the Deep Image Prior (DIP) do not require clean ground-truth images but suffer from noise overfitting and can be computationally expensive as the network parameters need to be optimized for each measurement set independently. Moreover, DIP-based methods often overlook the potential of learning a prior using a small number of sub-sampled measurements (or degraded images) available during training. In this paper, we propose UGoDIT, an Unsupervised Group DIP via Transferable weights, designed for the low-data regime where only a very small number, M, of sub-sampled measurement vectors are available during training. Our method learns a set of transferable weights by optimizing a shared encoder and M disentangled decoders. At test time, we reconstruct the unseen degraded image using a DIP network, where part of the parameters are fixed to the learned weights, while the remaining are optimized to enforce measurement consistency. We evaluate UGoDIT on both medical (multi-coil MRI) and natural (super resolution and non-linear deblurring) image recovery tasks under various settings. Compared to recent standalone DIP methods, UGoDIT provides accelerated convergence and notable improvement in reconstruction quality. Furthermore, our method achieves performance competitive with SOTA DM-based and supervised approaches, despite not requiring large amounts of clean training data.

Understanding Untrained Deep Models for Inverse Problems: Algorithms and Theory

Feb 25, 2025

Abstract:In recent years, deep learning methods have been extensively developed for inverse imaging problems (IIPs), encompassing supervised, self-supervised, and generative approaches. Most of these methods require large amounts of labeled or unlabeled training data to learn effective models. However, in many practical applications, such as medical image reconstruction, extensive training datasets are often unavailable or limited. A significant milestone in addressing this challenge came in 2018 with the work of Ulyanov et al., which introduced the Deep Image Prior (DIP)--the first training-data-free neural network method for IIPs. Unlike conventional deep learning approaches, DIP requires only a convolutional neural network, the noisy measurements, and a forward operator. By leveraging the implicit regularization of deep networks initialized with random noise, DIP can learn and restore image structures without relying on external datasets. However, a well-known limitation of DIP is its susceptibility to overfitting, primarily due to the over-parameterization of the network. In this tutorial paper, we provide a comprehensive review of DIP, including a theoretical analysis of its training dynamics. We also categorize and discuss recent advancements in DIP-based methods aimed at mitigating overfitting, including techniques such as regularization, network re-parameterization, and early stopping. Furthermore, we discuss approaches that combine DIP with pre-trained neural networks, present empirical comparison results against data-centric methods, and highlight open research questions and future directions.

Pruning Unrolled Networks (PUN) at Initialization for MRI Reconstruction Improves Generalization

Dec 24, 2024

Abstract:Deep learning methods are highly effective for many image reconstruction tasks. However, the performance of supervised learned models can degrade when applied to distinct experimental settings at test time or in the presence of distribution shifts. In this study, we demonstrate that pruning deep image reconstruction networks at training time can improve their robustness to distribution shifts. In particular, we consider unrolled reconstruction architectures for accelerated magnetic resonance imaging and introduce a method for pruning unrolled networks (PUN) at initialization. Our experiments demonstrate that when compared to traditional dense networks, PUN offers improved generalization across a variety of experimental settings and even slight performance gains on in-distribution data.

uDiG-DIP: Unrolled Diffusion-Guided Deep Image Prior For Medical Image Reconstruction

Oct 06, 2024

Abstract:Deep learning (DL) methods have been extensively applied to various image recovery problems, including magnetic resonance imaging (MRI) and computed tomography (CT) reconstruction. Beyond supervised models, other approaches have been recently explored including two key recent schemes: Deep Image Prior (DIP) that is an unsupervised scan-adaptive method that leverages the network architecture as implicit regularization but can suffer from noise overfitting, and diffusion models (DMs), where the sampling procedure of a pre-trained generative model is modified to allow sampling from the measurement-conditioned distribution through approximations. In this paper, we propose combining DIP and DMs for MRI and CT reconstruction, motivated by (i) the impact of the DIP network input and (ii) the use of DMs as diffusion purifiers (DPs). Specifically, we propose an unrolled procedure that iteratively optimizes the DIP network with a DM-refined adaptive input using a loss with data consistency and autoencoding terms. We term the approach unrolled Diffusion-Guided DIP (uDiG-DIP). Our experimental results demonstrate that uDiG-DIP achieves superior reconstruction results compared to leading DM-based baselines and the original DIP for MRI and CT tasks.

SITCOM: Step-wise Triple-Consistent Diffusion Sampling for Inverse Problems

Oct 06, 2024

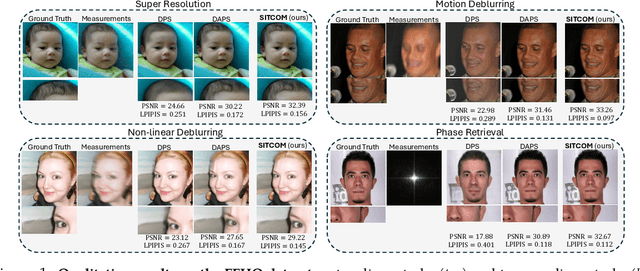

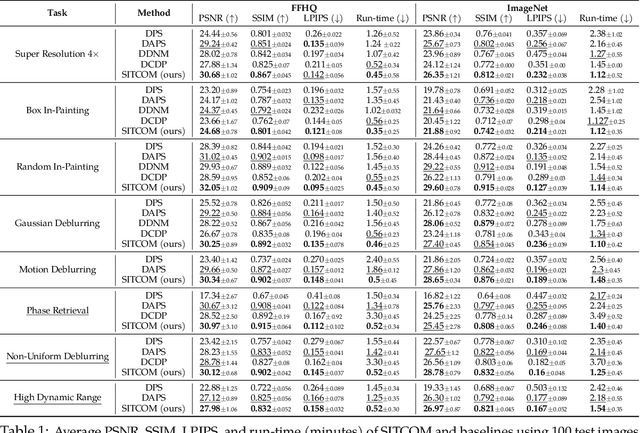

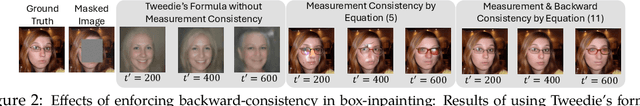

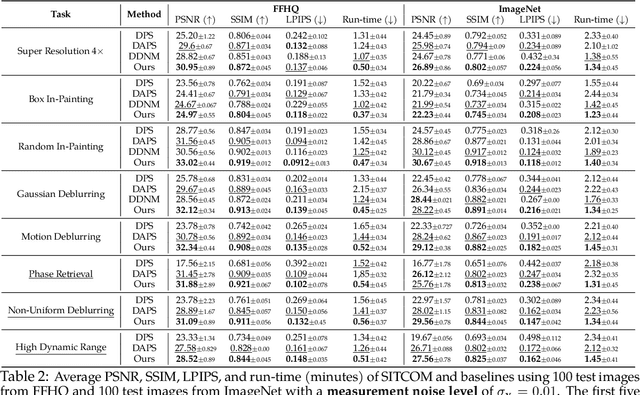

Abstract:Diffusion models (DMs) are a class of generative models that allow sampling from a distribution learned over a training set. When applied to solving inverse imaging problems (IPs), the reverse sampling steps of DMs are typically modified to approximately sample from a measurement-conditioned distribution in the image space. However, these modifications may be unsuitable for certain settings (such as in the presence of measurement noise) and non-linear tasks, as they often struggle to correct errors from earlier sampling steps and generally require a large number of optimization and/or sampling steps. To address these challenges, we state three conditions for achieving measurement-consistent diffusion trajectories. Building on these conditions, we propose a new optimization-based sampling method that not only enforces the standard data manifold measurement consistency and forward diffusion consistency, as seen in previous studies, but also incorporates backward diffusion consistency that maintains a diffusion trajectory by optimizing over the input of the pre-trained model at every sampling step. By enforcing these conditions, either implicitly or explicitly, our sampler requires significantly fewer reverse steps. Therefore, we refer to our accelerated method as Step-wise Triple-Consistent Sampling (SITCOM). Compared to existing state-of-the-art baseline methods, under different levels of measurement noise, our extensive experiments across five linear and three non-linear image restoration tasks demonstrate that SITCOM achieves competitive or superior results in terms of standard image similarity metrics while requiring a significantly reduced run-time across all considered tasks.

Analysis of Deep Image Prior and Exploiting Self-Guidance for Image Reconstruction

Feb 08, 2024

Abstract:The ability of deep image prior (DIP) to recover high-quality images from incomplete or corrupted measurements has made it popular in inverse problems in image restoration and medical imaging including magnetic resonance imaging (MRI). However, conventional DIP suffers from severe overfitting and spectral bias effects. In this work, we first provide an analysis of how DIP recovers information from undersampled imaging measurements by analyzing the training dynamics of the underlying networks in the kernel regime for different architectures. This study sheds light on important underlying properties for DIP-based recovery. Current research suggests that incorporating a reference image as network input can enhance DIP's performance in image reconstruction compared to using random inputs. However, obtaining suitable reference images requires supervision, and raises practical difficulties. In an attempt to overcome this obstacle, we further introduce a self-driven reconstruction process that concurrently optimizes both the network weights and the input while eliminating the need for training data. Our method incorporates a novel denoiser regularization term which enables robust and stable joint estimation of both the network input and reconstructed image. We demonstrate that our self-guided method surpasses both the original DIP and modern supervised methods in terms of MR image reconstruction performance and outperforms previous DIP-based schemes for image inpainting.

Robust MRI Reconstruction by Smoothed Unrolling (SMUG)

Dec 12, 2023

Abstract:As the popularity of deep learning (DL) in the field of magnetic resonance imaging (MRI) continues to rise, recent research has indicated that DL-based MRI reconstruction models might be excessively sensitive to minor input disturbances, including worst-case additive perturbations. This sensitivity often leads to unstable, aliased images. This raises the question of how to devise DL techniques for MRI reconstruction that can be robust to train-test variations. To address this problem, we propose a novel image reconstruction framework, termed Smoothed Unrolling (SMUG), which advances a deep unrolling-based MRI reconstruction model using a randomized smoothing (RS)-based robust learning approach. RS, which improves the tolerance of a model against input noises, has been widely used in the design of adversarial defense approaches for image classification tasks. Yet, we find that the conventional design that applies RS to the entire DL-based MRI model is ineffective. In this paper, we show that SMUG and its variants address the above issue by customizing the RS process based on the unrolling architecture of a DL-based MRI reconstruction model. Compared to the vanilla RS approach, we show that SMUG improves the robustness of MRI reconstruction with respect to a diverse set of instability sources, including worst-case and random noise perturbations to input measurements, varying measurement sampling rates, and different numbers of unrolling steps. Furthermore, we theoretically analyze the robustness of our method in the presence of perturbations.

Diffusion-based Adversarial Purification for Robust Deep MRI Reconstruction

Sep 11, 2023

Abstract:Deep learning (DL) methods have been extensively employed in magnetic resonance imaging (MRI) reconstruction, demonstrating remarkable performance improvements compared to traditional non-DL methods. However, recent studies have uncovered the susceptibility of these models to carefully engineered adversarial perturbations. In this paper, we tackle this issue by leveraging diffusion models. Specifically, we introduce a defense strategy that enhances the robustness of DL-based MRI reconstruction methods through the utilization of pre-trained diffusion models as adversarial purifiers. Unlike conventional state-of-the-art adversarial defense methods (e.g., adversarial training), our proposed approach eliminates the need to solve a minimax optimization problem to train the image reconstruction model from scratch, and only requires fine-tuning on purified adversarial examples. Our experimental findings underscore the effectiveness of our proposed technique when benchmarked against leading defense methodologies for MRI reconstruction such as adversarial training and randomized smoothing.

SMUG: Towards robust MRI reconstruction by smoothed unrolling

Mar 14, 2023

Abstract:Although deep learning (DL) has gained much popularity for accelerated magnetic resonance imaging (MRI), recent studies have shown that DL-based MRI reconstruction models could be oversensitive to tiny input perturbations (that are called 'adversarial perturbations'), which cause unstable, low-quality reconstructed images. This raises the question of how to design robust DL methods for MRI reconstruction. To address this problem, we propose a novel image reconstruction framework, termed SMOOTHED UNROLLING (SMUG), which advances a deep unrolling-based MRI reconstruction model using a randomized smoothing (RS)-based robust learning operation. RS, which improves the tolerance of a model against input noises, has been widely used in the design of adversarial defense for image classification. Yet, we find that the conventional design that applies RS to the entire DL process is ineffective for MRI reconstruction. We show that SMUG addresses the above issue by customizing the RS operation based on the unrolling architecture of the DL-based MRI reconstruction model. Compared to the vanilla RS approach and several variants of SMUG, we show that SMUG improves the robustness of MRI reconstruction with respect to a diverse set of perturbation sources, including perturbations to the input measurements, different measurement sampling rates, and different unrolling steps. Code for SMUG will be available at https://github.com/LGM70/SMUG.
