Ichiro Takeuchi

University of Maryland College Park, University of Maryland Quantum Materials Center

Quantum Kernel Machine Learning for Autonomous Materials Science

Jan 16, 2026

Abstract: Autonomous materials science, where active learning is used to navigate large compositional phase space, has emerged as a powerful vehicle to rapidly explore new materials. A crucial aspect of autonomous materials science is exploring new materials using as little data as possible. Gaussian process-based active learning allows effective charting of multi-dimensional parameter space with a limited amount of training data, and thus is a common algorithmic choice for autonomous materials science. An integral part of the autonomous workflow is the application of kernel functions for quantifying similarities among measured data points. A recent theoretical breakthrough has shown that quantum kernel models can achieve similar performance with less training data than classical models. This signals the possible advantage of applying quantum kernel machine learning to autonomous materials discovery. In this work, we compare quantum and classical kernels for their utility in sequential phase space navigation for autonomous materials science. Specifically, we compute a quantum kernel and several classical kernels for x-ray diffraction patterns taken from an Fe-Ga-Pd ternary composition spread library. We conduct our study on both IonQ's Aria trapped ion quantum computer hardware and the corresponding classical noisy simulator. We experimentally verify that a quantum kernel model can outperform some classical kernel models. The results highlight the potential of quantum kernel machine learning methods for accelerating materials discovery and suggest complex x-ray diffraction data is a candidate for robust quantum kernel model advantage.

Statistical Test for Saliency Maps of Graph Neural Networks via Selective Inference

May 22, 2025

Abstract: Graph Neural Networks (GNNs) have gained prominence for their ability to process graph-structured data across various domains. However, interpreting GNN decisions remains a significant challenge, leading to the adoption of saliency maps for identifying influential nodes and edges. Despite their utility, the reliability of GNN saliency maps has been questioned, particularly in terms of their robustness to noise. In this study, we propose a statistical testing framework to rigorously evaluate the significance of saliency maps. Our main contribution lies in addressing the inflation of the Type I error rate caused by double-dipping of data, leveraging the framework of Selective Inference. Our method provides statistically valid $p$-values while controlling the Type I error rate, ensuring that identified salient subgraphs contain meaningful information rather than random artifacts. To demonstrate the effectiveness of our method, we conduct experiments on both synthetic and real-world datasets, showing its effectiveness in assessing the reliability of GNN interpretations.

Causal Discovery from Data Assisted by Large Language Models

Mar 18, 2025

Abstract: Knowledge-driven discovery of novel materials necessitates the development of causal models for property emergence. While in the classical physical paradigm the causal relationships are deduced from physical principles or via experiment, the rapid accumulation of observational data necessitates learning causal relationships between dissimilar aspects of materials structure and functionalities based on observations. For this, it is essential to integrate experimental data with prior domain knowledge. Here we demonstrate this approach by combining high-resolution scanning transmission electron microscopy (STEM) data with insights derived from large language models (LLMs). By fine-tuning ChatGPT on domain-specific literature, such as arXiv papers on ferroelectrics, and combining the obtained information with data-driven causal discovery, we construct adjacency matrices for Directed Acyclic Graphs (DAGs) that map the causal relationships between structural, chemical, and polarization degrees of freedom in Sm-doped BiFeO3 (SmBFO). This approach enables us to hypothesize how synthesis conditions influence material properties, particularly the coercive field (E0), and guides experimental validation. The ultimate objective of this work is to develop a unified framework that integrates LLM-driven literature analysis with data-driven discovery, facilitating the precise engineering of ferroelectric materials by establishing clear connections between synthesis conditions and their resulting material properties.

Rapid analysis of point-contact Andreev reflection spectra via machine learning with adaptive data augmentation

Mar 13, 2025

Abstract: Delineating the superconducting order parameters is a pivotal task in investigating superconductivity for probing pairing mechanisms, as well as their symmetry and topology. Point-contact Andreev reflection (PCAR) measurement is a simple yet powerful tool for identifying the order parameters. The PCAR spectra exhibit significant variations depending on the type of the order parameter in a superconductor, including its magnitude ($\mathit{\Delta}$), as well as temperature, interfacial quality, Fermi velocity mismatch, and other factors. The information on the order parameter can be obtained by finding the combination of these parameters, generating a theoretical spectrum that fits a measured experimental spectrum. However, due to the complexity of the spectra and the high dimensionality of parameters, extracting the fitting parameters is often time-consuming and labor-intensive. In this study, we employ a convolutional neural network (CNN) algorithm to create models for rapid and automated analysis of PCAR spectra of various superconductors with different pairing symmetries (conventional $s$-wave, chiral $p_x+ip_y$-wave, and $d_{x^2-y^2}$-wave). The training datasets are generated based on the Blonder-Tinkham-Klapwijk (BTK) theory and further modified and augmented by selectively incorporating noise and peaks according to the bias voltages. This approach not only replicates the experimental spectra but also brings the model's attention to important features within the spectra. The optimized models provide fitting parameters for experimentally measured spectra in less than 100 ms per spectrum. Our approaches and findings pave the way for rapid and automated spectral analysis which will help accelerate research on superconductors with complex order parameters.

Explainable Classifier for Malignant Lymphoma Subtyping via Cell Graph and Image Fusion

Mar 02, 2025

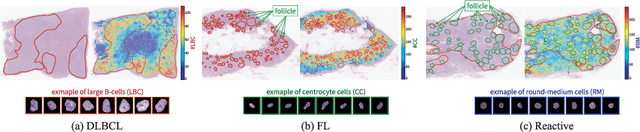

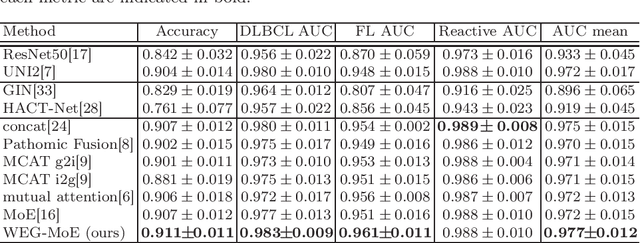

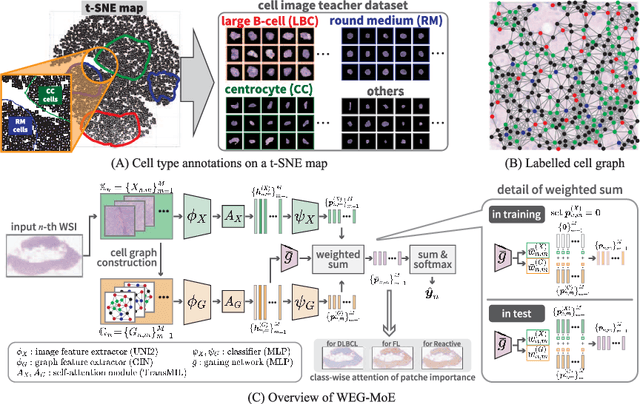

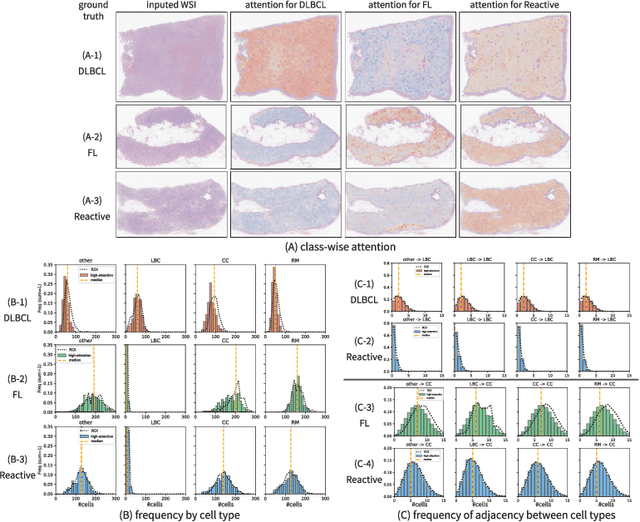

Abstract: Malignant lymphoma subtype classification directly impacts treatment strategies and patient outcomes, necessitating classification models that achieve both high accuracy and sufficient explainability. This study proposes a novel explainable Multi-Instance Learning (MIL) framework that identifies subtype-specific Regions of Interest (ROIs) from Whole Slide Images (WSIs) while integrating cell distribution characteristics and image information. Our framework simultaneously addresses three objectives: (1) indicating appropriate ROIs for each subtype, (2) explaining the frequency and spatial distribution of characteristic cell types, and (3) achieving high-accuracy subtyping by leveraging both image and cell-distribution modalities. The proposed method fuses cell graph and image features extracted from each patch in the WSI using a Mixture-of-Experts (MoE) approach and classifies subtypes within an MIL framework. Experiments on a dataset of 1,233 WSIs demonstrate that our approach achieves state-of-the-art accuracy among ten comparative methods and provides region-level and cell-level explanations that align with a pathologist's perspectives.

Distributionally Robust Active Learning for Gaussian Process Regression

Feb 24, 2025

Abstract: Gaussian process regression (GPR) or kernel ridge regression is a widely used and powerful tool for nonlinear prediction. Therefore, active learning (AL) for GPR, which actively collects data labels to achieve an accurate prediction with fewer data labels, is an important problem. However, existing AL methods do not theoretically guarantee prediction accuracy for the target distribution. Furthermore, as discussed in the distributionally robust learning literature, specifying the target distribution is often difficult. Thus, this paper proposes two AL methods that effectively reduce the worst-case expected error for GPR, which is the worst-case expectation over target distribution candidates. We show an upper bound of the worst-case expected squared error, which suggests that the error can be made arbitrarily small with a finite number of data labels under mild conditions. Finally, we demonstrate the effectiveness of the proposed methods through synthetic and real-world datasets.

Generalized Kernel Inducing Points by Duality Gap for Dataset Distillation

Feb 18, 2025

Abstract: We propose Duality Gap KIP (DGKIP), an extension of the Kernel Inducing Points (KIP) method for dataset distillation. While existing dataset distillation methods often rely on bi-level optimization, DGKIP eliminates the need for such optimization by leveraging duality theory in convex programming. The KIP method has been introduced as a way to avoid bi-level optimization; however, it is limited to the squared loss and does not support other loss functions (e.g., cross-entropy or hinge loss) that are more suitable for classification tasks. DGKIP addresses this limitation by exploiting an upper bound on parameter changes after dataset distillation using the duality gap, enabling its application to a wider range of loss functions. We also characterize theoretical properties of DGKIP by providing upper bounds on the test error and prediction consistency after dataset distillation. Experimental results on standard benchmarks such as MNIST and CIFAR-10 demonstrate that DGKIP retains the efficiency of KIP while offering broader applicability and robust performance.

Statistically Significant $k$NNAD by Selective Inference

Feb 18, 2025

Abstract: In this paper, we investigate the problem of unsupervised anomaly detection using the k-Nearest Neighbor method. The k-Nearest Neighbor Anomaly Detection (kNNAD) is a simple yet effective approach for identifying anomalies across various domains and fields. A critical challenge in anomaly detection, including kNNAD, is appropriately quantifying the reliability of detected anomalies. To address this, we formulate kNNAD as a statistical hypothesis test and quantify the probability of false detection using $p$-values. The main technical challenge lies in performing both anomaly detection and statistical testing on the same data, which hinders correct $p$-value calculation within the conventional statistical testing framework. To resolve this issue, we introduce a statistical hypothesis testing framework called Selective Inference (SI) and propose a method named Statistically Significant kNNAD (Stat-kNNAD). By leveraging SI, the Stat-kNNAD method ensures that detected anomalies are statistically significant with theoretical guarantees. The proposed Stat-kNNAD method is applicable to anomaly detection in both the original feature space and latent feature spaces derived from deep learning models. Through numerical experiments on synthetic data and applications to industrial product anomaly detection, we demonstrate the validity and effectiveness of the Stat-kNNAD method.

Bayesian Optimization for Simultaneous Selection of Machine Learning Algorithms and Hyperparameters on Shared Latent Space

Feb 13, 2025

Abstract: Selecting the optimal combination of a machine learning (ML) algorithm and its hyper-parameters is crucial for the development of high-performance ML systems. However, since the number of combinations of ML algorithms and hyper-parameters is enormous, exhaustive validation requires a significant amount of time. Many existing studies use Bayesian optimization (BO) to accelerate the search. A significant difficulty, however, is that in general each candidate ML algorithm has a different hyper-parameter space. BO-based approaches typically build a surrogate model independently for each hyper-parameter space, so sufficient observations are required for all candidate ML algorithms. In this study, our proposed method embeds different hyper-parameter spaces into a shared latent space, in which a surrogate multi-task model for BO is estimated. This approach can share information from observations of different ML algorithms, so efficient optimization is expected with a smaller total number of observations. We further propose the pre-training of the latent space embedding with an adversarial regularization, and a ranking model for selecting an effective pre-trained embedding for a given target dataset. Our empirical study demonstrates the effectiveness of the proposed method through datasets from OpenML.

Time Series Anomaly Detection in the Frequency Domain with Statistical Reliability

Feb 05, 2025

Abstract: Effective anomaly detection in complex systems requires identifying change points (CPs) in the frequency domain, as abnormalities often arise across multiple frequencies. This paper extends recent advancements in statistically significant CP detection, based on Selective Inference (SI), to the frequency domain. The proposed SI method quantifies the statistical significance of detected CPs in the frequency domain using $p$-values, ensuring that the detected changes reflect genuine structural shifts in the target system. We address two major technical challenges to achieve this. First, we extend the existing SI framework to the frequency domain by appropriately utilizing the properties of the discrete Fourier transform (DFT). Second, we develop an SI method that provides valid $p$-values for CPs where changes occur across multiple frequencies. Experimental results demonstrate that the proposed method reliably identifies genuine CPs with strong statistical guarantees, enabling more accurate root-cause analysis in the frequency domain of complex systems.
