Huiling Zhu

Anti-Byzantine Attacks Enabled Vehicle Selection for Asynchronous Federated Learning in Vehicular Edge Computing

Apr 12, 2024

Abstract:In vehicular edge computing (VEC), asynchronous federated learning (AFL) is used, where the edge receives a local model and updates the global model, effectively reducing the global aggregation latency. Due to the different amounts of local data, computing capabilities and locations of the vehicles, renewing the global model with the same weight is inappropriate. These factors affect the local computation time and upload time of the local model, and a vehicle may also be affected by Byzantine attacks, leading to the deterioration of the vehicle data. However, based on deep reinforcement learning (DRL), we can consider these factors comprehensively to eliminate vehicles with poor performance as much as possible and exclude vehicles that have suffered Byzantine attacks before AFL. At the same time, when aggregating in AFL, we can focus on those vehicles with better performance to improve the accuracy and safety of the system. In this paper, we propose a vehicle selection scheme based on DRL in VEC. In this scheme, the vehicle's mobility, channel conditions with temporal variations, computational resources with temporal variations, different data amounts, transmission channel status of vehicles as well as Byzantine attacks are taken into account. Simulation results show that the proposed scheme effectively improves the safety and accuracy of the global model.
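The idea of updating the global model with a per-vehicle weight, rather than the same weight for every vehicle, can be illustrated with a minimal sketch. It is not the paper's algorithm: the function `async_update`, the `mixing` factor, and the convention that an excluded vehicle gets weight 0 are assumptions made here for illustration only.

```python
def async_update(global_model, local_model, weight, mixing=0.5):
    """Blend one local model into the global model as soon as it arrives.

    `weight` in [0, 1] is a hypothetical per-vehicle score (for example,
    produced by a selection policy); 0 means the vehicle is excluded.
    `mixing` is an assumed base step size, not a value from the paper.
    """
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must lie in [0, 1]")
    step = mixing * weight
    # Convex combination of the current global model and the arriving local model.
    return [(1.0 - step) * g + step * l for g, l in zip(global_model, local_model)]


global_model = [0.0, 0.0]
# A well-performing vehicle contributes with full weight.
global_model = async_update(global_model, [1.0, 1.0], weight=1.0)
# A vehicle suspected of a Byzantine attack is given weight 0 and has no effect.
global_model = async_update(global_model, [10.0, -10.0], weight=0.0)
```

In this sketch the second, excluded update leaves the global model unchanged, which is the behaviour the abstract motivates for attacked vehicles.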

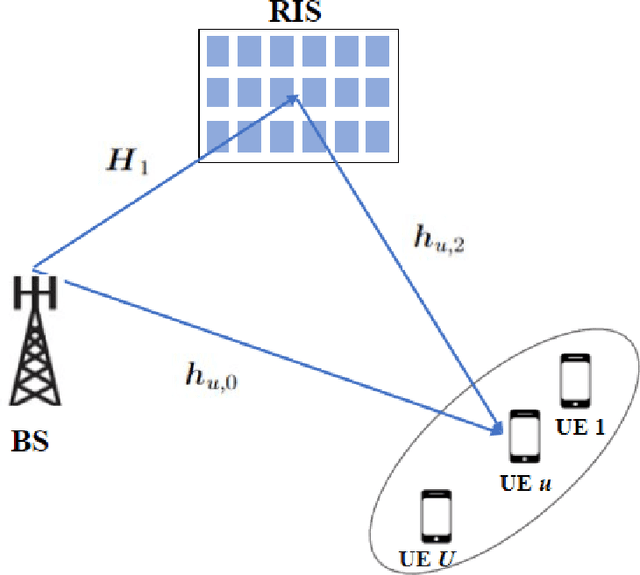

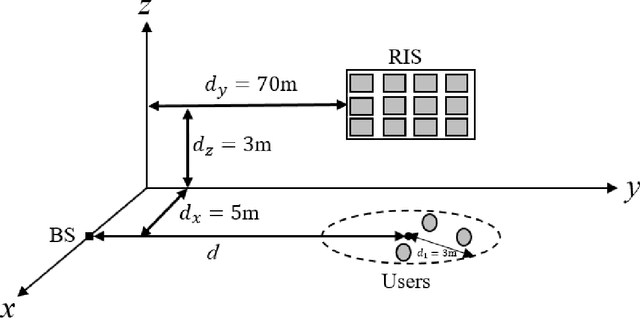

Spectral Efficiency Maximization for Active RIS-aided Cell-Free Massive MIMO Systems with Imperfect CSI

Feb 11, 2024

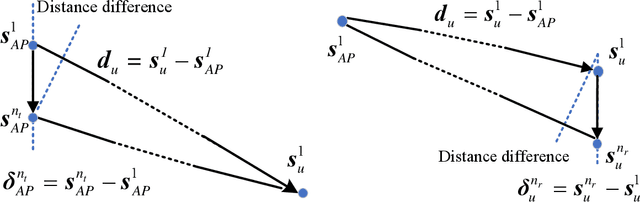

Abstract:A cell-free network merged with active reconfigurable reflecting surfaces (RIS) is investigated in this paper. Based on the imperfect channel state information (CSI), the aggregated channel from the user to the access point (AP) is initially estimated using the linear minimum mean square error (LMMSE) technique. The central processing unit (CPU) then detects uplink data from individual users through the utilization of the maximum ratio combining (MRC) approach, relying on the estimated channel. Then, a closed-form expression for uplink spectral efficiency (SE) is derived which demonstrates its reliance on statistical CSI (S-CSI) alone. The amplitude gain of each active RIS element is derived in a closed-form expression as a function of the number of active RIS elements, the number of users, and the size of each reflecting element. A soft actor-critic (SAC) algorithm is utilized to design the phase shift of the active RIS to maximize the uplink SE. Simulation results emphasize the robustness of the proposed SAC algorithm, showcasing its effectiveness in cell-free networks under the influence of imperfect CSI.
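As a rough illustration of the maximum ratio combining (MRC) step mentioned above, the sketch below weights each received sample by the conjugate of its estimated channel coefficient. The function name `mrc_combine`, the normalization by the total channel gain, and the noiseless toy channel are assumptions for illustration; the paper's system model (active RIS, LMMSE estimation error, multiple users) is not reproduced here.

```python
def mrc_combine(h_est, y):
    """Maximum ratio combining of the received samples `y`.

    Each sample is multiplied by the conjugate of the estimated channel
    coefficient in `h_est`, and the sum is normalized by the total estimated
    channel gain so that the output is a symbol estimate.
    """
    gain = sum(abs(h) ** 2 for h in h_est)
    if gain == 0:
        raise ValueError("estimated channel has zero gain")
    return sum(h.conjugate() * r for h, r in zip(h_est, y)) / gain


# Toy example: one user, three receive branches, no noise, perfect estimate.
h = [1 + 1j, 2 - 1j, 0.5j]
s = 1 - 1j                      # transmitted symbol
y = [hk * s for hk in h]        # received samples
s_hat = mrc_combine(h, y)       # recovers s up to floating-point error
```

With noise or an imperfect channel estimate, `s_hat` would deviate from `s`; the closed-form SE expression in the paper quantifies that effect, which this sketch does not attempt.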

Cooperative Edge Caching Based on Elastic Federated and Multi-Agent Deep Reinforcement Learning in Next-Generation Network

Jan 18, 2024

Abstract:Edge caching is a promising solution for next-generation networks by empowering caching units in small-cell base stations (SBSs), which allows user equipments (UEs) to fetch users' requested contents that have been pre-cached in SBSs. It is crucial for SBSs to predict popular contents accurately through learning while protecting users' personal information. Traditional federated learning (FL) can protect users' privacy, but the data discrepancies among UEs can lead to a degradation in model quality. Therefore, it is necessary to train personalized local models for each UE to predict popular contents accurately. In addition, the cached contents can be shared among adjacent SBSs in next-generation networks, thus caching predicted popular contents in different SBSs may affect the cost to fetch contents. Hence, it is critical to determine where the popular contents are cached cooperatively. To address these issues, we propose a cooperative edge caching scheme based on elastic federated and multi-agent deep reinforcement learning (CEFMR) to optimize the cost in the network. We first propose an elastic FL algorithm to train the personalized model for each UE, where an adversarial autoencoder (AAE) model is adopted for training to improve the prediction accuracy. Then a popular content prediction algorithm is proposed to predict the popular contents for each SBS based on the trained AAE model. Finally, we propose a multi-agent deep reinforcement learning (MADRL) based algorithm to decide where the predicted popular contents are collaboratively cached among SBSs. Our experimental results demonstrate the superiority of our proposed scheme over existing baseline caching schemes.

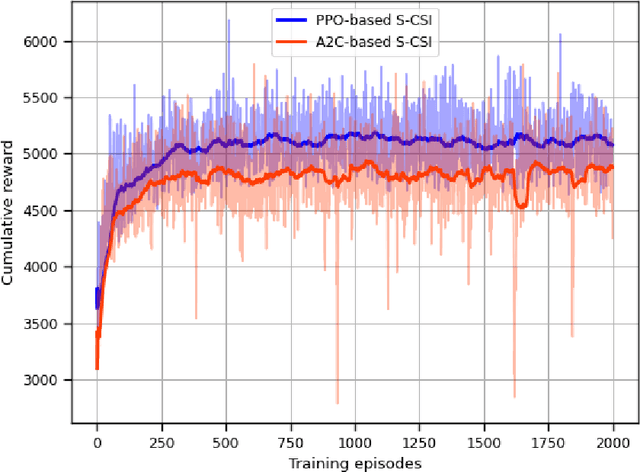

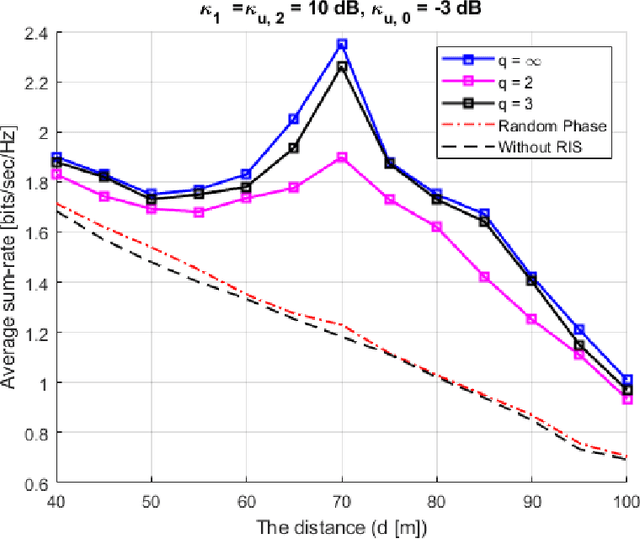

Statistical CSI-based Beamforming for RIS-Aided Multiuser MISO Systems using Deep Reinforcement Learning

Sep 03, 2022

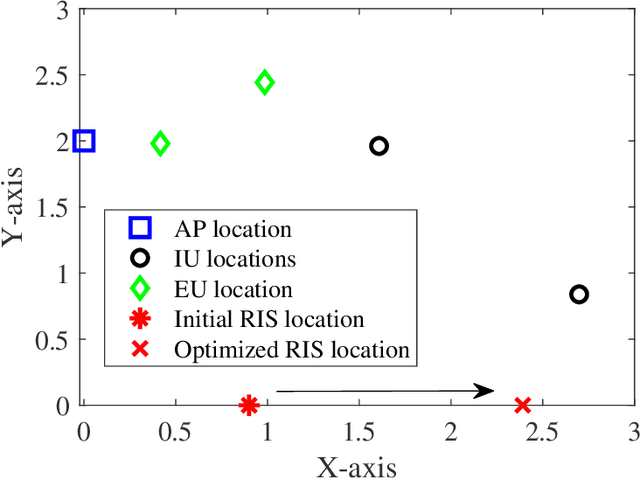

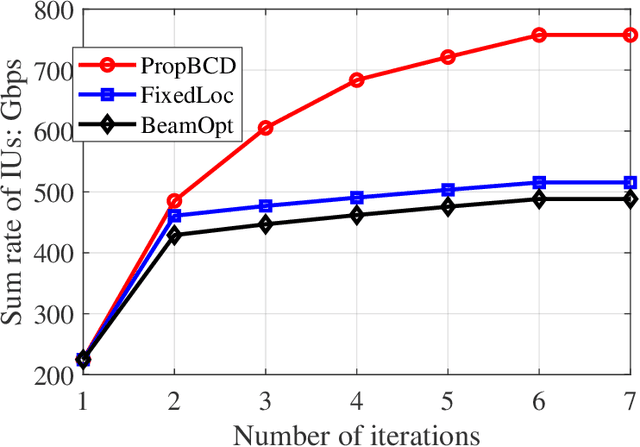

Abstract:The paper presents a joint beamforming algorithm using statistical channel state information (S-CSI) for reconfigurable intelligent surface (RIS) aided multiuser MISO wireless communications. We use S-CSI, which is a long-term average of the cascaded channel, as opposed to the instantaneous CSI utilized in most existing works. Through this method, the overhead of channel estimation is dramatically reduced. We propose a proximal policy optimization (PPO) algorithm, which is a well-known actor-critic based reinforcement learning (RL) algorithm, to solve the optimization problem. To test the efficacy of this algorithm, simulation results are presented along with evaluations of the effect of key system parameters, including the Rician factor and RIS location, on the achievable sum rate of the users.

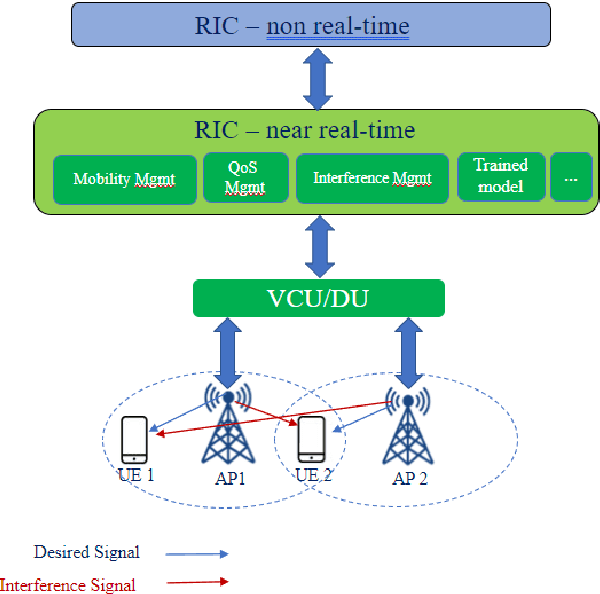

Smart Interference Management xApp using Deep Reinforcement Learning

Apr 12, 2022

Abstract:Interference continues to be a key limiting factor in cellular radio access network (RAN) deployments. Effective, data-driven, self-adapting radio resource management (RRM) solutions are essential for tackling interference, and thus achieving the desired performance levels, particularly at the cell-edge. In future network architectures, the RAN intelligent controller (RIC) running near-real-time applications, called xApps, is considered a potential component to enable RRM. In this paper, a joint sub-band masking and power management scheme based on a deep reinforcement learning (RL) xApp is proposed for smart interference management. The sub-band resource masking problem is formulated as a Markov Decision Process (MDP) that can be solved by employing deep RL to approximate the policy functions as well as to avoid the extremely high computational and storage costs of conventional tabular-based approaches. The developed xApp is scalable in both storage and computation. Simulation results demonstrate advantages of the proposed approach over decentralized baselines in terms of the trade-off between cell-centre and cell-edge user rates, energy efficiency and computational efficiency.

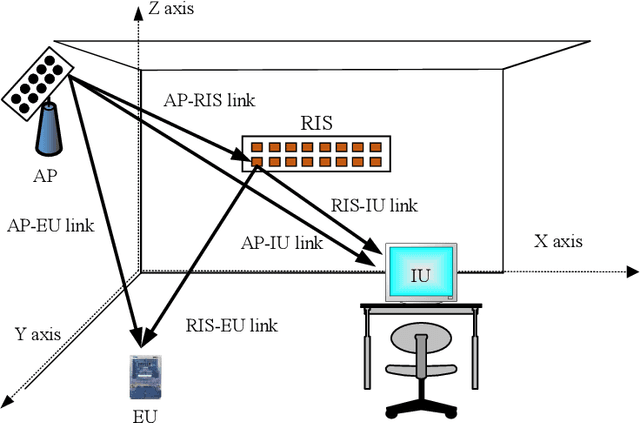

Self-Sustainable Reconfigurable Intelligent Surface Aided Simultaneous Terahertz Information and Power Transfer (STIPT)

Feb 08, 2021

Abstract:This paper proposes a new simultaneous terahertz (THz) information and power transfer (STIPT) system, which is assisted by reconfigurable intelligent surface (RIS) for both the information data and power transmission. We aim to maximize the information users' (IUs') data rate while guaranteeing the energy users' (EUs') and RIS's power harvesting requirements. To solve the formulated non-convex problem, the block coordinate descent (BCD) based algorithm is adopted to alternately optimize the transmit precoding of IUs, RIS's reflecting coefficients, and RIS's coordinate. The Penalty Constrained Convex Approximation (PCCA) Algorithm is proposed to solve the intractable optimization problem of the RIS's coordinate, where the solution's feasibility is guaranteed by the introduced penalties. Simulation results confirm that the proposed BCD algorithm can significantly enhance the performance of STIPT by employing RIS.

Dual Graph Representation Learning

Feb 25, 2020

Abstract:Graph representation learning embeds nodes in large graphs as low-dimensional vectors and is of great benefit to many downstream applications. Most embedding frameworks, however, are inherently transductive and unable to generalize to unseen nodes or learn representations across different graphs. Although inductive approaches can generalize to unseen nodes, they neglect different contexts of nodes and cannot learn node embeddings dually. In this paper, we present a context-aware unsupervised dual encoding framework, CADE, to generate representations of nodes by combining real-time neighborhoods with neighbor-attentioned representation, and preserving extra memory of known nodes. We show that our approach is effective by comparing it with state-of-the-art methods.

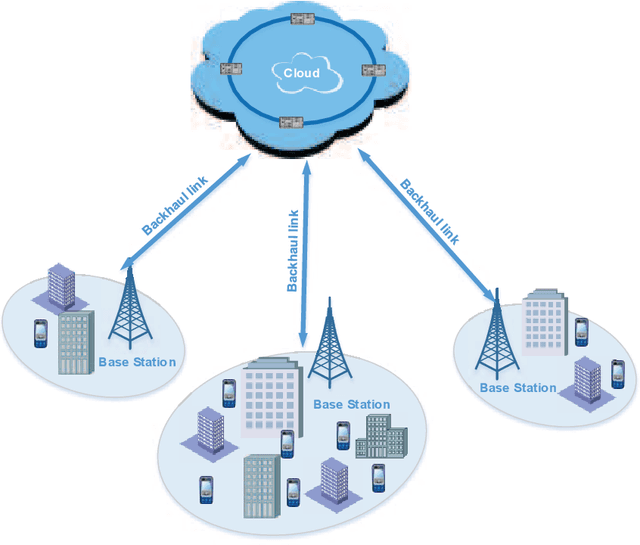

A Machine Learning Framework for Resource Allocation Assisted by Cloud Computing

Dec 16, 2017

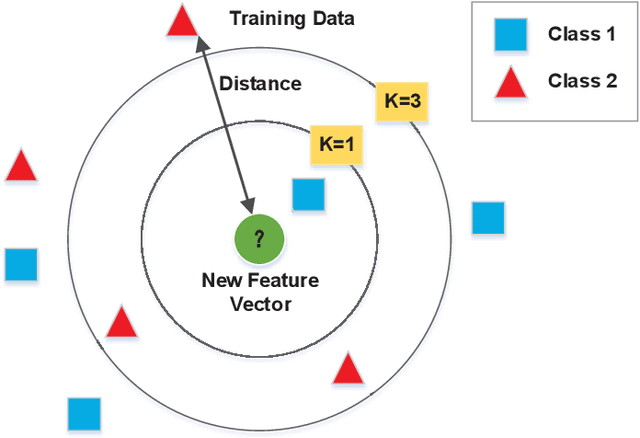

Abstract:Conventionally, resource allocation is formulated as an optimization problem and solved online with instantaneous scenario information. Since most resource allocation problems are not convex, the optimal solutions are very difficult to obtain in real time. Lagrangian relaxation or greedy methods are then often employed, which results in performance loss. Therefore, the conventional methods of resource allocation face great challenges in meeting the ever-increasing QoS requirements of users with scarce radio resources. Assisted by cloud computing, a huge amount of historical data on scenarios can be collected for extracting similarities among scenarios using machine learning. Moreover, optimal or near-optimal solutions of historical scenarios can be searched offline and stored in advance. When the measured data of the current scenario arrives, the current scenario is compared with historical scenarios to find the most similar one. Then, the optimal or near-optimal solution in the most similar historical scenario is adopted to allocate the radio resources for the current scenario. To facilitate the application of this new design philosophy, a machine learning framework is proposed for resource allocation assisted by cloud computing. An example of beam allocation in multi-user massive multiple-input-multiple-output (MIMO) systems shows that the proposed machine-learning based resource allocation outperforms conventional methods.
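The lookup step described above, comparing the current scenario with historical ones and reusing the stored solution of the most similar one, can be sketched as a nearest-neighbour search. This is only an illustration under assumptions: the feature tuples, the Euclidean distance as the similarity measure, and the labels `beam_A`, `beam_B`, `beam_C` are hypothetical and do not come from the paper.

```python
import math


def most_similar_solution(history, current):
    """Return the stored solution of the historical scenario closest to `current`.

    `history` is a list of (feature_vector, solution) pairs computed offline.
    Similarity is measured here by Euclidean distance, which is an assumption;
    any learned similarity measure could be substituted.
    """
    _, solution = min(history, key=lambda item: math.dist(item[0], current))
    return solution


# Hypothetical offline database: scenario features -> precomputed allocation.
history = [
    ((0.1, 0.9), "beam_A"),
    ((0.8, 0.2), "beam_B"),
    ((0.5, 0.5), "beam_C"),
]
chosen = most_similar_solution(history, (0.75, 0.3))  # closest to (0.8, 0.2)
```

The expensive optimization happens offline when `history` is built; the online step is only a lookup, which is the source of the real-time advantage the abstract describes.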

Embedding Knowledge Graphs Based on Transitivity and Antisymmetry of Rules

Apr 19, 2017

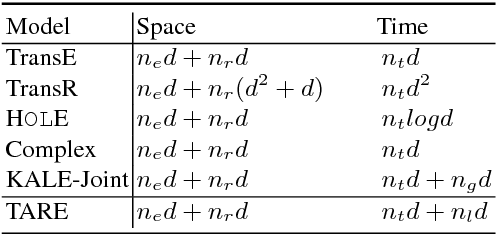

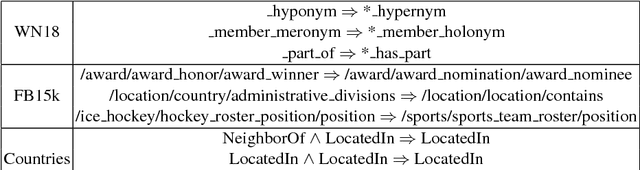

Abstract:Representation learning of knowledge graphs encodes entities and relation types into a continuous low-dimensional vector space, learning embeddings of entities and relation types. Most existing methods only concentrate on knowledge triples, ignoring logic rules which contain rich background knowledge. Although there has been some work aiming at leveraging both knowledge triples and logic rules, it ignores the transitivity and antisymmetry of logic rules. In this paper, we propose a novel approach to learn knowledge representations with entities and ordered relations in knowledge triples and logic rules. The key idea is to integrate knowledge triples and logic rules, and approximately order the relation types in logic rules to utilize the transitivity and antisymmetry of logic rules. All entries of the embeddings of relation types are constrained to be non-negative. We translate the general constrained optimization problem into an unconstrained optimization problem to solve the non-negative matrix factorization. Experimental results show that our model significantly outperforms other baselines on the knowledge graph completion task. This indicates that our model is capable of capturing the transitivity and antisymmetry information, which is significant when learning embeddings of knowledge graphs.
