Jun-Bo Wang

Spatial Bandwidth of Bilateral Near-Field Channels for Linear Large-Scale Antenna Array System

Dec 06, 2024

Abstract: This paper analyzes the spatial bandwidth of line-of-sight (LoS) channels in massive MIMO systems. For linear large-scale antenna arrays (LSAAs) of transceivers placed at random locations in 3D space, a simple but accurate closed-form expression is derived to characterize the spatial bandwidth. An analysis of the LSAA's spatial bandwidth properties is also provided, leading to an approximate expression for the effective degrees of freedom (EDoF) of bilateral near-field channels. Interestingly, this work proves that the EDoF of the LoS channel is approximately maximized when the transmit and receive arrays are coplanar and the receive array is perpendicular to the axis joining the centroids of the two arrays.

Spatial Non-Stationary Dual-Wideband Channel Estimation for XL-MIMO Systems

Jul 08, 2024

Abstract: In this paper, we investigate the channel estimation problem for extremely large-scale multi-input and multi-output (XL-MIMO) systems, considering the spherical wavefront effect, spatially non-stationary (SnS) property, and dual-wideband effects. To accurately characterize the XL-MIMO channel, we first derive a novel spatial-and-frequency-domain channel model for XL-MIMO systems and carefully examine the channel characteristics in the angular-and-delay domain. Based on the obtained channel representation, we formulate XL-MIMO channel estimation as a Bayesian inference problem. To fully exploit the clustered sparsity of angular-and-delay channels and capture the inter-antenna and inter-subcarrier correlations, a Markov random field (MRF)-based hierarchical prior model is adopted. Meanwhile, to facilitate efficient channel reconstruction, we propose a sparse Bayesian learning (SBL) algorithm based on approximate message passing (AMP) with a unitary transformation. Tailored to the MRF-based hierarchical prior model, the message passing equations are reformulated using structured variational inference, belief propagation, and mean-field rules. Finally, simulation results validate the convergence and superiority of the proposed algorithm over existing methods.

Spatially Non-Stationary XL-MIMO Channel Estimation: A Three-Layer Generalized Approximate Message Passing Method

Mar 05, 2024

Abstract: In this paper, the channel estimation problem for extremely large-scale multi-input multi-output (XL-MIMO) systems is investigated, taking into account the spherical wavefront effect and the spatially non-stationary (SnS) property. Because SnS characteristics differ among propagation paths, the concurrent channel estimation of multiple paths becomes intractable. To address this challenge, we propose a two-phase channel estimation scheme. In the first phase, the angles of departure (AoDs) on the user side are estimated, and a carefully designed pilot transmission scheme enables the decomposition of the received signal from different paths. In the second phase, the subchannel estimation corresponding to different paths is formulated as a three-layer Bayesian inference problem. Specifically, the first layer captures block sparsity in the angular domain, the second layer promotes the SnS property in the antenna domain, and the third layer decouples the subchannels from the observed signals. To efficiently facilitate Bayesian inference, we propose a novel three-layer generalized approximate message passing (TL-GAMP) algorithm based on structured variational message passing and belief propagation rules. Simulation results validate the convergence and effectiveness of the proposed algorithm, showcasing its robustness to different channel scenarios.

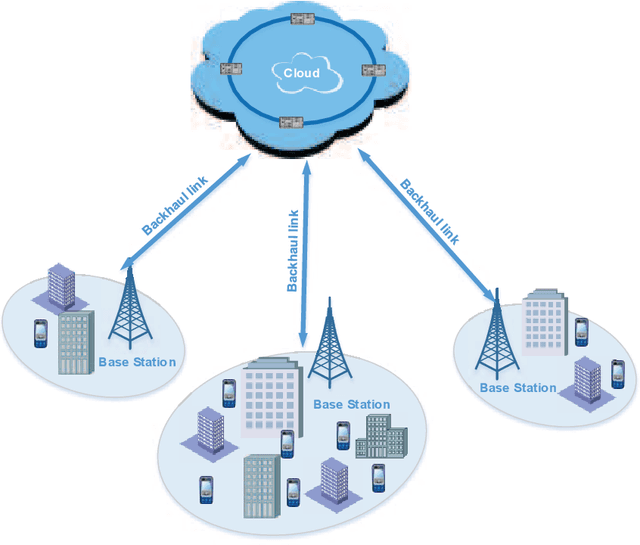

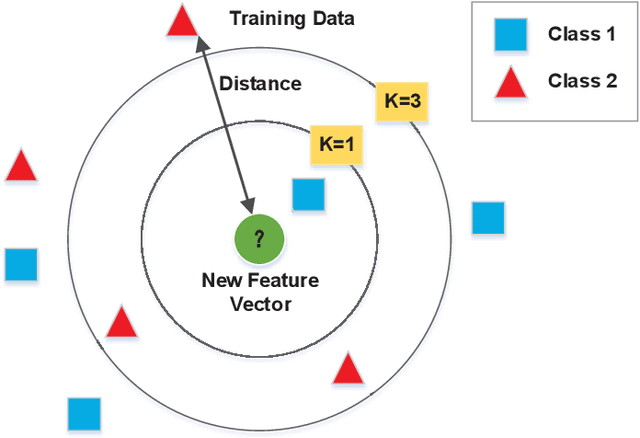

A Machine Learning Framework for Resource Allocation Assisted by Cloud Computing

Dec 16, 2017

Abstract: Conventionally, resource allocation is formulated as an optimization problem and solved online with instantaneous scenario information. Since most resource allocation problems are not convex, the optimal solutions are very difficult to obtain in real time. Lagrangian relaxation or greedy methods are then often employed, which result in performance loss. Therefore, conventional resource allocation methods face great challenges in meeting the ever-increasing QoS requirements of users with scarce radio resources. Assisted by cloud computing, a huge amount of historical data on scenarios can be collected for extracting similarities among scenarios using machine learning. Moreover, optimal or near-optimal solutions of historical scenarios can be searched offline and stored in advance. When the measured data of the current scenario arrives, the current scenario is compared with historical scenarios to find the most similar one. Then, the optimal or near-optimal solution of the most similar historical scenario is adopted to allocate the radio resources for the current scenario. To facilitate the application of this new design philosophy, a machine learning framework is proposed for resource allocation assisted by cloud computing. An example of beam allocation in multi-user massive multiple-input multiple-output (MIMO) systems shows that the proposed machine-learning-based resource allocation outperforms conventional methods.
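The lookup step described in the abstract above (compare the current scenario with stored historical scenarios, then reuse the solution of the most similar one) can be illustrated with a minimal sketch. Everything here is an assumption for illustration only: the feature vectors, the Euclidean distance as the similarity measure, the function name `nearest_scenario`, and the `beam_*` labels are all hypothetical and are not taken from the paper, which may use a different similarity measure or learning method.

```python
import math

def nearest_scenario(current, history):
    """Return the stored solution of the historical scenario closest to `current`.

    `history` is a list of (feature_vector, solution) pairs. Euclidean distance
    is an assumed similarity measure, chosen only to keep the sketch simple.
    """
    _, solution = min(history, key=lambda item: math.dist(item[0], current))
    return solution

# Hypothetical historical scenarios with precomputed (offline) solutions.
history = [
    ((0.1, 0.9), "beam_A"),
    ((0.8, 0.2), "beam_B"),
    ((0.5, 0.5), "beam_C"),
]

# A newly measured scenario reuses the solution of its nearest stored neighbour.
print(nearest_scenario((0.75, 0.25), history))
```

The expensive optimization runs offline when `history` is built, so the online cost reduces to a similarity search over stored scenarios.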
