Junyuan Wang

Exploring Evolutionary Spectral Clustering for Temporal-Smoothed Clustered Cell-Free Networking

Dec 03, 2024

Abstract: Clustered cell-free networking, which dynamically partitions the whole network into nonoverlapping subnetworks, has recently been proposed to mitigate the cell-edge problem in cellular networks. However, prior works focused only on optimizing clustered cell-free networking in static scenarios with fixed users. This could lead to a large number of handovers in a practical dynamic environment with moving users, seriously hindering the implementation of clustered cell-free networking in practice. This paper considers user mobility and aims to simultaneously maximize the sum rate and minimize the number of handovers. By transforming the multi-objective optimization problem into a time-varying graph partitioning problem and exploring evolutionary spectral clustering, a temporal-smoothed clustered cell-free networking algorithm is proposed, which is shown to be effective in smoothing network partitions over time and reducing handovers while maintaining a similar sum rate.
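The temporal-smoothing idea can be illustrated with a minimal sketch. This is not the paper's algorithm: it assumes a generic symmetric affinity matrix with positive degrees, only two clusters, and a simplified smoothing step that blends the raw affinity matrices of two consecutive snapshots with a hypothetical weight `alpha` before a standard normalized spectral bipartition. The helper names (`blend`, `spectral_bipartition`, `handovers`) and the toy matrices are invented for illustration, and a handover is counted here simply as a node whose cluster changes.

```python
import math

def blend(w_now, w_prev, alpha):
    """Temporal smoothing (assumed form): alpha * current + (1 - alpha) * previous."""
    n = len(w_now)
    return [[alpha * w_now[i][j] + (1 - alpha) * w_prev[i][j] for j in range(n)]
            for i in range(n)]

def spectral_bipartition(w, iters=200):
    """Two-way split by the sign of the second eigenvector of D^-1/2 W D^-1/2."""
    n = len(w)
    deg = [sum(row) for row in w]
    # Known top eigenvector of the normalized affinity: D^{1/2} 1, normalized.
    scale = math.sqrt(sum(deg))
    top = [math.sqrt(d) / scale for d in deg]
    # Shift to (M + I) / 2 so all eigenvalues lie in [0, 1]; power iteration
    # then finds the largest algebraic eigenvalue, not the largest magnitude.
    m = [[0.5 * (w[i][j] / math.sqrt(deg[i] * deg[j]) + (1.0 if i == j else 0.0))
          for j in range(n)] for i in range(n)]
    v = [float(i + 1) for i in range(n)]  # deterministic start vector
    for _ in range(iters):
        # Remove the component along the trivial top eigenvector (deflation).
        dot = sum(a * b for a, b in zip(v, top))
        v = [a - dot * b for a, b in zip(v, top)]
        v = [sum(m[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = math.sqrt(sum(a * a for a in v))
        v = [a / norm for a in v]
    return [0 if a >= 0 else 1 for a in v]

def handovers(labels_a, labels_b):
    """Nodes that change cluster, ignoring the arbitrary naming of the two clusters."""
    diff = sum(a != b for a, b in zip(labels_a, labels_b))
    return min(diff, len(labels_a) - diff)

# Toy snapshots (assumed data): pairs {0,1},{2,3} at t-1, pairs {0,2},{1,3} at t.
w_prev = [[0.0, 1.0, 0.1, 0.1], [1.0, 0.0, 0.1, 0.1],
          [0.1, 0.1, 0.0, 1.0], [0.1, 0.1, 1.0, 0.0]]
w_now = [[0.0, 0.1, 1.0, 0.1], [0.1, 0.0, 0.1, 1.0],
         [1.0, 0.1, 0.0, 0.1], [0.1, 1.0, 0.1, 0.0]]

prev_labels = spectral_bipartition(w_prev)
plain_labels = spectral_bipartition(w_now)
smooth_labels = spectral_bipartition(blend(w_now, w_prev, alpha=0.3))
print(handovers(prev_labels, plain_labels), handovers(prev_labels, smooth_labels))
```

In this toy case, clustering the current snapshot alone moves two nodes to a different cluster, while the blended matrix with `alpha=0.3` keeps the previous partition. A smaller `alpha` favors stability over fit to the current snapshot, which mirrors the trade-off between handovers and sum rate described in the abstract.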

Optimizing Clustered Cell-Free Networking for Sum Ergodic Capacity Maximization with Joint Processing Constraint

Nov 18, 2024

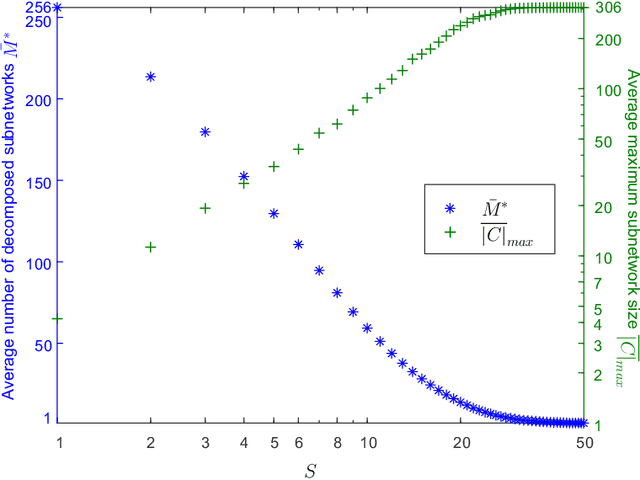

Abstract: Clustered cell-free networking is considered an effective scheme to trade off between the low complexity of current cellular networks and the superior performance of fully cooperative networks. With clustered cell-free networking, the wireless network is decomposed into a number of disjoint subnetworks operating in parallel, with joint processing adopted inside each subnetwork independently for intra-subnetwork interference mitigation. Unlike existing works that aim to maximize the number of subnetworks without considering the limited processing capability of base stations (BSs), this paper investigates the clustered cell-free networking problem with the objective of maximizing the sum ergodic capacity while imposing a limit on the number of user equipments (UEs) in each subnetwork to constrain the joint processing complexity. The combinatorial NP-hard clustered cell-free networking problem is transformed into an integer convex programming problem, which is then solved by the branch-and-bound method. To further reduce the computational complexity, a bisection clustered cell-free networking (BC^2F-Net) algorithm is proposed to decompose the network hierarchically. Simulation results show that, compared to the branch-and-bound based scheme, the proposed BC^2F-Net algorithm significantly reduces the computational complexity yet achieves nearly the same network decomposition result. Moreover, the BC^2F-Net algorithm achieves near-optimal performance and outperforms the state-of-the-art benchmarks with up to 25% capacity gain.

Energy-Efficient Clustered Cell-Free Networking with Access Point Selection

Mar 01, 2024

Abstract: Ultra-densely deploying access points (APs) to support the increasing data traffic would significantly exacerbate the cell-edge problem of traditional cellular networks. Removing the cell boundaries and coordinating all APs for joint transmission can alleviate the cell-edge problem, but this in turn leads to unaffordable system complexity and channel measurement overhead. A new scalable clustered cell-free network architecture has been proposed recently, under which the large-scale network is flexibly partitioned into a set of independent subnetworks operating in parallel. In this paper, we study the energy-efficient clustered cell-free networking problem with AP selection. Specifically, we propose a user-centric ratio-fixed AP-selection based clustering (UCR-ApSel) algorithm to form subnetworks dynamically. Following this, we theoretically analyze the average energy efficiency achieved with the proposed UCR-ApSel scheme and derive an effective closed-form upper bound. Based on the analytical upper-bound expression, the optimal AP-selection ratio that maximizes the average energy efficiency is further derived as a simple explicit function of the total number of APs and the number of subnetworks. Simulation results demonstrate the effectiveness of the derived optimal AP-selection ratio and show that the proposed UCR-ApSel algorithm with the optimal AP-selection ratio achieves around 40% higher energy efficiency than the baselines. The analysis provides important insights into the design and optimization of future ultra-dense wireless communication systems.

Clustered Cell-Free Networking: A Graph Partitioning Approach

Jul 24, 2022

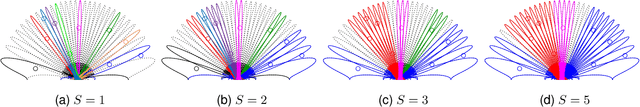

Abstract: By moving to millimeter wave (mmWave) frequencies, base stations (BSs) will be densely deployed to provide seamless coverage in sixth generation (6G) mobile communication systems, which, unfortunately, leads to a severe cell-edge problem. In addition, with massive multiple-input-multiple-output (MIMO) antenna arrays employed at BSs, the beamspace channel is sparse for each user, and thus there is no need to serve all the users in a cell jointly with all the beams therein. Therefore, it is of paramount importance to develop a flexible clustered cell-free networking scheme that can decompose the whole network into a number of weakly interfering small subnetworks operating independently and in parallel. Given a per-user rate constraint for service quality guarantee, this paper aims to maximize the number of decomposed subnetworks so as to reduce the signaling overhead and system complexity as much as possible. By formulating it as a bipartite graph partitioning problem, a rate-constrained network decomposition (RC-NetDecomp) algorithm is proposed, which can smoothly tune the network structure from the current cellular network with simple beam allocation to a fully cooperative network by increasing the required per-user rate. Simulation results demonstrate that the proposed RC-NetDecomp algorithm outperforms existing baselines in terms of average per-user rate, fairness among users and energy efficiency.

A Machine Learning Framework for Resource Allocation Assisted by Cloud Computing

Dec 16, 2017

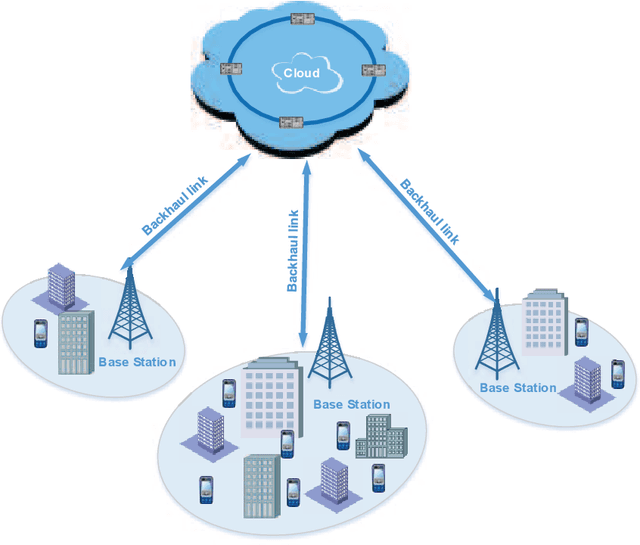

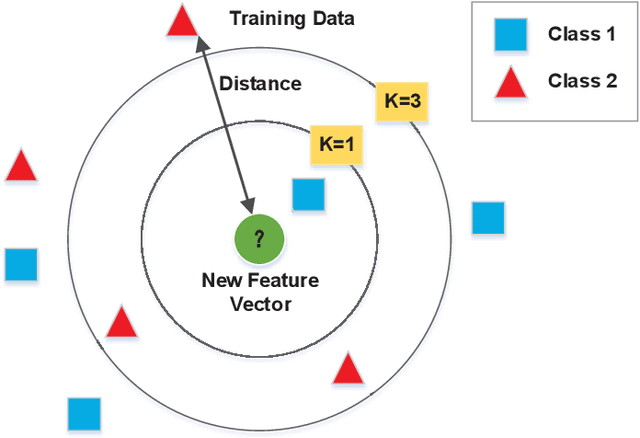

Abstract: Conventionally, resource allocation is formulated as an optimization problem and solved online with instantaneous scenario information. Since most resource allocation problems are not convex, the optimal solutions are very difficult to obtain in real time. Lagrangian relaxation or greedy methods are then often employed, which results in performance loss. Therefore, the conventional methods of resource allocation face great challenges in meeting the ever-increasing QoS requirements of users with scarce radio resources. Assisted by cloud computing, a huge amount of historical data on scenarios can be collected for extracting similarities among scenarios using machine learning. Moreover, optimal or near-optimal solutions of historical scenarios can be searched offline and stored in advance. When the measured data of the current scenario arrives, the current scenario is compared with historical scenarios to find the most similar one. Then, the optimal or near-optimal solution of the most similar historical scenario is adopted to allocate the radio resources for the current scenario. To facilitate the application of this new design philosophy, a machine learning framework is proposed for resource allocation assisted by cloud computing. An example of beam allocation in multi-user massive multiple-input-multiple-output (MIMO) systems shows that the proposed machine-learning based resource allocation outperforms conventional methods.
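The lookup step described in this abstract (match the current scenario to the most similar historical one and reuse its stored solution) might be sketched as a nearest-neighbor search. The sketch below rests on assumptions not stated in the abstract: scenarios are represented as plain feature tuples, similarity is Euclidean distance, and the names `nearest_scenario`, `history`, and the `beam_plan_*` labels are hypothetical. The framework in the paper may use a different feature set or a learned classifier.

```python
import math

def nearest_scenario(history, current):
    """Return the stored solution of the historical scenario closest to `current`.

    `history` is a list of (feature_vector, stored_solution) pairs whose
    solutions are assumed to have been computed offline in advance.
    """
    features, solution = min(history, key=lambda item: math.dist(item[0], current))
    return solution

# Hypothetical historical scenarios with precomputed allocation plans.
history = [
    ((0.1, 0.9), "beam_plan_A"),
    ((0.8, 0.2), "beam_plan_B"),
    ((0.5, 0.5), "beam_plan_C"),
]

# The measured scenario (0.7, 0.3) is closest to (0.8, 0.2).
print(nearest_scenario(history, (0.7, 0.3)))
```

The online cost is a single pass over the stored scenarios, which reflects the motivation in the abstract: the expensive search for good solutions happens offline, and only a similarity lookup runs in real time.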
