Cui Zhang

Distributed Deep Reinforcement Learning Based Gradient Quantization for Federated Learning Enabled Vehicle Edge Computing

Jul 11, 2024

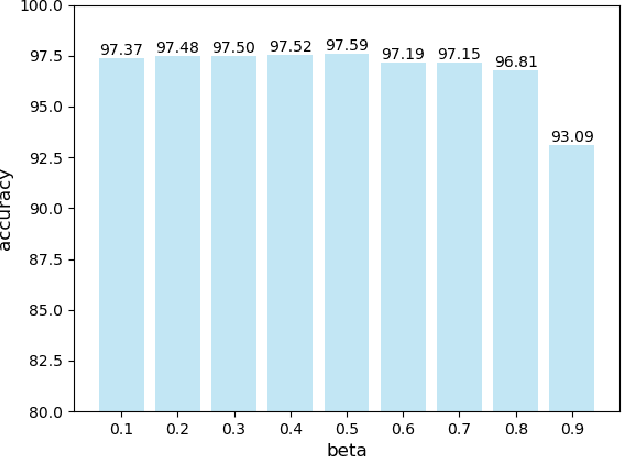

Abstract: Federated learning (FL) can protect the privacy of vehicles in vehicle edge computing (VEC) to a certain extent by sharing the gradients of vehicles' local models instead of their local data. These gradients are usually large for vehicular artificial intelligence (AI) applications, so transmitting them causes large per-round latency. Gradient quantization has been proposed as an effective approach to reduce the per-round latency in FL enabled VEC by compressing gradients and reducing the number of bits, i.e., the quantization level, used to transmit them. The selection of the quantization level and thresholds determines the quantization error, which in turn affects the model accuracy and training time. Therefore, the total training time and quantization error (QE) become two key metrics for FL enabled VEC, and it is critical to optimize them jointly. However, the time-varying channel condition makes this problem more challenging to solve. In this paper, we propose a distributed deep reinforcement learning (DRL)-based quantization level allocation scheme to optimize the long-term reward in terms of the total training time and QE. Extensive simulations identify the optimal weighting factors between the total training time and QE, and demonstrate the feasibility and effectiveness of the proposed scheme.
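The trade-off described in this abstract, fewer bits per gradient entry against a larger quantization error, can be illustrated with a minimal sketch. It assumes a plain uniform quantizer over the range of the gradient and a mean-squared-error definition of QE; the paper's own quantizer, thresholds, and DRL-based level allocation are not reproduced here, and the names `quantize`, `quantization_error`, and `payload_bits` are hypothetical.

```python
import random

def quantize(grad, bits):
    """Map each gradient entry to the nearest of 2**bits evenly spaced values.

    Assumption: a uniform quantizer over [-g_max, g_max]; the paper's scheme may differ.
    """
    g_max = max(abs(g) for g in grad) or 1.0  # avoid a zero range for all-zero gradients
    intervals = 2 ** bits - 1                 # 2**bits levels span this many intervals
    step = 2 * g_max / intervals
    return [round((g + g_max) / step) * step - g_max for g in grad]

def quantization_error(grad, quantized):
    """Mean squared difference between original and quantized entries (assumed QE metric)."""
    return sum((g - q) ** 2 for g, q in zip(grad, quantized)) / len(grad)

def payload_bits(grad, bits):
    """Bits needed to send one quantized gradient, ignoring any header overhead."""
    return len(grad) * bits

random.seed(0)
grad = [random.gauss(0.0, 1.0) for _ in range(1000)]  # synthetic stand-in for a gradient
qe = {bits: quantization_error(grad, quantize(grad, bits)) for bits in (2, 4, 8)}
for bits, err in qe.items():
    print(bits, payload_bits(grad, bits), err)
```

In this sketch a lower quantization level shrinks the payload, and hence the upload time over a given channel, while raising the error, which is the tension a level-allocation policy has to balance.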

Anti-Byzantine Attacks Enabled Vehicle Selection for Asynchronous Federated Learning in Vehicular Edge Computing

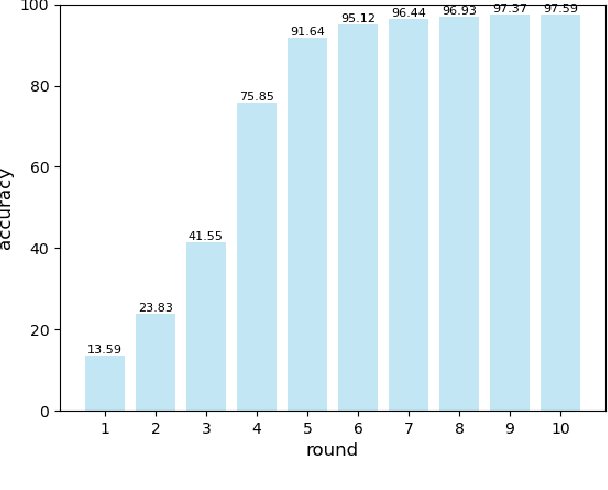

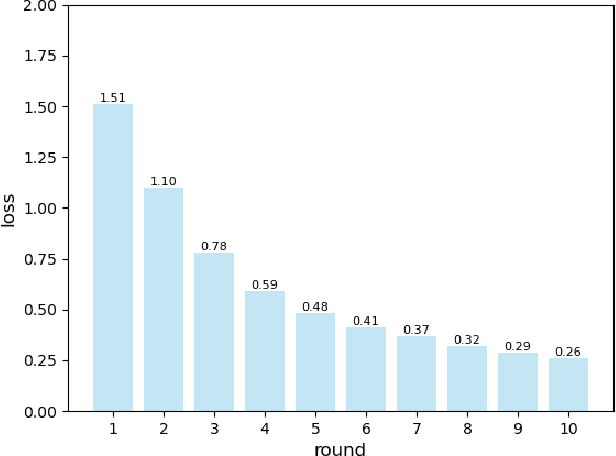

Apr 12, 2024

Abstract: In vehicle edge computing (VEC), asynchronous federated learning (AFL) is used, where the edge receives a local model and updates the global model, effectively reducing the global aggregation latency. Because vehicles differ in their amounts of local data, computing capabilities, and locations, updating the global model with the same weight for every vehicle is inappropriate. These factors affect the local computation time and the upload time of the local model, and a vehicle may also be affected by Byzantine attacks, leading to the deterioration of the vehicle data. However, based on deep reinforcement learning (DRL), we can consider these factors comprehensively to eliminate vehicles with poor performance as much as possible and exclude vehicles that have suffered Byzantine attacks before AFL. At the same time, when aggregating in AFL, we can focus on the vehicles with better performance to improve the accuracy and safety of the system. In this paper, we propose a vehicle selection scheme based on DRL in VEC. The scheme takes into account vehicle mobility, time-varying channel conditions, time-varying computational resources, different data amounts, the transmission channel status of vehicles, and Byzantine attacks. Simulation results show that the proposed scheme effectively improves the safety and accuracy of the global model.

Asynchronous Federated Learning for Edge-assisted Vehicular Networks

Aug 03, 2022

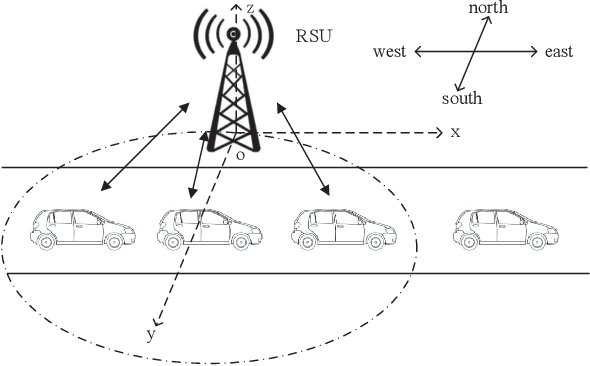

Abstract: Vehicular networks enable vehicles to support real-time vehicular applications through training on data. Due to their limited computing capability, vehicles usually transmit data to a road side unit (RSU) at the network edge for processing. However, vehicles are usually reluctant to share data with each other due to privacy concerns. In traditional federated learning (FL), vehicles train on their data locally to obtain a local model and then upload the local model to the RSU to update the global model, so data privacy can be protected by sharing model parameters instead of data. Traditional FL updates the global model synchronously, i.e., the RSU needs to wait for all vehicles to upload their models before updating the global model. However, vehicles may often drive out of the coverage of the RSU before they obtain their local models through training, which reduces the accuracy of the global model. It is necessary to propose an asynchronous federated learning (AFL) scheme to solve this problem, where the RSU updates the global model once it receives a local model from a vehicle. However, the amount of data, computing capability, and vehicle mobility may affect the accuracy of the global model. In this paper, we jointly consider the amount of data, computing capability, and vehicle mobility to design an AFL scheme that improves the accuracy of the global model. Extensive simulation experiments have demonstrated that our scheme outperforms the FL scheme.
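The asynchronous update described in this abstract, where the RSU refreshes the global model as soon as any single local model arrives, can be sketched as follows. The convex mixing rule and the fixed weight `beta` are assumptions chosen for illustration; the abstract says the scheme accounts for data amount, computing capability, and mobility but does not give the weighting, and `async_update` is a hypothetical name.

```python
def async_update(global_model, local_model, beta):
    """Blend one arriving local model into the global model.

    Assumption: a convex combination with mixing weight beta in [0, 1].
    The paper's actual weighting is not stated in the abstract.
    """
    return [(1.0 - beta) * g + beta * l for g, l in zip(global_model, local_model)]

# The RSU applies an update per arrival instead of waiting for every vehicle.
global_model = [0.0, 0.0]
arrivals = [
    ("vehicle_a", [1.0, 2.0]),  # finishes local training first
    ("vehicle_b", [3.0, 0.0]),  # arrives later
]
for name, local_model in arrivals:
    global_model = async_update(global_model, local_model, beta=0.5)
    print(name, global_model)
```

With a fixed `beta`, later arrivals dominate earlier ones; a scheme like the one in the abstract would instead choose the weight per vehicle.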

Towards V2I Age-aware Fairness Access: A DQN Based Intelligent Vehicular Node Training and Test Method

Aug 02, 2022

Abstract: Vehicles on the road frequently exchange data with a base station (BS) through vehicle to infrastructure (V2I) communications to ensure the normal use of vehicular applications, where the IEEE 802.11 distributed coordination function (DCF) is employed to allocate a minimum contention window (MCW) for channel access. Each vehicle may change its MCW to gain more access opportunities at the expense of others, which results in unfair communication performance. Moreover, the key access parameter, the MCW, is private information that each vehicle is unwilling to share with other vehicles. In this uncertain setting, where age of information (AoI) is an important communication metric for measuring the freshness of data, we design an intelligent vehicular node that learns the dynamic environment and predicts the optimal MCW with which it can achieve age fairness. To allocate the optimal MCW for the vehicular node, we employ a learning algorithm that makes a desirable decision by learning from replayed history data. In particular, the algorithm is proposed by extending the traditional DQN training and testing method. Finally, a comparison with other methods shows that the proposed DQN method can significantly improve the age fairness of the intelligent node.

Mobility-Aware Cooperative Caching in Vehicular Edge Computing Based on Asynchronous Federated and Deep Reinforcement Learning

Aug 02, 2022

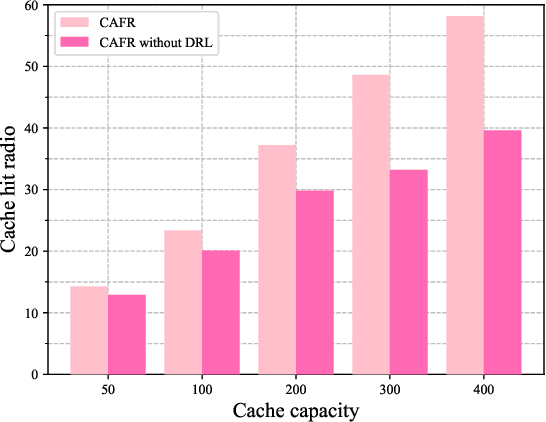

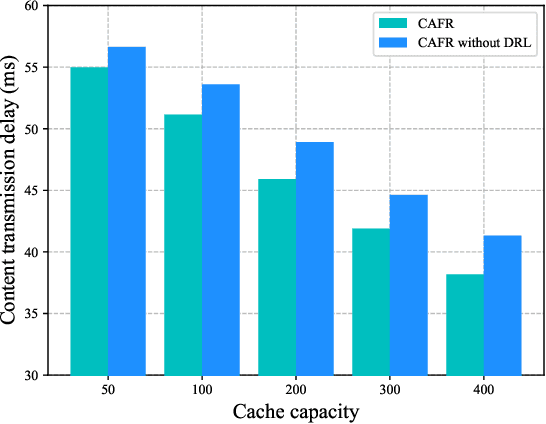

Abstract: Vehicular edge computing (VEC) can cache contents in different RSUs at the network edge to support real-time vehicular applications. In VEC, owing to the high mobility of vehicles, it is necessary to cache user data in advance and learn the most popular and interesting contents for vehicular users. Since user data usually contains private information, users are reluctant to share their data with others. To solve this problem, traditional federated learning (FL) updates the global model synchronously by aggregating all users' local models, which protects users' privacy. However, vehicles may frequently drive out of the coverage area of the VEC before they complete their local model training, so the local models cannot be uploaded as expected, which reduces the accuracy of the global model. In addition, the caching capacity of the local RSU is limited and the popular contents are diverse, so the size of the predicted popular contents usually exceeds the cache capacity of the local RSU. Hence, the VEC should cache the predicted popular contents in different RSUs while considering the content transmission delay. In this paper, we consider the mobility of vehicles and propose a cooperative Caching scheme in the VEC based on Asynchronous Federated and deep Reinforcement learning (CAFR). We first propose an asynchronous FL algorithm to obtain an accurate global model, and then propose an algorithm to predict the popular contents based on the global model. In addition, we propose a deep reinforcement learning algorithm to obtain the optimal cooperative caching location for the predicted popular contents in order to optimize the content transmission delay. Extensive experimental results have demonstrated that the CAFR scheme outperforms other baseline caching schemes.
