Asynchronous Federated Learning for Edge-assisted Vehicular Networks

Aug 03, 2022

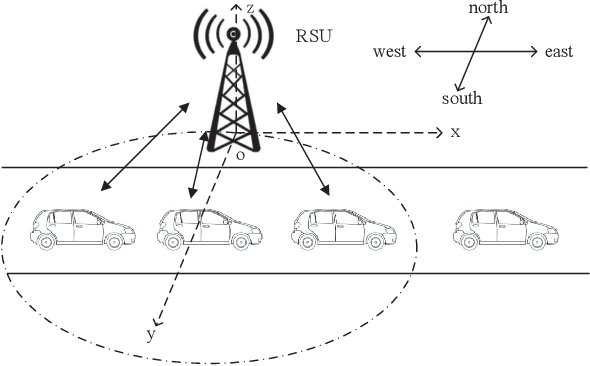

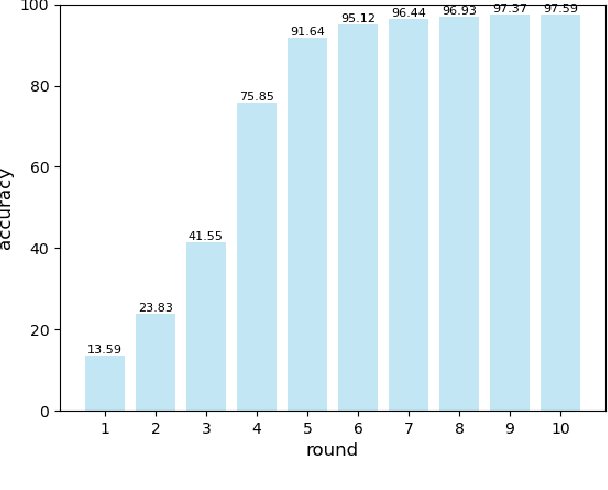

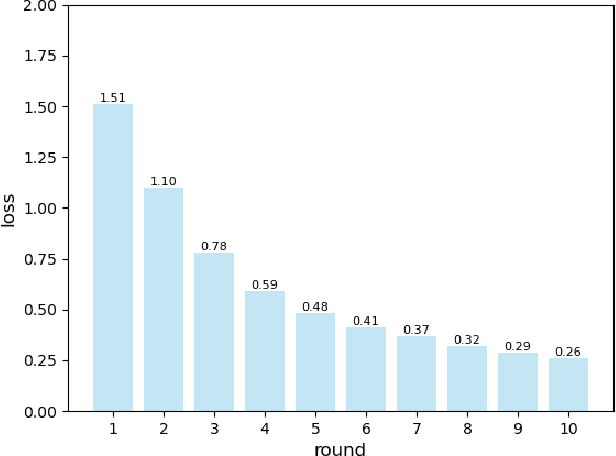

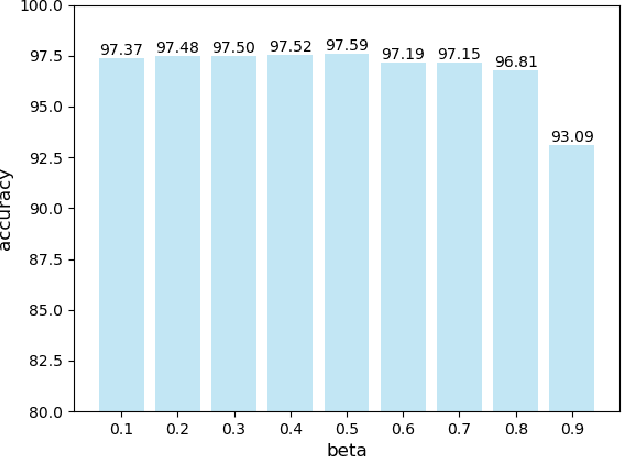

Vehicular networks enable vehicles to support real-time vehicular applications by training on data. Because their computing capability is limited, vehicles usually transmit data to a road side unit (RSU) at the network edge for processing. However, vehicles are often reluctant to share data with each other because of privacy concerns. In traditional federated learning (FL), each vehicle trains on its data locally to obtain a local model and then uploads that model to the RSU to update the global model, so data privacy is protected by sharing model parameters instead of data. Traditional FL updates the global model synchronously, i.e., the RSU must wait for all vehicles to upload their models before it updates the global model. However, vehicles may drive out of the RSU's coverage before they finish training their local models, which reduces the accuracy of the global model. An asynchronous federated learning (AFL) scheme is needed to solve this problem, in which the RSU updates the global model as soon as it receives a local model from a vehicle. However, the amount of data, computing capability, and vehicle mobility may all affect the accuracy of the global model. In this paper, we jointly consider the amount of data, computing capability, and vehicle mobility to design an AFL scheme that improves the accuracy of the global model. Extensive simulation experiments demonstrate that our scheme outperforms the FL scheme.
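
To make the asynchronous update concrete, here is a minimal Python sketch of an RSU that blends each local model into the global model as soon as it arrives. It is an illustration under stated assumptions, not the paper's scheme: the abstract does not give the weighting rule, so `LocalUpdate`, `mixing_weight`, `base_rate`, `reference_samples`, and the use of a `staleness` counter as a rough stand-in for computing delay and mobility are all hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class LocalUpdate:
    """A local model uploaded by one vehicle (all fields are illustrative)."""
    weights: List[float]  # local model parameters
    num_samples: int      # amount of local training data
    staleness: int        # global updates applied since this vehicle fetched the model


def mixing_weight(update: LocalUpdate,
                  base_rate: float = 0.5,
                  reference_samples: int = 100) -> float:
    """Hypothetical heuristic: more data raises the weight, staleness lowers it."""
    data_factor = min(1.0, update.num_samples / reference_samples)
    staleness_factor = 1.0 / (1.0 + update.staleness)
    return base_rate * data_factor * staleness_factor


def async_update(global_weights: List[float], update: LocalUpdate) -> List[float]:
    """Blend a single local model into the global model without waiting for others."""
    beta = mixing_weight(update)
    return [(1.0 - beta) * g + beta * w
            for g, w in zip(global_weights, update.weights)]
```

In this sketch, a fresh update trained on `reference_samples` or more samples moves the global model halfway toward the local model, while an update that is one round stale moves it only a quarter of the way. A synchronous FL round would instead collect every vehicle's model before averaging.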
