Holger Klinck

BIRB: A Generalization Benchmark for Information Retrieval in Bioacoustics

Dec 13, 2023

Abstract: The ability of a machine learning model to cope with differences in training and deployment conditions (e.g., in the presence of distribution shift or the generalization to new classes altogether) is crucial for real-world use cases. However, most empirical work in this area has focused on the image domain with artificial benchmarks constructed to measure individual aspects of generalization. We present BIRB, a complex benchmark centered on the retrieval of bird vocalizations from passively-recorded datasets given focal recordings from a large citizen science corpus available for training. We propose a baseline system for this collection of tasks using representation learning and a nearest-centroid search. Our thorough empirical evaluation and analysis surfaces open research directions, suggesting that BIRB fills the need for a more realistic and complex benchmark to drive progress on robustness to distribution shifts and generalization of ML models.
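As a rough illustration of the nearest-centroid search named in the abstract, the sketch below averages a few query embeddings into a centroid and ranks candidate embeddings by similarity to it. The function names, the use of cosine similarity, and the toy two-dimensional vectors are assumptions made for illustration; the abstract does not specify the embedding model or the distance metric, so this should not be read as the BIRB baseline itself.

```python
import math


def centroid(vectors):
    """Element-wise mean of a non-empty list of equal-length vectors."""
    n = len(vectors)
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / n for i in range(dim)]


def cosine_similarity(a, b):
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_by_centroid(query_embeddings, candidate_embeddings):
    """Return candidate indices sorted from most to least similar
    to the centroid of the query embeddings (hypothetical helper)."""
    c = centroid(query_embeddings)
    scores = [cosine_similarity(c, e) for e in candidate_embeddings]
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)


if __name__ == "__main__":
    # Toy embeddings standing in for learned audio representations.
    queries = [[1.0, 0.0], [0.8, 0.2]]
    candidates = [[0.0, 1.0], [1.0, 0.1], [-1.0, 0.0]]
    print(rank_by_centroid(queries, candidates))
```

In a retrieval setting of this kind, the queries would be embeddings of a few labeled example recordings of one species, and the candidates would be embeddings of windows from the passively recorded audio.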

Feature Embeddings from Large-Scale Acoustic Bird Classifiers Enable Few-Shot Transfer Learning

Jul 12, 2023

Abstract: Automated bioacoustic analysis aids understanding and protection of both marine and terrestrial animals and their habitats across extensive spatiotemporal scales, and typically involves analyzing vast collections of acoustic data. With the advent of deep learning models, classification of important signals from these datasets has markedly improved. These models power critical data analyses for research and decision-making in biodiversity monitoring, animal behaviour studies, and natural resource management. However, deep learning models are often data-hungry and require a significant amount of labeled training data to perform well. While sufficient training data is available for certain taxonomic groups (e.g., common bird species), many classes (such as rare and endangered species, many non-bird taxa, and call types) lack enough data to train a robust model from scratch. This study investigates the utility of feature embeddings extracted from large-scale audio classification models to identify bioacoustic classes other than the ones these models were originally trained on. We evaluate models on diverse datasets, including different bird calls and dialect types, bat calls, marine mammal calls, and amphibian calls. The embeddings extracted from the models trained on bird vocalization data consistently allowed higher quality classification than the embeddings from models trained on general audio datasets. The results of this study indicate that high-quality feature embeddings from large-scale acoustic bird classifiers can be harnessed for few-shot transfer learning, enabling the learning of new classes from a limited quantity of training data. Our findings reveal the potential for efficient analyses of novel bioacoustic tasks, even in scenarios where available training data is limited to a few samples.

Learning Stage-wise GANs for Whistle Extraction in Time-Frequency Spectrograms

Apr 05, 2023

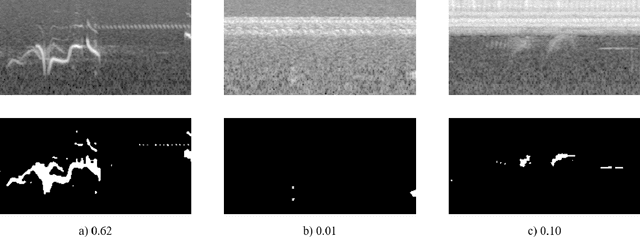

Abstract: Whistle contour extraction aims to derive animal whistles from time-frequency spectrograms as polylines. For toothed whales, whistle extraction results can serve as the basis for analyzing animal abundance, species identity, and social activities. During the last few decades, as long-term recording systems have become affordable, automated whistle extraction algorithms were proposed to process large volumes of recording data. Recently, a deep learning-based method demonstrated superior performance in extracting whistles under varying noise conditions. However, training such networks requires a large amount of labor-intensive annotation, which is not available for many species. To overcome this limitation, we present a framework of stage-wise generative adversarial networks (GANs), which compile new whistle data suitable for deep model training via three stages: generation of background noise in the spectrogram, generation of whistle contours, and generation of whistle signals. By separating the generation of different components in the samples, our framework composes visually promising whistle data and labels even when little expert-annotated data is available. Regardless of the amount of human-annotated data, the proposed data augmentation framework leads to a consistent improvement in performance of the whistle extraction model, with a maximum increase of 1.69 in the whistle extraction mean F1-score. Our stage-wise GAN also surpasses a single GAN in improving whistle extraction models with augmented data. The data and code will be available at https://github.com/Paul-LiPu/CompositeGAN\_WhistleAugment.

Seeing biodiversity: perspectives in machine learning for wildlife conservation

Oct 25, 2021

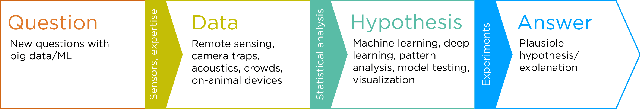

Abstract: Data acquisition in animal ecology is rapidly accelerating due to inexpensive and accessible sensors such as smartphones, drones, satellites, audio recorders and bio-logging devices. These new technologies and the data they generate hold great potential for large-scale environmental monitoring and understanding, but are limited by current data processing approaches which are inefficient in how they ingest, digest, and distill data into relevant information. We argue that machine learning, and especially deep learning approaches, can meet this analytic challenge to enhance our understanding, monitoring capacity, and conservation of wildlife species. Incorporating machine learning into ecological workflows could improve inputs for population and behavior models and eventually lead to integrated hybrid modeling tools, with ecological models acting as constraints for machine learning models and the latter providing data-supported insights. In essence, by combining new machine learning approaches with ecological domain knowledge, animal ecologists can capitalize on the abundance of data generated by modern sensor technologies in order to reliably estimate population abundances, study animal behavior and mitigate human/wildlife conflicts. To succeed, this approach will require close collaboration and cross-disciplinary education between the computer science and animal ecology communities in order to ensure the quality of machine learning approaches and train a new generation of data scientists in ecology and conservation.

Parsing Birdsong with Deep Audio Embeddings

Aug 20, 2021

Abstract: Monitoring of bird populations has played a vital role in conservation efforts and in understanding biodiversity loss. The automation of this process has been facilitated by both sensing technologies, such as passive acoustic monitoring, and accompanying analytical tools, such as deep learning. However, machine learning models frequently have difficulty generalizing to examples not encountered in the training data. In our work, we present a semi-supervised approach to identify characteristic calls and environmental noise. We utilize several methods to learn a latent representation of audio samples, including a convolutional autoencoder and two pre-trained networks, and group the resulting embeddings for a domain expert to identify cluster labels. We show that our approach can improve classification precision and provide insight into the latent structure of environmental acoustic datasets.

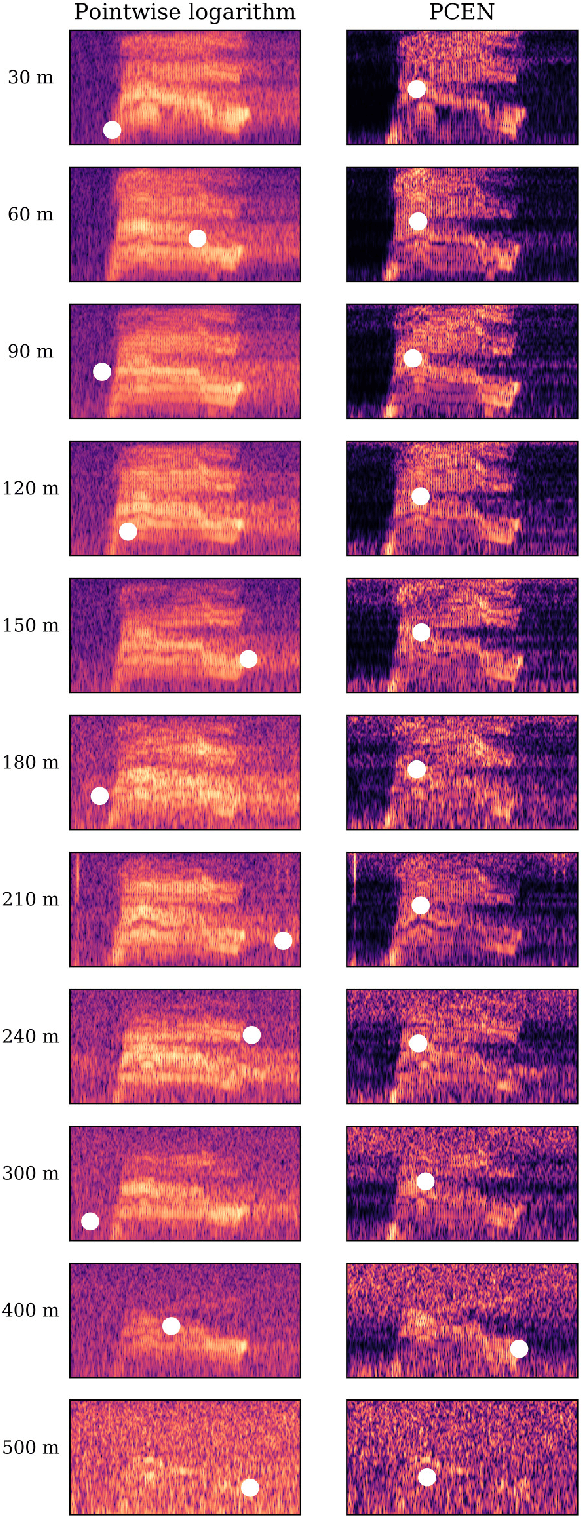

Long-distance Detection of Bioacoustic Events with Per-channel Energy Normalization

Nov 01, 2019

Abstract: This paper proposes to perform unsupervised detection of bioacoustic events by pooling the magnitudes of spectrogram frames after per-channel energy normalization (PCEN). Although PCEN was originally developed for speech recognition, it also has beneficial effects in enhancing animal vocalizations, despite the presence of atmospheric absorption and intermittent noise. We prove that PCEN generalizes logarithm-based spectral flux, yet with a tunable time scale for background noise estimation. In comparison with pointwise logarithm, PCEN reduces false alarm rate by 50x in the near field and 5x in the far field, both on avian and marine bioacoustic datasets. Such improvements come at moderate computational cost and require no human intervention, thus heralding a promising future for PCEN in bioacoustics.
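To make the idea of pooling PCEN-normalized spectrogram frames concrete, here is a minimal sketch using the standard PCEN recursion: a first-order smoother M(t, f) = (1 - s) M(t - 1, f) + s E(t, f), followed by PCEN(t, f) = (E(t, f) / (eps + M(t, f))^alpha + delta)^r - delta^r. The parameter defaults, the initialization of the smoother with the first frame, the choice of mean pooling across channels, and the function names are all assumptions for illustration; the abstract does not state the paper's exact settings.

```python
def pcen(spectrogram, s=0.025, alpha=0.98, delta=2.0, r=0.5, eps=1e-6):
    """Per-channel energy normalization of a spectrogram.

    spectrogram: list of frames, each a list of non-negative
    energies with one value per frequency channel.
    """
    # Assumption: the smoother starts at the first frame's energies.
    smoothed = list(spectrogram[0])
    normalized = []
    for frame in spectrogram:
        # First-order IIR estimate of the slowly varying background level.
        smoothed = [(1.0 - s) * m + s * e for m, e in zip(smoothed, frame)]
        # Adaptive gain control followed by root compression.
        normalized.append([
            (e / (eps + m) ** alpha + delta) ** r - delta ** r
            for e, m in zip(frame, smoothed)
        ])
    return normalized


def detection_function(pcen_frames):
    """Pool each frame across channels (mean pooling is an assumption)."""
    return [sum(frame) / len(frame) for frame in pcen_frames]


if __name__ == "__main__":
    # Synthetic input: flat background with one loud frame at index 30.
    frames = [[1.0, 1.0] for _ in range(50)]
    frames[30] = [10.0, 10.0]
    curve = detection_function(pcen(frames))
    peak = max(range(len(curve)), key=curve.__getitem__)
    print(peak)
```

The smoothing coefficient `s` sets the time scale of the background estimate mentioned in the abstract: smaller values track the background more slowly, so short events stand out more against it.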

GIBBONR: An R package for the detection and classification of acoustic signals using machine learning

Jun 06, 2019

Abstract: 1. The recent improvements in recording technology, data storage and battery life have led to an increased interest in the use of passive acoustic monitoring for a variety of research questions. One of the main obstacles in implementing wide-scale acoustic monitoring programs in terrestrial environments is the lack of user-friendly, open-source programs for processing acoustic data. 2. Here we describe the new, open-source R package GIBBONR, which has functions for classification, detection and visualization of acoustic signals using different readily available machine learning algorithms in the R programming environment. 3. We provide a case study showing how GIBBONR functions can be used in a workflow to classify and detect Bornean gibbon (Hylobates muelleri) calls in long-term recordings from Danum Valley Conservation Area, Sabah, Malaysia. 4. Machine learning is currently one of the most rapidly growing fields, with applications across many disciplines, and our goal is to make commonly used signal processing techniques and machine learning algorithms readily available for ecologists who are interested in incorporating bioacoustics techniques into their research.

Recognizing Birds from Sound - The 2018 BirdCLEF Baseline System

Apr 19, 2018

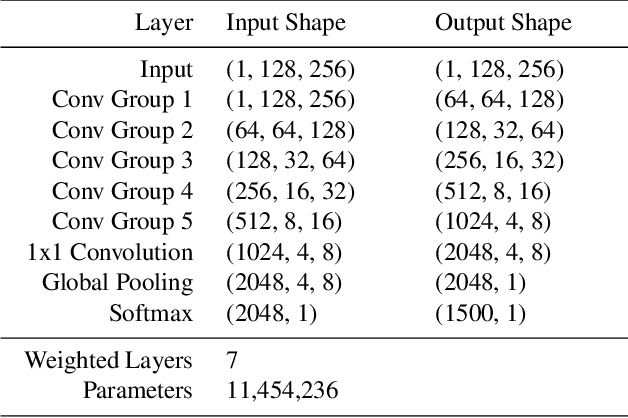

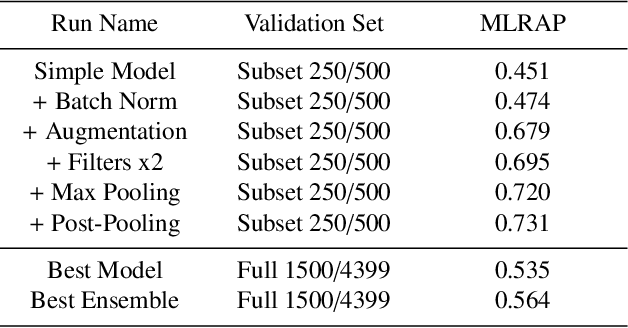

Abstract: Reliable identification of bird species in recorded audio files would be a transformative tool for researchers, conservation biologists, and birders. In recent years, artificial neural networks have greatly improved the detection quality of machine learning systems for bird species recognition. We present a baseline system using convolutional neural networks. We publish our code base as reference for participants in the 2018 LifeCLEF bird identification task and discuss our experiments and potential improvements.
