Heiner Kuhlmann

DogLegs: Robust Proprioceptive State Estimation for Legged Robots Using Multiple Leg-Mounted IMUs

Mar 06, 2025

Abstract:Robust and accurate proprioceptive state estimation of the main body is crucial for legged robots to execute tasks in extreme environments where exteroceptive sensors, such as LiDARs and cameras, may become unreliable. In this paper, we propose DogLegs, a state estimation system for legged robots that fuses the measurements from a body-mounted inertial measurement unit (Body-IMU), joint encoders, and multiple leg-mounted IMUs (Leg-IMU) using an extended Kalman filter (EKF). The filter system contains the error states of all IMU frames. The Leg-IMUs are used to detect foot contact, thereby providing zero velocity measurements to update the state of the Leg-IMU frames. Additionally, we compute the relative position constraints between the Body-IMU and Leg-IMUs by the leg kinematics and use them to update the main body state and reduce the error drift of the individual IMU frames. Field experimental results have shown that our proposed system can achieve better state estimation accuracy compared to the traditional leg odometry method (using only Body-IMU and joint encoders) across different terrains. We make our datasets publicly available to benefit the research community.
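
To illustrate the zero velocity measurement mentioned in the abstract, here is a minimal sketch of a scalar Kalman update with the pseudo-measurement z = 0. It is not the DogLegs implementation: the function name `zero_velocity_update`, the one-dimensional state, and the noise values are assumptions chosen for illustration, and contact detection is assumed to have already flagged the foot as stationary.

```python
def zero_velocity_update(velocity, variance, meas_noise):
    """Scalar Kalman update with pseudo-measurement z = 0 (foot at rest).

    velocity   -- current velocity estimate of one leg IMU axis (m/s)
    variance   -- variance of that estimate
    meas_noise -- assumed variance of the zero velocity pseudo-measurement
    """
    innovation = 0.0 - velocity                 # measured (zero) minus predicted
    gain = variance / (variance + meas_noise)   # Kalman gain for H = 1
    new_velocity = velocity + gain * innovation
    new_variance = (1.0 - gain) * variance
    return new_velocity, new_variance


# Hypothetical numbers: a drifted velocity estimate is pulled toward zero.
v, p = zero_velocity_update(velocity=0.2, variance=0.04, meas_noise=0.01)
print(v, p)
```

A full filter would apply the same update to a multi-dimensional error state with a measurement matrix; the scalar case only shows how the gain trades off the estimate's variance against the pseudo-measurement noise.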

Wheel-GINS: A GNSS/INS Integrated Navigation System with a Wheel-mounted IMU

Jan 06, 2025

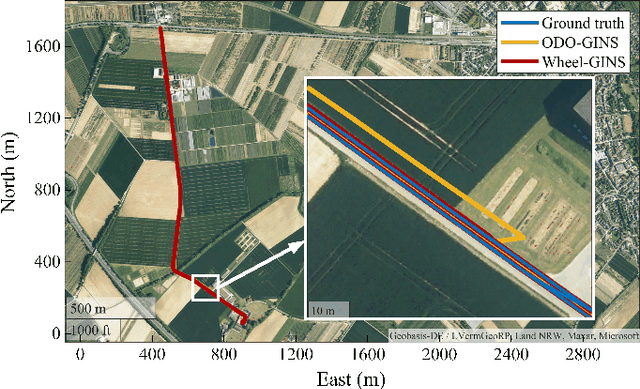

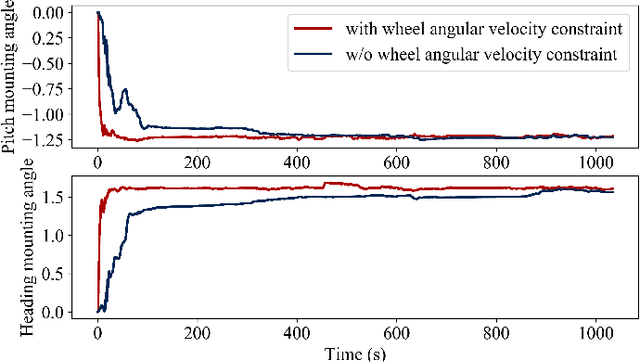

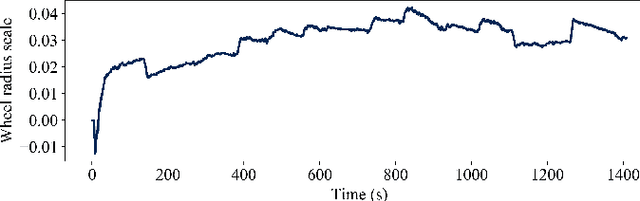

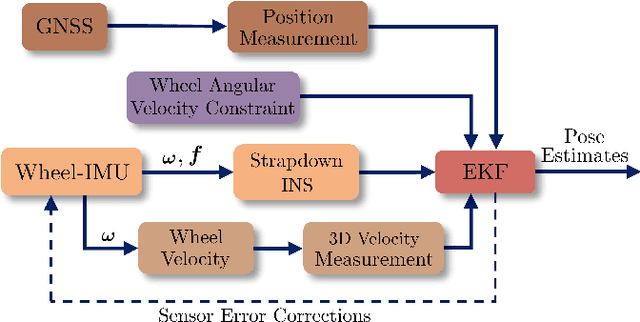

Abstract:A long-term accurate and robust localization system is essential for mobile robots to operate efficiently outdoors. Recent studies have shown the significant advantages of the wheel-mounted inertial measurement unit (Wheel-IMU)-based dead reckoning system. However, it still drifts over extended periods because of the absence of external correction signals. To achieve the goal of long-term accurate localization, we propose Wheel-GINS, a Global Navigation Satellite System (GNSS)/inertial navigation system (INS) integrated navigation system using a Wheel-IMU. Wheel-GINS fuses the GNSS position measurement with the Wheel-IMU via an extended Kalman filter to limit the long-term error drift and provide continuous state estimation when the GNSS signal is blocked. Considering the specificities of the GNSS/Wheel-IMU integration, we conduct detailed modeling and online estimation of the Wheel-IMU installation parameters, including the Wheel-IMU lever arm and mounting angle and the wheel radius error. Experimental results have shown that Wheel-GINS outperforms the traditional GNSS/Odometer/INS integrated navigation system during GNSS outages. At the same time, Wheel-GINS can effectively estimate the Wheel-IMU installation parameters online and, consequently, improve the localization accuracy and practicality of the system. The source code of our implementation is publicly available (https://github.com/i2Nav-WHU/Wheel-GINS).

A Dataset and Benchmark for Shape Completion of Fruits for Agricultural Robotics

Jul 18, 2024

Abstract:As the population is expected to reach 10 billion by 2050, our agricultural production system needs to double its productivity despite a decline in the human workforce in the agricultural sector. Autonomous robotic systems are one promising pathway to increase productivity by taking over labor-intensive manual tasks like fruit picking. To be effective, such systems need to monitor and interact with plants and fruits precisely, which is challenging due to the cluttered nature of agricultural environments causing, for example, strong occlusions. Thus, being able to estimate the complete 3D shapes of objects in the presence of occlusions is crucial for automating operations such as fruit harvesting. In this paper, we propose the first publicly available 3D shape completion dataset for agricultural vision systems. We provide an RGB-D dataset for estimating the 3D shape of fruits. Specifically, our dataset contains RGB-D frames of single sweet peppers in lab conditions but also in a commercial greenhouse. For each fruit, we additionally collected high-precision point clouds that we use as ground truth. For acquiring the ground truth shape, we developed a measuring process that allows us to record data of real sweet pepper plants, both in the lab and in the greenhouse with high precision, and determine the shape of the sensed fruits. We release our dataset, consisting of almost 7000 RGB-D frames belonging to more than 100 different fruits. We provide segmented RGB-D frames, with camera intrinsics to easily obtain colored point clouds, together with the corresponding high-precision, occlusion-free point clouds obtained with a high-precision laser scanner. We additionally enable evaluation of shape completion approaches on a hidden test set through a public challenge on a benchmark server.

System Calibration of a Field Phenotyping Robot with Multiple High-Precision Profile Laser Scanners

Mar 26, 2024

Abstract:The creation of precise and high-resolution crop point clouds in agricultural fields has become a key challenge for high-throughput phenotyping applications. This work implements a novel calibration method to calibrate the laser scanning system of an agricultural field robot consisting of two industrial-grade laser scanners used for high-precision 3D crop point cloud creation. The calibration method optimizes the transformation between the scanner origins and the robot pose by minimizing 3D point omnivariances within the point cloud. Moreover, we present a novel factor graph-based pose estimation method that fuses total station prism measurements with IMU and GNSS heading information for high-precision pose determination during calibration. The root-mean-square error of the distances to a georeferenced ground truth point cloud is 0.8 cm after parameter optimization. Furthermore, our results show the importance of a reference point cloud in the calibration method needed to estimate the vertical translation of the calibration. Challenges arise due to non-static parameters while the robot moves, indicated by systematic deviations from a ground truth terrestrial laser scan.
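
The abstract does not define omnivariance, so the sketch below assumes the common definition: the cube root of the product of the three eigenvalues of a local 3D covariance matrix, which equals the cube root of its determinant. The function names and the sample points are hypothetical, and the code scores a single neighborhood only; it is not the paper's calibration routine.

```python
def covariance_3d(points):
    """Population covariance matrix (3x3 nested lists) of a list of (x, y, z)."""
    n = len(points)
    mean = [sum(p[i] for p in points) / n for i in range(3)]
    cov = [[0.0] * 3 for _ in range(3)]
    for p in points:
        d = [p[i] - mean[i] for i in range(3)]
        for i in range(3):
            for j in range(3):
                cov[i][j] += d[i] * d[j] / n
    return cov


def det3(m):
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def omnivariance(points):
    """Cube root of the covariance determinant (product of its eigenvalues)."""
    det = max(det3(covariance_3d(points)), 0.0)  # clamp tiny negative round-off
    return det ** (1.0 / 3.0)


# Hypothetical neighborhoods: a volumetric blob and a perfectly flat patch.
blob = [(1, 0, 0), (-1, 0, 0), (0, 2, 0), (0, -2, 0), (0, 0, 3), (0, 0, -3)]
flat = [(1, 0, 0), (-1, 0, 0), (0, 2, 0), (0, -2, 0)]
print(omnivariance(blob), omnivariance(flat))
```

Under this definition a thin, well-aligned surface patch scores lower than a smeared one, which is why a misaligned pair of scanners would tend to raise the value that the calibration minimizes.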

LIO-EKF: High Frequency LiDAR-Inertial Odometry using Extended Kalman Filters

Nov 16, 2023

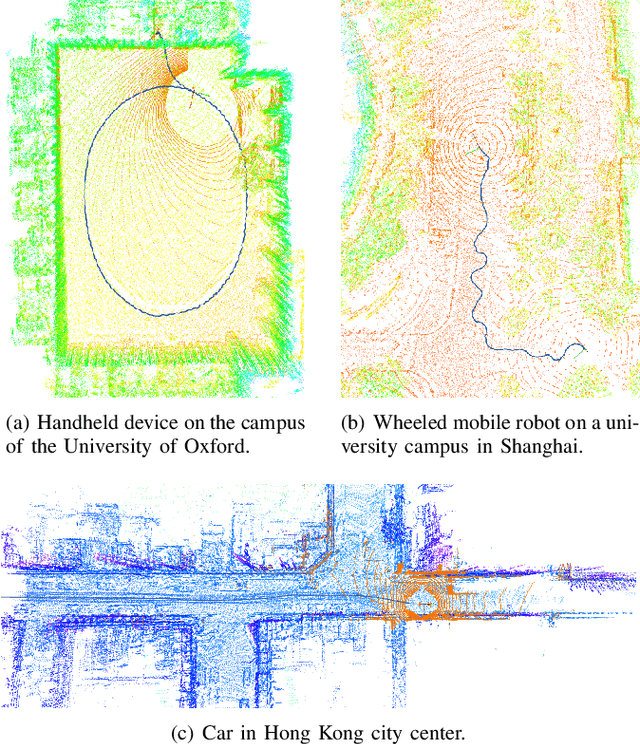

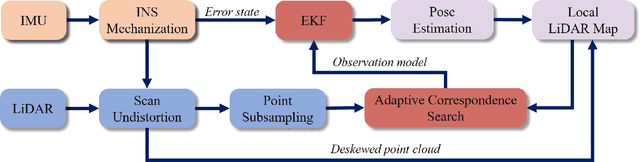

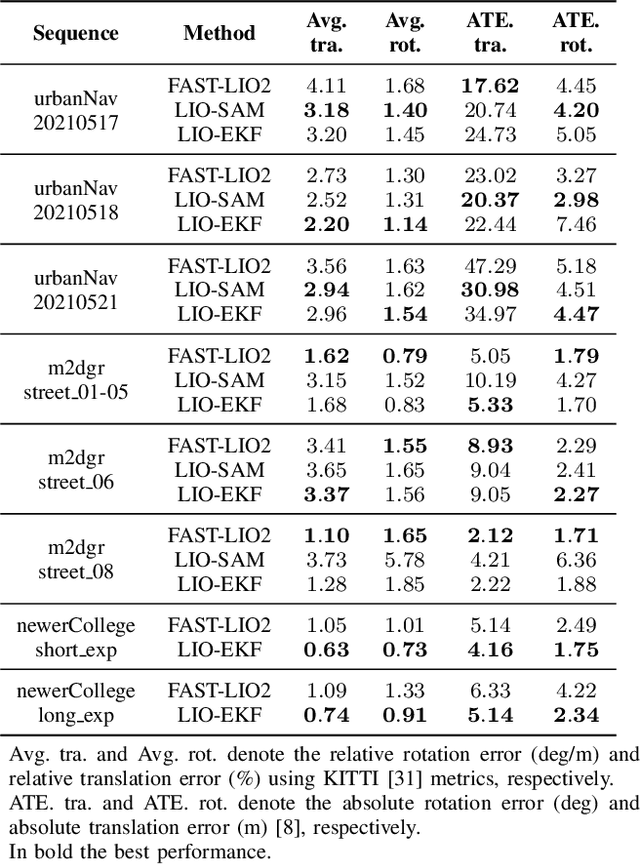

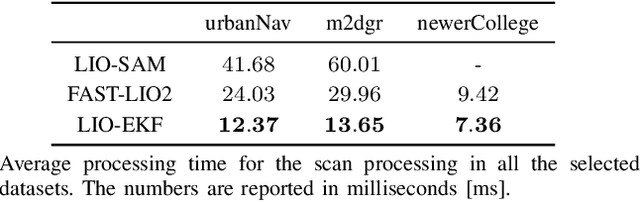

Abstract:Odometry estimation is a key element for every autonomous system requiring navigation in an unknown environment. In modern mobile robots, 3D LiDAR-inertial systems are often used for this task. By fusing LiDAR scans and IMU measurements, these systems can reduce the accumulated drift caused by sequentially registering individual LiDAR scans and provide a robust pose estimate. Although effective, LiDAR-inertial odometry systems require proper parameter tuning to be deployed. In this paper, we propose LIO-EKF, a tightly-coupled LiDAR-inertial odometry system based on point-to-point registration and the classical extended Kalman filter scheme. We propose an adaptive data association that considers the relative pose uncertainty, the map discretization errors, and the LiDAR noise. In this way, we can substantially reduce the parameters to tune for a given type of environment. The experimental evaluation suggests that the proposed system performs on par with the state-of-the-art LiDAR-inertial odometry pipelines, but is significantly faster in computing the odometry.

Field Robot for High-throughput and High-resolution 3D Plant Phenotyping

Nov 05, 2023

Abstract:With the need to feed a growing world population, the efficiency of crop production is of paramount importance. To support breeding and field management, various characteristics of the plant phenotype need to be measured -- a time-consuming process when performed manually. We present a robotic platform equipped with multiple laser and camera sensors for high-throughput, high-resolution in-field plant scanning. We create digital twins of the plants through 3D reconstruction. This allows the estimation of phenotypic traits such as leaf area, leaf angle, and plant height. We validate our system on a real field, where we reconstruct accurate point clouds and meshes of sugar beet, soybean, and maize.

Wheel-SLAM: Simultaneous Localization and Terrain Mapping Using One Wheel-mounted IMU

Nov 06, 2022

Abstract:A reliable pose estimator robust to environmental disturbances is desirable for mobile robots. To this end, inertial measurement units (IMUs) play an important role because they can perceive the full motion state of the vehicle independently. However, they suffer from accumulative error due to inherent noise and bias instability, especially for low-cost sensors. In our previous studies on Wheel-INS \cite{niu2021, wu2021}, we proposed to limit the error drift of the pure inertial navigation system (INS) by mounting an IMU to the wheel of the robot to take advantage of rotation modulation. However, it still drifted over a long period of time due to the lack of external correction signals. In this letter, we propose to exploit the environmental perception ability of Wheel-INS to achieve simultaneous localization and mapping (SLAM) with only one IMU. To be specific, we use the road bank angles (mirrored by the robot roll angles estimated by Wheel-INS) as terrain features to enable the loop closure with a Rao-Blackwellized particle filter. The road bank angle is sampled and stored according to the robot position in the grid maps maintained by the particles. The weights of the particles are updated according to the difference between the currently estimated roll sequence and the terrain map. Field experiments suggest the feasibility of the idea to perform SLAM in Wheel-INS using the robot roll angle estimates. In addition, the positioning accuracy is improved significantly (more than 30%) over Wheel-INS. Source code of our implementation is publicly available (https://github.com/i2Nav-WHU/Wheel-SLAM).

Counting of Grapevine Berries in Images via Semantic Segmentation using Convolutional Neural Networks

Apr 29, 2020

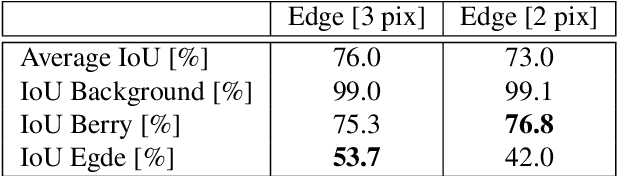

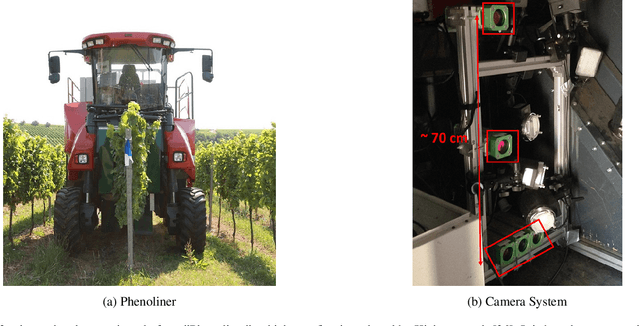

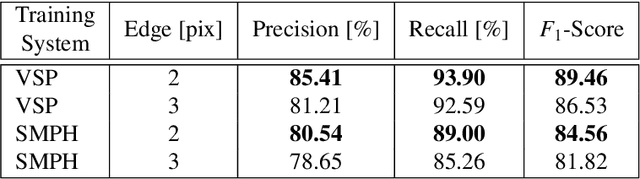

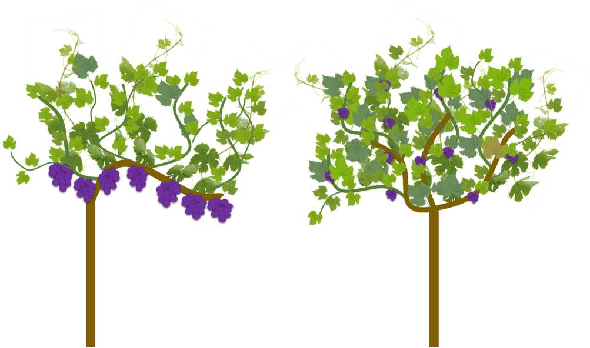

Abstract:The extraction of phenotypic traits is often very time- and labour-intensive. In viticulture especially, investigation is restricted to on-site analysis due to the perennial nature of grapevine. Traditionally, skilled experts examine small samples and extrapolate the results to a whole plot. Different grapevine varieties and training systems, e.g. vertical shoot positioning (VSP) and semi minimal pruned hedges (SMPH), pose different challenges. In this paper we present an objective framework based on automatic image analysis which works on two different training systems. The images are collected semi-automatically by a camera system which is installed in a modified grape harvester. The system produces overlapping images from the sides of the plants. Our framework uses a convolutional neural network to detect single berries in images by performing a semantic segmentation. Each berry is then counted with a connected component algorithm. We compare our results with the Mask-RCNN, a state-of-the-art network for instance segmentation, and with a regression approach for counting. The experiments presented in this paper show that we are able to detect green berries in images despite the different training systems. We achieve an accuracy for the berry detection of 94.0% in the VSP and 85.6% in the SMPH.

Detection of Single Grapevine Berries in Images Using Fully Convolutional Neural Networks

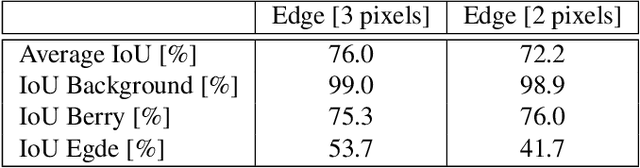

May 01, 2019

Abstract:Yield estimation and forecasting are of special interest in the field of grapevine breeding and viticulture. The number of harvested berries per plant is strongly correlated with the resulting quality. Therefore, early yield forecasting can enable a focused thinning of berries to ensure a high-quality end product. Traditionally, yield estimation is done by extrapolating from a small sample size and by utilizing historic data. Moreover, it needs to be carried out by skilled experts with much experience in this field. Berry detection in images offers a cheap, fast and non-invasive alternative to the otherwise time-consuming and subjective on-site analysis by experts. We apply fully convolutional neural networks on images acquired with the Phenoliner, a field phenotyping platform. We count single berries in images to avoid the error-prone detection of grapevine clusters. Clusters often overlap and can vary a lot in size, which makes their reliable detection difficult. We especially address the detection of white grapes directly in the vineyard. The detection of single berries is formulated as a classification task with three classes, namely 'berry', 'edge' and 'background'. A connected component algorithm is applied to determine the number of berries in one image. We compare the automatically counted number of berries with the manually detected berries in 60 images showing Riesling plants in vertical shoot positioned trellis (VSP) and semi minimal pruned hedges (SMPH). We are able to detect berries correctly within the VSP system with an accuracy of 94.0% and for the SMPH system with 85.6%.
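
The counting step described above can be sketched as follows, assuming the network output is already available as a per-pixel label map. The function name `count_berries`, the single-character labels (`B` for berry, `E` for edge, `.` for background), the 4-connectivity, and the toy map are all assumptions for illustration, not details taken from the paper.

```python
from collections import deque


def count_berries(label_map, berry="B"):
    """Count 4-connected components of berry pixels in a grid of labels."""
    rows, cols = len(label_map), len(label_map[0])
    seen = [[False] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if label_map[r][c] != berry or seen[r][c]:
                continue
            count += 1                      # new, unvisited berry region
            seen[r][c] = True
            queue = deque([(r, c)])
            while queue:                    # breadth-first flood fill
                y, x = queue.popleft()
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if (0 <= ny < rows and 0 <= nx < cols
                            and not seen[ny][nx]
                            and label_map[ny][nx] == berry):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
    return count


# Toy label map: the edge pixels keep neighbouring berries apart.
toy = [
    "BBEBB",
    "BBEBB",
    "EE.EE",
    "..BB.",
]
print(count_berries(toy))
```

In this toy map the separate 'edge' class is what keeps the two upper berries from merging into one component; relabelling those edge pixels as berry would lower the count.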
