Guisong Xia

SegEarth-R1: Geospatial Pixel Reasoning via Large Language Model

Apr 13, 2025

Abstract: Remote sensing has become critical for understanding environmental dynamics, urban planning, and disaster management. However, traditional remote sensing workflows often rely on explicit segmentation or detection methods, which struggle to handle complex, implicit queries that require reasoning over spatial context, domain knowledge, and implicit user intent. Motivated by this, we introduce a new task, i.e., geospatial pixel reasoning, which allows implicit querying and reasoning and generates the mask of the target region. To advance this task, we construct and release the first large-scale benchmark dataset called EarthReason, which comprises 5,434 manually annotated image masks with over 30,000 implicit question-answer pairs. Moreover, we propose SegEarth-R1, a simple yet effective language-guided segmentation baseline that integrates a hierarchical visual encoder, a large language model (LLM) for instruction parsing, and a tailored mask generator for spatial correlation. The design of SegEarth-R1 incorporates domain-specific adaptations, including aggressive visual token compression to handle ultra-high-resolution remote sensing images, a description projection module to fuse language and multi-scale features, and a streamlined mask prediction pipeline that directly queries description embeddings. Extensive experiments demonstrate that SegEarth-R1 achieves state-of-the-art performance on both reasoning and referring segmentation tasks, significantly outperforming traditional and LLM-based segmentation methods. Our data and code will be released at https://github.com/earth-insights/SegEarth-R1.

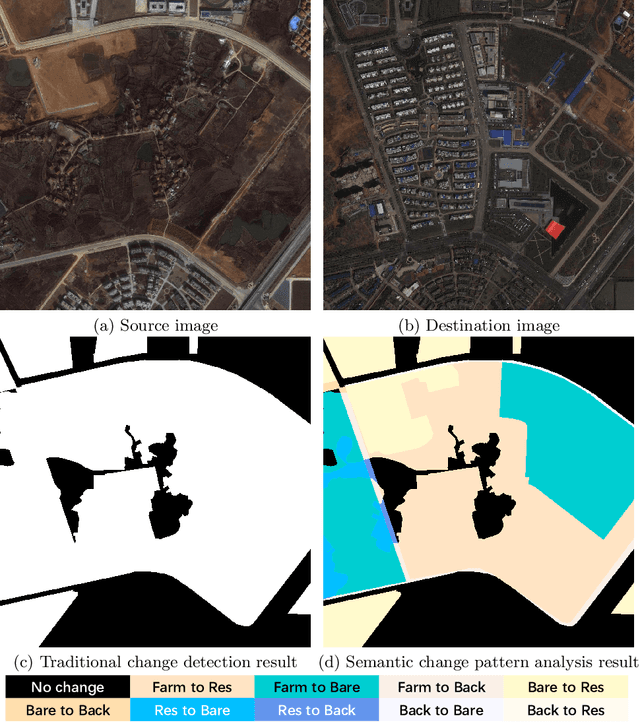

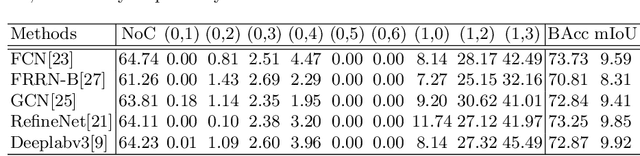

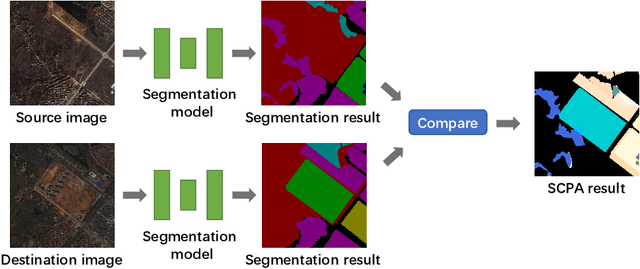

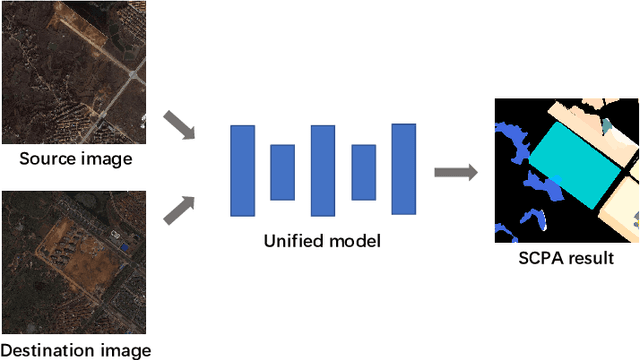

Semantic Change Pattern Analysis

Mar 07, 2020

Abstract: Change detection is an important problem in the vision field, especially for aerial images. However, most works focus on traditional change detection, i.e., where changes happen, without considering the change type, i.e., what changes happen. Although a few works have tried to apply semantic information to traditional change detection, they either give only the label of emerging objects without taking the change type into consideration, or define some kinds of change subjectively without specifying semantic information. To make use of semantic information and analyze change types comprehensively, we propose a new task called semantic change pattern analysis for aerial images. Given a pair of co-registered aerial images, the task requires a result that includes both where changes happen and what those changes are. We then describe the metric adopted for the task, which is clean and interpretable. We further provide the first well-annotated aerial image dataset for this task. Extensive baseline experiments are conducted as a reference for subsequent work. The aim of this work is to explore high-level information based on change detection and to facilitate the development of this field with the publicly available dataset.
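To make the "where and what" output concrete, here is a minimal sketch that assumes each co-registered image has already been reduced to a per-pixel class label map. The function names `change_patterns` and `summarize`, the class names, and the toy grids are hypothetical and chosen only for illustration; this is not the paper's method or metric.

```python
from collections import Counter


def change_patterns(before, after):
    """Return a per-pixel map of (old_class, new_class), or None where unchanged.

    Both inputs are hypothetical label maps (lists of rows) for the same
    co-registered area, so they must have identical shapes.
    """
    if len(before) != len(after) or any(
        len(row_b) != len(row_a) for row_b, row_a in zip(before, after)
    ):
        raise ValueError("label maps must be co-registered and equally sized")
    return [
        [(b, a) if b != a else None for b, a in zip(row_b, row_a)]
        for row_b, row_a in zip(before, after)
    ]


def summarize(patterns):
    """Count how many pixels fall under each change type."""
    return Counter(p for row in patterns for p in row if p is not None)


# Toy 2x3 label maps (illustrative values only).
before = [
    ["grass", "grass", "road"],
    ["grass", "water", "road"],
]
after = [
    ["building", "grass", "road"],
    ["building", "grass", "road"],
]

patterns = change_patterns(before, after)  # "where": non-None cells
summary = summarize(patterns)              # "what": counts per change type
```

Non-`None` cells in `patterns` mark where a change occurred, and each cell's `(old_class, new_class)` pair records what kind of change it is.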

Toward Autonomous Rotation-Aware Unmanned Aerial Grasping

Nov 09, 2018

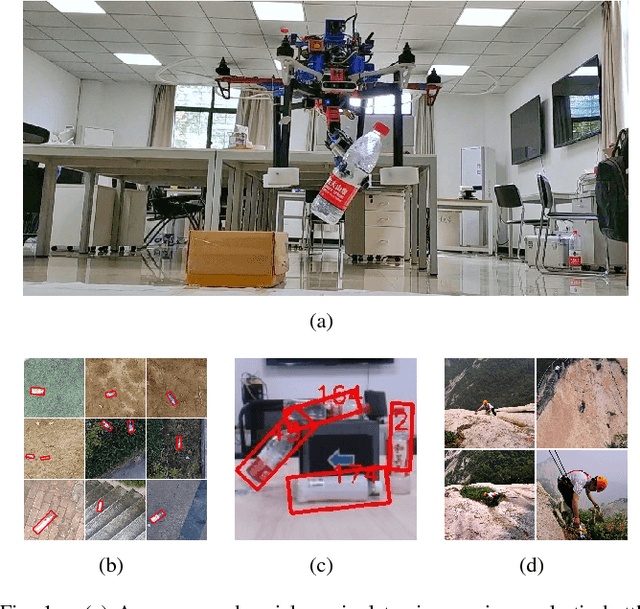

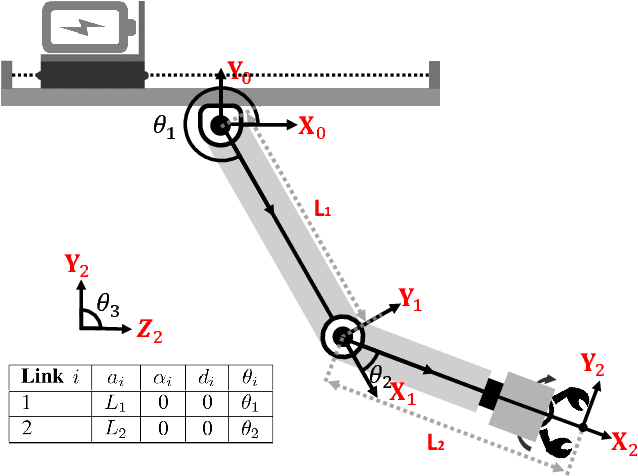

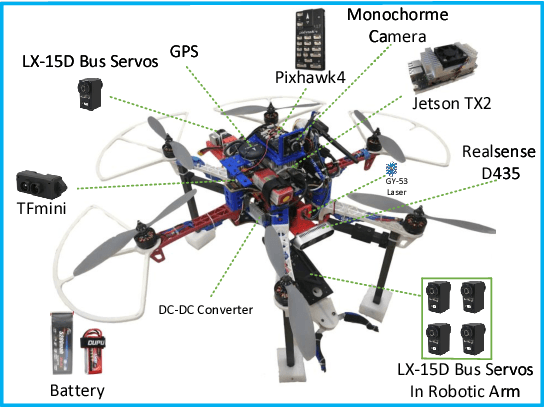

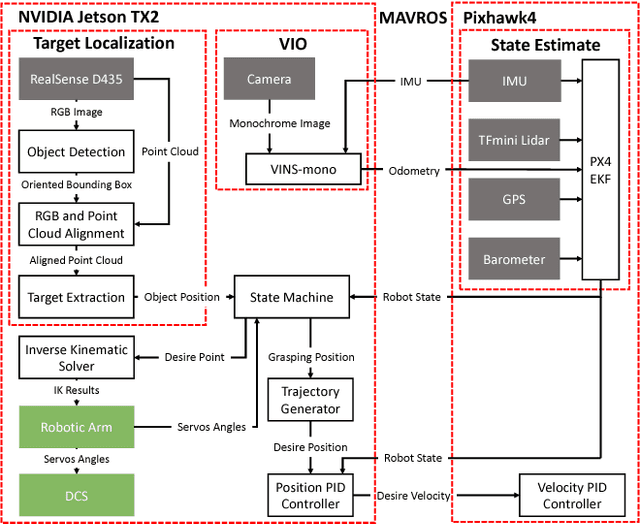

Abstract: Autonomous Unmanned Aerial Manipulators (UAMs) have shown promising potential to transform passive sensing missions into active three-dimensional interactive missions, but difficulties such as target detection and stabilization still impede their wide application. This letter presents a vision-based autonomous UAM with a 3DoF robotic arm for rotational grasping, with compensation for the displacement of the center of gravity. First, the hardware, software architecture, and state estimation methods are detailed. All the mechanical designs are fully provided as open-source hardware for reuse by the community. Then, we analyze the flow distribution generated by the rotors and plan the robotic arm's motion based on this analysis. Next, a novel detection approach called Rotation-SqueezeDet is proposed to enable rotation-aware grasping, which can give the target position and rotation angle in near real-time on a Jetson TX2. Finally, the effectiveness of the proposed scheme is validated in multiple experimental trials, highlighting its applicability to autonomous aerial grasping in GPS-denied environments.

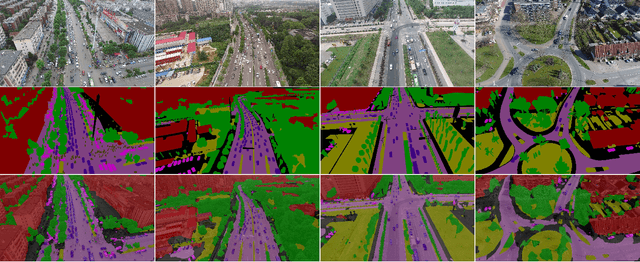

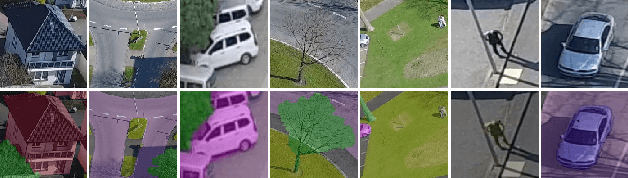

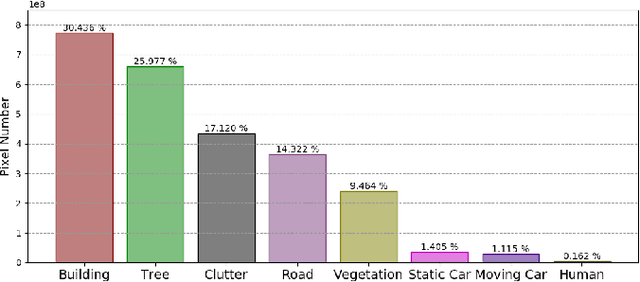

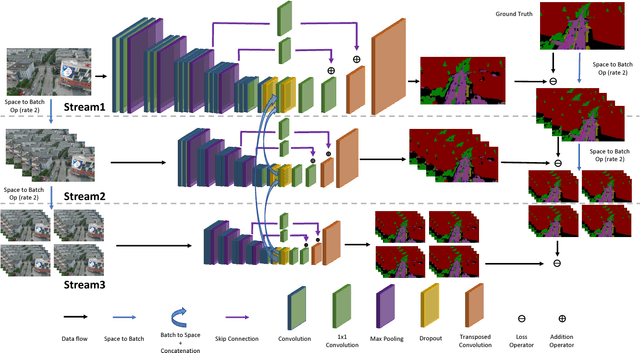

The UAVid Dataset for Video Semantic Segmentation

Oct 24, 2018

Abstract: Video semantic segmentation has recently been one of the research focuses in computer vision. It serves as a perception foundation for many fields such as robotics and autonomous driving. The fast development of semantic segmentation owes much to large-scale datasets, especially for deep learning methods. Several semantic segmentation datasets already exist for complex urban scenes, such as the Cityscapes and CamVid datasets. They have been the standard datasets for comparison among semantic segmentation methods. In this paper, we introduce UAVid, a new high-resolution UAV video semantic segmentation dataset, as a complement. Our UAV dataset consists of 30 video sequences capturing high-resolution images. In total, 300 images have been densely labelled with 8 classes for the urban scene understanding task. Our dataset brings out new challenges. We provide several deep learning baseline methods, among which the proposed novel Multi-Scale-Dilation net performs the best via multi-scale feature extraction. We have also explored the usability of sequence data by leveraging a CRF model in both the spatial and temporal domains.

Multi-feature combined cloud and cloud shadow detection in GaoFen-1 wide field of view imagery

Feb 05, 2017

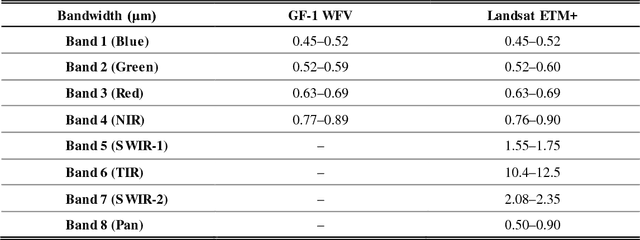

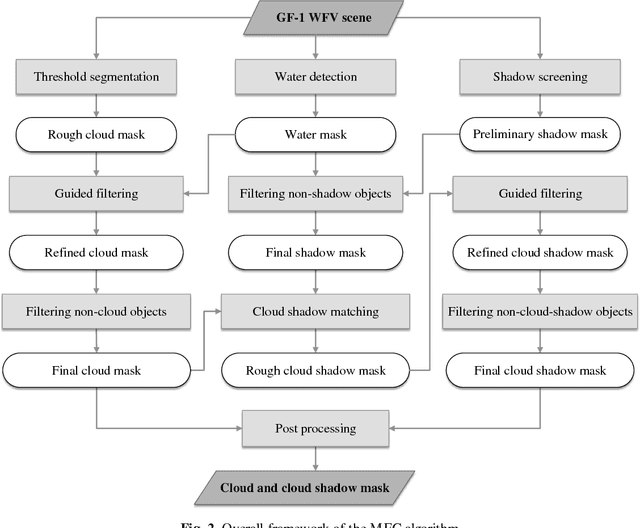

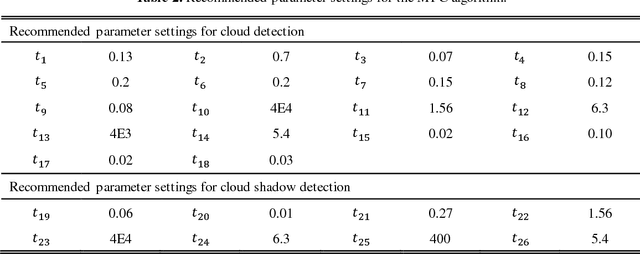

Abstract: The wide field of view (WFV) imaging system onboard the Chinese GaoFen-1 (GF-1) optical satellite has a 16-m resolution and four-day revisit cycle for large-scale Earth observation. The advantages of the high temporal-spatial resolution and the wide field of view make the GF-1 WFV imagery very popular. However, cloud cover is an inevitable problem in GF-1 WFV imagery, which influences its precise application. Accurate cloud and cloud shadow detection in GF-1 WFV imagery is quite difficult because there are only three visible bands and one near-infrared band. In this paper, an automatic multi-feature combined (MFC) method is proposed for cloud and cloud shadow detection in GF-1 WFV imagery. The MFC algorithm first implements threshold segmentation based on the spectral features and mask refinement based on guided filtering to generate a preliminary cloud mask. The geometric features are then used in combination with the texture features to improve the cloud detection results and produce the final cloud mask. Finally, the cloud shadow mask is acquired by means of cloud and shadow matching and a follow-up correction process. The method was validated using 108 globally distributed scenes. The results indicate that MFC performs well under most conditions, and the average overall accuracy of MFC cloud detection is as high as 96.8%. In a comparative analysis with the officially provided cloud fractions, MFC shows a significant improvement in cloud fraction estimation, and achieves a high accuracy for cloud and cloud shadow detection in GF-1 WFV imagery despite the small number of spectral bands. The proposed method could be used as a preprocessing step in the future to monitor land-cover change, and it could also be easily extended to other optical satellite imagery that has a similar spectral setting.

* This manuscript has been accepted for publication in Remote Sensing of Environment, vol. 191, pp.342-358, 2017. (http://www.sciencedirect.com/science/article/pii/S003442571730038X)
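The first MFC step, threshold segmentation on spectral features, can be illustrated with a rough sketch over the four available bands. This is not the MFC algorithm: the brightness and whiteness tests below are a generic heuristic for bright, spectrally flat pixels, and the function name `preliminary_cloud_mask`, the threshold values, and the sample reflectances are all assumptions made for illustration. Guided-filter refinement, geometric and texture features, and shadow matching are omitted.

```python
def preliminary_cloud_mask(blue, green, red, nir,
                           brightness_min=0.3, whiteness_max=0.3):
    """Flag bright, spectrally flat pixels as candidate cloud.

    Inputs are equally sized grids (lists of rows) of reflectance in [0, 1].
    Both thresholds are hypothetical, not values from the MFC paper.
    """
    mask = []
    for rows in zip(blue, green, red, nir):
        mask_row = []
        for b, g, r, n in zip(*rows):
            visible_mean = (b + g + r) / 3.0
            if visible_mean > 0:
                # Relative spread of the visible bands; near 0 for white targets.
                whiteness = (
                    abs(b - visible_mean)
                    + abs(g - visible_mean)
                    + abs(r - visible_mean)
                ) / visible_mean
            else:
                whiteness = float("inf")
            is_cloud = (
                visible_mean > brightness_min
                and n > brightness_min
                and whiteness < whiteness_max
            )
            mask_row.append(is_cloud)
        mask.append(mask_row)
    return mask


# One row of three illustrative pixels: cloud, vegetation, bright bare soil.
blue = [[0.60, 0.04, 0.20]]
green = [[0.60, 0.08, 0.30]]
red = [[0.60, 0.05, 0.45]]
nir = [[0.55, 0.45, 0.50]]

mask = preliminary_cloud_mask(blue, green, red, nir)
```

In this toy input only the first pixel passes both tests. Vegetation fails the brightness test in the visible bands, and bare soil fails the whiteness test because its visible reflectance rises steeply from blue to red.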
